- Ontario Green Energy Policy Blasted By A-G As Costly Folly

- 2012 E&P Spending Plans Call For Another Healthy Increase

- Shale Gas Drilling Faring Better Than Headlines Suggest

- U.S. Wind Industry Confronting Reality Of No Tax Subsidies

- Hurricane Forecasters Give Up Early December Quest

- New Study Says CAFE Standards Will Lead To Larger Cars

Musings From the Oil Patch

December 20, 2011

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Ontario Green Energy Policy Blasted By A-G As Costly Folly

Readers of the Musings may remember that in our last issue we discussed an analysis of a press release issued by the Independent Electricity System Operator (IESO) in Ontario, Canada trumpeting the hundreds of millions of dollars raised by selling surplus power to users outside the province. The analysis was developed by Parker Gallant, a retired banker who grew irritated with his rising power bills. He felt that there was an incongruity between the IESO’s profit claims and his high power bills. After examining the financial records of the IESO, he determined that the $375 million profit was actually a loss for ratepayers of about $420 million. The analysis highlighted how many of the green energy deals undertaken by the Ontario Power Authority in response to the Green Energy and Green Economy Act that was passed into law by the province in May 2009 were “bad” deals for ratepayers. Due to the way the IESO accounts for these green energy deals, however, their true cost to ratepayers has been obscured.

Barely had we published our article when a report was issued by Ontario Auditor-General Jim McCarter blasting Liberal Premier Dalton McGuinty’s administration for its headlong rush to implement a green energy agenda. We learned of the report from a Terence Corcoran column in the Financial Post detailing its conclusions. Mr. Corcoran wrote, “In 2009, the McGuinty government literally bulldozed its own regulatory regimes, charged ahead without cost-benefit analysis or business plans, refused to allow cost-cutting alternatives that would have saved ratepayers at least $8 billion and generally allowed the green-industry lobby to dictate the structure of the province’s electricity sector in its favor. The specifics of the report point not just to bad policy badly implemented, but to a high level of fiscal negligence, abuse of process and disdain for taxpayers and electricity consumers.”

Mr. Corcoran’s column laid out many of the bad energy deals agreed to by the province in the name of green energy. Also interesting was the multitude of reader comments about the column. As one would expect, the comments either were highly complimentary or accused the author of spreading lies and overstating the negative case. After securing a copy of the report, we know that most of the responders had never read the report, or chose to ignore its chapter-and-verse documentation of the criticisms. The critics of the column also latched on to a web site that reported the cost of power in various cities around the country and pointed out that Toronto’s electricity rates, despite the rise in power costs due to renewable energy projects, remained in the middle of the range.

The web site pointed to by critics had survey data collected by Manitoba Hydro showing electricity costs by type of power customer for various cities throughout Canada. The critics pointed to the residential power data as of May 1st of this year, which showed that Toronto was in the middle of the range of all the cities ranked. The data from the table critics cited is contained in Exhibit 1. There are three Ontario cities in the listing with Englehart ranked number two, Toronto at number seven and Kenora at number ten out of a total of 15 cities listed. This table would seem to support the critics’ claim that even after the hefty energy cost increases over the years, these power rates are not outrageous and are well within the range of residential electricity costs across the nation.

Exhibit 1. Residential Electricity Rates In Canada

Source: Ontario Auditor-General Report

The table, however, was only part of a huge spreadsheet of residential electricity data collected. If one reviewed all the data, at the bottom of the spreadsheet was a set of footnotes that amplified the analysis. One footnote is critical to understanding the survey. It points out that the monthly electricity costs and the cents-per-kilowatt-hour rate were determined after application of Ontario’s 10% Green Energy Credit. Ontario is the only province in Canada to have such a credit, which reduces the reported cost of its power. After adjusting Ontario’s power costs for the credit, Englehart moved up to the number one position, Toronto became number four and Kenora was up to eighth place. These are meaningful increases and highlight how the government may be trying to keep the high cost of its green power agenda down by spreading the incremental cost across all the taxpayers in the province. Without adjusting for this credit, the conclusion that Ontario power prices are not excessive is not completely accurate.

Exhibit 2. Rankings And Energy Credit Impact

Source: Ontario Auditor-General Report, PPHB

While Mr. Corcoran used the percentage increase in Ontario’s electricity costs of 65% since 1999, his point was to emphasize that electricity costs are likely to rise by 46% over the next four years. The critics were quick to jump on the percentage price rise and show that it pales in comparison to the rise in crude oil prices – an estimated 500%. The key point, however, wasn’t necessarily the magnitude of the historical increase, but the projected future rate of increase and how the increase compares with the forecast at the time the green agenda was embraced.

At the time of the passage of the Green Energy and Green Economy Act in May 2009, the Ontario Energy Ministry said it would lead to modest incremental increases in electricity bills of about 1% annually due to the addition of 1,500 megawatts (MW) of renewable energy under the Feed-in Tariff program. In November 2010, the Energy Ministry’s Long-Term Energy Plan that included electricity price forecasts projected that a typical residential electricity bill would rise about 7.9% annually for the next five years. The Ministry estimated that 56% of that increase was due to the investment in renewable energy that would increase the supply to 10,700 MW by 2018. That study’s conclusions were supported by another study prepared by the Ontario Energy Board (OEB) that regulates the province’s electricity and natural gas industries. The April 2010 study concluded that a typical household’s annual electricity bill will increase by about 46% between 2009 and 2014. The OEB said that more than half of this increase would be because of renewable energy contracts. Quite clearly the huge commitment to new renewable energy sources is contributing to a sharp rise in typical residential annual electricity bills, which is substantially greater than the Energy Ministry proclaimed when the 2009 mandate was enacted.
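The two official forecasts cited above are consistent with each other, which is easy to check with compounding. The short sketch below is our own arithmetic, not a calculation taken from the Ministry or the OEB; the function name is ours, and we assume the 7.9% and 1% figures are simple annual rates compounded over five years.

```python
def cumulative_increase(annual_rate: float, years: int) -> float:
    """Total fractional increase after compounding annual_rate for `years` years."""
    return (1.0 + annual_rate) ** years - 1.0


# Long-Term Energy Plan (November 2010): about 7.9% a year for five years.
ministry_path = cumulative_increase(0.079, 5)

# Energy Ministry estimate at the time of the 2009 Act: about 1% a year.
# Assumption: the same five-year horizon is used for comparison.
original_claim = cumulative_increase(0.01, 5)

print(f"7.9% a year over 5 years: {ministry_path:.1%}")
print(f"1.0% a year over 5 years: {original_claim:.1%}")
```

Compounding 7.9% a year for five years gives a cumulative rise of roughly 46%, in line with the OEB figure, while the 1% a year suggested in 2009 compounds to only about 5%.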

We will not analyze all the aspects of the renewable energy programs in Ontario audited by the Auditor-General, but suffice it to say that he was highly critical of the lack of analysis performed by the Energy Ministry in implementing the law. Moreover, there was a rush to undertake many projects without any business case being prepared, which led to rapid changes in the program in response to political desires that were implemented without review by other agencies. We will, however, touch on several aspects of renewable energy – its reliability, the need for backup power and the cost of surplus power.

The power generating capacity of a power plant is measured in two ways: the ‘capacity factor’ and the ‘capacity contribution.’ The capacity factor is the ratio of the actual output of a power plant in a given period to the theoretical maximum output of the plant operating at full capacity. The capacity contribution measures the amount of capacity available to generate power at a time of peak electricity demand, which is usually in July and August.

The power-generating capacity of wind and solar technology is much lower than for other energy sources as shown in Exhibit 3. Wind generators operate at a 28% capacity factor but have only 11% availability at peak demand due to lower wind speeds in the summer. On the other hand, solar generators operate at only 13% to 14% capacity factor on average for the year but have 40% availability at peak demand in the summer due to longer periods of sunlight.
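To make the two measures concrete, the sketch below applies the percentages quoted above to a hypothetical 100 MW installation. The 100 MW size, the function names and the use of the 13.5% midpoint for solar are our assumptions for illustration; they do not come from the Auditor-General’s report.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def annual_energy_mwh(nameplate_mw: float, capacity_factor: float) -> float:
    """Energy actually produced in a year: nameplate x capacity factor x hours."""
    return nameplate_mw * capacity_factor * HOURS_PER_YEAR


def peak_capacity_mw(nameplate_mw: float, capacity_contribution: float) -> float:
    """Capacity that can be counted on at the time of peak demand."""
    return nameplate_mw * capacity_contribution


NAMEPLATE_MW = 100.0  # hypothetical plant size, chosen only for illustration

# Wind: 28% capacity factor, 11% capacity contribution at summer peak.
wind_energy = annual_energy_mwh(NAMEPLATE_MW, 0.28)
wind_peak = peak_capacity_mw(NAMEPLATE_MW, 0.11)

# Solar: 13% to 14% capacity factor (midpoint assumed), 40% at summer peak.
solar_energy = annual_energy_mwh(NAMEPLATE_MW, 0.135)
solar_peak = peak_capacity_mw(NAMEPLATE_MW, 0.40)

print(f"Wind:  {wind_energy:,.0f} MWh a year, {wind_peak:.0f} MW at peak")
print(f"Solar: {solar_energy:,.0f} MWh a year, {solar_peak:.0f} MW at peak")
```

On these assumptions the wind plant produces about twice the annual energy of the solar plant, yet the solar plant offers nearly four times as much capacity when demand peaks. That is why the two measures have to be read together.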

The Auditor-General analyzed the performance data from all the wind farms in Ontario in 2010 based on IESO data. While the data showed that wind’s average capacity factor was 28% in the year, it also showed that it fluctuated seasonally from a low of 17% in the summer to 32% in the winter. The data also showed that wind power fluctuated daily, from 0% on summer days to 94% on winter days, which just happens to be the exact opposite of electricity demand. The data demonstrated wind power was often out of phase with demand during the day, as wind output drops around 6 am just as demand is ramping up. Demand remains high during daylight hours while wind output typically drops to its lowest levels then. Around 8 pm, just as electricity demand is dropping, wind output would be increasing. The variability of wind power and its lack of correlation with electricity demand have created operational challenges for IESO, including creating power surpluses and the need for backup power generated by other energy sources.

The Auditor-General commented that the need for backup power supplies has a cost and creates additional environmental issues. The IESO acknowledged that ratepayers have to pay twice for variable renewable power – once for the cost of constructing the renewable power generators and again for the cost of construction of backup generation facilities. The IESO also confirmed that these backup facilities, which can quickly ramp up and down, add to ongoing operational costs, although it has not performed a cost analysis. The only analysis done on backup power by the Energy Ministry was a study done by a consulting firm in 2007 as part of the preparation for the Ministry’s Integrated Power System Plan. The study concluded that 10,000 MW of wind power would require an extra 47% (4,700 MW) of non-wind sources to handle extreme drops in wind. The study was conducted by a firm that also owned a wind farm in Ontario, leading the Auditor-General to question the objectivity of the study.

Exhibit 3. Energy And Performance Ratings

Source: Ontario Auditor-General Report

According to the IESO, the increasing proportion of renewable energy in the Ontario electricity supply mix has exacerbated the challenge called surplus base-load generation (SBG). This is the oversupply of power that occurs when the quantity of electricity from base-load generators is greater than demand for electricity. The IESO informed the Auditor-General that from 2005 to 2007 the province did not have any SBG days. It experienced four such days in 2008, 115 days in 2009 and 55 days in 2010. The increase in SBG days was attributed to several factors including an increase in wind power and a decline in electricity demand.

To deal with SBG, the utility has several options. One is to attempt to store it, although it is difficult due to the seasonal nature of the renewable power and the need for unrealistically large storage capacity. The more acceptable way of dealing with the surplus is to sell it to users outside of the province. The IESO believes that the increase in surplus power is due to increased renewable power and that exports of surplus power have put downward pressure on export prices. To demonstrate this point, the IESO reported that in 2010, 86% of wind power was produced on days when Ontario was already in a net export position.

Exhibit 4. Power Cost Versus Export Price

Source: Ontario Auditor-General Report

The price ratepayers in Ontario pay for electricity and the price Ontario charges its export customers have been moving in opposite directions in recent years. Export prices are set by the dynamics of supply and demand in the electricity market. As a result, export customers have been paying about 3¢/kWh to 4¢/kWh for Ontario power. This exported power costs Ontario ratepayers more than 8¢/kWh to be generated. The Auditor-General estimates, based on an analysis of net exports and pricing data from the IESO, that from 2005 until the end of its audit in 2011, Ontario received $1.8 billion less for its electricity exports than it actually cost ratepayers.
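A rough back-of-the-envelope check helps put the per-kilowatt-hour gap in context. The sketch below is our own arithmetic, not the Auditor-General’s method: it treats 8¢/kWh as the generation cost (the report says “more than”), applies the recent 3¢ to 4¢ export price range to the whole 2005-2011 period, and backs out the net export volume that a $1.8 billion shortfall would imply. Because the price gap was not constant over those years, the result is an order-of-magnitude figure at best.

```python
COST_CENTS_PER_KWH = 8.0                  # floor: the report says "more than 8 cents"
EXPORT_PRICE_RANGE_CENTS = (3.0, 4.0)     # what export customers have been paying
TOTAL_SHORTFALL_DOLLARS = 1.8e9           # Auditor-General estimate, 2005 to 2011


def loss_per_kwh_cents(cost_cents: float, price_cents: float) -> float:
    """Cents lost on each exported kilowatt-hour."""
    return cost_cents - price_cents


def implied_volume_twh(total_dollars: float, loss_cents: float) -> float:
    """Export volume (TWh) that would produce total_dollars at loss_cents per kWh."""
    kwh = total_dollars / (loss_cents / 100.0)
    return kwh / 1e9  # 1 TWh = 1e9 kWh


low_price, high_price = EXPORT_PRICE_RANGE_CENTS
loss_high = loss_per_kwh_cents(COST_CENTS_PER_KWH, low_price)   # 5 cents
loss_low = loss_per_kwh_cents(COST_CENTS_PER_KWH, high_price)   # 4 cents

volume_low = implied_volume_twh(TOTAL_SHORTFALL_DOLLARS, loss_high)
volume_high = implied_volume_twh(TOTAL_SHORTFALL_DOLLARS, loss_low)

print(f"Loss per exported kWh: {loss_low:.0f} to {loss_high:.0f} cents")
print(f"Implied net exports: {volume_low:.0f} to {volume_high:.0f} TWh")
```

On these assumptions, ratepayers lose 4¢ to 5¢ on every kilowatt-hour exported, so it takes only a few tens of terawatt-hours of net exports over the period to produce a shortfall of that size.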

Besides the sharp criticism of the Ontario Energy Ministry and the Ontario Power Authority for its aggressive actions in enacting green energy policies and entering into long-term and expensive green energy deals without any analysis or business case studies, the Auditor-General’s report verified many of the economic problems of renewable power. Many of the points raised about the variability of renewables, their lower power capacity, the fact that they tend to produce power when demand is low and not generate it when needed, plus the costly backup power supplies required are well-known to students of this business. However, unknowing politicians have embraced the “benefits” of renewable power and mandated its role in our mix of energy sources without beginning to understand the costs they are imposing on the ratepayers. This report should be required reading by politicians and regulators before enacting more green legislation.

2012 E&P Spending Plans Call For Another Healthy Increase

The bellwether survey of oil and gas company E&P budgets is conducted annually by Barclays Capital and calls for a healthy increase in 2012. Globally, the oil and gas industry is projected to spend nearly $600 billion in 2012, up 10% from the estimated $544 billion to be spent this year. The survey estimates that North American spending will increase by 8% in 2012, which is composed of a 10% increase in U.S. spending but only a 3% hike in Canadian budgets. Internationally, budgets are projected to rise by 11%. Given the expected double-digit spending increase, Barclays energy analysts are painting a very optimistic picture for the energy business, and especially for the oilfield service companies, next year. We would agree that these budget numbers suggest the positive investment stance is appropriate. Our only caution, based on discussions with various energy company executives, is that the current economic and financial issues dogging the European Union could cause an event that would force companies to re-assess their forecasted spending plans.
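The headline figures above hang together arithmetically, and the regional growth rates also say something about the mix of North American spending. The sketch below is our own calculation from the rounded percentages quoted here, not a figure from the Barclays survey, so the implied U.S. share should be read as approximate.

```python
SPEND_2011_BILLION = 544.0   # estimated global E&P spending this year
GLOBAL_GROWTH = 0.10         # projected increase for 2012

US_GROWTH = 0.10
CANADA_GROWTH = 0.03
NORTH_AMERICA_GROWTH = 0.08

# Projected 2012 global spending implied by the growth rate.
spend_2012_billion = SPEND_2011_BILLION * (1.0 + GLOBAL_GROWTH)

# North American growth is a spending-weighted average of the U.S. and
# Canadian rates: w * US + (1 - w) * Canada = North America. Solve for w.
us_share = (NORTH_AMERICA_GROWTH - CANADA_GROWTH) / (US_GROWTH - CANADA_GROWTH)

print(f"Implied 2012 global spending: ${spend_2012_billion:.1f} billion")
print(f"Implied U.S. share of North American spending: {us_share:.0%}")
```

A 10% rise on $544 billion gives just under $600 billion, matching the survey headline. The blended 8% North American figure implies that the United States accounts for roughly 70% of the region’s spending base, although rounding in the published percentages leaves a wide margin around that estimate.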

In reading the Barclays survey we discovered a number of interesting industry trends that reflect either the continuation of recent trends or may suggest emerging trends that will shape the business environment of the future. One of the first trends the Barclays analysts noted was the growing percentage of oil and gas company budgets being directed toward exploration. They pointed out that in 2010, 32% of their survey respondents planned on increasing the percentage of their E&P budgets dedicated to new exploration. That percentage rose to 38% of respondents in 2011 and increased further to 42% in the most recent survey. This trend is responsible for the wave of recent significant oil and gas discoveries around the globe in places such as East Africa, the Middle East and Latin America and in turn is stimulating other companies to step up their exploration. The key significance of this trend for the oilfield service industry is that new, large discoveries traditionally generate significant development drilling activity as producers want to first assess exactly how large a field is and then work to bring it into production. The importance of development drilling is that once begun this type of drilling is seldom stopped, even should oil and gas prices fall, as a half-developed field is of little value to producers. This phenomenon is especially true for offshore fields. So a spate of significant new discoveries, especially if they are in new geographic regions where there is little exploration history, should not only expand drilling activity but also sustain it despite the volatility in oil and gas prices being experienced.

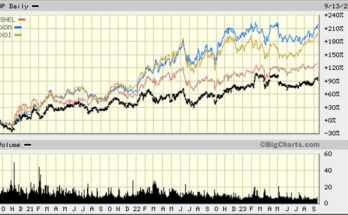

Another observation the Barclays analysts made about the spending plans is that outside of Canada virtually every geographic region of the world is expecting a healthy increase. Some readers may focus on the annual percentage increases or decreases, which ranged between -35% for Other to +42% for the Former Soviet Union and Commonwealth of Independent States (FSU/CIS), when looking at the survey results. We, on the other hand, look more at the absolute dollar increases or decreases projected and try to understand whether the spending changes, and resulting activity changes, are driven by economic and/or political developments, changes in exploration success or failure, or merely offsetting higher oilfield service prices. When increased spending reflects more activity because of improved success or a better environment, rather than merely offsetting rising field costs, the outlook for the energy industry is improving. That trend will not only create a tendency for future increased spending, but it also suggests that the earnings potential for the companies is improving, which in turn should drive stock prices higher.

Exhibit 5. 2012 Spending Up In Every Market

Source: Barclays, PPHB

We found it interesting that the region with the largest dollar increase in projected spending is expected to be Latin America. This increase is driven by the continued ramping up of activity offshore Brazil as Petrobras, the national oil company, moves forward with development of its subsalt discoveries. Brazil also has a very active and growing onshore industry. Equally important for Latin America E&P spending is the planned increase in activity by Pemex, the Mexican state oil company, as it reacts to the need to find and develop new energy supplies to offset the declining production from the Cantarell field. Ecuador is also targeting a large increase in E&P activity as the political problems that have plagued that country are resolved.

Exhibit 6. Some Markets See Much Larger Gains

Source: Barclays, PPHB

Another factor that gives the Barclays analysts confidence about the results of the 2012 spending survey is its track record. Over the past decade, actual spending has been greater than what was projected by the early spending surveys with the exception of 2009 and 2010. The industry cut spending more in 2009 than expected. In 2010, the miss was small, but it is important to understand that some of the spending shortfall was due to the disruption of Gulf of Mexico activity as a result of the Macondo oil spill and the temporary shutting down of all offshore drilling for a period of time. In addition, there was a shift in spending underway for many producers who were moving their emphasis from conventional E&P to unconventional activity. This spending shift has created adjustment periods for many producers as they line up their new activity, which can impact the pace of individual company spending. We caution, however, that the underlying trend driving the over-spending of original survey estimates has been the decade-long climb in crude oil prices and the revolutionary new E&P opportunities spawned by unconventional E&P activity in North America.

Forecasts, including those of Barclays’ commodity analysts, call for still higher oil prices in the coming years. As long as the global economy and its financial structure can support it, energy demand growth should continue at a healthy pace driving the need for additional oil production despite higher commodity prices limiting overall energy demand growth. Should North American natural gas prices recover from their currently depressed levels, producer cash flows will be greater than anticipated and the companies are prone to spend those extra dollars in adding to their reserves and production. This pattern has been borne out in the past. When actual spending is compared to the original projection, only in 2009 when the industry cut spending more than initially anticipated and again in 2010 when spending was slightly less than expected did the industry fall short of initial estimates. Those two years were associated with the 2008-2009 recession and global financial crisis that clipped oil prices by about two-thirds from peak to trough, so the spending shortfalls were not totally unexpected. In 2002, the actual amount spent by the oil and gas industry and the initial spending estimate happened to coincide. Despite current economic and financial stress in the Euro zone, commodity prices are holding up well. That suggests that the bias for oil and gas industry spending should be to the upside.

Exhibit 7. E&P Spending Driven By High Oil Prices

Source: Barclays

Exhibit 8. Budgets Have Been Outspent By Industry

Source: Barclays

Another interesting area that the Barclays survey investigated was producer expectations about oilfield inflation. The oil service companies are facing escalating costs for building new, larger and more sophisticated equipment. In order to be adequately compensated for the higher costs, service companies are aggressively raising prices, at least for those products and services in short supply. Barclays asked its respondents to identify the products and services for which they expected higher prices and those likely to have lower prices in the future. The following two charts (Exhibits 9 and 10) show the results of the survey. The most interesting data point in Exhibit 9 was that nearly 30% of respondents believe that no service costs will be lower a year from now. Of the five product lines expected to show price decreases, seismic is projected to decline by nearly twice the amount of the other four, with nearly a 20% drop. Prices for stimulation and fracturing services, along with drill bits and chemicals and proppants, are expected to decline by only somewhere between 5% and 10%.

Exhibit 9. Some Costs May Show Declines In 2012

Source: Barclays

On the flip side of the equation, about 10% of the respondents expect all oilfield service costs to rise in 2012 but about 5% think there will be no cost increases. When we look at the categories of products and services expected to experience increased costs, we are somewhat puzzled. There is a 50% expectation that completions costs will be heading up. This is in sharp contrast to the expectation that stimulation and fracturing services will see their prices drop. Since these are components of completions, and not insignificant components, it is hard to understand this projection. It must be limited to the cost of downhole completion tools. Likewise, there is the expectation that drilling costs are going up by close to 15%, yet drill bits are expected to decline by 5%. Rig costs are expected to climb by something less than 5%, but that hike should be included in the anticipated increase in drilling costs. The bottom line seems to be that, other than for completions, prices for oilfield products and services are not expected to rise materially in 2012, a good thing for producers’ margins.

Exhibit 10. Limited Cost Increases Next Year

Source: Barclays

In the category of newly emerging trends, we found the responses to the question of naming the key determinant of E&P spending to be interesting. For the first time in over a decade, crude oil prices were cited as the primary reason (54% of respondents, up from 49% in 2011) for the rise. In Exhibit 11, we show a portion of the table from the Barclays report. For 2012 spending plans, after oil prices the next two most important factors were natural gas prices and cash flows, the latter having been judged to be the most important determinant last year. Capital and prospect availability combined with drilling success and costs fill out the line-up of factors, in declining importance.

Exhibit 11. E&P Now Driven By Oil Rather Than Gas

Source: Barclays

The extended table in the Barclays report showed that since 2000, natural gas prices were the most important determinant of E&P spending for 10 of the 13 years. Natural gas prices were tied for the leadership spot in 2000, second in 2005 and third in importance last year. There were two years when neither oil nor natural gas prices were the leading determinants driving E&P spending.

Another favorite question Barclays asks in its annual survey is to list the most important oilfield technology. Since 2000, some 13 different technologies have been listed by various respondents. For the fourth year running, and fifth out of the last six years, Fracturing/Stimulation has been the number one technology. Horizontal drilling has been second during the last four years. Both of these technologies have played important roles throughout the entire 13-year period covered in the latest Barclays survey, ranking either first, second or third. The other technology that was considered the most important during this period was Seismic, which held the top spot from 2000 to 2006 and again in 2008. Seismic has lost its position due to the oil and gas shale revolution. Since 2008, when Seismic just edged out Fracturing/Stimulation by one percentage point for the top spot, its significance has been cut by more than half (22% to 10%) as shale developments have dominated the industry’s drilling focus. The ubiquitous nature of shale deposits has virtually eliminated the need for seismic as an important exploration tool. In the more complicated basins, however, seismic is making a comeback as a tool to help find sweet spots among the blanket shale formations. Micro-seismic, a subset of Seismic, is also becoming much more important in the shale development process as it is being utilized to determine the success of hydraulic fracturing treatments.

Exhibit 12. Gas Shale Revolution Technologies Lead

Source: Barclays

It is evident from the spending survey results that the oil and gas industry feels increasingly confident that commodity prices are not going to crash anytime soon. Therefore, the industry is willing to step up its spending plans in a meaningful way to look for and develop additional oil and gas reserves and boost production. There is a caveat, however, to this assessment: Europe can’t implode and take the rest of the world’s economies into a global recession. If that is avoided, oil and gas prices will hold up and energy demand, especially in developing economies, will necessitate the industry producing more oil and gas. Increasingly, the wind appears to be behind the industry.

Shale Gas Drilling Faring Better Than Headlines Suggest

The latest Environmental Protection Agency (EPA) draft report on possible water well pollution from leaking natural gas wells in Wyoming has created the most recent negative headline for shale gas developments and the natural gas industry. Despite this negative publicity, a recent survey by the Deloitte Center for Energy Solutions found that the majority of Americans think developing natural gas contained in shale formations offers greater rewards than it does risks. The poll results also showed that eight of ten respondents believe that natural gas development will help create jobs and help revive the economy.

The positive view of the natural gas market depicted by the Deloitte survey would appear to be countered by the headline from another recent survey done in Pennsylvania by the Muhlenberg Institute of Public Opinion in collaboration with the Gerald R. Ford School of Public Policy at the University of Michigan. The story reporting on the survey’s results was titled “Polling shows public distrust in debate over natural gas drilling.” The article begins with the following statement: “Pennsylvanians have significant doubts about the credibility of the media, environmental groups and scientists on the issue of natural gas drilling using ‘fracking’ methods, a new poll says.”

As the Marcellus shale formation that underlies much of Pennsylvania remains one of the hottest energy plays in North America, attitudes of citizens there would seem to be very important for the pace of its future development. The first survey finding highlighted in the article was that 84% of respondents strongly agreed that drilling companies should have to disclose the chemicals used in fracturing. This is a policy that is being put in place in many states and by the federal government.

The survey results show that citizens are concerned about what various parties say about the environmental impacts of fracturing. By 44% to 41%, respondents believe the media is overstating rather than understating the environmental impacts, while by 48% to 39% they say that environmental groups are overstating rather than understating the impacts. Scientists fare better: 34% of respondents say they are overstating the impact of fracturing, against 42% who take the opposite view.

The key conclusion from the survey, which is saved for the very end of the article, is that 41% of respondents say that so far fracturing has provided more benefits than problems for Pennsylvania versus 33% who thought the opposite. But tellingly, 50% expect more benefits than problems in the future compared to only 32% who expect more problems.

It is interesting to compare Pennsylvanians’ views about the future for fracturing to those who were surveyed by Deloitte. That survey found that about two in ten respondents (19%) feel the risks of developing shale gas “somewhat” or “far” outweigh the benefits; 58% believe the benefits outweigh the risks; and almost 25% are unsure. These results are remarkably similar to the Muhlenberg survey. The message is that readers can’t rely just on headlines to understand what is actually found in surveys about natural gas development. These survey results also suggest that the public hasn’t been scared nearly as much as thought by the extremism from anti-fossil fuel advocates, such as those who produced the movie Gasland. But rest assured that those advocates will not give up easily or soon.

U.S. Wind Industry Confronting Reality Of No Tax Subsidies

On December 8th, a huge winter storm hit the United Kingdom, in particular Scotland. The storm brought gale force winds and blizzard conditions across the northern portion of the country. One news story reported that while citizens were struggling to deal with the wintry weather, the UK’s lone polar bear was thoroughly enjoying himself. The very strong winds, reportedly clocked as high as 265 kilometers per hour (165 miles per hour), created one spectacular event captured by a local photographer, Stuart McMahon. His picture showed a 300-foot tall, £2 million ($3.1 million) wind turbine in flames. The wind turbine was located in Ardrossan, North Ayrshire, Scotland. Mr. McMahon described the turbine as free-wheeling and throwing off burning material. Fires on the ground started by the burning material were put out by locals before the fire brigade arrived and there were no reported injuries or damage.

Exhibit 13. Scotland Wind Turbine Set Afire By Wind

Source: The Daily Mail

In Coldingham in the Borders, another wind turbine blew down causing the evacuation of several homes and the closure of roads in the area. This turbine was also free-wheeling in 50 mile-per-hour winds. These wind turbine accidents came at a time when most wind farms in Britain were shut down as the wind gusts triggered protection mechanisms according to National Grid PLC (NGG-NYSE), the country’s major utility company. The impact of the forced shutdowns reduced electric output from the nation’s wind farms to 1,343 megawatts (MW) at 5:25 pm in London, compared to a forecast made earlier that day that they would be providing 3,730 MW. A spokesman for National Grid said that there was a 1,500 MW shortfall in wind power generated in Scotland, but because the company’s power forecasting team had anticipated that possibility, the lost power was offset by the use of back-up power sources. This shortfall in wind energy is sufficient to power one million homes.

ScottishPower, which operates 15 wind farms north of the Borders area, said turbines were switched off at “most of them.” A spokesman said that individual turbines have self-protection mechanisms that are activated in sustained storm force winds to prevent damage. They re-activate when the wind speed drops. Officials said that wind power suppliers were not paid compensation – ‘constraint payments’ – to shut down. This happens only when operators have to deactivate turbines because the Grid cannot accommodate the energy they produce.

In an interview with The Daily Mail, a British newspaper, Sir Bernard Ingham, secretary of the pressure group Supporters of Nuclear Energy, said it was ‘ridiculous’ that wind had killed off a wind turbine. He said, “They are no good when the wind doesn’t blow and they are no good when the wind does blow. What on earth is the point of them? They represent the most ridiculous waste of people’s money.”

Sir Bernard’s sentiments seem to be having an impact in the United States. Several things are happening here that will impact the future of renewable fuels and wind projects in particular. NRG Energy (NRG-NYSE) said it was stopping development of an offshore wind project off the coast of Delaware. The company said that the development of a new domestic offshore wind industry was facing “monumental challenges” that were making it impossible to move forward. The company cited its inability to find an investment partner, the lack of a federal loan guarantee and the looming expiration of wind tax incentives as the key reasons behind its decision to abandon the project.

NRG Energy and lead developer of the Mid-Atlantic Wind Park off the coast of Delaware, Bluewater Wind, plan to terminate the project’s 200-MW power purchase agreement (PPA) with the Delmarva Power & Light Company at the end of the year. NRG had acquired Bluewater Wind in November 2009 when the outlook for offshore wind was positive. In describing its rationale for this move, NRG said, “In particular, two aspects of the project critical for success have actually gone backwards: the decisions of Congress to eliminate funding for the Department of Energy’s loan guarantee program applicable to offshore wind, and the failure to extend the Federal Investment and Production Tax Credits for offshore wind which expire at the end of 2012 and which have rendered the Delaware project both unfinanceable and financially untenable for the present.”

The company said its next step would be to close its Bluewater Wind development office but preserve its options by maintaining its development rights and continuing to seek development partners and equity investors. Because there is a debate ongoing in Washington about extending the production tax credits for renewable energy, NRG’s announcement could be seen as a ploy to put pressure on legislators to renew the credits. On the other hand, the inability to gain funding and other investors is not likely to improve dramatically in the next few weeks regardless of what happens with the tax credit since it will only be extended for a couple of years, if at all. The bigger problem is that renewable energy economics are becoming better understood and they don’t stack up against power generated from conventional fuels. Even the public is beginning to realize this fact. We wonder what NRG Energy’s decision means for its Rhode Island offshore wind project.

In one reader’s comment in response to The Daily Mail article about the UK winter storm and the burning wind turbine, he pointed out that while an electric industry executive interviewed discussed the excellent record of specific wind turbines, the industry has a record of over 100 turbines having caught fire in the past decade. One of the wind turbines was cited as the cause of a fire in Australia that burned out a national park. As the reader said, the fire service can’t do anything about turbine fires because they can’t get too close due to debris being thrown off, they can’t use water to put out the fire because of the electricity and they might not even be able to reach a fire on the top of a turbine tower.

Wind power is confronting another challenge coming from the increasing deaths of birds and bats. The American Bird Conservancy (ABC) petitioned the U.S. Department of the Interior to develop regulations that it says will safeguard wildlife and reward responsible wind energy development. They submitted a 100-page petition that urges the U.S. Fish and Wildlife Service to issue regulations establishing a mandatory permitting system for the operation of wind energy projects and mitigation of their impacts on migratory birds. The wind industry is opposed. The industry and government agreed to a voluntary program in 2003 when there were only a limited number of wind turbines. The ABC has totaled 60,000 turbines and meteorological towers either in service or planned as of the end of 2010, so they see bird deaths as an escalating factor.

The government estimates that a minimum of 440,000 birds currently are killed each year by collisions with wind turbines. ABC estimates that without a clear, legally enforceable regulation, the expansion of wind power in the U.S. will likely result in the deaths of more than one million birds each year by 2020. The ABC petition is designed to provide safeguards for more than just the bird species covered by the Migratory Bird Treaty Act. It would require the government to consider the impact of wind farms on all bird species, as well as bats and other wildlife.

We discovered that there is a compilation of wind turbine accidents, which may not be totally complete because of the lack of official reporting requirements worldwide. As the number of installed wind turbines has increased, not surprisingly so has the number of accidents. There was an average of 16 accidents per year from 1995-1999 inclusive, 48 accidents per year from 2000-2004 inclusive and 106 accidents per year from 2005-2010 inclusive.

Exhibit 14. Wind Turbine Accidents Rise Over Time

Source: Caithness Windfarm Information Forum, www.caithnesswindfarms.co.uk

It is interesting to note that there are 1,093 accidents in this database. As a result of the accidents, there were 88 fatalities with 63 deaths directly related to wind workers and 25 public fatalities, workers not directly dependent on the wind industry. There were also 90 accidents in which humans were injured. In some cases these public injuries and fatalities are due to events such as ice thrown by wind turbine blades that can reach as far as two miles away.

The database also contains information about environmental damage including bird deaths. There are 97 cases reported, the majority since 2007, which probably reflects changes in legislation or reporting requirements. About 40% of the accidents involve confirmed deaths of protected bird species, but known deaths are much higher. A review of reports from studies of individual wind farms in the United States, Spain, Germany, Australia and elsewhere shows clearly that the number of bird deaths is much greater. One critic of the Conservancy estimate of the number of bird deaths from wind turbines without a federal standard for wind turbine siting threw out huge numbers of birds supposedly killed by flying into building windows, flying into power lines, being hunted, attacked by cats or for other reasons. His point was that the number of birds killed by wind turbines was a small number, so it was no big deal. The problem is that the bird species being killed tend to be larger and migratory. The increase in deaths is due to the placement of wind turbines and the expansion of wind farms that change wind patterns for the traveling birds. To criticize certain power sources because of the dangers they supposedly create but then to excuse a ‘favored’ power source because its harmful impact isn’t too great seems to be not only self-serving but arrogant.

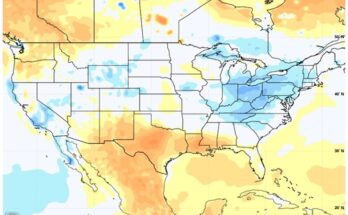

Hurricane Forecasters Give Up Early December Quest

Some climate change blogs have made a point of highlighting that two long-time and well-regarded hurricane forecasters have given up their quest to accurately predict the number of named storms, hurricanes, major hurricanes, etc. some nine months before the start of the tropical storm season. Dr. Philip Klotzbach and Dr. William Gray of the Department of Atmospheric Science at Colorado State University have been making available an early forecast, usually issued on December 1st, for the upcoming storm season for the past 20 years. Over the years they have made modifications to their forecasting model to account for improved scientific data and better hindcast modeling using different weightings of climate data series. After critically reviewing the performance of their models against actual results, the scientists have concluded that their models, at this time of the year, do not provide better results than climatology.

The authors provided a chart showing their forecasts over the past 20 years compared to the actual storm season outcomes. As shown, the correlation between their forecast and actual results was an abysmal 0.04. With that forecasting record, it was time for the forecasters to ditch that model. Since they have yet to develop a better model (with greater hindcast accuracy), they say they will continue to work on improving the model. They will rely on their later predictive models to help them in their quest.

Exhibit 15. Why They Gave Up On Forecast Model

Source: Klotzbach and Gray

One of the critical variables for forecasting tropical storm activity 9-12 months ahead is being able to project the development of the El Niño-Southern Oscillation (ENSO). El Niño is very influential in determining the number, intensity and path of tropical storms in the Atlantic Basin during hurricane season. Since the professors have been unable to perfect El Niño forecasting, they have ditched their quantitative model and instead will only issue qualitative assessments for upcoming storm seasons.

Drs. Klotzbach and Gray believe that the active storm era, which began in 1995 for the Atlantic basin tropical cyclones, will continue in the upcoming storm season. They believe that typical conditions associated with a positive Atlantic Multi-Decadal Oscillation (AMO) and strong thermohaline circulation (THC) will continue, also. Based on various models and qualitative assessment of current conditions in the tropical Pacific, they believe the odds of an El Niño forming this year are somewhat greater than they have been during the past couple of years. In addition, tropical Atlantic sea surface temperatures tend to cool following La Niña events such as we are currently experiencing. This development needs to be closely monitored. As a result, the forecasters believe the 2012 Atlantic basin hurricane season will be primarily determined by the strength of the AMO/THC and by the state of ENSO.

To provide the public with some assessment of what the 2012 hurricane season might be like, the forecasters have developed four possible scenarios with probabilities attached to each scenario. These scenarios are:

- THC becomes unusually strong in 2012 and no El Niño event occurs (resulting in a seasonal average net tropical cyclone (NTC) activity of ~ 180). 15% chance.

- THC continues in the above-average condition it has been in since 1995 and no El Niño develops (NTC ~ 140). 45% chance.

- THC continues in above-average condition it has been in since 1995 with the development of a significant El Niño (NTC ~ 75). 30% chance.

- THC becomes weaker and there is the development of a significant El Niño (NTC ~ 40). 10% chance.

These four scenarios produce the following possible storm activity forecasts.

- 180 NTC – 14-17 named storms, 9-11 hurricanes and 4-5 major hurricanes.

- 140 NTC – 12-15 named storms, 7-9 hurricanes and 3-4 major hurricanes.

- 75 NTC – 8-11 named storms, 3-5 hurricanes and 1-2 major hurricanes.

- 40 NTC – 5-7 named storms, 2-3 hurricanes and 0-1 major hurricanes.
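The four scenarios can be combined into a single probability-weighted figure. The short Python sketch below is our own illustration, not a number published by the forecasters: it checks that the stated probabilities sum to 100%, computes a probability-weighted average NTC, and totals the odds of the El Niño scenarios. The variable and function names are hypothetical.

```python
# Scenario table taken from the text: (NTC, chance in percent, El Nino develops?)
# Integer percentages are used to avoid floating-point rounding in the sums.
SCENARIOS = [
    (180, 15, False),  # unusually strong THC, no El Nino
    (140, 45, False),  # above-average THC, no El Nino
    (75, 30, True),    # above-average THC, significant El Nino
    (40, 10, True),    # weaker THC, significant El Nino
]


def total_chance(scenarios):
    """Sum of the scenario probabilities, in percent."""
    return sum(chance for _, chance, _ in scenarios)


def weighted_ntc(scenarios):
    """Probability-weighted average NTC across all scenarios."""
    return sum(ntc * chance for ntc, chance, _ in scenarios) / total_chance(scenarios)


def el_nino_chance(scenarios):
    """Combined chance, in percent, of the scenarios that include El Nino."""
    return sum(chance for _, chance, el_nino in scenarios if el_nino)


print(total_chance(SCENARIOS))    # 100
print(weighted_ntc(SCENARIOS))    # 116.5
print(el_nino_chance(SCENARIOS))  # 40
```

On these assumptions the weighted NTC of 116.5 falls between the two middle scenarios, and the combined 40% chance for the El Niño scenarios is consistent with the forecasters' "moderate" range of 30-50%.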

One of the adjustments the forecasters have made is to switch their climatology measure from the 1950-2000 mean to the 1981-2010 median. Their primary reason for making the switch was to take into account the increased number of weaker storms observed during the latter part of the 20th century and the early part of the 21st century. This trend they believe is due to vastly improved observational capabilities that identify more weak tropical cyclones. This phenomenon was evident recently when the National Oceanic and Atmospheric Administration (NOAA) upped its count of named storms in 2011 from 18 to 19, almost immediately after it issued the season-ending count.

The impact of this climatology switch is that the normal number of named storms increases from 9.6 to 12.0 and the hurricane count goes from 5.9 to 6.5 but the number of major hurricanes declines from 2.3 to 2.0. As expected due to these changes in storm counts, there is a corresponding increase and decrease in the number of storm days associated with each category.

In assessing the qualitative probability for the upcoming tropical storm season, the forecasters ask several questions about the development of climate events that can shape the season’s storm activity. First is whether El Niño will re-develop for the 2012 hurricane season. To answer this question they examine years since 1950 with similar September-October Multivariate ENSO Index (MEI) values to 2011, which is currently 0.5-1.5 standard deviations below normal. Of the 14 years with similar MEI values, five, or 36%, experienced El Niño conditions the following season. In addition, they point out, we have now gone since 2009 without an El Niño. Typically, warm ENSO events (El Niño) occur about every 3-7 years.

The second question posed by the forecasters is: What is the likelihood of a multi-winter La Niña event such as was observed during the winters of 2010/2011 occurring for the winters of 2011/2012? Based on observations from past seasons with multi-winter La Niña events, there appears to be about a 50% chance of El Niño conditions developing in the next season. The conclusion of the forecasters is that the active storm era for the Atlantic basin will continue and if El Niño does not develop, an active tropical storm season is likely. But it appears there is a “moderate” chance (30-50%) that El Niño will develop. They anticipate the El Niño issue will be better defined by the time of the April 2012 forecast.

In assessing the probabilities of the number of named storms, hurricanes and major hurricanes, the forecasters found that the median El Niño year in an active THC phase is comparable to a non-El Niño year in an inactive THC phase. It is the answers to their two posed questions and the observations surrounding those possible answers that lead Drs. Klotzbach and Gray to suggest the four potential outcomes and the probabilities of each scenario. One point the two forecasters were adamant about is that abandoning their forecasting model is not related to claims about increased global warming impacting the frequency or intensity of hurricanes. In fact, the switch to the more recent data as the measure of climatology suggests that storm intensity has weakened in recent years rather than strengthened. We will be anxiously awaiting the professors’ April forecast, but in the meantime, we should expect next year’s hurricane season to be active, so prepare accordingly.

New Study Says CAFE Standards Will Lead To Larger Cars

A new study by the University of Michigan suggests that auto manufacturers could meet tougher fuel economy standards simply by increasing the size of vehicles they sell. In 2007 when the Corporate Average Fuel Economy (CAFE) standards were revised, a “footprint-based” formula for calculating mileage targets was adopted. According to Wikipedia, “Starting in 2011 the CAFE standards are newly expressed as mathematical functions depending on vehicle "footprint", a measure of vehicle size determined by multiplying the vehicle’s wheelbase by its average track width. CAFE footprint requirements are set up such that a vehicle with a bigger footprint has a lower fuel economy requirement than a vehicle with a smaller footprint. For example, the 2012 Honda Fit with a footprint of 40 sq ft (3.7 m2) must achieve fuel economy (as measured for CAFE) of 36 miles per US gallon (6.5 l/100 km), equivalent to a published fuel economy of 27 miles per US gallon (8.7 l/100 km), while a Ford F-150 with its footprint of 65–75 sq ft (6.0–7.0 m2) must achieve CAFE fuel economy of 22 miles per US gallon (11 l/100 km), i.e., 17 miles per US gallon (14 l/100 km) published.” This method of calculating fuel-efficiency targets places greater demands on smaller cars than on larger, less-efficient vehicles.
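For readers who want to check the units in the quoted passage, the following Python sketch shows how a footprint is computed from wheelbase and average track width, and how miles per US gallon convert to liters per 100 kilometers. The function names are our own, and the 96-inch by 60-inch dimensions are round numbers chosen for illustration, not the actual specifications of any vehicle.

```python
SQ_IN_PER_SQ_FT = 144.0
# Liters per 100 km for a vehicle rated at 1 mile per US gallon:
# 3.785411784 L per gallon / 1.609344 km per mile * 100
L_PER_100KM_AT_1_MPG = 235.215


def footprint_sq_ft(wheelbase_in, avg_track_width_in):
    """Footprint = wheelbase x average track width (inches in, square feet out)."""
    return wheelbase_in * avg_track_width_in / SQ_IN_PER_SQ_FT


def mpg_to_l_per_100km(mpg):
    """Convert miles per US gallon to liters per 100 kilometers."""
    return L_PER_100KM_AT_1_MPG / mpg


# Illustrative (hypothetical) dimensions giving a 40 sq ft footprint.
print(footprint_sq_ft(96, 60))              # 40.0

# The four mileage figures quoted in the passage.
for mpg in (36, 27, 22, 17):
    print(mpg, round(mpg_to_l_per_100km(mpg), 1))
```

The converted values round to the 6.5, 8.7, 11 and 14 l/100 km figures given in the quotation.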

The Michigan researchers believe this approach could eventually lead to bigger vehicles on the road rather than increases in fuel economy. "It’s cheaper to make large vehicles, and meeting fuel-economy standards costs [manufacturers] money in implementing and looking at what consumers will purchase," one of the researchers told Automotive News. They are calling for the National Highway Traffic Safety Administration to overhaul this formula.

Much has been made of the agreement between auto manufacturers and the Obama administration to adopt the 54.5 mile-per-gallon (mpg) CAFE standard beginning in 2025. At the time this new standard was being agreed to, we wrote about what was behind it. The agreement was structured using a weighted formula for determining auto company compliance with the new standards. Electric cars and hybrid vehicles will receive a greater weighting in calculating fleet fuel-efficiency. Here is how it works and why we will wind up with larger, less fuel-efficient vehicles.

An electric car will be rated as having achieved 96 mpg and will be counted twice in the calculation. Hybrid vehicles with high fuel-efficiency ratings will be counted one and a half times. For ease of calculation, consider a two-car fleet of one electric car and one light truck rated at 25 mpg: it will have a CAFE rating of 49.3 mpg, when in reality it will average 39.7 mpg. How, you ask? The fleet rating is a harmonic mean: the number of vehicles divided by the sum of the inverses of their fuel ratings. Our actual fleet of one electric car and one truck averages 2/((1/96)+(1/25)) = 39.7 mpg. For purposes of the CAFE standard, the fleet gets credit for a second electric car, so the calculation becomes 3/((1/96)+(1/96)+(1/25)) = 49.3 mpg. The net impact is that while auto manufacturers will be able to say they met the standard, the actual fuel-efficiency rating of the fleet will be below the standard.
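The fleet arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch of the weighted calculation as described in this article, not the regulatory formula itself; the function name and the (mpg, weight) input layout are our own assumptions.

```python
def fleet_mpg(vehicles):
    """Weighted harmonic-mean fuel economy for a fleet.

    `vehicles` is a list of (mpg, weight) pairs, where weight is the
    number of times the vehicle is counted in the calculation.
    """
    total_weight = sum(weight for _, weight in vehicles)
    weighted_fuel_use = sum(weight / mpg for mpg, weight in vehicles)
    return total_weight / weighted_fuel_use


# Actual fleet: one electric car (96 mpg) and one light truck (25 mpg).
actual = fleet_mpg([(96, 1), (25, 1)])

# CAFE credit: the electric car is counted twice.
credited = fleet_mpg([(96, 2), (25, 1)])

print(round(actual, 1))    # 39.7
print(round(credited, 1))  # 49.3
```

Changing the electric car's weight from 2 to 1.5 in the same function shows the effect of the smaller credit the article describes for hybrids, although a hybrid's own mpg rating would differ from the 96 mpg used here.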

From the Obama administration’s viewpoint, they have put in place a system that will encourage auto companies to build electric and hybrid vehicles in order to be able to make and sell larger (more profitable) vehicles. This agreement is an example of how politicians, regulators and maybe even company managers can create a mirage to meet popular demand. The problem is this mirage is a fiction, created through the use of smoke and mirrors. In this case, the new CAFE standard is merely another regulatory charade designed to overcome the lousy economics of electric vehicles.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.