- Canadian Oil Forecast Highlights Challenges Facing Industry

- Battle Over Industrial-Size Wind Turbines In Rhode Island

- Global Warming Proponents Jump On Recent Tornadoes

- The Forces Eating Away At Gasoline Demand

- Alternatives Issue May Reflect Energy Executive Confusion

- Self-Driving Cars May Be Years Away From Hitting The Road

Musings From the Oil Patch

June 11, 2013

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Canadian Oil Forecast Highlights Challenges Facing Industry

Last week the Canadian Association of Petroleum Producers (CAPP) released its 2013 crude oil, markets and transportation forecast. This year’s CAPP forecast raises its previous projection of oil output in 2030 by 500,000 barrels a day. The new CAPP forecast is 800,000 barrels a day higher than the most recent forecast from the International Energy Agency (IEA). Of the additional oil in the CAPP forecast, just 200,000 barrels a day is projected to come from higher oil sands output, while the other 300,000 barrels a day should come from conventional oil resources. The new forecast suggests that there are good days ahead for the Canadian petroleum industry, both producers and oilfield service companies. One of the important aspects of the forecast is its implications for the future prospects of Canada’s various oil producing provinces. The report also highlights the need for Canada to seek new consumers for its growing oil output beyond North America’s shores.

The CAPP oil output forecast comes at an interesting time for Canada and its petroleum industry. Two significant export pipeline projects designed to move more conventional oil from Canada and increased bitumen production from Alberta are struggling to win approval: TransCanada’s (TRP-NYSE) Keystone XL pipeline from the United States government, and Enbridge’s (ENB-NYSE) Northern Gateway pipeline from British Columbia. This year’s CAPP forecast marks the second consecutive year the organization has increased its estimate of Canada’s future oil output by a significant amount. These higher Canadian output projections are consistent with virtually every other forecast of future production volumes for major oil producing countries made by government and private analysts during the past several years. These forecasts acknowledge the impact of oilfield technology on the petroleum industry’s ability to tap difficult-to-produce shale and tight oil resources globally.

To demonstrate the significance of the increases in the two most recent CAPP forecasts, we present the output projection charts from the 2012 and 2013 reports that show the sources of Canada’s future oil supply. It is not surprising that in both forecasts oil sands output forms the backbone of Canada’s oil supply. Looking at the output mix in 2030, the 2013 forecast puts total output at about 6.7 million barrels a day, compared with 6.2 million barrels a day in the 2012 forecast.

Exhibit 1. CAPP 2013 Oil Output Forecast

Source: CAPP

Exhibit 2. CAPP 2012 Oil Output Forecast

Source: CAPP

The CAPP forecasts also show the split between conventional oil output and the bitumen produced from the oil sands. As the charts in Exhibits 1 and 2 highlight, each crude oil forecast contains separate projections for light conventional oil, heavy conventional oil, pentanes, offshore oil (Eastern Canada) and oil sands output. The oil sands output is further segmented into production from existing mining and in situ operations and those under construction, plus a projection of the volumes to come from future development projects not currently underway.

When we compare the 2012 and 2013 forecasts across all these categories, we find some interesting shifts in the outlook. Our analysis is shown in Exhibit 3. The first interesting observation is that the 2013 forecast shows a higher level of Eastern oil, which is Canada’s offshore oil production, at each measurement point between 2015 and 2025. By 2030, however, the two forecasts arrive at the same output estimate. It is also interesting to note that, within the West oil forecast, the 2013 forecast projects the volume of conventional crude oil to climb throughout the entire forecast period, while the 2012 forecast projected a decline. The 2013 oil sands forecast projects a slightly lower volume in 2015 than the prior year’s forecast but a higher volume in all subsequent forecast years. Another observation is that oil sands output growth accelerates from 2015 to 2025, but then slows by 2030.

Exhibit 3. CAPP Forecasts Compared

Source: CAPP, PPHB

When we focus specifically on the Western Canadian oil production forecast, the chart in Exhibit 4 shows that conventional light oil output is projected to increase until 2020 and then decline. Heavy conventional oil output is projected to expand throughout the entire forecast period. Within the oil sands category, bitumen obtained from mining operations grows throughout the entire forecast period, but bitumen produced from in situ operations grows faster.

Exhibit 4. In Situ Oil Sands Output Grows Most

Source: CAPP

Another interesting aspect of the CAPP forecast is what it projects for output from the various oil and gas producing provinces in Canada. The chart in Exhibit 5 shows CAPP’s provincial output forecast. Oil production in British Columbia and the Northwest Territories has declined steadily since 2000, and that decline is projected to continue. Both Manitoba and Saskatchewan increased their output starting in 2006 but are projected to show flat to slightly lower output in the future. While Alberta was slower than the other provinces to embrace tight and shale oil drilling, its oil output from both conventional oil plays and oil sands bitumen has accelerated in recent years and is projected to keep growing throughout the forecast period. Alberta, which has been the dominant source of Canada’s oil and gas output for many years, is projected to remain the king of oil supply.

Exhibit 5. Alberta Remains King Of Canada Oil

Source: CAPP

Given the increased production forecast, the challenge for Canada’s oil industry will be how best to get the additional supply to market. A key consideration is where the oil will flow – south to the U.S. or exported to Asia. As mentioned previously, approvals for the Keystone XL and Northern Gateway pipelines remain in doubt. Exhibit 6 shows all the existing and proposed pipelines in North America.

Exhibit 6. North American Oil Pipeline Network

Source: CAPP

The dilemma for the oil industry is that existing pipeline capacity is nearly full, limiting the ability to boost production. The lack of export options is partly to blame for Canadian oil selling at a discount to both Brent and West Texas Intermediate, the U.S. benchmark crude. As shown in Exhibit 7, if Canada’s oil output grows as projected, by 2014 there will be no surplus pipeline capacity to move additional oil production.

Exhibit 7. Pipeline Capacity Tightening

Source: CAPP

The solution to the tight pipeline capacity situation is the increased use of rail transportation. Exhibit 8 shows the volume of oil exported by rail and how it has grown during the past two years.

Exhibit 8. Oil Volume Shipped By Rail Exploding

Source: CAPP

If additional oil output moves by rail, the CN Railroad (CNI-NYSE) will clearly be the beneficiary. The railroad has a substantial network allowing it to move increased oil production from western Canada to refineries both in Canada and the United States.

Exhibit 9. Scope Of CN Rail Network

Source: CAPP

For CN to move more oil, additional oil loading facilities must be built. The CAPP report shows where existing and potential oil loading facilities are located, including which sites could support unit train loading facilities. At present, six terminal projects are underway that will be operational in the second half of 2013 or in 2014. Together, these terminals will be able to load nearly 300,000 barrels a day.
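To put nearly 300,000 barrels a day in railcar terms, the short Python sketch below converts daily loading capacity into tank cars and unit trains. The car load (about 650 barrels of crude per tank car) and train length (100 cars per unit train) are our illustrative assumptions, not figures from the CAPP report; actual loads vary with car type and crude density.

```python
from typing import Tuple

# ASSUMPTIONS (illustrative, not from CAPP): ~650 barrels per tank car, 100 cars per unit train.
BARRELS_PER_TANK_CAR = 650
CARS_PER_UNIT_TRAIN = 100


def rail_requirements(barrels_per_day: float) -> Tuple[float, float]:
    """Return (tank cars per day, unit trains per day) needed to move the given volume."""
    cars = barrels_per_day / BARRELS_PER_TANK_CAR
    trains = cars / CARS_PER_UNIT_TRAIN
    return cars, trains


if __name__ == "__main__":
    cars, trains = rail_requirements(300_000)  # terminal capacity cited by CAPP
    print(f"{cars:.0f} tank cars/day, about {trains:.1f} unit trains/day")
```

Under these assumptions the new terminals would load roughly 460 tank cars, or four to five unit trains, every day.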

Exhibit 10. Railcar Loading Facilities

Source: CAPP

The CAPP 2013 oil forecast points to Canada being able to boost its conventional and oil sands output by meaningful increments over the next 18 years, much like forecasts project significant U.S. production growth. Unfortunately, as U.S. domestic oil production grows, Canadian output is at risk of being squeezed out of that market. If Canada decides it wants, and is able, to tap into other international oil markets, the U.S. could ultimately be the big loser.

Battle Over Industrial-Size Wind Turbines In Rhode Island

The small, bucolic, quasi-agricultural/beach community of Charlestown, Rhode Island, where our summer home is located, is involved in a battle over the installation of two wind turbines. The proposal is highly controversial and faces intense scrutiny, largely because of the people behind it and their ties to members of the town council, in addition to concerns over how these industrial-size wind turbines may alter the character of the community. Charlestown is the bright red area in Exhibit 11, with the rest of the shaded area representing Washington County, also known as South County. According to the Census Bureau, the town covers 59.3 square miles, of which 36.8 square miles (62%) is land and 22.5 square miles (38%) is water. The 2010 census counted 7,827 people living in Charlestown in roughly 4,800 homes. The town is also the headquarters of the Narragansett Indian Tribe, and many residents are concerned that the tribe may want to build a casino on its property or adjacent land.

Exhibit 11. Charlestown Location

Source: Wikipedia

The island south of Charlestown in Exhibit 11 is Block Island, the location of an approved experimental five-turbine wind farm designed to prove the feasibility of a future 130-turbine farm. The approval of Deepwater Wind’s experimental wind farm was highly controversial, and the project still hasn’t met all the requirements needed to begin construction. Besides lacking funding, the project has yet to negotiate a landing point in Narragansett for the power cable that would bring ashore the wind power not used by Block Island residents. The premium price for this wind energy under the state’s renewable fuels mandate will be paid by every electricity customer in the state, whether or not they are customers of National Grid plc (NGG-NYSE), the primary power provider in Rhode Island. Because of that premium price, the state legislature rewrote the rules under which the state’s public utilities commission (PUC) had to evaluate the contract. The rules were rewritten after the PUC initially rejected the power purchase agreement.

While the Deepwater Wind project hopes to become the first offshore wind project in the nation, a handful of wind turbines are scattered throughout the state as people have moved to capitalize on the Rhode Island renewable fuels mandate. Whalerock Renewable Energy, LLC was formed to build two wind turbines on land held in trust by the family behind Whalerock. (The proposed site is located roughly where the letter “t” in Post Road appears on the map in Exhibit 12.) As in many small towns with a town council form of government, there is often political intrigue in development projects, and this project is no different. We won’t detail all the machinations of the town’s wind ordinance and this project’s application. Initially, the town council directed Charlestown’s town planner to write a zoning ordinance amendment for wind, which gives the town council sole responsibility for approving an application. With the ordinance in place, the developer proposed a partnership agreement with the town. The terms of the partnership included: a 20-year life; the developer’s choice of turbines between 1.5 and 2.5 megawatts; taxes frozen for 20 years at the present assessed value of the land plus the turbines; waiver of all building permit and other fees; and a payment to the town of 2% of the developer’s payments from National Grid, up to a limit of $50,000 that is not adjusted for inflation.
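The payment term in the proposed partnership reduces to a simple capped percentage, and the lack of an inflation adjustment means the cap shrinks in real terms. The Python sketch below illustrates both; the steady 2% annual inflation rate is a hypothetical assumption of ours, and the helper names are illustrative only.

```python
# Town's share under the proposed partnership terms: 2% of the developer's
# National Grid payments, capped at $50,000 with no inflation adjustment.
SHARE = 0.02
CAP = 50_000.0


def town_payment(national_grid_payments: float) -> float:
    """2% of the developer's National Grid payments, never above the cap."""
    return min(SHARE * national_grid_payments, CAP)


def real_value(nominal: float, inflation: float, years: int) -> float:
    """Deflate a fixed nominal amount to today's dollars after `years` of inflation."""
    return nominal / (1 + inflation) ** years


if __name__ == "__main__":
    print(f"Cap binds once payments exceed ${CAP / SHARE:,.0f}")
    # HYPOTHETICAL: 2% annual inflation over the 20-year life of the agreement
    print(f"Year-20 real value of the cap: ${real_value(CAP, 0.02, 20):,.0f}")
```

On these assumptions, the cap binds once the developer’s National Grid payments exceed $2.5 million, and by year 20 the $50,000 limit would be worth only about $33,600 in today’s dollars.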

Exhibit 12. Location Of Charlestown Wind Turbines

Source: Windows Explorer

With the partnership agreement signed, the applicant proposed two 410-foot turbines. Hearings were scheduled, and the town planner said the application was complete, but the building inspector said otherwise. The town council voted to exempt the developer from the Site Plan Review and Special Use Permit approval process. Following the November 2010 election, which changed three of the five members of the town council, the developer withdrew the partnership agreement and sought approval from the town’s zoning board instead. Immediately thereafter, the town council authorized a four-month moratorium on new wind turbine developments. Shortly thereafter, the building inspector said the application was incomplete. About 45 days later (January 2011), the zoning board ruled the application complete, reversing the building inspector’s ruling. Shortly afterwards, the neighbors of the proposed wind turbines and the town council appealed the zoning board ruling to the courts. Six months later, the court sent the case back to the zoning board to clarify its January ruling, which allowed the application to move forward under an ordinance that was later repealed.

A few months later, the developer sued the town council and the planning commission, demanding that the judge void a section of the town’s zoning ordinances which allows the planning commission to review special use permit applications for large wind energy systems. Five months later, in April, a Superior Court judge ruled that the zoning board can review the application without an advisory ruling from the planning commission and that the town council cannot appeal. A few weeks ago, the zoning board held a public hearing on the application. We are now awaiting the next chapter in this legal morass. The key issue in the application approval process is that the zoning board must find that the project “will not result in adverse impacts or create conditions that will be inimical to the public health.” Given the proximity of the proposed wind turbines to neighborhoods, this may be a challenge. Exhibit 13 shows the footprint of the turbines (yellow triangles) with radii of 1,000, 1,500 and 2,000 feet. The radii are based on a turbine slightly taller than the one proposed (492 feet vs. the proposed 410 feet), but close enough for analytical purposes.

Exhibit 13. How Wind Turbines Will Impact Neighbors

Source: tripadvisor.com

To show how tall these proposed wind turbines are, Exhibit 14 shows a 410-foot turbine compared with another prominent turbine located near the Providence airport and the supports of the Claiborne Pell (Newport) Bridge.

Exhibit 14. Charlestown Wind Turbines Will Be Huge

Source: Charlestown Citizens

To help put the size of these wind turbines in perspective, we include a picture of the Newport Bridge taken from Jamestown Island, across from Newport Harbor, which can be seen under the bridge in the background. The height of the bridge was dictated by the requirement that U.S. Navy aircraft carriers, stationed at Newport until the late 1960s, be able to pass beneath it.

Exhibit 15. Bridge Supports = Turbine Height

Source: Wikimedia.org

Because the zoning board needs a positive determination to approve the wind turbines, questions are being asked about their location and their possible impact on neighbors’ health from noise and flicker effects. Several weeks before the Charlestown public hearing, there was an open meeting for citizens with Dr. Harold Vincent, a University of Rhode Island Associate Research Professor, who addressed the issue of infrasonic sound created by large wind turbines and how it may affect humans. He is a faculty member of the Department of Ocean Engineering at URI’s Graduate School of Oceanography and a member of the Acoustical Society of America. Dr. Vincent is working with the state’s Office of Energy Resources to create siting guidelines for wind turbines.

Both proponents and opponents of wind turbines acknowledge that they generate noise. The noise generated by wind turbines is unusual, containing high levels of very low frequency sound (infrasound, which lies below the range of human hearing). The larger the wind turbine, the higher the level of infrasound. To date there have been no systematic long-term studies of the effects of prolonged exposure to such sounds on humans or other animals. The infrasound generated by large wind turbines is unrelated to the loudness of the sound heard, and it can only be measured with sound level meters capable of detecting it.

Proponents of land-based wind turbines say that the audible sound level generated by large wind turbines is low. They claim that at about 1,000 feet away, the sound of a wind turbine generating electricity is about the same level as the noise from a flowing stream. Based on this measurement, the turbines would appear to be quiet. Dr. Vincent’s research shows that the problem lies with the very significant infrasound component of the sound generated. His research has shown that the outer hair cells of the cochlea are stimulated by low frequency sounds. The cochlea, the auditory portion of the inner ear, receives sound in the form of vibrations that cause the stereocilia (hair-like structures) to move. The stereocilia convert the vibrations into nerve impulses that are sent to the brain for interpretation. The outer hair cells of the cochlea are stimulated by low frequency sounds at levels much lower than those needed to stimulate the inner hair cells. The research shows that infrasound cannot be heard but does influence inner ear function. What is certain is that there is a biological response to the low frequency sounds of large wind turbines.
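The “flowing stream” comparison concerns audible sound. Environmental noise readings are typically A-weighted (dBA), and the A-weighting curve standardized in IEC 61672 strongly discounts low frequencies, which is one reason a turbine can register as quiet while still emitting substantial infrasound. As an illustration of our own, not drawn from Dr. Vincent’s work, the Python sketch below evaluates the standard analytic A-weighting formula at several frequencies; it says nothing about health effects.

```python
import math


def a_weighting_db(f_hz: float) -> float:
    """A-weighting gain in dB at frequency f_hz (IEC 61672 analytic form, ~0 dB at 1 kHz)."""
    f2 = f_hz ** 2
    ra = (12194.0 ** 2 * f2 ** 2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    # the +2.00 dB offset normalizes the curve to 0 dB at 1 kHz
    return 20.0 * math.log10(ra) + 2.00


if __name__ == "__main__":
    # infrasound (below ~20 Hz) versus mid-range frequencies
    for f in (5, 10, 20, 100, 1000):
        print(f"{f:>5} Hz: {a_weighting_db(f):7.1f} dB")
```

At 10 Hz the curve subtracts roughly 70 dB, so even a strong infrasonic component barely moves a dBA reading. Measuring infrasound requires unweighted or infrasound-specific (G-weighted) readings from instruments whose response extends that low.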

The pathway of conscious hearing is well studied and established. It goes from the inner hair cells of the cochlea, through type I auditory nerve fibers, to the brain. The outer hair cells of the ear (the ones sensitive to infrasound) do not connect to this conscious pathway. They connect to type II nerves, then to granule cells that are connected to areas of the brain related to attention and wakefulness. Neighbors of large wind turbines often complain of sleep deprivation that can lead to high blood pressure, memory problems and a cascade of other health problems.

Proponents of placing wind turbines adjacent to populated areas point to a Massachusetts Department of Environmental Protection study on the health effects of wind turbines. While the report acknowledges sleep disturbances for neighbors of wind turbines, it also says that the relationship is not fully understood or sufficiently explained to ban them from neighborhoods. Recently, wind turbine placement has become an issue in Australia, where research shows that infrasound emissions resonate inside homes to the point that residents sometimes resort to sleeping outside rather than in their bedrooms. A new study, designed to meet the objectivity test, concludes that “enough evidence and hypotheses have been given herein to classify low frequency noise and infrasound as a serious issue, possibly affecting the future of the (wind) industry.” This study was conducted by four different firms of acousticians, two of which had worked for the wind industry and two of which never had. Additionally, in Denmark, the number one country for wind power, Professor Henrik Moeller, the country’s leading acoustician, has, despite the risk to his career, criticized his government for manipulating the data to allow the siting of wind turbines close to homes.

Wind energy remains the fastest growing renewable energy source due to its “benign” impact on the environment and humans and the ease of developing wind farms. Besides, the fuel, wind, is free. The wind energy business has been under intense attack over its economics, largely due to its intermittent nature, an issue we will visit in the next Musings, but wind is now beginning to be attacked over health concerns. Will the combination of attacks slow wind energy’s growth? What happens in Charlestown may be a precursor to watch.

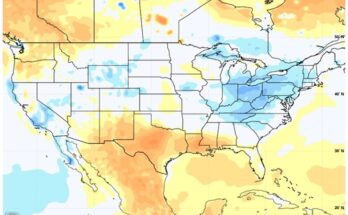

Global Warming Proponents Jump On Recent Tornadoes

Within the past few weeks we witnessed a super-strong tornado decimate the Oklahoma town of Moore, only to be followed a week or so later with the widest tornado in history hitting suburban areas near Oklahoma City. As expected, climate change proponents, who believe that the planet is undergoing significant warming due to increasing concentrations of carbon dioxide in the atmosphere, were quick to claim that the global warming phenomenon was the cause of more powerful tornadoes. Sarene Marshall, managing director for The Nature Conservancy’s Global Climate Change Team, said in an interview following the Moore tornado, “The earth is warming. Carbon emissions are increasing. And they both are connected to the increasing intensity and severity of storms that we both are witnessing today, and are going to see more of in the coming decades.”

Politicians, including Senators Barbara Boxer (D-CA) and Sheldon Whitehouse (D-RI), were quick to support The Nature Conservancy’s position. Senator Boxer used her position as chair of the Senate Environment and Public Works Committee to link global warming with increased tornado activity, while Senator Whitehouse used a speech on the floor of the Senate to make his case. Embarrassingly, Senator Whitehouse delivered his speech, berating Republican Senators who have expressed doubt about the link between carbon emissions, global warming and increased weather events, at virtually the same moment tornadoes were destroying parts of Oklahoma. He subsequently removed the speech from his official web site.

More knowledgeable storm forecasters spoke out, dismissing the linkage of global warming and increased tornado activity. Greg Carbin, the warning coordination meteorologist at the National Oceanic and Atmospheric Administration’s (NOAA) Storm Prediction Center in Norman, Oklahoma, challenged the heat and storm linkage. Mr. Carbin was formerly the lead forecaster for NOAA’s Storm Prediction Center and has served on the peer review committee for the evaluation of scientific papers submitted for publication in journals such as the National Weather Digest and Weather and Forecasting. Mr. Carbin said, “We know we have a warming going on. There really is no scientific consensus or connection [between global warming and tornadic activity]….Jumping from a large-scale event like global warming to relatively small-scale events like tornadoes is a huge leap across a variety of scales.”

With the exception of the three years 2004, 2008 and 2011, the trend in the total number of tornadoes in the U.S. has been down since the 1970s. That trend can be observed by examining the chart in Exhibit 16. On the face of it, the downward trend in the total number of tornadoes each year since the early 1970s, despite the steady rise in the amount of carbon dioxide in the atmosphere, suggests there is little or no correlation.

Exhibit 16. Total Tornadoes In U.S. In Decline


Source: NOAA

What is even more significant is the downward trend in the number of violent tornadoes as determined by the Fujita-Pearson Scale. The scale has seven classifications, F0 to F6. Each classification has a range of wind speeds and a general description of the amount and types of damage experienced. An F0 tornado has wind speeds of 40-72 miles per hour, while an F6 has wind speeds of 319-379 miles per hour. The tornado ranking scale was developed by Professor Fujita of the University of Chicago and Dr. Allen Pearson, the director of the National Severe Storm Forecast Center. When we examine the number of violent tornadoes, those with a classification of F3 and above, the downward trend since the 1950s and 1960s is clear, with the exception of some individual years that spike higher. The point again is that during this modern era of rising carbon emissions, there is no correlation with the number of violent tornadoes.
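The scale’s classification logic amounts to a wind-speed lookup. The article gives only the F0 and F6 ranges; the intermediate bands in the Python sketch below (F1 73-112, F2 113-157, F3 158-206, F4 207-260, F5 261-318 mph) are the standard published Fujita ranges, and the F3-and-above cutoff in `is_violent` follows this article’s usage. The function names are hypothetical.

```python
from typing import Optional

# (class, low mph, high mph) for the Fujita scale; F6 is a theoretical top category.
FUJITA_BANDS = [
    (0, 40, 72),
    (1, 73, 112),
    (2, 113, 157),
    (3, 158, 206),
    (4, 207, 260),
    (5, 261, 318),
    (6, 319, 379),
]


def fujita_class(wind_mph: float) -> Optional[int]:
    """Return the F-scale class for an estimated wind speed, or None outside 40-379 mph."""
    for f_class, low, high in FUJITA_BANDS:
        # each band runs up to (but not including) the next band's lower bound
        if low <= wind_mph < high + 1:
            return f_class
    return None


def is_violent(wind_mph: float) -> bool:
    """'Violent' as used in this article: F3 and above."""
    f_class = fujita_class(wind_mph)
    return f_class is not None and f_class >= 3


if __name__ == "__main__":
    for speed in (50, 160, 300):
        print(speed, "mph ->", f"F{fujita_class(speed)}", "violent" if is_violent(speed) else "")
```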

Exhibit 17. Violent Tornadoes In Decline Since 1960s


Source: NOAA

Another interesting point is that between 1991 and 2010 there were tornadoes recorded in every one of the Lower 48 states. However, there is a concentration of tornadoes in the central part of the United States extending from Texas to Minnesota and from Colorado to Illinois, an area referred to by storm forecasters as ‘Tornado Alley.’ In terms of the number of storms, Texas ranked first followed by Kansas and Oklahoma.

Exhibit 18. Tornadoes In Every State

Source: NOAA

It is unfortunate that global warming proponents seize upon natural disasters, such as tornadoes and hurricanes, as being caused by the increase in carbon dioxide in the atmosphere, since no linkage has been established between a warming period and an increased frequency and intensity of these types of storms. We have been surprised at how many global warming proponents have criticized some of their fellow proponents because of the lack of scientific data linking tornadoes to the planet’s warming. This criticism has failed to stop the claims, however, which suggests how desperate the global warming movement is for a stage to proclaim its fears of a global apocalypse unless the world stops using fossil fuels.

The Forces Eating Away At Gasoline Demand

For decades the petroleum industry enjoyed steady growth in its primary product, gasoline. America was engaged in a ‘love affair’ with the automobile: gasoline was cheap and readily available, and people were moving from cities and farms to the suburbs, where jobs were increasing and the lifestyle was better. As a result of this demographic shift, families began buying multiple cars, or even a first car, and miles driven increased. The net result was that the number of vehicle miles traveled (VMT) in the United States rose steadily until late 2007 and the onset of the financial crisis. From that point forward, VMT fell until bottoming out in late 2009. Since then total vehicle miles driven have bounced up and down slightly.

Exhibit 19. Total Vehicle Miles Up On Population Growth

Source: St. Louis Federal Reserve Bank

The relative stability of total miles driven reflects population growth. The impact of population on total vehicle miles is shown by examining the data on a per capita basis. Using that measure, driving peaked in 2004, well before the start of the financial crisis and subsequent recession. Per capita miles driven have fallen every year since the 2004 peak for a total decline over the eight-year time period of 7.5%. Per capita miles driven declined by 0.4% last year. At 9,363 miles per capita in 2012, the average driver is driving about the same amount as a driver in 1996! When we look at vehicle miles traveled in total, we find that it increased last year by 0.3% due to population growth. Over the eight-year period 2004-2012, total vehicle miles driven declined in three of the years while rising in five years, producing an overall decline of 0.9% for the period.
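These per capita figures can be cross-checked with a few lines of arithmetic. The Python sketch below uses only numbers quoted in this paragraph and assumes that the 7.5% per capita decline and the 0.9% total decline both span the same 2004-2012 period. Because total miles equal per capita miles times population, the two declines together imply how fast the population grew.

```python
# Figures quoted in the text
PER_CAPITA_2012 = 9_363        # miles per capita in 2012
PER_CAPITA_DECLINE = 0.075     # total per capita decline, 2004-2012
TOTAL_VMT_DECLINE = 0.009      # total VMT decline, 2004-2012
YEARS = 8

# Back out the 2004 peak and the compound annual rate of change
implied_2004 = PER_CAPITA_2012 / (1 - PER_CAPITA_DECLINE)
annual_rate = (1 - PER_CAPITA_DECLINE) ** (1 / YEARS) - 1
# total VMT = per capita VMT x population, so the ratio of the two changes gives population growth
implied_pop_growth = (1 - TOTAL_VMT_DECLINE) / (1 - PER_CAPITA_DECLINE) - 1

print(f"Implied 2004 peak: {implied_2004:,.0f} miles per capita")
print(f"Average annual change: {annual_rate:.2%}")
print(f"Implied population growth 2004-2012: {implied_pop_growth:.1%}")
```

The result, an implied 2004 peak of about 10,122 miles per capita, an average decline of roughly 1% a year and implied population growth of about 7% over eight years, shows how population growth kept total miles nearly flat even as each person drove less.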

Exhibit 20. People Are Driving Less

Source: State Smart Transportation Initiative

It is also interesting to examine the trend in gasoline consumption. It peaked in September 2007, shortly before the onset of the financial crisis, at 9.3 million barrels a day. By February 2013, gasoline demand had fallen by 6.5% to 8.7 million barrels a day. The petroleum industry has recognized that U.S. gasoline demand has probably peaked due to the higher Corporate Average Fuel Economy (CAFE) standards, which fine auto manufacturers whose new vehicle fleets fail to average 27.2 miles per gallon. A recent Wall Street Journal article focused on the disparate trends between drivers on the East Coast and those in other parts of the country.

Exhibit 21. East Coasters Buying Less Gasoline

Source: The Wall Street Journal

The article found that gasoline demand stayed flat in states along the Gulf Coast and in the Midwest but declined by 10% on the East Coast since September 2007. The East Coast accounted for over one-third of U.S. demand at the peak but has accounted for half of the nationwide decline in gasoline consumption since then. The explanation given is that East Coasters buy more hybrid vehicles and fewer trucks.
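A quick consistency check on these regional figures: if the East Coast held about one-third of the 9.3 million barrels a day of peak demand and its consumption fell 10%, its decline alone should make up roughly half of the national drop to 8.7 million barrels a day. The Python sketch below treats “over one-third” as exactly one-third (a lower bound) and treats the regional and national periods as comparable, both simplifications on our part.

```python
# Figures quoted in the text, in million barrels a day
PEAK_US = 9.3                 # national gasoline demand, September 2007
FEB_2013_US = 8.7             # national gasoline demand, February 2013
EAST_SHARE_AT_PEAK = 1 / 3    # "over one-third"; one-third used as a lower bound
EAST_DECLINE = 0.10           # East Coast decline since September 2007

national_drop = PEAK_US - FEB_2013_US
national_pct = national_drop / PEAK_US
east_drop = EAST_DECLINE * EAST_SHARE_AT_PEAK * PEAK_US
east_share_of_drop = east_drop / national_drop

print(f"National decline: {national_drop:.1f} mb/d ({national_pct:.1%})")
print(f"East Coast decline: {east_drop:.2f} mb/d, {east_share_of_drop:.0%} of the national drop")
```

The East Coast decline works out to about 0.31 million barrels a day, or roughly 52% of the 0.6 million barrel national decline, consistent with the article’s “half.”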

Excluding luxury cars and fleets, hybrids accounted for 4.3% of new vehicle registrations in Boston, New York City, Philadelphia, Baltimore and Washington, D.C., and that share has been rising over the past three years. The hybrid share in those cities, representative of the East Coast, exceeded the 3.8% hybrid share of new vehicle registrations in the rest of the country. Equally impressive, half-ton pickup trucks account for only 3.7% of vehicle sales in those five East Coast cities, compared with 9.7% in the rest of the country.

The WSJ found that East Coast drivers were not only buying more fuel-efficient vehicles but also driving less. East Coast drivers drove 4.2% fewer miles in the 12 months ended March 2013 than in the 12 months ended September 2007. In the rest of the United States, vehicle miles driven were down only 0.5%.

Besides buying more efficient vehicles and driving less, we suspect several other trends are at work. One is the condition of the various state economies, as reflected by their unemployment rates. In April 2013, when the national unemployment rate was 7.5%, five East Coast and Mid-Atlantic states plus the District of Columbia had higher unemployment rates, and several of them are heavily populated. They and their unemployment rates are: Rhode Island (8.8%); New Jersey (8.7%); District of Columbia (8.5%); Connecticut (8.0%); New York (7.9%); and Pennsylvania (7.6%). With the exception of Massachusetts, with a 6.4% unemployment rate, the remaining six states with unemployment rates below the national rate are relatively less populous. These six states are: Delaware (7.2%); Maine (6.9%); Maryland (6.5%); New Hampshire (5.5%); Virginia (5.2%); and Vermont (4.0%).

Another consideration is that the populous East Coast states offer their citizens more transportation alternatives, allowing them to limit the amount of driving they must do. Coupled with high population and transportation alternatives is the growing trend toward internet shopping. According to Forrester Research, e-commerce is expected to capture 8% of total retail sales in 2013. Forrester points out that Amazon.com (AMZN-Nasdaq) accounts for one-quarter of all online purchases. This internet behemoth generates $600,000 in sales per full-time employee, roughly three times the retail average. An article in The Atlantic examined employment trends in the retail industry, including the ‘Wal-Mart Effect,’ in which Wal-Mart’s low prices tend to drive down prices at neighboring stores. The author points out that economic studies have shown each Wal-Mart Stores (WMT-NYSE) employee replaces 1.4 retail workers at competing stores. At the same time, Wal-Mart has reduced its total workforce by 20,000 since 2008, despite opening 455 new stores.

As the article pointed out when commenting on the growth of e-commerce in the retail industry, “Twenty years ago, the shoppers went to the stores. Today, the stores go to the shoppers.” As floor salespeople are being replaced by informative web sites and cashiers are being replaced by automatic checkout machines, the number of retail trade employees is shrinking. The author summed up these new trends: “the daily tasks once performed by store employees are either being taken over by machines or outsourced to customers.”

It is highly unlikely that any of these trends will be reversed soon. We know that CAFE standards are being raised and that internet selling continues to gain market share. CAFE standards have been reworked in recent years so that they are based on the size of a car’s footprint. From 2013 through 2016, the standard for small cars rises steadily from 37 miles per gallon (mpg) to 41 mpg, while larger cars must go from 28.5 mpg to 31 mpg over the same period. The standards agreed to by auto manufacturers and the Obama administration will raise the overall fleet target to 54.5 mpg by 2025, with small cars reaching 61 mpg and large cars 46 mpg. Unless gasoline prices decline significantly, with strong prospects of staying down, new car buyers will tend toward more fuel-efficient vehicles.

With lower gasoline sales projected for the future, the biggest losers will be European refiners, since Europe fuels most of its vehicles with diesel and ships its surplus gasoline to America. Lower U.S. gasoline consumption has already cut European gasoline exports to America to a trailing 12-month average of less than 400,000 barrels a day, down from more than 730,000 barrels a day in the summer of 2006. The decline in per capita vehicle miles driven and in gasoline consumption, combined with higher future CAFE standards, will cause further changes in the petroleum market.

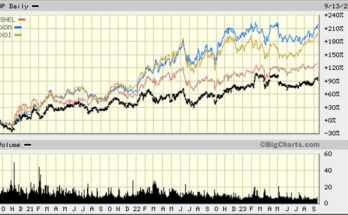

Alternatives Issue May Reflect Energy Executive Confusion (Top)

Recently, the KPMG Global Energy Institute released the results of its 11th annual survey of more than 100 senior executives in the U.S. who represent global energy companies. The good news, appropriately highlighted by KPMG, was that 62% of respondents believe the United States can attain energy independence by 2030, up from 52% in last year’s survey. Of those executives, nearly one-quarter (23%) think energy independence could be reached as early as 2020. That optimism is driven by the huge success of shale oil and gas development in the U.S., although we suspect many respondents understand that reaching energy independence assumes little if any demand growth in future years. That would be consistent with the new normal of 2%-2.5% growth for the U.S. economy, well below the nation’s historical gross domestic product (GDP) growth of about 3.2% per year. The share of respondents who believe the U.S. will never attain energy independence fell by 10 percentage points between 2012 and 2013.

Consistent with a slowly growing economy, 73% of executives believe natural gas prices will remain between $3.01 and $4.00 per thousand cubic feet for the balance of 2013. Rather than asking where executives believed oil prices would trade in 2013, the survey asked where oil prices would peak. This year, 39% of surveyed executives believe Brent crude oil prices will peak at $116-$125 a barrel. In last year’s survey, a plurality of respondents (33%) felt the peak would be $121-$130. Possibly the more interesting data point was that 1% of this year’s respondents put the peak oil price at $156+ a barrel, while last year the same share put it at $181+. The peak price range favored this year was selected by only 9% of respondents last year. The conclusion is that expectations for oil prices have moderated this year. The question is whether the moderation reflects weak economic activity limiting oil demand growth or a realization that there is potentially much more crude oil available in the world than was perceived last year.

Natural gas received a significant amount of attention in the survey. Some 62% of respondents believe an emphasis on natural gas will lead to a resurgence in U.S. manufacturing activity and economic growth. At the same time, 36% believe the Northeast will be the region most likely to benefit from the emphasis on natural gas, while 22% suggest the beneficiary will be the Midwest. Natural gas was listed by 79% of respondents as the answer to developing environmentally friendly technologies, up only one percentage point from last year. The rest of the list of environmentally friendly fuels, and how each changed between the 2012 and 2013 surveys, provides some interesting perspectives. Nuclear was the second most favored fuel at 39%, down from 46% last year. Solar was at 33% (35% in 2012), while clean coal was favored by 32% (35%). Wind drew only 20% of respondents, down from 27% in 2012, while biomass rose from 13% in 2012 to 18% this year. Hydropower experienced a drop similar to wind’s, falling from 19% to 12%. The conclusion seems to be that alternative fuels are losing their attractiveness as the solution to developing environmentally friendly energy technologies.

The survey showed that 95% of respondents expect R&D investment in alternative energy projects this year. When asked which alternative energy sources companies will target during the next three years, the favored target was shale oil and gas at 54%, followed by solar at 29%, wind energy at 25%, biofuels at 19% and clean coal at 17%. The interesting question is: What are alternative energies? Shale oil and gas are unconventional energy sources, but they are still fossil fuels, not alternatives to fossil fuels. It seems energy executives don’t make this distinction. Is it because they don’t understand it, or were they merely confused by the question? At the same time, it was interesting that 46% of those surveyed said natural gas for transportation represents a significant opportunity for the industry.

The final point of interest was a question about the trends that would drive company alliances and merger and acquisition activity in the energy industry. Multiple responses were allowed, so the percentages total more than 100%, but the combination of cost pressures and competitive pressures was selected by 84%, up from 64% last year. The petroleum industry is in the midst of a significant restructuring phase. The poor financial performance of oil and gas companies during the American shale revolution, together with the prospect of low future natural gas prices and possibly flat to lower crude oil prices, means companies must reduce costs and lower their debt burdens in order for earnings to grow. Without earnings growth and higher share prices, the assets of oil and gas producers will continue moving from small and mid-size companies to the large, integrated U.S.- and foreign-based oil and gas companies. That transition may be necessary before the shale revolution demonstrates consistent profitability.

Self-Driving Cars May Be Years Away From Hitting The Road (Top)

We recently wrote about the Google (GOOG-Nasdaq) car and how its technology is being used to develop a self-driving car. In concept, the driver becomes a passenger who only needs to be able to take control of the vehicle in an emergency, and the car relies on a number of existing and new technologies to improve the safety and efficiency of today’s vehicles. While sensors that can measure the activity of a vehicle and its surrounding environment are well developed, the data processing and the electronics needed to interpret that information and translate it into actions are not.

Google has been lobbying Nevada to be the first state to allow self-driving cars to be legally operated on public roads. So far, Google’s robot fleet has traveled over 240,000 kilometers (149,100 miles) with minimal human involvement and only one incident, in which a test car was rear-ended by a human-driven vehicle. The fleet of six Priuses and one Audi is equipped with off-the-shelf components: two forward-looking video cameras, a 360-degree laser range finder, four radar sensors and advanced global positioning system (GPS) units.

Exhibit 22. How The Google Car Drives Itself

Source: Google

Many people fail to grasp that Google’s cars are not completely autonomous, even when no human is helping drive them. For the vehicle to function, the route must first be driven by a human in one of the test cars and mapped using its array of sensors. The mapping data is then stored in a Google data center, and a portion of the data is loaded onto the car’s hard drive. The locations of stoplights, school zones and anything else that is reasonably static are marked so the car will acknowledge them without having to interpret them in real time. There are two main components to Google’s efforts: reliability, which means having the car do what we expect it to do over and over again; and robustness, which means dealing with unusual situations and still being safe.

John Leonard, a professor of mechanical and ocean engineering at the Massachusetts Institute of Technology who led a team in the Defense Advanced Research Projects Agency (DARPA) challenge and focuses on Simultaneous Localization and Mapping (SLAM) technology, the holy grail of autonomous driving, said, “A key challenge even today is dealing with those unexpected moments.” In Mr. Leonard’s view, these elements of unreliability stand in the way of self-driving cars having a place in our future. He cited the example of the No Hands Across America team that drove from Washington, D.C. to San Diego in an autonomous vehicle. The team made it 98.2% of the way without human intervention; the remaining 1.8% represented the kind of unexpected events that limit acceptance of self-driving technology. In his words, “To try to achieve that 100% level of performance there’s a need to develop common-sense reasoning – one of those elusive goals of artificial intelligence – that no amount of pre-mapping is going to prepare you for.”

It is this challenge that prompted the National Highway Traffic Safety Administration (NHTSA) to issue a policy statement concerning automated vehicles. While setting out the rationale for promoting self-driving vehicles (improving highway safety, increasing environmental benefits, expanding mobility, and creating new economic opportunities for jobs and investment), the NHTSA policy statement focuses on the related safety issues.

The primary focus of the agency’s safety programs is to reduce or mitigate motor vehicle crashes and their attendant deaths and injuries. The agency sees three distinct but related streams of technological change and development. These streams include: 1) in-vehicle crash avoidance systems that provide warnings and/or limited automated control of safety functions; 2) vehicle-to-vehicle communications that support various crash avoidance applications; and 3) self-driving vehicles. The NHTSA believes it is helpful to think of these emerging technologies as a continuum of vehicle control automation. To further define where the technology stands and how the agency might help it along, the agency defined five levels of vehicle automation. Those levels are: Level 0, no automation; Level 1, function-specific automation; Level 2, combined function automation; Level 3, limited self-driving automation; and Level 4, full self-driving automation.

NHTSA states that it has been conducting research on vehicle automation for many years, and this research has already led to regulatory and other policy developments. It points to its work on electronic stability control (ESC), which led the agency to issue a standard that has made this Level 1 technology mandatory on all new light vehicles since May 2011. The agency is also working on automatic braking technologies (dynamic brake support and crash imminent braking), which are likewise Level 1 technologies. Within the next year, the agency will determine whether either or both of these automatic braking technologies should be considered for rulemaking or for inclusion within the safety program.

The policy statement says that the agency has either already begun or is planning research on Level 2 through Level 4 automation. The research will focus on human factors, with a goal of developing requirements for the driver-vehicle interface so that drivers can safely transition between automated and non-automated vehicle operation; this work will focus largely on Level 2 and 3 systems. In the area of electronic control system safety, the agency has initiated research on vehicle cyber security. Another research focus will be developing system performance requirements for Level 2 through Level 4 systems. NHTSA states that it expects to complete the first phase of this research within the next four years. The agency said this research has not been separately funded, and its full implementation will depend on using available research funds unless additional funding is granted under the Obama administration’s budget request.

NHTSA has set forth a number of recommendations for licensing drivers to operate self-driving vehicles, even just for testing. It also has recommendations for state regulations governing the testing of self-driving vehicles. In summary, NHTSA does not recommend that states authorize the operation of self-driving vehicles for purposes other than testing at this time. The agency believes a number of technological and human performance issues must be addressed before self-driving vehicles can be made widely available, and it will take years of research to fully evaluate them. In its view, the sophistication and demonstrated safety capability of self-driving vehicles are not yet sufficient to allow the technology to be made available to the general public. As these technologies improve and are road-tested, NHTSA will reconsider its policy position. In the meantime, while NHTSA is positive about the long-term outlook for self-driving technology, without additional funding the research needed to accelerate the introduction of this technology into cars will take years to evolve and test. Don’t hold your breath for a self-driving car.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.