- Transforming Energy Future Will Take More Than A Policy

- Tracking The Natural Gas Injection Season And Storage Build

- New Google Driverless Car To Revolutionize Auto Business

- EPA GHG Rules And Coal Plants: More Talk Than Action?

- Correction:

Musings From the Oil Patch

June 10, 2014

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Transforming Energy Future Will Take More Than A Policy

The role of energy in the history of the world has been marked by long cycles with each succeeding fuel possessing more energy per unit of mass than the fuel it was displacing. This characteristic led to the new fuel being cheaper and more efficient, enabling advances in its contribution to the growth of the economy and the improvement in people’s lifestyle. Each transformation takes decades and is rarely understood as it happens until the new fuel has gained roughly half the energy market. In some cases it has taken 50 years of overlap before the new fuel reaches that 50% share.

For thousands of years, energy was measured by the power of humans. Mankind then moved to harness the power of animals that they domesticated. Fire was discovered to be an effective weapon of protection from wild animals and eventually for cooking food, but it was much later that fire was used to create steam to power mechanical devices. Humans found that wind could be captured and turned into energy for tasks such as lifting water in wells. Controlling rivers and streams afforded humans early opportunities to harness the power of flowing water to power machines to grind grains and cut wood. Earlier, man began burning biomass (wood and peat) for protection and warmth and eventually to heat water to produce steam to drive mechanical devices. Steam power helped displace the animals that had been relied upon for centuries to provide power, and which are still relied upon in many countries around the world for power. From wood and peat, humans switched to burning coal, which contained a greater amount of energy. From coal, humans transitioned to burning oil and natural gas that had even greater energy content per unit. Possibly more important, the oil age created the potential for energy to power mobility. Although nuclear power’s raw material is dangerous after its transformation, it too assumed a role in the nation’s and world’s energy mix.

As our energy transition has advanced, the use of fuels with more concentrated energy content has also resulted in the release of greater amounts of carbon into the atmosphere. Many people believe it has been the growth of carbon emissions in our atmosphere that has contributed to global warming, which certain scientists have projected will eventually lead to catastrophic environmental conditions for the planet. For those who believe in this disaster scenario, the outcome can only be avoided by the cessation of burning fossil fuels. For them, our future will be tied to an economy totally dependent on renewable fuels like wind, solar and water-based energies such as tidal movement and hydro-power. Their objective in mandating the total elimination of fossil fuels from our energy mix condemns the economy to a world similar to the past when due to these energies being intermittent, economic and social progress struggled to advance. To avoid this, those renewable protagonists count on significant technological breakthroughs for batteries and other energy storage mediums in order to address providing power to people at any time they want or need it, something that fossil fuel-based energy facilities ensure. We question whether people desire or will be happy with an economy and a lifestyle tied to intermittent power.

In an interview with The New York Times reporter Jad Mouawad in the summer of 2008, Exxon Mobil Corporation (XOM-NYSE) Chairman Rex Tillerson discussed his company’s evolving view of the relationship between energy and global warming. He specifically responded to the issue of why the United States was unable to develop a national energy policy, despite the best efforts of presidents over the past 40 years. In his article, Mr. Mouawad quoted Mr. Tillerson responding to his question:

“Sure, but the argument is that we should focus on the demand side of the equation and that we cannot drill our way out of the problem.

“Well, you can’t conserve yourself out of this problem, either. You can’t replace your fuels with alternatives out of this problem, either. The reason the United States has never had an energy policy is because an energy policy needs to be left alone for fifteen to twenty years to take effect. But our policymakers want a two-year energy policy to fit with the election cycle because that is what people want. The answer is you can’t fix it right now.”

Source: Interview with Rex Tillerson by Jad Mouawad of The New York Times, July 19, 2008, as quoted in Private Empire: ExxonMobil And American Power by Steve Coll.

We found this discussion interesting as we have been exploring, in a broad sense, the future of energy. While we certainly cannot know its future, we have been researching and discussing one of the most interesting and controversial energy technology developments that possess the potential to set us off on our next energy transition. To better understand what is meant by energy transition, we have posted two charts – one for the United States and another for the UK – showing how the respective country’s fuel use changed over the centuries.

Exhibit 1. Energy Transformation Of The U.S.

Source: Robert Bryce

With respect to the United States, our country has transitioned from a wood-dominated energy supply in the early 1800s to the use of coal and then oil and gas. The chart also shows the role of hydro-power and the emergence of nuclear power. Turning to the UK, the chart covers a longer period of history than in the case of the United States. Its energy use shows how much of the nation’s supply came from biomass, including food for humans and animals, during the 1500s to 1700s. Wind and water were always a source of energy, but their role was limited. Coal surprisingly played a notable role in the UK’s energy supply as early as 1500, but its importance grew dramatically, peaking by the early 1900s at which time UK coal mines started to be exhausted. Coal’s role in the UK was displaced by greater use of petroleum and natural gas, largely from the UK’s share of North Sea output, and in the latter years of the last century, nuclear began to assume a role in the country’s energy mix.

To gain an appreciation of the significance of the transition between energy sources, the experience of the UK navy offers insight. Winston Churchill, the UK’s war years’ prime minister, was the naval secretary in the early 1900s and under his leadership the government decided to repower its naval fleet with oil in place of coal. That fateful decision spurred British efforts to encourage Britain-based Shell Transport and Trading Company to become involved in the Russian petroleum industry, and later for the government to become a significant player in the politics of the nascent Middle East oil business and the development of nation-states in the region.

Exhibit 2. The Energy Transformation History Of UK

Source: Basque Centre For Climate Change

With fossil fuels under intense environmental and political attack, the world is wrestling with what will be our next powerful energy source. The environmental movement is aggressively pushing to take our energy future back to wind and solar. These fuels, while offering attractive advantages for carbon emissions control, lack energy intensity, the ability to be scaled up to deliver larger volumes, and consistency. The fuel source we are considering for the next energy powerhouse offers the potential for prodigious energy output from materials that are readily available and transform into harmless waste products all at a low cost. That energy source is LENR – Low Energy Nuclear Reaction.

Students of the energy business will immediately question this author’s sanity. Isn’t LENR the same as cold fusion – the embarrassing scam of a couple of scientists who claimed that they had generated energy from mundane materials some 25 years ago? Unfortunately, the scientific claims could not be replicated, calling into question whether the scientists were con artists or just “mad” scientists. Cold fusion was equated with alchemy and has tainted the research on LENR.

According to New Energy Times, “LENRs are weak interactions and neutron-capture processes that occur in nanometer-to-micron-scale regions on surfaces in condensed matter at room temperature. Although nuclear, LENRs are not based on fission or any kind of fusion, both of which primarily involve the strong interaction. LENRs produce highly energetic nuclear reactions and elemental transmutations but do so without strong prompt radiation or long-lived radioactive waste.” As the definition points out, the advantages associated with LENRs include that the reaction occurs at room temperature and produces high energy output during the transformation of the material, but without creating radiation or radioactive waste. The challenges for LENRs include that the science underlying the reaction is not understood, repeatability and consistency of the experiments still need to be confirmed, and optimization has yet to be established.

The most troubling aspect of LENR is the lack of a theory explaining what is occurring during the reaction. The absence of a scientific theory leads to a high degree of skepticism, but as pointed out by a scientist actively involved in researching LENR, there have been prior episodes throughout the history of science where the science was evident but the theory to explain it wasn’t developed until years later. He pointed to the scientific community’s understanding of plate tectonics in 1912 and superconductivity in 1911 with the fact that their scientific theories weren’t developed until decades later. This person speculated that it is possible that the transformations occurring in LENR tests are demonstrating an aspect of materials that hasn’t been completely understood. An example of a similar material development is nanotechnology and the applications it is being prepped to fulfill.

An unclassified 2009 report from the Defense Intelligence Agency (DIA) highlighted how “scientists worldwide have been quietly investigating low-energy nuclear reactions for the past 20 years.” The report went on to cite how the effort is gaining increased research support globally from universities, governments and major corporations. While Japan and Italy appear to be leaders in the research effort, Russia, China, Israel and India are also actively involved. The conclusion of the report stated: “DIA assesses with high confidence that if LENR can produce nuclear-origin energy at room temperatures, this disruptive technology could revolutionize energy production and storage, since nuclear reactions release millions of times more energy per unit mass than do any known chemical fuel.” Of course the key word is “if.”

Earlier this year, Nikkei, the Japanese equivalent of The Wall Street Journal, reported that Mitsubishi Heavy Industries Ltd. (MHVYF-OTC) had established a technology based on the LENR concept of the transmutation of elements. The company was able to experimentally transmute cesium into praseodymium, raising its atomic number by four, without the use of a large-scale nuclear reactor or accelerator. The company is now advancing the technology from a fundamental research stage into a practical research stage, which is a significant development. The move by a primary manufacturer of nuclear technology, Mitsubishi, to advance this technology is driven by its desire to develop a practical process to convert radioactive strontium and cesium into harmless non-radioactive materials.

Mitsubishi physicist Yasuhiro Iwamura presented the group’s research in front of more than 100 researchers from around the world at a meeting at the Massachusetts Institute of Technology in March. At the meeting, Dr. Iwamura stated, “We can confirm the nuclear transmutation of one microgram of reaction product.” Dr. Iwamura is the leader of the intelligence group at the Advanced Technology Research Center of Mitsubishi Heavy Industries, a secretive organization that is engaged in the company’s next-generation research.

The translated Nikkei article described the transmutation experiment in the following manner: “The researchers put the source material that they want to convert on top of the multi-layer film, which consists of alternately laminated thin films of calcium oxide and palladium. The thin metal layers have a thickness of several tens of nanometers. Elements are changed in atomic number in increments of 2, 4 and 6 over a hundred hours while deuterium gas is allowed to pass through the film.

“The transmutations of cesium into praseodymium, strontium into molybdenum, calcium into titanium, tungsten into platinum have been confirmed.”

Mitsubishi’s patent was originally issued in Japan but it was extended in 2013 into a European patent, and protects the company’s proprietary thin-film transmutation technology. The Japanese newspaper also reported that a research and development company of the Toyota Group (TM-NYSE), Toyota Central Research and Development Labs, has also replicated the elemental conversion research with results similar to Mitsubishi’s experiment.

While the Mitsubishi and Toyota research efforts have focused on material transformation rather than the generation of energy, the process is similar. High profile work on LENR as an energy source has been conducted by Andrea Rossi, an Italian engineer, inventor and entrepreneur. He has invented the Energy Catalyzer (E-Cat) and completed two tests, one of which produced 900°C (1,650°F) of heat that could be used to generate steam to power a generator to produce electricity. In early 2013, a group of independent scientists ran tests on two versions of the “Hot Cat,” a one megawatt LENR unit. Their coefficient of performance (COP) was measured, determining the ratio of energy out versus energy in. The COPs in the two tests were 5.6 and 2.2, respectively. Another group, which is neither affiliated with nor has worked with Mr. Rossi, has been using an E-Cat and conducting longer term tests, the results of which may be released soon. This could be a monumental development, although it will not end skepticism of the technology.

LENR is tainted with the cold fusion hoax, but over the years, a small, yet growing band of scientists and researchers around the globe have continued to push the investigation into proving up the science. Top universities around the world are involved in the research along with work sponsored by leading global corporations.

More research needs to be done, but momentum behind LENR appears to be growing. Why? Clearly, LENR is a disruptive technology. As was suggested to us in our conversation with our scientist contact, imagine if you could have a small unit in your garage that could produce electricity to power your house and automobile with a material source that cost $10 and lasted for six months while producing no dangerous waste. Would that revolutionize the power business? Certainly. Could small LENR units be built to power vehicles? Maybe. When will we know? Not for a while, and maybe never. If it works, we are likely talking about a decade-long development period. While that appears a long time, imagine you were at Drake’s well in Pennsylvania in 1859 when whale oil was still the preferred lighting fuel. Would you have embraced oil? We urge readers to pay attention to LENR’s development as it may signal the next energy transformation.

Tracking The Natural Gas Injection Season And Storage Build

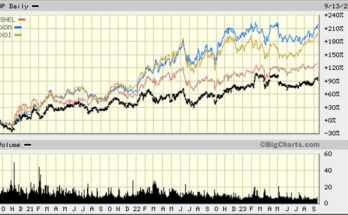

The energy market has been concerned since the vicious winter weather drained the nation’s gas storage supply about whether the gas industry could refill those facilities with enough natural gas to avoid possible supply and price shocks next winter. We ended the last gas withdrawal season on March 31st with only 822 billion cubic feet (Bcf) of supply, the lowest level since 2003. The debate about gas storage centers on the question of what level of inventory is needed to provide comfort to the market – is it 3.0 trillion cubic feet (Tcf) or 3.4 Tcf, or somewhere in between? In an article we authored for the April 15th Musings, we speculated that the industry would be able to restore inventory to roughly the 3.0 Tcf level, meaning that it needed to inject 2.2 Tcf of supply during the roughly 31-week injection season.
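The arithmetic behind that target can be sketched in a few lines of Python. This is only an illustration: the function name is our own, and the 3.4 Tcf case is included simply as the upper end of the range debated above. The starting inventory and the 31-week season length come from the figures cited in the text.

```python
def required_weekly_injection(start_bcf, target_bcf, weeks):
    """Average weekly injection (Bcf) needed to move storage from start to target."""
    return (target_bcf - start_bcf) / weeks


START_BCF = 822     # storage on March 31st, from the text
SEASON_WEEKS = 31   # approximate injection season length, from the text

# Compare the low (3.0 Tcf) and high (3.4 Tcf) ends of the debated range.
for target_bcf in (3000, 3400):
    total_bcf = target_bcf - START_BCF
    rate = required_weekly_injection(START_BCF, target_bcf, SEASON_WEEKS)
    print(f"Target {target_bcf} Bcf: inject {total_bcf} Bcf, "
          f"about {rate:.1f} Bcf per week")
```

On these inputs, reaching 3.0 Tcf requires an average of roughly 70 Bcf per week, while 3.4 Tcf would need a little over 83 Bcf per week.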

Last week, the industry injected 119 Bcf of gas into storage, slightly above the average estimate of gas industry analysts. With 1,499 Bcf of natural gas in storage, the volume is 33.4% below that of the same week a year ago, and 903 Bcf below the average of the last five years. There are several reasons for the sharp improvement in the gas storage situation, which is clearly demonstrated in the government’s chart, shown in Exhibit 3 on the next page, which accompanies the weekly gas storage report from the Energy Information Administration (EIA).

One reason for the improvement in the storage volumes is the continuing growth of domestic gas production as shown in the latest EIA report taken from industry data supplied on Form 914. While the monthly data lags by two months, the March 2014 preliminary production report shows that Lower 48 gross natural gas production was 76.70 Bcf per day (Bcf/d). March’s production increased from February’s upwardly-revised estimate of 75.49 Bcf/d. Since December 2013, production has increased by nearly 2 Bcf/d during a winter when activity in many U.S. oil and gas basins was negatively impacted by bitter cold, ice and heavy snowfalls.

Exhibit 3. Gas Storage On The Rebound

Source: EIA

Higher natural gas prices also impacted consumption of the fuel by the electric power generation sector, although the full impact of lower coal prices has been muted by low inventories of coal at power plants due to logistical challenges from the winter weather. A cooler spring has also limited natural gas demand in various regions of the country as less air conditioning has been needed so far. However, the key contributor to the improving natural gas storage picture is probably the better performance of nuclear power plants. According to data published last Thursday by ThomsonReuters, there were 7,095 megawatts (MW) of nuclear generating capacity idled, or roughly 7% of the nation’s nuclear generating capacity. This outage, however, is half of the rate experienced a year ago and 45% below the rate of outage averaged over the past five years.

We went back and updated two charts from our April 15th article. One shows the history of injection seasons beginning in 1994. That year was one of the five injection seasons that started with initial storage volumes below 1,000 Bcf. Of those five years – 1994, 1996, 1997, 2001 and 2003 – only two seasons failed to end the injection season with at least 3,000 Bcf of gas in storage. In the chart in Exhibit 4 on the next page, we have plotted the performance of storage growth during this injection season so far, which is only nine weeks old. Analysts at Global Hunter Securities suggested in their analysis of the gas storage situation that this year is tracking the 2003 injection season when the industry started at 696 Bcf of gas in storage but ended at 3,155 Bcf. That year the industry was able to inject 2,459 Bcf of gas into storage, which represented an injection rate of 77 Bcf per week.

Exhibit 4. Gas Storage Is In A Deep Hole

Source: EIA, PPHB

So far this injection season, the industry has increased storage volumes from 822 Bcf to 1,499 Bcf, or an injection volume of 677 Bcf. The industry has averaged 75.2 Bcf per week, although it wasn’t until the fourth week of the injection season that it hit that average rate. The last four weeks have seen weekly injection volumes exceed 100 Bcf. The Global Hunter analysts have now raised their estimate for the volume of gas that will be in storage on November 1st when the injection season ends to 3.2 Tcf. They believe that if the nation experiences a cool summer and nuclear power plants remain as efficient then it is possible the season-ending volume could reach 3.4 Tcf. While that volume would not match where the industry has ended injection seasons for the past five years, it should still be a sufficient volume, given the continued production growth, to meet industry needs without requiring supply rationing either through sharply higher gas prices or supply cuts.
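A rough way to put these numbers in context is to extend the pace so far across the rest of the season and to compute the weekly rate each end-of-season target would require. The sketch below does that under a deliberately simple assumption of a constant weekly pace, which actual injections will not follow. The function names are ours; the storage volumes, the nine elapsed weeks and the 31-week season length come from the text.

```python
START_BCF = 822       # storage at the start of the injection season
CURRENT_BCF = 1499    # storage after nine weeks
WEEKS_ELAPSED = 9
SEASON_WEEKS = 31     # approximate season length cited in the text


def average_rate(start_bcf, current_bcf, weeks_elapsed):
    """Average weekly injection (Bcf) achieved so far."""
    return (current_bcf - start_bcf) / weeks_elapsed


def projected_end(current_bcf, weekly_rate, weeks_remaining):
    """End-of-season storage if a constant weekly rate holds (an assumption)."""
    return current_bcf + weekly_rate * weeks_remaining


def rate_needed(current_bcf, target_bcf, weeks_remaining):
    """Average weekly injection needed from here to reach the target."""
    return (target_bcf - current_bcf) / weeks_remaining


weeks_remaining = SEASON_WEEKS - WEEKS_ELAPSED
pace = average_rate(START_BCF, CURRENT_BCF, WEEKS_ELAPSED)
print(f"Average so far: {pace:.1f} Bcf per week")
print(f"Constant-pace projection: "
      f"{projected_end(CURRENT_BCF, pace, weeks_remaining):.0f} Bcf")

for target_bcf in (3000, 3200, 3400):
    needed = rate_needed(CURRENT_BCF, target_bcf, weeks_remaining)
    print(f"To reach {target_bcf} Bcf: {needed:.1f} Bcf per week")
```

Holding the nine-week average for the remaining 22 weeks would put storage a little above 3,150 Bcf, while reaching 3.4 Tcf would require a sustained pace well above the average achieved so far.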

It is interesting to note that the current weekly injection rate, even though it is based on only the first nine weeks of a 31-week season, ranks with the 2001 and 2003 seasons as the best injection rates ever.

Exhibit 5. Weekly Injections Match Peak Years

Source: EIA, PPHB

As we pointed out above, one dynamic in filling storage is the price of gas. The higher the price, presumably the higher domestic production will be, although in the short-term production is often less sensitive to price because wells sometimes are completed as part of scheduled developments. If prices fluctuate sharply, either up or down, then producers might consider adjusting the flow-rates of wells, either higher or lower. The response to price changes may be less rigid in today’s environment not only because there is a growing demand for gas by the power and industrial sectors, but also because many producers are saddled with substantial debt loads and need the additional revenues, even if the price of natural gas is lower, to support their operations and service their debt.

Exhibit 6. Prices Have Risen In Response To Supply

Source: EIA, PPHB

What we see in Exhibit 6, which plots the weekly average gas price (data from Global Hunter), is that the price two weeks ago was exceeded only by the price three years ago. The lowest price, which was recorded two years ago, reflects the terrible state of the gas business in 2012, when the injection season started with the largest storage volume since 1994 by a wide margin. That minimized the need for large injection volumes, as demonstrated by that season experiencing the lowest average weekly injection rate for a season during the past 20 years. Given the current state of the natural gas market and storage volumes, we are comfortable with our April 15th conclusion that the industry will be able to inject at least 2.2 Tcf of gas this season, bringing the storage volume to at least 3.0 Tcf by the start of the withdrawal season. We would not be surprised if the industry is capable of injecting more gas into storage than we postulated in our April forecast, but we would expect gas prices to move in response to the volume of gas in storage.

New Google Driverless Car To Revolutionize Auto Business

Two weeks ago at the annual Code Conference, formerly known as the All Things Digital “D” conference series, top executives from technology companies and other fields gathered on the West Coast to discuss their latest and future products. This conference was the stimulus for some very interesting tech industry announcements such as Apple’s (AAPL-Nasdaq) purchase of the subscription music streaming company, Beats Music, and Beats Electronics, which makes headphones, speakers and audio software, for a combined $3 billion; Google’s (GOOG-Nasdaq) introduction of its driverless car; and Uber’s CEO Travis Kalanick’s declaration that the company planned to eventually replace all its drivers with driverless cars, reducing the cost to consumers and virtually making owning a car a rare event. We’re not sure about the impact of new and improved headphones for Apple devices, but we do understand that Google’s announcement, along with Uber’s embrace of driverless cars, points to significant and transformational trends for the energy industry, especially for the transportation fuel segment.

After four years of work to develop a driverless car, Google recently elected to explore a radically different approach to autonomous vehicles. Rather than creating a vehicle that drove itself but contained the necessary equipment – a steering wheel and brake and accelerator pedals – to allow a passenger to take over and drive the car in an emergency situation, Google wanted a vehicle that transformed the driver into just a passenger. According to Sergey Brin, a co-founder of Google and still active in the company’s research and development efforts, the company decided to shift the focus of its driverless car program a year ago following an experiment in which Google employees used autonomous vehicles for their normal commutes to work. Mr. Brin said that the company started looking at the driverless car developments of other companies, primarily the automobile manufacturers, and concluded that “That stuff seems not entirely in keeping with our mission of being transformative.” The concept of a driverless car without a steering wheel and brake and accelerator pedals and run by computer applications became Google’s new vision.

Exhibit 7. New Google Driverless Car

Source: Google

The Google driverless concept car resembles a stylized Volkswagen (VLKAF-OTC) Beetle, or a Mercedes-Benz (DDAIF-OTC) Smart Car. The car will have no driving equipment that would allow the passenger to take over driving it in an emergency. The only thing the passenger controls is a red “e-stop” button for panic stops and a separate “start” button. That would seem to be less equipment than George Jetson had in his futuristic vehicle in the 1962 Jetsons cartoon series. You can see a steering stick in the picture of the vehicle (Exhibit 9 below).

Exhibit 8. Blue Go And Red Stop Button Drive Car

Source: Google

The design of the vehicle Google plans putting on the roads later this year for testing will need to comply with the recently enacted California law for driverless cars. Three states – California, Nevada and Florida – have enacted laws to regulate autonomous vehicles, at least for testing purposes. All three state laws have been written with the expectation that a human driver would be onboard and able to take control in emergencies. Thus, the Google car will have not only its two buttons but also a steering wheel and brake and accelerator pedals. It reminds one of the ultra-conservative advice to wear both a belt and suspenders to hold up one’s pants.

Exhibit 9. Jetsons’ Car Looks Like Google’s

Source: unioncoast.wordpress.com

Google has arranged to have 100 of its concept cars built by Roush Enterprises Inc., a boutique vehicle assembly company near Detroit. The Google driverless car will have a suite of electronic sensors mounted on it that can see about 600 feet in all directions. The front of the car will be made from a foam-like material in case the computer fails and the car hits a pedestrian. The car is officially classified by the National Highway Traffic Safety Administration (NHTSA) as a Low-Speed Vehicle, meaning it has its top speed restricted to 25 miles per hour. The vehicle must also have a regulation-approved glass windshield, side and rearview mirrors, and a parking brake. Estimates are that the electric Google driverless car model will cost $150,000 and only go around 100 miles before needing a recharge. We doubt this will be the ultimate consumer model Google introduces in the future, and certainly not this expensive.

The Google driverless car will be controlled by an application on a smartphone. The car is summoned to pick up the passenger by the use of the application and then the car will automatically drive the passenger to his destination that is pre-selected on the smartphone application without any human involvement. It is these features that intrigue the principals behind Uber since it already is a smartphone application-based business enabling passengers to summon a private car that acts as a taxi and takes the person to his or her destination with the transaction paid for via the smartphone application.

Although the Google car will be built by someone else, according to remarks by Bernard Soriano, deputy director at the California Department of Motor Vehicles, Google will be considered to be the car’s manufacturer. What hasn’t been determined by California, however, is who will be responsible in the event of an accident, or as Google would have you believe, more likely when their car is crashed into by another vehicle. Mr. Soriano says that question still remains to be answered. Also unanswered is the question of who is the legal operator of the car, if Google’s technology is the pilot? "Right now, Google is saying that there isn’t a person who’s operating the vehicle," according to Mr. Soriano, "It’s the software."

Exhibit 10. A Tough Day For A Google Car

Source: www.droid-life.com

What we do know, however, is that Google cars haven’t been completely accident-proof, witness the car pictured in Exhibit 10 on the prior page. While we don’t know the story behind the damaged Google car pictured, which is one of the company’s Streetview image collector cars used for gathering the data about road conditions in order to prepare the software algorithms to operate a driverless car, it would appear the car was involved in a serious crash. From the picture, it looks as if all the damage is on the driver’s side of the front end, suggesting to us that the car ran into a structure or strayed over the center line and collided with another vehicle driving in the opposite direction. In either case, it appears the sensors didn’t gather the necessary information and interpret it fast enough to allow the car to make a mid-course correction. Was that an equipment issue (sensors) or a software problem? Or could it be that this Streetview vehicle was being operated by a human?

The photo in Exhibit 11 from 2011 shows a Google driverless car, a Toyota Prius, which was involved in an accident. The photo was sent to web site Jalopnik by a passerby. The accident occurred near Google’s Mountain View, California, headquarters. The Prius is identified as one of the company’s earlier driverless cars by the roof equipment that’s smaller than a typical Google Streetview car. The web site observed that from the picture it appears the Google car rear-ended another Prius.

Exhibit 11. Google’s First (Only?) Driverless Car Accident

Source: www.jalopnik.com

Subsequent to its initial report, Jalopnik updated the story after obtaining information from NBC’s San Francisco television station, which spoke with a woman who witnessed the crash and reported that in addition to the two Priuses, the crash also involved three other vehicles. According to the woman, Google’s Prius struck another Prius, which then struck her Honda (HMC-NYSE) Accord that her brother was driving. That Accord struck another Honda Accord, and the second Accord then hit a separate, non-Google-owned Prius. Jalopnik wrote that the Google car striking the first car with enough force to trigger a four-car chain reaction suggests it was moving at a decent speed. Although this accident occurred several years ago, it may have played a role in Google’s decision that it didn’t want to become an also-ran in the autonomous vehicle race but rather wanted its effort to be transformational, which led to its new driverless car with no ability for a passenger to intervene in the vehicle’s operation.

People involved in autonomous vehicle research are suggesting that the technology is developing faster than originally anticipated and as such, they expect driverless cars to be on the roads soon. As we wrote about in our last Musings, the key developments that may determine the pace of driverless cars hitting the road will be in the legal and insurance areas. Issues of liability will shape the laws required to be enacted by each state to allow driverless vehicles on their roads. We suspect that based on the pace at which much of the nation’s social legislation is being enacted, the prospect of driverless cars will result in states copying, with only minor modifications, some of the early legislation allowing these vehicles on their roads. Thus, once the public, state and local officials, and insurance companies acknowledge driverless cars as a reality, the legislation will pass quickly. So what could it mean for the energy business?

Driverless cars will lead to lighter and smaller vehicles, meaning that for internal combustion engines (ICE), fuel efficiency can rise rapidly. If application-based driverless cars become popular, it could lead to fewer people owning cars. An interesting analysis was prepared in 2012 and subsequently updated in 2013. The study, “Transforming Personal Mobility,” was prepared by The Earth Institute at Columbia University and examined the potential impact of driverless cars on cities and towns. One scenario involved studying the impact these driverless cars could have on the yellow taxicab industry in New York City. There are slightly over 13,000 licensed yellow taxicabs that can be hailed by passengers from the sidewalk but cannot be called, scheduled or hired by smartphone applications. These taxicabs, according to data from the taxicab regulatory agency, make roughly 470,000 daily trips on average in the five boroughs of New York, or 410,000 trips just in Manhattan. The data show that the average trip lasts for 11 minutes and covers two miles at an average speed of 10-11 miles per hour. The average wait-time for a passenger to hail a yellow taxicab is reported to be five minutes.

The authors of the study modeled the taxicab demand in New York City using driverless vehicles, which would result in a smaller fleet (only 9,000 versus 13,000+ taxicabs) along with reduced wait-time (less than one minute versus five minutes). The reason for the smaller fleet is that fewer taxicabs would be making empty trips. When a taxicab completes its trip, presumably it would have already been summoned for its next trip, meaning it would drive fewer empty miles. The most attractive aspect of the driverless vehicle fleet is the cost of trips. The average cost of a taxicab trip is $5 per trip-mile. This revenue covers the cost of the driver’s labor, vehicle ownership and operating costs (including fuel) and the owner’s income. The study assumed that the owner’s income was equal to 15% of the total cost, meaning that the real cost of the trip-mile was $4.25. The cost of the driverless taxicab is estimated at $0.50 per mile due to fewer taxicabs, fewer empty trip-miles and reduced driver labor costs.
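The per-mile cost comparison can be reproduced in a few lines. This is only a minimal sketch using the figures quoted above; the variable and function names are our own, and the savings percentage is our own derived figure, not one reported by the study.

```python
# Figures as quoted above from the Earth Institute study.
FARE_PER_TRIP_MILE = 5.00        # dollars per trip-mile, conventional taxicab
OWNER_INCOME_SHARE = 0.15        # owner's income as a share of the total
DRIVERLESS_COST_PER_MILE = 0.50  # dollars per mile, study's estimate


def real_cost_per_mile(fare: float, owner_share: float) -> float:
    """Strip the owner's income out of the fare to get the underlying cost."""
    return fare * (1.0 - owner_share)


conventional_cost = real_cost_per_mile(FARE_PER_TRIP_MILE, OWNER_INCOME_SHARE)
savings_per_mile = conventional_cost - DRIVERLESS_COST_PER_MILE
savings_share = savings_per_mile / conventional_cost  # derived, not from the study

print(f"Conventional cost per trip-mile: ${conventional_cost:.2f}")
print(f"Driverless cost per mile:        ${DRIVERLESS_COST_PER_MILE:.2f}")
print(f"Implied saving: ${savings_per_mile:.2f} per mile ({savings_share:.0%})")
```

On these inputs, the driverless estimate is a bit under one-eighth of the conventional cost per trip-mile.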

From an energy perspective, the 470,000 trips, at an average distance of two miles, equate to the fleet driving 940,000 miles a day. If we assume that due to traffic congestion the average taxicab gets only 10 miles per gallon, then the daily trips require 94,000 gallons of gasoline per day, or 2,238 barrels of crude oil per day at 42 gallons per barrel. For a 365-day year, the taxicab gasoline demand equates to 817,000 barrels of oil. If the driverless taxicab fleet is composed of all-electric vehicles such as the Google car, then we can see a reduction in gasoline consumption of roughly 800,000 barrels of oil per year, albeit not a significant reduction relative to overall annual U.S. oil consumption. The impact on gasoline demand from driverless cars will come as these vehicles take over all the taxicabs in the country along with delivery vehicles.
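For readers who want to test other assumptions, the arithmetic behind these figures can be sketched as follows. The trip count, trip length and fuel economy are the numbers used above; the conversion of 42 U.S. gallons per barrel is the standard one and is what the quoted barrel figures imply. Like the text, this simplification treats a gallon of gasoline as a gallon of crude oil. The variable names are our own.

```python
# Inputs taken from the discussion above.
DAILY_TRIPS = 470_000      # average daily yellow taxicab trips, five boroughs
AVG_TRIP_MILES = 2         # average trip length in miles
ASSUMED_MPG = 10           # assumed fuel economy in congested traffic
GALLONS_PER_BARREL = 42    # standard U.S. oil barrel
DAYS_PER_YEAR = 365

daily_miles = DAILY_TRIPS * AVG_TRIP_MILES
daily_gallons = daily_miles / ASSUMED_MPG
daily_barrels = daily_gallons / GALLONS_PER_BARREL
annual_barrels = daily_barrels * DAYS_PER_YEAR

print(f"Fleet miles per day:  {daily_miles:,.0f}")
print(f"Gallons per day:      {daily_gallons:,.0f}")
print(f"Barrels per day:      {daily_barrels:,.0f}")
print(f"Barrels per year:     {annual_barrels:,.0f}")
```

Changing `ASSUMED_MPG` shows how sensitive the result is to the fuel economy assumption: doubling it to 20 miles per gallon halves every figure downstream.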

After examining the impact of driverless cars on gasoline and oil demand, we would expect that someone will point out that we are too conservative and their analysis will lead to a wild extrapolation of the market impact in the future, eventually leading to the entire U.S. vehicle fleet becoming driverless and all gasoline demand evaporating. Driverless vehicles will become a disruptive technology for both the energy and auto businesses. We remain convinced, however, that the introduction of driverless cars will be at a slower pace than the optimists believe. It is more likely that driverless cars will experience a penetration of the American vehicle fleet that will resemble the current penetration rate of electric cars.

EPA GHG Rules And Coal Plants: More Talk Than Action?

Last Monday, the Environmental Protection Agency (EPA) announced its long-awaited plan to establish a new national guideline for the reduction of carbon dioxide emissions from existing power plants, while at the same time appearing to leave the exact implementation steps up to the individual states. The EPA goal is to cut emissions by 30% by 2030 from the level of CO2 emissions from power plants that entered the atmosphere in 2005. This announcement marks the final step in the plan to create President Barack Obama’s environmental legacy by fulfilling one of his key 2008 campaign promises. That promise was to attack those who are climate change deniers and rally the public behind the President’s actions to reduce carbon emissions in order to improve the health and lives of both Americans and citizens of countries all around the world. This new rule, coupled with the government’s prior step to reduce carbon emissions from new power plants will go a long way to fulfilling the claim made by President Obama in his acceptance speech on the night of the last of the Democratic primaries in 2008 that his nomination marked “the moment when the rise of the oceans began to slow and our planet began to heal.”

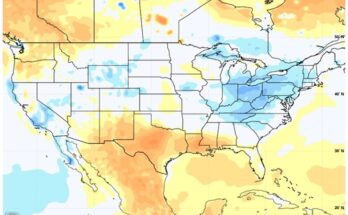

These new EPA carbon emissions rules under the Clean Air Act will be subject to a 120-day period of public comment before being refined by the agency to incorporate the comments and then eventually published in the Federal Register, which is estimated to happen in June 2015. At that point, the rules will be the law of the land and the states will have nine months to develop their respective implementation plans to reduce carbon emissions from the operating power plants located within their borders. The EPA anticipates that the states will file their state implementation plans (SIPs) by June 2016, and that the agency will have reviewed and approved the various single-state plans by June 2017 and multi-state plans by June 2018. The EPA’s guidelines reportedly will provide a wide range of options that the states may incorporate into their SIPs. One provision is that multiple states can band together to create a cap-and-trade scheme such as the one operating in the Northeast states, or they can even join that plan. Some states are in worse shape than others in attempting to reach the targets the EPA has established for them as shown in the accompanying map.

Exhibit 12. Status Of States For Emissions Is Mixed

Source: The Wall Street Journal

What will this carbon emissions policy mean for the energy business? In the near-term, it probably means less for the utility and coal businesses than the claims that were being bandied about before the announcement. Why? Three reasons. First, there is flexibility afforded to the states in how to meet the CO2 reduction targets. Second, the implementation will not occur until near the end of this decade, if then. Third, the base year from which the measured reduction is calculated allows the states and industry to include all the carbon reduction savings already achieved.

The first two explanations don’t fully reflect the legal battle that will be waged by the utility and coal industries as the rules are developed. They will work to disrupt and delay the multiple steps the EPA must take in order to get its proposed rule into law along with launching a wave of litigation against the rule, its scientific rationale and the agency’s handling of the approval process. Lastly, the EPA timetable assumes that the individual states will gladly comply, while the federal government’s experience with the Affordable Care Act (Obamacare) and state compliance shows that a number of them will obstruct and delay implementation of the law.

For the coal and utility industries, the selection of 2005 as the base year for measuring the decline in carbon emissions is important, as demonstrated by a table from a report prepared by investment firm Bernstein Research. The table (Exhibit 13) shows that between 2005 and 2013, the estimated reduction in carbon emissions from existing power plants was 243 million metric tons, or a 10% reduction. Had coal not reclaimed some of the market share in 2013 that it had lost to cheap natural gas in prior years, the percentage reduction might have been considerably greater. In fact, through 2012, the EPA data shows that the utility industry cut carbon emissions by 16%, or roughly half the planned 2030 target cut. This suggests that while the utility industry, measured through 2013, has only achieved about one-third of the mandated emissions cut, it should be able to achieve the targeted reduction, albeit at a currently unknown cost, and after having to make significant adjustments to its power-generating fleet of plants.
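The "one-third" and "half" characterizations follow directly from dividing the cuts already achieved by the 30% target. A minimal sketch of that calculation, using only the percentages quoted above (the variable names are our own, and the percentages are the rounded figures cited in the text, so the results are approximate):

```python
# Percentages quoted above, expressed as fractions of 2005 emissions.
TARGET_CUT = 0.30         # EPA goal for 2030
CUT_THROUGH_2013 = 0.10   # Bernstein Research estimate, 2005-2013
CUT_THROUGH_2012 = 0.16   # EPA data, 2005-2012


def share_of_target(cut_achieved: float, target: float = TARGET_CUT) -> float:
    """Fraction of the mandated cut that has already been achieved."""
    return cut_achieved / target


progress_2013 = share_of_target(CUT_THROUGH_2013)
progress_2012 = share_of_target(CUT_THROUGH_2012)
remaining_cut = TARGET_CUT - CUT_THROUGH_2013  # still needed, measured from 2013

print(f"Share of target achieved through 2013: {progress_2013:.0%}")
print(f"Share of target achieved through 2012: {progress_2012:.0%}")
print(f"Cut still required from 2013 level:    {remaining_cut:.0%} of 2005 emissions")
```

The gap between the 2012 and 2013 results reflects coal regaining market share in 2013, as noted above.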

Exhibit 13. CO2 Reductions Have Industry Part Way Home

Source: Bernstein Research

Even before the target date for SIPs to be in place (2017), there will be further carbon emission reductions due to the impact of anticipated coal plant closures and their replacement by natural gas-fired plants, newly installed emissions clean-up equipment at existing power plants, growth in renewable fuel-powered electricity generation, and electricity demand conservation. Combined with what has already been cut, the anticipated carbon emission reductions planned and currently underway will enable the power industry to achieve nearly 85% of the EPA’s target for carbon emissions reduction. As shown by the chart from Bernstein Research, once all the emissions cuts are considered, the amount of carbon that needs to be cut through forced shutdowns of existing coal-fired power plants amounts to about the same volume of emissions that have already been eliminated since 2005.

Exhibit 14. The Path Utilities Will Follow To CO2 Target

Source: Bernstein Research

Some of this additional emissions reduction will be achieved through the greater use of natural gas and increased energy conservation. As part of its approach, we fully expect the EPA to provide an exhaustive menu of technology and policy options for the states to employ. This approach allows the EPA to claim it is technology-neutral, although we worry about how many of its options are actually technologically and economically feasible. Our concern is based on the agency’s embrace of carbon capture and sequestration (CCS) technology that has yet to be proven to work as a solution for new plant emission control, let alone to be economically feasible as the EPA claims. To date, the signature plant to prove up the CCS technology is late and well over budget. As a result, we anticipate conservation (demand management) will play a large and growing role in helping utilities to meet the carbon emissions reduction target. We anticipate that the EPA will push these options in an attempt to minimize the blowback from claims that its policies are destroying regional economies and costing the economy thousands of jobs, despite the agency’s heavy promotion of the health benefits that will flow from the implementation of the policy. Many of the health benefits come from the reduction in emissions of particulate matter, which is not carbon dioxide.

As is almost always the case in situations where governments mandate solutions, it is the final few percentage points of achievement that are the hardest, and most expensive, to achieve. We anticipate that the efforts to eliminate 30% of our power plant carbon emissions will experience the same fate. At this point, no one knows what it will cost to achieve the carbon emissions reduction target, and we remain skeptical of the cost estimates suggested by the EPA. We think that what then-candidate Obama told his liberal supporters in San Francisco during the 2008 primary campaign, that their electric bills would “skyrocket” once his environmental policy was enacted, will likely prove accurate.

With this announcement, and following President Obama’s campaign visits to children’s hospitals to see youths suffering from acute cases of asthma, along with his political ads promoting the need for these new rules, the President will revert to his normal governing style, which is that words equal actions. Since he has now spoken about establishing these rules in order to meet his goal of cutting carbon emissions, he has devoted sufficient attention to the matter. Governing is hard, and President Obama has yet to understand that fact, or else he finds the work of governing boring.

Assuming these rules are implemented as presented, then the real pain for the utility and energy businesses will begin to be felt most likely around 2020, and the pain will last well into the future. As usual, the true impact of these proposed rules, which are likely to be redone during the approval process, will lie in the details. Even then, it will not be possible to identify all the unintended consequences. The one point we are confident in is that the shape of the final rules will be learned by our current leader from the media. That will be because he will be former President Obama by then. In the meantime, relax, take a deep breath and don’t panic.

Correction:

We had several readers contact us about our article discussing our observations from our drive from Houston to our summer home in Rhode Island. They caught a mistake in our discussion of the prices of gasoline we purchased and our observation about ethanol’s impact on fuel prices. Unfortunately, we referred to one fuel as containing “ethane” when it should have been “ethanol.” As we write between 7,000 and 8,000 words in each Musings, every two weeks, even with all the eyes that read the draft before publication, some things do get overlooked. We appreciate the attention our readers pay to the Musings and thank them for catching our errors. Hopefully, this only happens occasionally.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.