- Does History Of Alaska Pipeline Offer Hope For Keystone?

- NGSA Says More Gas Supply Less Demand This Winter

- Transportation In The U.S. Is Undergoing Subtle Changes

- Will Lower Oil Prices Drive A Greater Austerity Push?

- Rockefellers, Divestment, Clean Energy And Climate Change

Musings From the Oil Patch

October 10, 2014

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Does History Of Alaska Pipeline Offer Hope For Keystone?

The proposed 1,200-mile Keystone XL pipeline from Hardisty, Alberta, Canada to Steele City, Nebraska may be the second most famous crude oil pipeline in American history after the 800-mile Trans-Alaska Pipeline System. One pipeline has yet to be built while the other has been operating since 1977, but the two pipelines share a common regulatory approval history highlighted by environmental controversy. The TAPS line, as the Trans-Alaska Pipeline System is known, was proposed in 1969 to move the then-recently discovered Alaskan North Slope oil to the Lower 48 states market. Rather than shipping the oil by tanker from an icy North Slope port, TAPS was proposed to move the oil across the state to the warm-water Port of Valdez where it could be shipped without restriction.

The Prudhoe Bay oil field was discovered by Atlantic Richfield Corp. (now part of BP plc (BP-NYSE)) and Humble Oil (now part of Exxon Mobil Corp. (XOM-NYSE)) with their Prudhoe Bay State #1 well on March 12, 1968. The field began producing on June 20, 1977, and TAPS ultimately reached a peak shipping volume of 1.5 million barrels a day in 1988. The field actually produced more oil per day than the pipeline’s volume peak, but the excess output was held in storage to ensure stable shipment volumes. Today, Prudhoe Bay produces somewhere around 545,000 barrels per day.

The Prudhoe Bay field lies in a pristine area of the North Slope, between the National Petroleum Reserve – Alaska to the west and the Arctic National Wildlife Refuge to the east. When TAPS was proposed as the least costly and most environmentally safe method for moving the oil to market, it immediately became the target of environmentalists. They were concerned both about the pipeline’s route and about the need to stop Americans from using fossil fuels. As chronicled in a recent article in The Wall Street Journal by Stephen Moore and Joel Griffith of the Heritage Foundation, many of the environmental issues used to fight the construction of TAPS are similar to the attacks being leveled today at the Keystone pipeline. While there are a number of similarities in the attacks, the key to TAPS’ approval doesn’t exist for Keystone today.

Exhibit 1. Route of the Trans-Alaska Pipeline

Source: Flominator, Wikimedia Commons

Exhibit 2. Prudhoe Bay’s Location Raised Environmental Fears

Source: Wikipedia

In February 1969, three oil companies holding leases in the Prudhoe Bay field (ARCO, BP and Exxon) sought and received approval from the federal government to form Alyeska Pipeline Service Corporation. Alyeska was to undertake geological and engineering studies for an oil pipeline that would cross Alaska from the North Slope bordering the Arctic Ocean to the southern Port of Valdez on the Pacific Ocean. Later in 1969, Exxon sent the tanker SS Manhattan, with an ice-strengthened bow, larger engines and hardened propellers, from the Atlantic Ocean through the Northwest Passage to the Beaufort Sea. The voyage tested whether oil could be moved by water during the summer months. The tanker encountered thick ice, which ruptured several tanks and let salt water in. The ship was also pushed off its intended course and had to traverse a more challenging and dangerous passage route. The following summer, the tanker successfully transited the test route, but shipping Prudhoe Bay output by tanker was ultimately deemed too risky.

Environmentalists claimed TAPS risked creating environmental disasters. The Wilderness Society issued a resolution stating that the pipeline threatened “imminent, grave and irreparable damage to the ecology, wilderness values, natural resources, recreational potential, and total environment of Alaska.” In March 1970, the Wilderness Society, along with the Friends of the Earth and the Environmental Defense Fund, filed a suit against the pipeline. Again, the complaint leveled at TAPS was the risk of environmental disaster. One complaint in the suit was that the pipeline would “interfere with the natural and migratory movements of wildlife, primarily caribou and moose.” We understand the animals use the warmth of the oil flowing through the pipeline for relief from the Alaskan winters. The Western Arctic caribou herd, as reported in 2011 by the Alaska Department of Fish and Game, Division of Wildlife Conservation, was four times larger than it was in 1976.

As a result of these claims and the lawsuit, the pipeline’s construction was held up for years. It wasn’t until after the Arab Oil Embargo in the fall of 1973 that Congress moved quickly to pass legislation – the Trans-Alaska Pipeline Authorization Act in November 1973 – allowing the pipeline’s construction to move forward. During the legislative debate, opponents warned that earthquakes could rupture the pipeline and that it threatened wildlife and the “way of life” of native Alaskan tribes. None of these fears has materialized. In November 2002, an earthquake along the Denali Fault to the west of the pipeline damaged the support structures holding the pipeline above ground, but no leaks resulted. That same year, a study conducted for the American Society of Civil Engineers found that the pipeline and the surrounding ecosystem were healthy. Certainly, there was damage to the ecosystem of Prince William Sound from the grounding of the Exxon Valdez tanker in 1989, but recent reports point to its recovery.

What we know is that pipeline construction techniques have improved over the years and that we can safely build lines in environmentally challenging locations. Those capabilities don’t address the issues of recent oil spills by Enbridge (ENB-NYSE), ExxonMobil and TransCanada Corp. (TRP-NYSE), the sponsor of Keystone. The pipeline industry should acknowledge that no project can guarantee 100% avoidance of accidents. Overall, though, the safety record of pipelines is superb, and pipelines are generally safer than other modes of transporting liquid petroleum products, namely rail, barge or tanker. Importantly, a hands-off policy on land, as environmentalists desire, isn’t the answer either, as we witnessed during the Clinton presidency. During the late 1990s, the Forest Service adopted a policy of not clearing out the underbrush in forests, claiming this approach was the best way to preserve nature. That policy contributed to an eventual rise in the number of forest fires fed by the dry brush that had not been cleared. Rather than actively managing Federal forest lands, the government adopted a hands-off policy that contributed to greater damage from extensive forest fires.

So what does the experience with the environmental concerns that hobbled the approval of TAPS have to do with Keystone? The concerns are similar. What changed the TAPS approval dynamic was the Arab Oil Embargo of 1973. What would have been the fate of TAPS had that oil crisis not developed and oil prices not tripled? Possibly we would still be waiting for that oil to reach U.S. markets, just as we are for the North Slope’s natural gas. It is important to remember that U.S. lifestyles were much more dependent on oil in the early 1970s than they are today. At that time, American cars averaged 5-7 miles per gallon and oil generated a substantial portion of our electricity. A large proportion of homes were also heated with oil, especially in the Northeast and Midwest. One wonders if it will take a disruption of our oil supplies to move President Barack Obama to finally approve the Keystone XL pipeline construction permit. A substantial amount of global oil supply is already off the market due to violence. Even so, a further oil supply disruption might not work this time, because of the rapid growth of U.S. oil production from the tight oil and shale plays. Keystone might become history’s most famous pipeline never built.

NGSA Says More Gas Supply Less Demand This Winter

Rest easy about this winter’s energy market, says the Natural Gas Supply Association (NGSA). The industry association recently released a report, prepared by Energy Ventures Analysis (EVA), on the outlook for gas supply and demand this winter. Gas consumption for generating electricity and powering industrial facilities is expected to rise. The bottom line of the forecast, however, is that gas production will keep growing and residential/commercial demand is projected to fall due to a warmer winter, so supply this winter will prove adequate. In the presentation introducing the new report, EVA had one slide explaining what happened last year and another showing why this winter will be quite different. That difference captures why the association is confident that the U.S. gas supply situation will remain comfortable throughout the winter heating season.

Exhibit 3. Polar Vortex Upset NOAA Forecast Last Winter

Source: NGSA

The first slide from the NGSA’s presentation shows what the National Oceanic and Atmospheric Administration (NOAA) predicted as of early October 2013 for temperatures last winter. The western half of the country was expected to experience average to above average temperatures while the remainder of the country would experience average temperatures. However, winter temperatures last year were either average, colder than normal or the coldest on record for 41 of the 48 contiguous states. While two of the seven warmer states have large populations – California and Florida – there were seven states that experienced the coldest winter on record and 16 states that were colder than normal, and these states included large population centers. In other words, if it weren’t for the polar vortex that visited North America twice last winter, we would have ended last winter’s withdrawal season with more natural gas in storage. Had that happened we would be closer to a normal storage situation rather than being 12% below the five-year average at this point in time. The fact we remain so far below the five-year average is the reason why natural gas prices are currently trading around $4 per thousand cubic feet rather than the $3.50 level of 12 months ago or $2.90 two years ago.

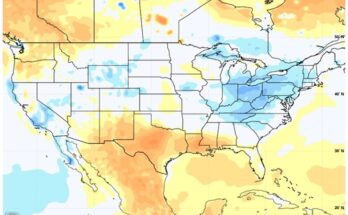

Exhibit 4. A Warm Winter Will Ease Fear Of Gas Shortage

Source: NGSA

In looking toward this winter, EVA used the NOAA forecast (seen in Exhibit 4). That forecast predicts a winter 11% warmer than last year and 3% warmer than the 30-year average. The key point is that it calls for only 3,442 heating degree days, 11% fewer than the 3,865 heating degree days recorded last winter. In addition to a warmer winter, the study projects a 5.4% increase in domestic gas production, to an average of 70.8 billion cubic feet per day (Bcf/d). Demand for gas this winter is projected to fall by 3.7%, or 3.4 Bcf/d, with the decline coming entirely from lower residential/commercial consumption due to warmer winter temperatures. Gas for generating electricity is forecast to increase marginally, while gas consumption by the industrial sector should increase by 6%, or 1.4 Bcf/d.
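The arithmetic behind these forecast figures can be checked quickly. The short Python sketch below uses only the numbers quoted from the EVA outlook. The "implied" prior-winter levels are our own back-calculations from the stated percentage changes, not figures published in the report. Because the inputs are rounded, the implied levels are approximate.

```python
# Figures quoted in the EVA outlook for the NGSA, as summarized in the text.
HDD_LAST_WINTER = 3865      # heating degree days recorded, winter 2013/2014
HDD_THIS_WINTER = 3442      # heating degree days forecast, winter 2014/2015
PRODUCTION_FORECAST = 70.8  # Bcf/d, forecast average domestic production
PRODUCTION_GROWTH = 0.054   # 5.4% increase over last winter
DEMAND_DROP_BCFD = 3.4      # Bcf/d decline in total demand
DEMAND_DROP_PCT = 0.037     # the same decline expressed as a fraction (3.7%)
INDUSTRIAL_GAIN_BCFD = 1.4  # Bcf/d increase in industrial use
INDUSTRIAL_GAIN_PCT = 0.06  # the same increase expressed as a fraction (6%)


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


def implied_base(change: float, fraction: float) -> float:
    """Starting level implied by an absolute change and its fractional size.

    Assumption: both inputs describe the same base, so base = change / fraction.
    """
    return change / fraction


hdd_change = pct_change(HDD_LAST_WINTER, HDD_THIS_WINTER)
implied_prior_production = PRODUCTION_FORECAST / (1 + PRODUCTION_GROWTH)
implied_prior_demand = implied_base(DEMAND_DROP_BCFD, DEMAND_DROP_PCT)
implied_prior_industrial = implied_base(INDUSTRIAL_GAIN_BCFD, INDUSTRIAL_GAIN_PCT)

print(f"Heating degree days: {hdd_change:.1f}% versus last winter")
print(f"Implied production last winter: {implied_prior_production:.1f} Bcf/d")
print(f"Implied total demand last winter: {implied_prior_demand:.1f} Bcf/d")
print(f"Implied industrial demand last winter: {implied_prior_industrial:.1f} Bcf/d")
```

Run as written, the sketch confirms the roughly 11% drop in heating degree days. It also suggests the EVA percentages imply production of roughly 67 Bcf/d and total demand of roughly 92 Bcf/d last winter.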

Natural gas storage injections for the past two weeks have exceeded the estimates of industry analysts. We based our 2014 storage forecast on 2003, because that year recorded the largest injection volume of any year from 1994 to now. In the most recent three-week period, 299 billion cubic feet (Bcf) of gas was injected into storage, which compares favorably with the 302 Bcf injected during the comparable three weeks in 2003. End-of-season volumes will depend on how much gas is injected during the next few weeks. About 3,100 Bcf of gas is already in storage, and gas production remains strong while temperatures are cooling. On that basis, we are on track to reach 3,435 Bcf, and possibly as much as 3,500 Bcf, by the end of this month, which ends the injection season.
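The sketch below gauges how demanding the end-of-season target is. Purely for illustration, it assumes injections continue at the average weekly pace of the most recent three weeks (299 Bcf over three weeks). Real injections usually taper as the season ends, so this is a rough yardstick rather than a forecast.

```python
STORAGE_NOW_BCF = 3100        # gas already in storage, per the text
TARGETS_BCF = (3435, 3500)    # base-case and high-case end-of-season volumes
RECENT_INJECTIONS_BCF = 299   # injected over the most recent three weeks
RECENT_WEEKS = 3

# Assumption for illustration: injections continue at the recent average pace.
weekly_pace = RECENT_INJECTIONS_BCF / RECENT_WEEKS


def weeks_to_reach(target_bcf: float, current_bcf: float, pace_bcf_per_week: float) -> float:
    """Weeks needed to close the gap to a target at a constant weekly injection rate."""
    return (target_bcf - current_bcf) / pace_bcf_per_week


for target in TARGETS_BCF:
    gap = target - STORAGE_NOW_BCF
    weeks = weeks_to_reach(target, STORAGE_NOW_BCF, weekly_pace)
    print(f"{target} Bcf: gap of {gap} Bcf, about {weeks:.1f} weeks at {weekly_pace:.1f} Bcf/week")
```

At roughly 100 Bcf per week, closing the 335 Bcf gap to 3,435 Bcf takes about 3.4 weeks. Reaching 3,500 Bcf takes about 4 weeks.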

Exhibit 5. 2014 Injections Closely Tracking 2003 Season

Source: EIA, PPHB

Consistent gas production growth from shale formations is what has driven storage volumes. As the Energy Information Administration (EIA) reported in its latest Natural Gas Monthly, July’s production averaged 70.4 Bcf/d, a new monthly record, while demand at 60.6 Bcf/d was down 2% from last year and the lowest July demand since 2010. The key to the fall in demand was the fact that gas delivered to electric power generators declined 7.3% from last year and was the lowest July since 2009. As Exhibit 6 shows, the key to storage volumes and gas prices has been the volume of gas sent to electric utilities.

Exhibit 6. Electric Power Gas Use Key To Gas Storage

Source: from EIA data courtesy of Art Berman

The big question mark about the forecast is the upcoming winter’s temperatures. If NOAA’s forecast is accurate, and industrial gas demand does not grow more than projected, natural gas prices should stay where they are currently trading or possibly decline. On the other hand, as we wrote a couple of issues ago, the 2014 Old Farmer’s Almanac forecasts another cold winter like last year. If that comes to pass, gas storage could be lower than anticipated next spring, likely driving gas prices higher. As our work showed, any winter less severe than last year will leave more than adequate gas in storage and will not provide a catalyst for higher gas prices. To put last winter’s severity in perspective, it was one of only three winters since 1931/1932 with more than 3,800 heating degree days; the other two were 1995/1996 and 2001/2002. It is interesting to note that all these severe winters occurred during the current global warming pause. Stay tuned and keep your long-johns handy.

Will Lower Oil Prices Drive A Greater Austerity Push?

West Texas Intermediate (WTI) oil prices fell below $90 per barrel last week in response to data reflecting weakening economic activity in China and Europe. Recent economic data for the United States has been mixed, but generally it has been more positive than negative. That said, future economic growth is being questioned by the weaker global outlook and the announcement of the first Ebola case in the United States.

Early last week, the debate in the energy industry was about how much production Saudi Arabia would cut to support global oil prices at $100, the price the Saudis have said was fair. That price is also consistent with the revenue the Kingdom needs to finance its government. Later in the week, it came out that rather than cutting production, Saudi Arabia was reducing oil prices for Asia and the United States to protect its market share. This development conjures up memories of the early- to mid-1980s, when global oil demand fell following the dramatic oil price rise of the 1970s. During that time, global oil prices fell and decimated the health of the industry. The cost of imported oil for U.S. refiners peaked in February 1981 at $39.00 per barrel and subsequently fell to a low of $10.91 per barrel in July 1986, a 72% drop.
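The 72% figure is a simple peak-to-trough calculation. Here it is sketched with the two refiner import costs quoted in the text; the helper function is our own illustration.

```python
def pct_decline(peak: float, trough: float) -> float:
    """Peak-to-trough decline, in percent."""
    return (peak - trough) / peak * 100


# Cost of imported crude oil for U.S. refiners, $/bbl, as quoted in the text.
PEAK_FEB_1981 = 39.00
LOW_JUL_1986 = 10.91

decline = pct_decline(PEAK_FEB_1981, LOW_JUL_1986)
print(f"Decline from February 1981 to July 1986: {decline:.1f}%")
```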

WTI crude oil futures prices hit their absolute peak of $145.29 per barrel on July 3, 2008. Since then, prices have peaked above $100 per barrel every year from 2011 onward. Interestingly, those annual peaks have generally trended lower: $113.52 on May 2, 2011, $109.77 on Feb. 24, 2012, $110.10 on Aug. 28, 2013 and $107.26 on June 20, 2014. Only 2013’s peak edged above the prior year’s. The downward drift may signal an erosion in the strength of the oil market despite expectations of rising global oil demand. It is also noteworthy that these declining oil price highs have come despite an increase in the volume of oil held off the market due to geopolitical events. To some degree, this weakening oil price trend reflects the growth in U.S. oil production.

Exhibit 7. Oil Prices Appear To Be In A Down Trend

Source: EIA
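Tabulating the annual WTI peaks quoted in the text makes the pattern easier to see. The sketch below does that; the function name and data layout are our own illustration. It shows that the decline has not been strictly year over year, since the 2013 peak slightly exceeded 2012’s. It also shows how far each annual peak sat below the 2008 high.

```python
from datetime import date

# WTI futures price peaks quoted in the text ($/bbl).
ALL_TIME_PEAK = (date(2008, 7, 3), 145.29)
ANNUAL_PEAKS = [
    (date(2011, 5, 2), 113.52),
    (date(2012, 2, 24), 109.77),
    (date(2013, 8, 28), 110.10),
    (date(2014, 6, 20), 107.26),
]


def year_over_year(peaks):
    """Return (year, change in $/bbl versus the prior year's peak) pairs."""
    return [
        (cur[0].year, round(cur[1] - prev[1], 2))
        for prev, cur in zip(peaks, peaks[1:])
    ]


changes = year_over_year(ANNUAL_PEAKS)
strictly_falling = all(delta < 0 for _, delta in changes)
# Percent by which each annual peak sits below the July 2008 all-time peak.
below_2008 = [
    (d.year, round((ALL_TIME_PEAK[1] - p) / ALL_TIME_PEAK[1] * 100, 1))
    for d, p in ANNUAL_PEAKS
]

print(changes)
print("Strictly falling every year:", strictly_falling)
print("Percent below the 2008 peak:", below_2008)
```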

Large new supplies of oil are difficult to find around the world, and the oil being discovered is of lower quality, so we face an upward bias in the cost of finding oil. However, the stagnant global economy and the prospect of more oil supply arriving in the next couple of years, especially from the U.S., suggest oil prices may head lower before they go higher. The near-term downward bias for oil prices puts increased pressure on E&P company profitability.

We continue to see oil companies cut spending by cancelling drilling projects, releasing offshore drilling rigs, reducing headcounts, high-grading drilling prospects and selling or trading assets and acreage. The E&P industry needs to boost its returns, and without higher commodity prices, managements have little choice but to rein in their spending. That means more of the steps enumerated above, but it also means improving internal efficiencies and focusing on overlooked opportunities. One of these opportunities may be increasing recovery rates from existing fields and recovering oil that may be lost in transportation and refining operations. One business that will benefit from this trend is production chemicals, an oilfield sector currently receiving a high level of attention from private equity investors as well as strategic buyers.

An interesting player in this chemicals investment space is Berkshire Hathaway (BRK.A-NYSE), but we doubt many people are aware of the company’s involvement. Late in 2013, Berkshire agreed to purchase Phillips Specialty Products Inc. (PSPI) from its parent Phillips 66 (PSX-NYSE). PSPI develops and manufactures polymers that maximize the flow potential of pipelines carrying crude oil, refined products or waste water. The deal was worth about $1.4 billion, but roughly $450 million of that was cash and liquid assets, so the enterprise was worth roughly $1 billion. Berkshire paid for the deal with 17.4 million Phillips 66 shares it owned, helping that company in its share buyback program.
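The deal arithmetic can be laid out the same way. This sketch uses only the approximate figures in the text. The per-share value is our own implied calculation from the $1.4 billion deal value and the 17.4 million shares exchanged; it is not a reported price.

```python
DEAL_VALUE = 1.4e9            # approximate total value of the PSPI transaction, $
CASH_AND_LIQUID = 450e6       # approximate cash and liquid assets inside PSPI, $
PSX_SHARES_EXCHANGED = 17.4e6 # Phillips 66 shares Berkshire handed over

# Enterprise value here is simply deal value less cash and liquid assets.
enterprise_value = DEAL_VALUE - CASH_AND_LIQUID
# Implied (not reported) value per Phillips 66 share exchanged.
implied_price_per_share = DEAL_VALUE / PSX_SHARES_EXCHANGED

print(f"Implied enterprise value: ${enterprise_value / 1e9:.2f} billion")
print(f"Implied value per Phillips 66 share: ${implied_price_per_share:.2f}")
```

The roughly $0.95 billion result is what the text rounds to “roughly $1 billion.”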

What’s the significance of this move, given that Berkshire’s stock portfolio of energy-focused investments has been reduced over the past year? Warren Buffett, in a press release announcing the PSPI acquisition, stated that "The flow improver business is a high-quality business with consistently strong financial performance, and it will fit well within Berkshire Hathaway." He has charged James Hambrick with integrating the business. Hambrick is chief executive of Lubrizol, the chemical subsidiary Berkshire purchased three years ago for $9 billion. PSPI’s products will help improve the flow of oil and refined products that move through Berkshire’s pipeline subsidiary and in the tank cars hauled by its BNSF railroad subsidiary. Both of these businesses will grow as part of the nationwide energy infrastructure expansion in response to the shale revolution that has boosted our oil and gas production.

One thing we do not know is who benefits from improving the flow and recovery of oil from pipelines and railroad tank cars. Is it possible Berkshire can claim ownership of the recovered oil, or does the value reside with the shipper, making it worth their while to buy the flow improver products? Either way, getting more oil and petroleum products to market will create value, since that oil is currently lost and has no economic value. Another dynamic comes from a recent Wood Mackenzie report. The consultant states that enhanced oil recovery could add 1.5 to 3.0 million barrels a day of tight oil output after 2020, further increasing the U.S.’s role as an energy powerhouse. Wood Mackenzie only referenced the new technologies being developed. Based on our history with enhanced recovery efforts since the 1970s, however, we expect production chemicals to play a role. Will they involve products such as flow improvers? We don’t know, but we suspect the chemistry of flow improver products will play a role. Throughout history, oil industry efforts to deal with austerity pressures have often proven to be a catalyst for developing new technologies that open up new energy frontiers. This is an exciting, albeit pressure-filled, time for the industry.

Transportation In The U.S. Is Undergoing Subtle Changes

As we prepare to drive from our summer home in Rhode Island back to Houston, we find it interesting to learn of new data about commuting patterns in this country and of various scenarios regarding technology’s impact on driving in the future. The new commuting data came from the 2013 American Community Survey, the annual compilation of social and economic data collected by the United States Census Bureau.

The Brookings Institution recently prepared an analysis of changes in commuting patterns in various cities during 2007-2013, focusing on non-vehicle commuting to work. The data shows that driving is slowly declining, while more people are walking and bicycling to work. These trends are especially pronounced among younger workers. Another commuting dynamic has been meaningful growth in working from home. That growth came despite Marissa Mayer, the new CEO of Yahoo (YHOO-NASDAQ), ordering all her employees to leave home and start showing up at the office. As the data shows, driving declined in over two-thirds of the cities sampled. Those cities also experienced an increase in alternative modes of commuting, including walking, biking, public transportation and telecommuting.

Exhibit 8. Non-vehicle Commuting To Work Growing

Source: Brookings Institution

According to the Brookings analysis, the share of national commuters using private vehicles to get to work has edged down for the first time in decades, dropping from 86.5% in 2007 to 85.8% in 2013. Public transportation just recorded the most passenger trips since 1956, and its share of the commuting market climbed above 5%, a level not seen since 1990. The share of commuters walking and bicycling to work rose to nearly 4%. The biggest gain, however, came from workers who technically don’t commute at all but do their jobs by computer from home. Today, nearly as many people work from home as ride public transportation to their jobs.

The trends in commuting are interesting. Prior to the 1980 census, the commuting population included everyone age 14 and older; in 1980, the cutoff was raised to age 16. In 1980, 84.1% of commuters drove to work in private vehicles. That percentage increased to 86.5% in 1990, but by 2013 it had declined to 85.6%. A more significant point is that the percentage of commuters driving alone climbed from 64.4% in 1980 to 73.2% in 1990 and 76.2% in 2013. In the interim, that percentage bounced around, from 76.2% in 2000 to 76.0% in 2008 and then back up to 76.2% in 2013. We are not sure whether that trend signals that the proportion of commuters driving alone has peaked. The minor changes during the past 13 years may merely reflect changing economic conditions and gasoline prices. If gasoline prices are affecting commuting trends, a period of lower oil prices, and theoretically lower gasoline prices, suggests the share of commuters driving alone may head higher. Vehicles with better mileage may also boost this trend. Better mileage has often been cited to explain why vehicle miles driven rose despite higher gasoline prices. A combination of more efficient cars and falling gasoline prices may explain why vehicle miles traveled showed an uptick in June for the first time in years.
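The sketch below converts the commuting shares quoted in the text into percentage-point changes between the survey years cited, so they sit on a common footing. The helper function is hypothetical, written for illustration, and the shares are exactly as quoted.

```python
# Commuter shares (percent) as quoted in the text from Census/ACS data.
PRIVATE_VEHICLE = {1980: 84.1, 1990: 86.5, 2013: 85.6}
DRIVE_ALONE = {1980: 64.4, 1990: 73.2, 2000: 76.2, 2008: 76.0, 2013: 76.2}


def point_changes(shares: dict[int, float]) -> dict[str, float]:
    """Percentage-point change between each pair of consecutive years."""
    years = sorted(shares)
    return {
        f"{start}-{end}": round(shares[end] - shares[start], 1)
        for start, end in zip(years, years[1:])
    }


print("Private vehicle:", point_changes(PRIVATE_VEHICLE))
print("Drive alone:", point_changes(DRIVE_ALONE))
```

The output underlines the point in the text. The drive-alone share gained 8.8 points during the 1980s, while its moves since 2000 have stayed within a fraction of a point.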

There was interesting data about people using public transportation. The largest share of people in this category had commutes of 60 minutes or more. By contrast, the largest shares of both solo drivers and carpoolers had commutes of only 15-19 minutes. With respect to housing, only 40% of public transportation commuters owned their home, versus 70% of solo commuters and 60% of carpoolers. The high concentration of renters among public transportation commuters may explain why 36% of them do not have a vehicle.

While contemplating the latest commuting trend changes, we read Re-Programming Mobility: The Digital Transformation of Transportation in the United States. The report was prepared by Dr. Anthony Townsend, Senior Research Scientist at the Rudin Center for Transportation Policy & Management of the Robert F. Wagner Graduate School of Public Service at New York University. Its aim was to speculate on how applying digital technology in the transportation sector could reshape our driving future. The report takes off from a recently published transportation forecast set in 2030, prepared by Forum for the Future. That report declares that “mobility is a means of access – to goods, services, people and information. This includes physical movement, but also other solutions such as ICT-based [information and communications technology] platforms, more effective public service delivery provision, and urban design that improves accessibility.” Dr. Townsend said this broader view of mobility forces one to consider issues such as the growth of telecommuting and shifting trends in the housing market to better understand the transportation system of the future.

In developing the report, Dr. Townsend eschewed the typical scenario development process popularized by Royal Dutch Shell (RDS.A-NYSE) in the 1970s. He explained that the Shell process constructs four scenarios representing four possible permutations. He then pointed out that if you pick the wrong variables on which to base the scenarios, they may not be relevant. Dr. Townsend instead opted to use the alternative futures method developed at the University of Hawaii. That method involves developing a story about the future that can be grouped into one of four archetypes – growth, collapse, constraint and transformation. The key dynamics of each of these archetypes are:

“Growth: A future in which current key conditions persist, including continued historical exponential growth in certain domains (economics, science and technology, cultural complexity, etc.); Also known as PTE, or “present trends extended”.

“Collapse: A future in which some conditions deteriorate from their present favorable levels, and some critical systems fail, due to a confluence of probable, possible, and wildcard factors.

“Constraint: A future in which we encounter resource-based limits to growth. A sustainability regime emerges, slowing previous growth and organizing around values that are ancient, traditional, natural, ideologically-correct, or God-given.

“Transformation: A future of disruptive emergence, “high tech,” with the end of some current patterns/values, and the development of new ones, rather than the return to older traditional ones. This is a transition to an innovation-based regime of new and even steeper GROWTH.”

Unfortunately, we cannot do adequate justice to retelling the four stories, but the scenarios are interesting and entertaining as they explore the key issues that shape them. To start, since this report takes off from the Forum study, Dr. Townsend sets aside issues such as future energy systems, the economy and demographics. He does assume that for the next five years, the economy will remain stagnant and fuel prices expensive. The scenarios are set in four places – Atlanta in 2028, Los Angeles in 2030, New Jersey in 2029 and Boston in 2032 – and demonstrate the four archetypes.

Why are the scenarios based on a roughly 15-year time period in the future? History shows that the United States has reorganized its transportation system twice before within that time span. In the 1920s and 1930s, American cities reorganized their streets around the capabilities and needs of the automobile, establishing the auto era. The second time involved the construction of the Interstate Highway System between 1955 and 1970.

In developing its four scenarios, the report found it critical to ask and answer the following question and its sub-questions:

What technologies are working?

How do they enable new forms of mobility? Who is bringing them to market? How are they being adopted? Who is regulating them, and how? How do public sector institutions act or respond to them? What are their unintended consequences?

Within each scenario, the answers become obvious as the predicted transportation conditions demonstrate their impact. As the report points out, self-driving cars are the “800-pound gorilla” in the transportation system of the future. In fact, as the report notes, the autonomous vehicle’s future has been forecast for nearly 75 years. It started with Norman Bel Geddes, the industrial designer who shaped General Motors’ (GM-NYSE) highly influential Futurama exhibit at the 1939 World’s Fair in New York City. He predicted extensive automation of private vehicles in his 1940 book Magic Motorways. Certainly, the auto-centric landscape of America was in place by 1960, but driving was still a human endeavor. Self-driving cars remained just around the corner, their arrival continually predicted. In a 1963 book, University of California Berkeley urban scholar Melvin Webber predicted a future of high-speed, self-driving cars and accelerated urban sprawl. Again, his predictions were only partly fulfilled.

The Mobility report projects the success of Google’s autonomous vehicle concept. The report acknowledges a range of forecasts for the penetration of self-driving cars in the American fleet, but it assumes the trend will be well established by 2029. To sum up the scenario conclusions, Dr. Townsend wrote:

“First, we tried to understand how different market and regulatory conditions could result in very different transportation and land use outcomes. In GROWTH [Atlanta], we forecast Google’s consolidation of its Waze, Nest, Fiber, Maps and self-driving car businesses into a monolithic public-private partnership with the Georgia state Department of Transportation to manage the development of a segregated autonomous vehicle road system in the Atlanta area, connecting existing edge cities with new exurban housing developments serviced by Google technologies. In COLLAPSE [Los Angeles], we forecast a loosely regulated yet reinvigorated automobile industry flooding the streets of southern California with a heterogeneous mishmash of assistive and autonomous vehicles that don’t interoperate well – the safety benefits of self-driving cars are realized, but not the congestion-reducing ones. We believe this is an important twist on the public debate, which to date has implicitly assumed a fleet of more or less identical, or at least highly inter-operable vehicles. Throughout the report we deliberately use a variety of terms to describe these products, (self-driving, autonomous, robot car, etc.) to highlight this issue.

“Second, we looked at other modes of transportation where self-driving technology could be even more revolutionary than it will be for cars. In CONSTRAINT [New Jersey], we forecast the development of an expansive regional network of high-speed autonomous bus rapid transit, fed at transfer points by local networks of smart paratransit jitneys. Finally, in TRANSFORMATION [Boston], autonomous driving technology underwrites a major deployment of electric bike-sharing systems by allowing users to summon a bike to any location, and the system to automatically reposition and rebalance vehicles.”

One example of the interesting challenges and changes that self-driving cars create in the scenarios is Santa Monica, where, instead of parking, a line of autonomous cars fully encircles a building, driving round and round while awaiting a summons from their owners. The congestion and speed of autonomous vehicles make walking challenging and crossing streets virtually impossible. Likewise, in Boston, the electric bikes coincide with a surge in young singles moving into the central city and living in 140-to-160-square-foot apartments with minimal conveniences and all their extra possessions stored offsite, available for immediate delivery by autonomous vehicles summoned by smartphone.

The current shifts in vehicle use, coupled with the scenarios of how self-driving cars could reshape our transportation future, give one pause when thinking about how our energy consumption may change. It is dangerous to assume that past trends will continue into the future. But which of these trends or scenarios will most affect our future? It will likely be a combination, but will the trends leading to greater consumption outweigh those reducing it, or will it be the other way around? While we don’t know the answer, having read the Mobility report we certainly feel better prepared to understand the changing transportation dynamics.

Rockefellers, Divestment, Clean Energy And Climate Change

On Sunday, September 21, nearly half a million people participated in the People’s Climate March in New York City, along with similar rallies in thousands of other cities around the world, including Rio, London, Jakarta and Brisbane, to name just a few. New York City was the epicenter because the march preceded the United Nations Climate Summit two days later, which was highlighted by a pitch from President Barack Obama, who is trying to orchestrate a global climate treaty next year in Paris after his and then-Secretary of State Hillary Clinton’s failed effort in Copenhagen in 2009. That conference was marked by several key delegations, among them China and India, leaving a meeting at which President Obama was delivering a speech and heading to a private meeting. President Obama and Secretary of State Clinton interrupted that meeting by bursting into the closed-door session. As we now know, the American leaders learned the location of the secret meeting from information our National Security Agency obtained by eavesdropping on cell phone calls and intercepting the emails of several participating officials.

One of the attention-getting announcements during the lead-up to the UN climate summit came from members of the Rockefeller family, who said they planned to use their $860 million philanthropic organization, the Rockefeller Brothers Fund, to help promote the efforts of the Global Divest-Invest movement. This divestment effort reportedly began in 2011, spearheaded by Bill McKibben, the Schumann Distinguished Scholar in Environmental Studies at Middlebury College, a fellow of the American Academy of Arts and Sciences, and the founder of the environmental activist group 350.org, named for the supposedly safe atmospheric carbon dioxide level of 350 parts per million. Further digging revealed that the Rockefellers were financially supporting the divestment movement’s founders well before 2011.

The Rockefeller divestment pledge was one of 800 commitments from institutions and individuals willing to divest a combined $50 billion in fossil fuel investments and reinvest the funds in renewable energy and sustainable economic development projects. Former Vice President Al Gore announced the commitments in a speech at the UN Climate Summit. According to data in a report issued at the summit by Arabella Advisors and the Divest-Invest coalition, these commitments have doubled since January 2014.

Digging into the Rockefeller decision has been very interesting, given how prominently the climate summit’s organizers highlighted it. Valerie Rockefeller Wayne, the fund’s chairwoman; Stephen Heintz, its president; and Nelson Rockefeller conducted several media interviews about the family’s commitment to use the fund to advance environmental issues. According to Mr. Heintz, “John D. Rockefeller, the founder of Standard Oil, moved America out of whale oil and into petroleum.” He went on to say, “We are quite convinced that if he were alive today, as an astute businessman looking out to the future, he would be moving out of fossil fuels and investing in clean, renewable energy.” And that is exactly what the Rockefellers did. We wonder whether the government would allow him to monopolize the clean energy business as he did the oil industry.

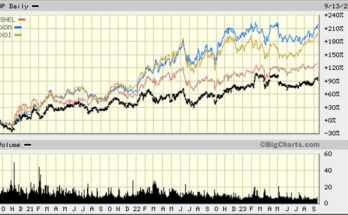

In the 1980s, according to Mr. Rockefeller, members of the family formed a $2 million fund to invest directly in renewable energy. “The fund didn’t survive, which was a lesson,” he said. Nevertheless, he added, the failure of the fund was “a badge of honor.” We’re not sure about honor, but it certainly provided an education. We thought investors were interested in making money, not earning badges. It was also telling that Mr. Rockefeller didn’t say that the family was investing in renewables now, or had in recent years. Had they, the “badge of honor” might have become a badge of stupidity. Consider the relative performance over the past five years of the Standard & Poor’s Clean Energy Index versus two oil-related indices, the Philadelphia Oil Service Index and the NYSE Arca Oil Index, and the overall stock market. Only in the past two years has the clean energy index generated a positive return for investors, and that came with further government support for renewables.

Exhibit 9. Clean Energy Has Been A Dismal Investment

Source: S&P, Yahoo Finance, PPHB

An interesting side note is that the Rockefeller organization has been supporting many environmentalist groups through direct grants from its fund. Equally interesting is that the Rockefeller Brothers Fund decided to exit coal and oil sands investments well before 350.org began its divestment push. The fund has not yet decided when it will divest its conventional oil investments, and it is likely to keep its natural gas investments. So does the Rockefeller fund view natural gas as a clean energy source, just as the Obama administration once did? This disclosure raises interesting questions about whether the fund’s public announcement was designed to help the climate change movement rather than to accurately reflect what the fund was doing. Maybe 350.org needed the announcement to regain momentum for its climate change push.

The divestment movement has gained power since 350.org made it the prime focus of the organization’s existence. The concept is modeled on the anti-apartheid movement of the 1980s, which cajoled endowments and pension funds into eliminating their investments in companies doing business with either the government or companies in South Africa until the country’s segregation policies were undone. The effort was considered a success, as those policies were ultimately changed and the country opened to new investment and experienced more rapid economic growth, but it took about eight years to influence government policy.

Exhibit 10. Expect Increased Pressure On Ivy League Funds

Source: The Wall Street Journal

So far, the divestment effort has had a few successes, but the pressure on endowments and pension plans continues to grow. As shown in Exhibit 10, the two largest university endowments, Harvard and Yale, have declined to adopt a policy of fossil fuel divestment, decisions considered major defeats for the climate change movement.

When we did a Google search for “fossil fuel divestment,” we got 208,000 results, but importantly the third listing, following an ad for a “green” mutual fund and a story about the Rockefeller divestment step, was the 350.org manual on how to organize a divestment movement on a college campus. The key to the 350.org effort is organizing a group of students into an active body that then pushes to draw attention to the movement in order to influence university authorities to change their policies and actions. Of interest to us in reading the manual was its point that “encircling” is an effective strategy for influencing (intimidating?) authorities to act in the desired way. The manual described how a sit-in by a 350.org group at a college endowment meeting prevented officials from leaving the building and ultimately led to a vote to divest the fund’s fossil fuel investments. That account was reinforced by a description of how 350.org rallied 15,000 people on November 6, 2011, to encircle the White House when it appeared that President Barack Obama would approve the permit to build the Keystone pipeline. Four days later, the State Department and President Obama announced that the Keystone permit decision would be delayed until 2013 while alternative routes were considered.

People who want to organize a local 350.org group must first sign the organization’s manifesto (an interesting choice of terms). Two key points in that manifesto caught our attention; they are quoted below:

“We’re not experts, but we know enough about the climate crisis to speak the truth. We keep up with the science and the politics as best we can, but we also know we don’t need to know every bit of information to stand up for our future. We recognize that speaking the truth on science and injustice is both our responsibility and our most effective strategy.

“While science is important, stories make our movement powerful, and human. We all have our own compelling stories, and communicate with stories to gain mass media coverage, to multiply our movement, and to reaffirm our common humanity. Whether it’s a lone brave protester in Iraq, or a community coming together to ship bikes to the tiny island of Nauru, shared stories inspire our movement.”

While the signers admit they are not experts on environmental science, they declare that they know enough to speak the truth on science and injustice, which they treat as the most important consideration in the debate. We guess this view flows from Mr. Gore’s declaration that “the science is settled.” But the key issue is the second point: that stories are more important than science. We would suggest that this is what has prompted the avalanche of disaster forecasts, because it is easier to make up a story tied to a point of climate science and let it mushroom into a cataclysmic outcome for mankind. Think melting ice caps flooding the world, or famines dominating our future because of droughts created by hotter temperatures.

Based on these key points from the 350.org manifesto, we are not surprised that the climate change movement has taken on religious overtones. The science (facts) becomes less important while storytelling becomes critical. Fear is a prime emotion. Once people are on board emotionally and morally with the climate change movement, tactics become important. For climate change activists, the question shifts to how best to attack the core of the fossil fuel industry. The two tactics activists have embraced are cutting off the flow of capital to fossil fuel companies and restricting their operations. On the first point, activists are working to convince investors that the asset value of their fossil fuel investments is overstated because the world will not allow more of the companies’ reserves to be burned, stranding them as uneconomic assets with zero value. The other tactic is to fight industry expansion through tighter regulation and by challenging company projects through litigation. Delaying projects raises their costs and undermines their economic value. Company operating costs will also increase as litigation and further regulation are pushed on the companies. At the same time, company balance sheets are attacked through efforts to undercut the asset value of their fossil fuel resources. Climate activists believe this pincer movement will force companies to embrace the environmental movement.

Exhibit 11. The Environmental Fight Over Use Of Oil Resources

Source: Oil Change International

The “bible” providing justification for the attack on fossil fuel company assets was the International Energy Agency’s World Energy Outlook 2012 report. It concluded that “No more than one-third of proven reserves of fossil fuels can be consumed prior to 2050 if the world is to achieve the 2 degrees C goal…” The 2 degrees C goal comes from the series of reports by the UN’s Intergovernmental Panel on Climate Change warning that if the world warms by more than 2 degrees C above pre-industrial levels by the end of this century, the planet will experience catastrophic weather conditions that could possibly destroy the world as we know it. Two points emerge from this conclusion. One is that leaving two-thirds of the earth’s fossil fuel reserves in the ground would revolutionize global energy practices. Between now and 2050, the leading nations of the world, including the U.S., China and Western European countries, would have to decarbonize their economies almost entirely. By 2050 these countries would need to power their economies, run their transportation systems, heat and cool their buildings and grow their food not mainly with oil, gas or coal but rather with solar, wind and other clean fuels. That shift would force the fossil fuel industry to give up an estimated $28 trillion in sales over just the next 20 years, which would surely hurt the value of oil, gas and coal companies.

The other point that emerged was a ten-word statement by President Barack Obama. In an interview with New York Times columnist Tom Friedman last June, the President said, “We’re not going to be able to burn it all.” Interestingly, those words fly in the face of the Obama administration’s “all of the above” energy policy. Consistency, when policies involve environmental voters and attacks on unloved energy companies, has never been the Obama administration’s strong suit. It’s all about politics and the “us versus them” mentality. That view was confirmed when media stories focused on the environmental footprint of the climate march’s leaders, who were bemused to be questioned about their personal commitments to reducing their own carbon emissions. Give up cell phones, automobiles and private jets? How dare you ask such an asinine question when the big issue is stopping the growth of global carbon emissions. One environmental activist made the salient point that the South African divestment effort took 7-8 years to succeed. This energy divestment effort has existed for about a quarter of that time. This issue is not likely to go away, and it will probably prove more successful than many energy people expect. The energy world is changing, and will continue to change, likely never to return to the good old days.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.