- Natural Gas Shales A Game Changer, But LNG Exports?

- Crying For The Old Days; Will They Ever Come Back?

- Meteorologists At A Loss To Explain The Winter Weather

- Incandescent Bulb Ban Nears; Study Shows CFL Issues

- Cost Of Electric Vehicle Technology Doesn’t Pan Out

Musings From the Oil Patch

January 19, 2010

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Natural Gas Shales A Game Changer, But LNG Exports?

It is popular to proclaim that the energy world has changed – unconventional natural gas is now conventional – but has it really? In 2009, the Potential Gas Committee (PGC) at the Colorado School of Mines estimated that America’s technically recoverable natural gas resources totaled 1,836 trillion cubic feet (Tcf), with fully one-third accounted for by gas found in shale formations underlying many of the existing oil and gas producing basins. Because the industry had proven its ability to tap these trapped gas deposits, the PGC felt obligated to report them as additional resources. When coupled with the 238 Tcf of proved natural gas reserves determined by the Department of Energy/Energy Information Administration (DOE/EIA), the nation’s total natural gas resource potential swelled to 2,074 Tcf, an increase of 35%. Clearly this is a significant volume of natural gas, the largest in the 44-year history of the PGC. What caught the attention of the media, investment analysts and energy industry executives was the 542 Tcf increase in the PGC’s assessment of total U.S. natural gas resource potential in the two-year span between the 2006 and 2008 surveys, as the estimated total, including proved reserves, grew from 1,532 Tcf to 2,074 Tcf.
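The resource arithmetic above is easy to check. A minimal sketch in Python, using only the PGC and DOE/EIA figures quoted in this article (the variable names are ours, and the shale figure simply takes “one-third” literally):

```python
# Natural gas resource figures quoted above, in trillion cubic feet (Tcf).
pgc_technically_recoverable = 1_836  # PGC 2008 assessment, excluding proved reserves
eia_proved_reserves = 238            # DOE/EIA proved reserves
prior_total = 1_532                  # 2006 assessment total, including proved reserves

total_potential = pgc_technically_recoverable + eia_proved_reserves
increase = total_potential - prior_total
pct_increase = 100 * increase / prior_total
shale_share = pgc_technically_recoverable / 3  # "fully one-third" from shale, approximate

print(f"Total resource potential: {total_potential:,} Tcf")
print(f"Two-year increase: {increase} Tcf ({pct_increase:.0f}%)")
print(f"Approximate shale resource: {shale_share:,.0f} Tcf")
```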

Based on this new, huge resource potential, it became popular to merely divide the total resource base by the annual volume of natural gas consumed in this country and arrive at estimates of 100 years of supply. The prospect of a domestic energy supply source of this magnitude has lulled people into assuming that the nation’s foreign energy dependency is destined to be eliminated. As a nation, we only need to make some infrastructure investments and incentivize consumers to use more gas and America is home free on energy. Not!

Exhibit 1. Potential Gas Committee Gas Supply Estimates

Source: Potential Gas Committee, PPHB

The first big mistake many people make is failing to understand that estimated resource potential is a long way from proved, producing reserves, if the transition is ever completed. It is therefore imperative to understand the composition of the potential resource estimate, as it may suggest how likely these resources are to transition successfully from potential to actual supply. In Exhibit 2, we have broken down the PGC’s estimate of the nation’s gas resource potential into probable, possible and speculative reserves – the typical definition of oil and gas reserves provided by companies in their various regulatory filings. In the probable category we have included the DOE’s estimate of proved natural gas reserves. The approximately 700 Tcf of probable gas reserves, at the current annual consumption rate of 25 Tcf, suggests the U.S. has about 28 years of gas supply, but only 10 years of that supply life is accounted for by existing proved reserves, which is about the ratio of proved reserves to production that has existed for many years.
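The years-of-supply figures are simple division, and applying the same arithmetic to the whole resource base shows where the headline numbers come from. A minimal sketch, assuming a flat 25 Tcf a year of consumption as in the text; the dictionary layout and the `years_of_supply` function name are illustrative only:

```python
# Naive "years of supply": volume divided by a flat annual consumption rate.
ANNUAL_CONSUMPTION_TCF = 25  # consumption rate used in the text

resource_classes = {
    "Entire resource base": 2_074,     # all PGC resources plus proved reserves
    "Probable (incl. proved)": 700,    # approximate, from Exhibit 2
    "Proved reserves only": 238,       # DOE/EIA
}

def years_of_supply(volume_tcf: float, annual_use_tcf: float = ANNUAL_CONSUMPTION_TCF) -> float:
    """Reserve life assuming consumption never grows and every Tcf is produced."""
    return volume_tcf / annual_use_tcf

for label, volume in resource_classes.items():
    print(f"{label:<24} {volume:>6,} Tcf -> {years_of_supply(volume):5.1f} years")
```

At 25 Tcf a year, the entire resource base works out to roughly 83 years; stripping away the possible and speculative categories shrinks that to 28 years, and to about 10 years for proved reserves alone.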

Exhibit 2. Potential Gas Committee Reserve Classes

Source: Potential Gas Committee, PPHB

Based on the data, the nation has close to 800 Tcf of possible reserves, the ultimate amount being dependent on additional drilling, adequate prices and some technical success in order to move them into the probable reserves category in the future. It is difficult to estimate how successful this shift will be.

More importantly, fully 30% of the PGC’s estimate of the ultimate potential resource base falls in the category of speculative reserves, meaning these reserves need technological advances and likely much higher prices in order to move into the probable category, although they could migrate into the possible category more easily.

By confusing possible resources with proved reserves, the popular myth of the U.S. possessing 100 years of natural gas supply was born. Recent years of swelling gas production, driven by the technological success of horizontal drilling and hydraulic fracturing, convinced many that the U.S. was now the land of plenty. Because the U.S. economy was mired in one of the deepest recessions since the Great Depression, demand for natural gas fell just as production mushroomed, creating a huge surplus of gas supplies that at times forced producers to cap production, not only because low wellhead prices made it uneconomic to produce but also because storage space was sometimes limited. In winter, storage is usually less of a concern for the industry, but during the spring and fall shoulder months, when heating and air conditioning demand is low, limited storage capacity can put pressure on gas prices.

We worry that the nation’s gas supply myth may be creating a potentially serious future supply problem for the country. As the view that the U.S. has more natural gas than it can use has grown in popularity, it has become politically popular to promote the increased use of gas in applications where it has had limited success to date, such as the transportation fuels sector, or to argue that we should begin exporting larger volumes to capitalize on the price arbitrage advantage of U.S. gas. Converting a meaningful segment of the U.S. auto and truck fleets to burning gas directly, either as compressed natural gas (CNG) or liquefied natural gas (LNG), will require years and possibly decades, along with significant investment to retool engine manufacturing plants and to construct refueling facilities across the nation.

Prior to the emergence of shales as a major new source of natural gas supply, the nation was concerned about its ability to meet future demand. For many years we relied on sustaining our domestic production volumes while depending on growing gas imports from Canada to meet demand growth. As concern grew about Canada’s ability to keep increasing its gas exports, the energy industry’s focus shifted to importing LNG from other parts of the world. The U.S. has imported LNG since the 1970s and had constructed four import terminals along the East and Gulf Coasts. As long-term natural gas forecasts called for shrinking domestic production and likely limited growth in Canadian exports to the U.S., LNG became the anticipated source of gas supply that would balance our market. As the energy industry embraced LNG, proposals to build dozens of LNG import terminals were launched, and a few new terminals were actually constructed.

In the past two years, as the gas production surplus grew, the volume of LNG coming to America declined. It was all about natural gas prices. Oversupplied domestic gas markets depressed U.S. prices, while growing gas demand in Europe and Asia supported higher prices there and siphoned away LNG volumes initially targeted for the U.S. All the domestic LNG import terminals saw their volumes fall to minimal contractual levels. For the new terminals, this was a serious problem: they either had yet to fully establish commercial relationships with gas buyers who might contract long-term LNG volumes through the terminals, or their business model depended on the spot LNG cargo market.

At the present time, two LNG import terminals have submitted applications to the Federal Energy Regulatory Commission (FERC) for permission to add export facilities and to export LNG. The terminals are located in Freeport, Texas, and Sabine Pass, Louisiana. Once modified, they will have the combined capacity to export 3.4 billion cubic feet (Bcf) of gas a day, or about 5% of total U.S. gas production today. The owners of the terminals have petitioned for 20-year export licenses. Their rationale is that U.S. gas prices will remain low, i.e., below $5 per Mcf, until at least 2023, as forecast by the EIA, and thus below the gas prices prevailing in other geographic markets such as Europe and Asia. The belief is that U.S. gas supplies will be more price competitive than other gas supplies in these major markets and therefore can gain market share.
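Running at full capacity for the full license term, the two terminals would ship a meaningful slice of the nation’s gas. A rough sketch, assuming both plants run at the stated 3.4 Bcf a day every day of the year (an upper bound, not a forecast); the comparison with proved reserves is our own yardstick:

```python
# Export arithmetic for the Freeport and Sabine Pass applications described above.
export_capacity_bcf_d = 3.4   # combined capacity once modified, Bcf per day
share_of_production = 0.05    # "about 5% of total gas production today"
license_years = 20            # requested export license term
proved_reserves_tcf = 238     # DOE/EIA proved reserves, cited earlier

implied_production_bcf_d = export_capacity_bcf_d / share_of_production
annual_exports_tcf = export_capacity_bcf_d * 365 / 1_000   # 1 Tcf = 1,000 Bcf
license_total_tcf = annual_exports_tcf * license_years

print(f"Implied U.S. production: {implied_production_bcf_d:.0f} Bcf/d")
print(f"Exports at full capacity: {annual_exports_tcf:.2f} Tcf/yr")
print(f"Over a {license_years}-year license: {license_total_tcf:.1f} Tcf "
      f"({license_total_tcf / proved_reserves_tcf:.0%} of today's proved reserves)")
```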

Exhibit 3. EIA Forecast Of Natural Gas Market

Source: EIA, PPHB

In its recent Annual Energy Outlook 2011 (AEO2011), even after the agency made a big splash with a report showing huge growth in its assessment of the nation’s natural gas resources as a result of the success of gas shales, the EIA’s estimates of future domestic gas production plus gas imports from Canada exactly match its forecast for gas consumption and nominal gas exports. The U.S. exports a small volume of natural gas, both through pipelines across the U.S.-Mexico border and as LNG from a plant in Alaska to Japan. It appears that the AEO2011 long-term natural gas market forecast has not accounted for either a potentially significant increase in LNG exports or meaningful domestic production growth predicated on growing gas shale volumes.

Exhibit 4. AEO2011 Forecast Misses LNG Export Potential

Source: EIA, PPHB

If natural gas wellhead prices remain below $5 per Mcf until 2023, some dozen years from now, it is likely that a substantial amount of gas shale production will not be developed because it will be uneconomic. If, as some forecasts predict, production from gas shale wells declines sharply as wells age, then any slowdown in gas shale drilling, which sub-economic prices suggest would likely happen, should result in supply shortfalls and sharply escalating prices. That is a recipe for price instability, albeit at prices higher than today’s, and it is not an attractive backdrop for developing large, sustained new sources of natural gas demand.
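The mechanism behind that warning, steep per-well declines amplifying any drilling slowdown, can be illustrated with a toy model. A minimal sketch in Python; the 40% annual decline rate, the per-well output and both drilling schedules are hypothetical values chosen for illustration, not data from this article or from any shale play:

```python
# Toy model: aggregate output from annual cohorts of wells with exponential decline.
# All parameters are HYPOTHETICAL and chosen only to illustrate the mechanism.
FIRST_YEAR_OUTPUT = 1.0   # output per well in its first year (arbitrary units)
ANNUAL_DECLINE = 0.40     # assumed 40% per-year decline for every well

def field_output(wells_per_year: list[int]) -> list[float]:
    """Total output in each year from all wells drilled up to that year."""
    totals = []
    for year in range(len(wells_per_year)):
        total = 0.0
        for cohort in range(year + 1):
            age = year - cohort
            total += wells_per_year[cohort] * FIRST_YEAR_OUTPUT * (1 - ANNUAL_DECLINE) ** age
        totals.append(total)
    return totals

steady = [100] * 10              # drilling held constant for ten years
slowdown = [100] * 5 + [50] * 5  # drilling halved from year six, e.g., on low prices

for year, (a, b) in enumerate(zip(field_output(steady), field_output(slowdown)), start=1):
    print(f"Year {year:2d}: steady {a:6.1f}   slowdown {b:6.1f}")
```

With constant drilling, output levels off as new wells offset the decline of older ones; halve the drilling and output falls the very next year, because the older wells keep declining while fewer new wells replace them.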

Two other ingredients in the domestic natural gas story may be the recent application by Apache Corp. (APA-NYSE) for permission to build an LNG export terminal at Kitimat, British Columbia, and a new joint venture formed by Sasol Ltd. (SSL-NYSE) and Talisman Energy (TLM-NYSE) to develop a gas-to-liquids plant in Canada.

These two projects plan to utilize recently discovered natural gas from shale and tight sand basins in Western Canada to serve export markets in Asia. While the projects will take several years to be developed, eventually they will siphon off potential gas volumes that might otherwise have been exported to the United States.

If the U.S. energy industry and its gas market forecasters are wrong about the success of the shale phenomenon, and the U.S. government allows long-term LNG export contracts to be established, the potential absence of new Canadian gas supplies might leave the U.S. short of gas once again. And while this scenario addresses only the supply side of the equation, what happens if the U.S. also makes a serious commitment to converting a significant portion of our transportation fleet to CNG- and LNG-powered vehicles? Might America be forced to retool its economy and energy business once again when the gas supply shortage develops, at a huge economic cost due to poorly vetted investments? Don’t rule out the potential for the myth of “100 years of gas supply” to lead this country into another energy disaster, as energy market regulators have done numerous times in the past.

Crying For The Old Days; Will They Ever Come Back?

A recent blog post by John Teahen, senior editor of Automotive News, decried how bad 2010 was for the auto industry. He notes that everyone focuses on the 11% increase in 2010 U.S. auto sales, which rose to 11.6 million vehicles. But as he puts it, “2010 was not a good year for the auto industry. It’s as simple as that.”

Mr. Teahen says that “comparing 2010 and 2009 is like saying that 10 lashes with a horsewhip are better than 15 – the blood flows just as freely, and the scars are just as deep.” He prefers to compare industry auto sales against 2007 because that was the industry’s last “normal” year, the last of nine consecutive years in which auto sales exceeded 16 million cars. For Mr. Teahen, this shows how far the industry must travel to recover, and in his view it didn’t travel very far down that road in 2010. The 11.6 million cars sold in 2010 were down 28% from the 16.2 million cars sold in 2007.
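Mr. Teahen’s comparison reduces to a few ratios. A minimal sketch using only the sales figures quoted above; the 2009 figure is backed out from the 11% gain rather than taken from any sales report:

```python
# U.S. auto sales figures quoted above, in millions of vehicles.
sales_2007 = 16.2   # last "normal" year, per Mr. Teahen
sales_2010 = 11.6
gain_2010 = 0.11    # 11% increase over 2009

decline_from_2007 = 1 - sales_2010 / sales_2007
implied_2009 = sales_2010 / (1 + gain_2010)
gain_needed = sales_2007 / sales_2010 - 1

print(f"2010 vs. 2007: down {decline_from_2007:.0%}")
print(f"Implied 2009 sales: {implied_2009:.1f} million")
print(f"Gain needed to return to 2007 levels: {gain_needed:.0%}")
```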

We also noticed that one of the leading auto industry consulting firms just raised its forecast of auto sales this year by 200,000 cars to 12 million. If that is all the cars the industry sells this year, Mr. Teahen, we are sure, will be back a year from now decrying the further lack of industry recovery in 2011. So just what is normal for the auto industry? And will it have to return to “normalcy” for the industry to see any impact on domestic energy consumption?

We have contended for a long time that until there is a sustained recovery in the auto and housing markets, it is hard to expect a significant recovery in U.S. energy demand. Both of those industries require significant amounts of energy to create their products. Moreover, when consumers are buying cars and homes, they are also employed and traveling, activities that also use energy.

In order to try to answer the question of just what is normal for the auto and housing industries, we plotted the long-term trends in auto sales and new housing starts against the first purchase price for crude oil in the United States. It is interesting to note that auto sales exceeded 15 million units only a few times prior to the mid-1990s: in 1978, following the 1973 recession, and again in the mid-1980s, after the horrendous recession of 1981. Following the 1991 recession, auto sales again crossed the 15 million line for a few years before ramping higher, to 16 million, at the end of the 1990s and into the 2000s. Yes, those years of 16 million annual car sales represented a bonanza, but maybe that period was the aberration and not the norm.

Exhibit 5. Housing Starts And Auto Sales Vs. Oil Prices

Source: EIA, St. Louis Fed, Wards.com

To attempt to answer that question, we looked at new housing starts, since economists have long observed a strong correlation between auto sales and home purchases. If we use 2 million annual new housing starts as the housing equivalent of the auto sales booms, we see a very similar pattern: starts rebound to peaks following recessions. This is to be expected, as government actions during recessions are designed to boost the housing sector in particular, because it has a huge leveraging impact on national economic activity and job creation.

When we look at the chart in Exhibit 5, we find that new housing starts tend to lead or coincide with the peak in auto sales. That was true throughout the 1970s and 1980s. In the 2000s that pattern seemed to change somewhat, as auto sales peaked before new housing starts crossed the 2 million threshold. Following the auto sales peak, the industry’s annual sales volume was either flat or declined slightly at a time when new housing starts soared. If we characterize the auto sales trend as essentially flat, then what we really saw was how housing and auto sales benefited from easy credit, the same easy credit whose disappearance has knee-capped the housing industry and is keeping it down currently.

Economists have estimated that it will be years before the housing market returns to anything like normal, however that is defined. Given the close historical relationship between auto sales and the health of the housing market, it is likely to be years before auto manufacturers sell new cars at the rate they did in the 2000s. Moreover, demographics in the United States are working against the auto industry. Americans born between 1945 and 1963 helped drive new car sales from 10 million units annually in the 1960s to over 17 million in 1998 to 2006. The problem is that this generation is aging and less likely to buy many new cars, while the number of young drivers in the 16 to 29-year age range is growing at the slowest pace since the mid-1990s. The U.S. also already has more cars than licensed drivers. Given the nation’s demographics, the fleet should shrink unless owners can afford to keep multiple vehicles they drive only occasionally.

Once we get beyond the rebound from the recession, an additional challenge for high auto sales volumes is that new cars last longer and perform better, so owners have less need to replace them. According to a report last year, historically 0.6% of U.S. and 0.7% of Canadian cars on the road are replaced each year. How will this ratio change given the better performance of new cars and the population’s increasing shift into metropolitan areas, where car ownership is falling as other transportation options, such as public transportation and hourly car rental services, grow?

We suggest Mr. Teahen remove his rose-colored glasses and face the reality that annual sales of 11 to 13 million cars are likely to be the new “normal” for the auto industry. That realization might make company CEOs focus on cost controls in order to deliver a solid product the American public will want to buy and can afford to buy. Oh, we forgot: our current government seems determined, through its labor, environmental and industrial policies, to prevent that from happening.

Meteorologists At A Loss To Explain The Winter Weather

Another huge snowstorm moved through the mid-Atlantic and Northeast states last week, and forecasters are calling for more snow this week and possibly next. In New York City last Thursday, 19 inches of snow landed in Central Park. That snowfall brought the city’s January snowfall total to 36 inches, well above the previous January record of 27.4 inches, set in 1925. The snowy winter has people upset because recent winters have not been so bad, although last year, when a blizzard descended on Washington, D.C., President Obama was moved to describe the storm as a “snowmageddon.” He was quick to point out that his daughters didn’t quite understand why school was cancelled, since in Chicago this was a normal winter experience.

This winter has brought 51.5 inches of snow to Boston, easily topping the city’s full-winter average of 42.3 inches. Boston’s record seasonal snowfall came in the 1995-1996 winter, when the city was buried under 107 inches. New York has received 56 inches of snow so far this winter; its record was also set in 1995-1996, when 75 inches fell.

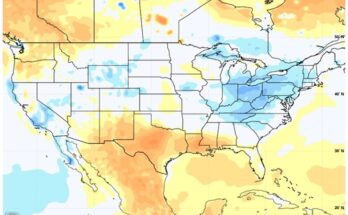

Besides the dramatic snowfall records this winter, average temperatures also have been colder than normal. Importantly, the winter weather has impacted much more of the United States than normal. On January 11th, every state except Florida had snow on the ground, including Hawaii where there were seven inches atop the dormant volcano Mauna Kea on the island of Hawaii. Slightly over 70% of the nation’s areal extent was snow covered that January day as shown in Exhibit 6.

Exhibit 6. January 11, 2011, Shows Snow In 49 States

Source: NOAA

Recent media articles have focused on the altered weather pattern that has been impacting Northern Europe and North America, along with the Arctic, for the past two winters. As we have written before, the polar vortex, a weather pattern that influences the location and movement of the jet stream, seems to have shifted, bringing the cold weather. When there is a strong pressure difference between the polar region and the middle latitudes, the jet stream, a west-to-east wind pattern that normally runs across Canada, tightens into a circle around the North Pole and contains the frigid winter air at the top of the world. When that pressure differential shrinks, the jet stream weakens and drifts southward, bringing cold air into the middle latitudes (the United States and Europe) and allowing warm air to move into the polar region. This pattern shift has happened intermittently over many decades; however, it is unusual for the vortex to weaken as much as it has in recent winters. Last year, one index had the vortex hitting its lowest winter-time value since record-keeping began in 1865. It was nearly that low last December.

Throughout the decade ending in the mid-1990s, the polar vortex was strengthening, resulting in much warmer winters on average in Northern Europe and North America. This pattern led some climate change supporters to claim that global warming was responsible for the near end of traditional winter weather. Now that the pattern has reversed, and in a big way, climate change supporters are claiming that global warming is shrinking the Arctic sea ice cover, which contributes to the weakening of the pressure differential and the shift in the jet stream. Smarter climate change supporters are beginning to say it is too early to tell whether these weather changes are related to global warming, and certainly a two-year timeframe is insufficient for overturning previous climate change theories dealing with the polar vortex.

AccuWeather.com’s severe weather forecaster, Joe Bastardi, recently produced a video in which he offered an explanation for why his winter weather forecast missed the record snow and cold. In our judgment, he is both an entertaining and a smart weather forecaster. He firmly believes that natural forces help determine the weather and that seeking analog patterns in history is a way to understand the development and path of weather and storms.

According to Mr. Bastardi, the changed weather pattern has a lot to do with La Niña, a pattern in the tropical Pacific Ocean associated with the cooling of surface waters that can alter the Northern Hemisphere’s wind patterns, bringing cooler and wetter weather to the U.S. and the Atlantic Basin. Mr. Bastardi has found that when there are back-to-back La Niña years, temperatures across the United States get progressively cooler. If the La Niña period is then followed by an El Niño, winters get even colder. He bases his conclusions on analog years with back-to-back La Niñas, such as 1949-50, 1954-55, 1973-74, 1998-99 and 2007-08. In his view, “something is going on that is bigger than we understand.”

The original AccuWeather.com winter weather forecast for 2010-2011 called for winter to be focused primarily in the Midwest and Northeast, with its severity contested in the southern portions of those regions. The southern tier of the U.S. was not expected to experience much of a winter, and Florida was expected to be warm.

Exhibit 7. Original AccuWeather Winter Forecast

Source: AccuWeather.com

Unfortunately, Mr. Bastardi’s original forecast has proven to be way off base. The entire eastern half of the country, from the Plains states to the East Coast and south to the Gulf Coast, has been hit by many ice and snow storms that have disrupted, and at times paralyzed, parts of the country, including the holiday travel plans of many Americans. The cold weather was so bad in December that it damaged the Florida citrus crop, and the resulting loss of fruit drove up orange juice futures and grocery store prices.

Exhibit 8. Latest AccuWeather Winter Forecast

Source: AccuWeather.com

Atlanta, Georgia, has had 5.9 inches of snow so far this winter with two months still to go. That compares with the city’s record winter snowfall of 10.3 inches in 1982-1983. Both Georgia and Florida recorded their lowest average December temperatures on record last month. So far in January, 155 daily snowfall records have been broken. Most of the records were in the Northeast but they stretch south to Louisville, Kentucky, and Wilmington, North Carolina.

The latest data for the Pacific Ocean show sea surface temperatures averaging 0.2°C cooler than the long-term average. Mr. Bastardi believes that because the Pacific Ocean is such a large body of water, when it cools it absorbs much of the planet’s heat. This can lead to a cooler planet for an extended period of time, as it will take a reversal of the weather cycle to warm the Pacific Ocean.

Exhibit 9. PDO Cooling And Sucking In Planet Heat

Source: AccuWeather.com

Mr. Bastardi has also become quite interested in sun spot activity. The current sun spot cycle (Cycle 24) is producing as few spots as were last seen in the late 1700s and early 1800s. Importantly, sun spot forecasts have been reduced in recent years. The absence of sun spots appears to correlate with colder temperatures on the planet, as best demonstrated by the chart in Exhibit 10. As the data show, the Maunder Minimum, the period from 1645 to 1710 when there was an absence of sun spots, just happened to coincide with the extremely cold winters experienced in Northern Europe in pre-industrial times, a period referred to as the “Little Ice Age.”

Exhibit 10. Little Ice Age Coincides With Lack Of Sun Spots

Source: Robert A. Rohde, Global Warming Art, Wikimedia Commons

What Mr. Bastardi shows in his video is how the sun spot forecast made by National Aeronautics and Space Administration (NASA) scientists has changed over the past few years. We were unable to find both charts from his video, but we did uncover a similar chart showing the earlier sun spot forecast. That chart is contained in Exhibit 11. It shows that NASA scientists expected the peak sun spot count for Cycle 24 to fall between 90 on the low side and 140 on the high end.

Exhibit 11. Original Forecast Anticipated Normal Activity

Source: NASA

Sun spot cycles have varied considerably in length, ranging from as short as nine years to as long as 14 years, with an average of about 11 years. Exhibit 12 shows two pictures of the sun: the one on the left, showing the Solar Maximum, has many sun spots, while the one on the right, showing the Solar Minimum, has none. Today’s sun looks more like the Solar Minimum picture.

Exhibit 12. Sun With And Without Spots

Source: Windows2universe.org

The original sun spot forecast for Cycle 24 was issued in 2006; the recently revised forecast is shown in Exhibit 13. It now calls for the peak count to fall somewhere between about 35 and 85, with a central value of 59, which is well below the low end of the prior forecast range of 90. In the video, while Mr. Bastardi discusses his belief that the world is moving into a period of global cooling, he says he doesn’t believe in the sun spot theory completely because he doesn’t know enough about it and how it might be interacting with the other factors driving climate change. He doesn’t rule it out, either.
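To put the revision in perspective, here is a short calculation using the forecast values cited above. Comparing against the midpoint of the original range is our own choice of yardstick, not NASA’s:

```python
# Cycle 24 peak sun spot forecasts described above.
prior_low, prior_high = 90, 140   # 2006 forecast range for the peak count
revised_central = 59              # central value of the revised forecast

below_prior_low = 1 - revised_central / prior_low
below_prior_mid = 1 - revised_central / ((prior_low + prior_high) / 2)

print(f"Revised central value is {below_prior_low:.0%} below the prior low end")
print(f"and {below_prior_mid:.0%} below the midpoint of the prior range")
```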

Exhibit 13. Latest Sun Spot Forecast Is Much Lower

Source: NASA

Mr. Bastardi also mentions that in the early 1990s, at the time of Glasnost, several scientific papers were written by Russian astrophysicists arguing that the upcoming sun spot cycle (Cycle 24) might see the count fall close to zero. These scientists therefore believed that the planet was heading into another “little ice age.” Mr. Bastardi disavows that forecast but acknowledges that there is “something going on that we haven’t seen before” and that it is happening “on the cold side, and not the warm side.” He points to the Pacific Decadal Oscillation (PDO), which is producing the cooling Pacific Ocean waters, and to the La Niña weather pattern. There is also the dramatic drop in sun spot activity. He believes these natural forces are just as important to determining the future of the planet’s climate as carbon dioxide emissions and other Anthropogenic Global Warming (AGW) factors. As Mr. Bastardi puts it in summing up the importance of the four weather drivers he identified, “one of these Emperors has no clothes,” and we will find out in the near future which one it is. It will be interesting to see how much money is allocated in the Obama administration’s next budget proposal for research on global cooling.

Incandescent Bulb Ban Nears; Study Shows CFL Issues

The countdown to the ban on selling 100-watt incandescent light bulbs is now less than 11 months away, unless you live in California, where a similar state ban commenced on January 1st. The Energy Independence and Security Act of 2007, signed by President George W. Bush on December 18, 2007, mandated that all light bulbs use 30% less energy than incandescent bulbs by 2012-2014. Under that legislation, between January 1, 2012, and January 1, 2014, all conventional incandescent light bulbs rated between 40 watts and 100 watts will be banned, to be replaced by compact fluorescent lamp (CFL) bulbs. The ban does not mean individuals can no longer use incandescent bulbs, only that retailers cannot sell them. We suspect that as the ban date draws closer, we will see people sweeping incandescent light bulbs from store shelves to stockpile them at home, since most consumers are less than satisfied with the mandated CFL bulbs.

The CFL bulb radiates a different light spectrum than does the incandescent bulb. A major complaint from users of CFLs has been that the light quality is too harsh. In recent years, new phosphor formulations used to coat the inside of the bulb have noticeably improved the light quality of CFLs, but these “soft white” bulbs tend to cost more. As a result, people who buy light bulbs based on price often wind up with CFLs with poor light quality.

The history of the CFL goes back to an invention by Peter Cooper Hewitt in the 1890s. These vapor lights were used initially in photographic studios and in various other industries, but had limited applicability. George Inman teamed with General Electric (GE-NYSE) to develop a practical fluorescent lamp that was first sold in 1938 and received a patent in 1941. The first integrated fluorescent light bulb and fixture was introduced to the American public at the GE exhibit at the 1939 New York World’s Fair. The widely used spiral-tube CFL was invented in 1976 by Edward Hammer, a GE engineer, but the company shelved it. The bulb’s design was later copied in China, and in 1995 the first commercially available spiral CFLs started to arrive from there. In 1980, the other leading global light bulb manufacturer, Dutch electronics company Philips, introduced its model SL, a screw-in lamp with an integral ballast.

The Environmental Protection Agency’s (EPA) Energy Star program, which promotes environmentally friendly consumer appliances, determined that if each U.S. home replaced just one incandescent light bulb with a CFL bulb, the electricity saved could light three million homes and save $600 million in energy costs. It would also prevent nine billion pounds of carbon emissions, the equivalent of removing 800,000 cars from American roads for a year. A Department of Energy study showed that a single 24-watt CFL bulb’s lifetime energy savings add up to the gasoline equivalent of a coast-to-coast trip by a Toyota (TM-NYSE) Prius.

Exhibit 14. CFLs Use Less Energy For Light Output

Source: Energy Star web site

Exhibit 14 shows the dramatic energy savings that CFLs can deliver compared with incandescent light bulbs. Until CFL manufacturers improved the light quality of their bulbs, however, most people would have said the two types did not produce truly equivalent light.

Interestingly, the 2007 energy bill exempts many incandescent light bulbs from the ban, including specialty bulbs such as those inside refrigerators, all reflector bulbs, 3-way bulbs, candelabras, globe lights, shatter-resistant bulbs, bulbs for vibration or rough service, all colored bulbs, bug lights and even plant lights. The list of exemptions is quite long, and we have no idea how much power these bulbs consume.

The Energy Department study also pointed out that CFL bulbs should be used only in locations where lights are on for at least 15 minutes in order to maximize their benefit. Studies and experience have shown that CFLs are not appropriate for many applications because the bulbs are delicate and don’t tolerate abuse. For example, CFLs do not like short-cycle duty, so they should not be used in closets and bathrooms. (Many users say CFLs should be left on for at least 30 minutes to warm up completely, not the 15 minutes suggested by the government study.) CFLs are also not appropriate for damp or enclosed areas. They are quite sensitive to voltage spikes, and they need to be installed base down in fixtures; installed base up, they tend to overheat and fail prematurely.

As the nation stands on the cusp of its first ban on the sale of incandescent light bulbs, studies by researchers hired by utility regulators in California, the state that has done the most to push the use of CFL bulbs, show that measuring the energy savings is trickier than regulators thought. Moreover, the savings the researchers measured fall woefully short of the estimates made by the state’s utilities. A significant conclusion is that CFL bulbs do not last as long as their manufacturers or regulators claim, which hurts consumer attitudes toward the bulbs and their use.

As reported in a Wall Street Journal story, California utility PG&E Corp. set up a CFL bulb program in 2006 and thought that customers would buy 53 million CFLs by 2008. The company set aside $92 million for rebates. The researchers found that fewer bulbs were purchased, fewer were screwed in and they saved less money than the company anticipated. PG&E estimated the energy savings of its bulb program would be 1.7 billion kilowatt hours for 2006-2008, but the researchers found that the savings were only 451.6 million kilowatt hours, 73% less.

One conclusion of the PG&E study was that its assumption of a 9.4-year useful life for CFL bulbs proved wrong; experience showed it to be 6.3 years. According to the researchers, the shorter life stems from premature burnout when bulbs are used in bathrooms (damp), recessed lights (enclosed and inverted) and other short-duty applications. Our personal experience confirms these challenges, although lately the quality of CFL bulbs seems to be improving, along with a greater choice of bulbs for various applications. We have found CFL bulbs designed for recessed lighting and for use with dimmer switches. We have also resorted to using smaller CFL bulbs in recessed fixtures to allow more air to circulate around the bulb.
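The researchers’ “73% less” figure and the revised bulb life can both be checked with the same percentage-shortfall arithmetic. The short Python sketch below is our own illustration, not the researchers’ method; the helper name `percent_shortfall` is hypothetical, and the inputs are simply the PG&E figures quoted above.

```python
def percent_shortfall(expected: float, actual: float) -> float:
    """Return how far `actual` falls below `expected`, as a percentage of `expected`."""
    return (expected - actual) / expected * 100.0

# PG&E's projected 2006-2008 savings vs. what the researchers found, in kilowatt-hours
projected_kwh = 1.7e9
measured_kwh = 451.6e6

# PG&E's assumed useful life of a CFL vs. the life observed in practice, in years
assumed_life_years = 9.4
observed_life_years = 6.3

savings_gap = percent_shortfall(projected_kwh, measured_kwh)
life_gap = percent_shortfall(assumed_life_years, observed_life_years)

print(f"Energy savings shortfall: {savings_gap:.1f}%")
print(f"Useful-life shortfall: {life_gap:.1f}%")
```

Run as written, it reports a savings shortfall of about 73.4% and an observed bulb life roughly 33% below PG&E’s assumption.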

While CFL bulbs offer significant energy savings, their higher cost and shorter-than-promised lifespan can often negate much of those savings. Further improvements in incandescent bulbs, light emitting diode (LED) lights and other new designs offer hope that better light bulbs may be on the way.

Exhibit 15. Light Output Vs. Energy Input Of Bulbs

Source: Wikipedia

A potential problem with CFL bulbs is the mercury they contain, which contributes to their improved energy-to-light performance. The mercury means CFL bulbs must be disposed of more carefully than by simply throwing them in the trash; they should be taken to waste disposal centers equipped to handle mercury. According to the EPA web site, a typical CFL bulb contains four milligrams of mercury. As one consumer pointed out on a web site, 45 light bulbs in each of 120 million homes equals 5.4 billion light bulbs in the country. If all of them were CFLs and were not disposed of properly, that would spell a potential environmental disaster.
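Carrying the consumer’s arithmetic one step further with the EPA’s four-milligram figure gives a sense of scale. This minimal Python sketch assumes, as the hypothetical does, that every one of those bulbs is a CFL containing exactly the EPA’s typical amount of mercury; the result is the total mercury contained in that stock of bulbs, not an estimate of how much would actually be released.

```python
BULBS_PER_HOME = 45           # figure used by the web site commenter
HOMES = 120_000_000           # figure used by the web site commenter
MERCURY_MG_PER_BULB = 4       # EPA figure for a typical CFL, in milligrams
MG_PER_METRIC_TON = 1e9       # 1 metric ton = 1,000 kg = 1,000,000,000 mg

# Total bulbs if every socket in every home held one
total_bulbs = BULBS_PER_HOME * HOMES

# Mercury contained in all of them if every bulb were a CFL
total_mercury_mg = total_bulbs * MERCURY_MG_PER_BULB
total_mercury_tons = total_mercury_mg / MG_PER_METRIC_TON

print(f"{total_bulbs:,} bulbs")
print(f"{total_mercury_tons:.1f} metric tons of mercury")
```

It prints 5,400,000,000 bulbs and about 21.6 metric tons of mercury.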

Another problem with CFL bulbs stems from their greater efficiency: because they convert more of their energy into light than incandescent bulbs do, they give off less heat. In cold-weather locales, homeowners may have to use more energy to heat their homes to make up for the heat they formerly got from incandescent bulbs. According to a 2009 study for BC Hydro, the new lighting regulations will increase annual greenhouse gas emissions in British Columbia by 45,000 tons as consumers use more energy to heat their homes after switching to the more energy-efficient, but cooler, CFL bulbs. A report by Canada’s Centre for Housing Technology concluded that the dollar savings from using CFLs depend on the climate in which a home is located. In Canada, in winter, “the reduction in lighting energy use was almost offset by the increase in space-heating energy use,” according to the study.

Anybody care to bet that the lighting rules favoring CFL bulbs will not be among the regulations targeted under President Obama’s recent mandate to review and eliminate rules impeding economic activity?

Cost Of Electric Vehicle Technology Doesn’t Pan Out

President Obama continues to tout his administration’s goal of having one million electric cars on the roads of America by 2015. The question is: What will it take in either subsidies or mandates to achieve this goal? Before we can answer that question, we need to address the issue of the cost/benefit of the various vehicle electrification technologies. John Petersen, a Swiss-based American lawyer who has served as a director of a battery manufacturer and is a partner in a law firm that represents North American, European and Asian energy and alternative energy companies, has done the heavy lifting in this analysis.

Spurred by a slide in the December investor presentation of Exide Technologies (XIDE-Nasdaq), a lead-acid battery supplier based in Milton, Georgia, with $2.7 billion in revenue and operations in 80 countries, Mr. Petersen decided to examine the economics of electric vehicle technologies. The slide, shown in Exhibit 16, presents the company’s assessment of the cost/benefit of implementing the various electric vehicle technologies, from start-stop through hybrid to full electric.

Exhibit 16. Electric Technology Shows Hybrid Advantage

Source: Exide Technologies

Mr. Petersen compared the various electrification technologies against a baseline case, which he assumed would be a new car with fuel economy of 30 miles per gallon and anticipated usage of 12,000 miles per year. This means the baseline vehicle consumes 400 gallons of gasoline a year. He then prepared a table (Exhibit 17) showing which features each vehicle electrification technology supported and its benefit in terms of annual gasoline savings, annual carbon dioxide emission abatement and implementation cost. Of the six vehicle technologies analyzed (stop-start, micro-hybrid, mild-hybrid, full-hybrid, plug-in hybrid and electric vehicle), only the last three had electric motor power. In the first three vehicle types, electrification technology is more of an afterthought or modest in contribution.
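The baseline figure is simply miles driven divided by fuel economy. A minimal Python sketch; the function name `annual_gallons` is our own illustration, not something taken from Mr. Petersen’s spreadsheet.

```python
def annual_gallons(miles_per_year: float, mpg: float) -> float:
    """Gallons of gasoline burned per year at a constant fuel economy."""
    return miles_per_year / mpg

# Mr. Petersen's baseline: a new 30-mpg car driven 12,000 miles a year
baseline_gallons = annual_gallons(miles_per_year=12_000, mpg=30)

print(f"Baseline consumption: {baseline_gallons:.0f} gallons/year")
```

It prints a baseline of 400 gallons a year, the yardstick against which each technology’s fuel savings are measured.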

Exhibit 17. Cost/Benefit Of Electrification Technologies

Source: SeekingAlpha.com

Compared with the baseline vehicle, the full electric vehicle would appear attractive since it saves the owner from having to purchase any gasoline. Only the full electric vehicle and the plug-in hybrid avoid gasoline completely or nearly completely. On the other hand, the stop-start and micro-hybrid vehicles, which barely use electrification technologies, save only 5% to 10% of the baseline vehicle’s annual fuel consumption. It is also important to look at the implementation cost of the electrification technologies used in each vehicle. The cost ranges from $500 for the simplest hybrid to $18,000 for the full electric vehicle. Using the data from this table, Mr. Petersen plotted the cost per gallon of annual fuel savings and the cost per kilogram of annual carbon dioxide abatement for each vehicle technology.
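The fuel metric Mr. Petersen plotted is straightforward: implementation cost divided by the gallons of gasoline avoided each year. The Python sketch below applies it to the two cases the text pins down. It assumes the full electric vehicle avoids all 400 baseline gallons and, as a labeled assumption of ours, that the $500 “simplest hybrid” is the stop-start car saving 5% to 10%. These are illustrative calculations, not Mr. Petersen’s exact per-vehicle results.

```python
BASELINE_GALLONS = 12_000 / 30  # 400 gallons/year for the 30-mpg, 12,000-mile baseline car

def cost_per_annual_gallon(implementation_cost: float, gallons_saved_per_year: float) -> float:
    """Up-front electrification cost divided by the gasoline it avoids each year."""
    return implementation_cost / gallons_saved_per_year

# Full electric vehicle: $18,000 of electrification avoids all baseline gasoline
ev_cost_per_gallon = cost_per_annual_gallon(18_000, BASELINE_GALLONS)

# Stop-start car saving 5% to 10% of baseline fuel.
# ASSUMPTION: it is the "simplest hybrid" at the $500 low end of the cost range.
stop_start_low_savings = 0.05 * BASELINE_GALLONS   # about 20 gallons/year
stop_start_high_savings = 0.10 * BASELINE_GALLONS  # about 40 gallons/year
stop_start_worst = cost_per_annual_gallon(500, stop_start_low_savings)
stop_start_best = cost_per_annual_gallon(500, stop_start_high_savings)

print(f"Full EV: ${ev_cost_per_gallon:.2f} per gallon of annual savings")
print(f"Stop-start: ${stop_start_best:.2f} to ${stop_start_worst:.2f} per gallon of annual savings")
```

Under those assumptions, the full electric vehicle costs $45 per gallon of annual savings, close to the $46 plug-in average Mr. Petersen reports, while the stop-start car comes in at $12.50 to $25.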

Exhibit 18. Plug-in Electric Vehicles Have No Cost Advantages

Source: SeekingAlpha.com

As demonstrated by the chart in Exhibit 18 and supported by the data, there is a huge cost advantage in favor of the four hybrid vehicles compared to the two plug-in vehicles. For the four hybrids, the average capital cost per gallon of annual fuel savings is $24 and the average capital cost per kilogram of annual CO2 abatement is $2.24. In the two plug-in vehicle categories, the average capital cost per gallon of annual fuel savings is $46 and the average capital cost per kilogram of annual CO2 abatement is $7.25.

As Mr. Petersen sums up the case for plug-in electric vehicles, “cars with plugs may feel good, but until somebody repeals the laws of economic gravity they will never be an attractive fuel savings or emissions abatement solution.” So if plug-in vehicles fail on pure economics, how will President Obama achieve his goal of getting one million of them on the roads in the next four years? The easiest way is to mandate that consumers buy them. As we have written before, by encouraging the EPA to boost the minimum fuel-efficiency rating for new car fleets to 35 miles per gallon in 2016 and possibly to 60 miles per gallon by 2020, the administration will leave buyers few choices other than electric vehicles, since larger, less fuel-efficient vehicles will be extremely limited or not available at all.

Another way to get electric vehicles on the road is to subsidize their purchase, which is the justification for the $7,500 tax credit for buyers. But the Obama administration appears to be considering modifying this subsidy to more closely resemble the Cash for Clunkers program of 2009. Turning the tax credit into a cash rebate would deliver an immediate reward to the buyer and might cost less in the end, because the rebate would go to the auto dealer, who could then use it as a credit against his taxes. The bureaucracy needed to handle these cash rewards is minimal since most, if not all, of the paperwork is performed by the auto dealer’s staff. Any administrative cost savings could translate into more cash rewards.

A Congressman from Michigan has already introduced a bill in the House of Representatives to raise the per-manufacturer cap on the $7,500 tax credit from 200,000 to 500,000 eligible vehicles. This means each manufacturer could sell two and a half times as many electric vehicles before the tax credit phases out. With the prospect of more electric vehicles being sold before the credit expires, auto manufacturers would have greater incentive to invest in battery technology and other improvements that reduce the cost and boost the performance of electric vehicles. Presumably that would make them more attractive to future buyers even without tax credits or subsidies.

The Obama administration plans to ask for over 30% more funding for electric vehicle R&D and for research to improve battery storage technology in its upcoming budget. The administration will also ask for additional money to subsidize state and local investment in electric vehicle charging infrastructure. Clearly, if there are only a few public charging stations, potential buyers will be reluctant to purchase electric vehicles except for limited applications or for ego reasons.

Given President Obama’s “Sputnik moment” speech last week, we fully expect to hear references to how the Volt and Leaf electric vehicles are similar to the Apollo space capsule that took man to the moon. Since the space program successfully progressed from the small, simple Apollo capsule to the large, technologically sophisticated shuttle vehicles, we can expect to be told that electric vehicles will make the same transition if the government invests enough money.

We are more inclined to ask whether the progression will instead resemble the early-1900s electric vehicle fleet, which peaked in 1908 at 28% of new car sales only to be discontinued in 1920 after being overwhelmed by Henry Ford’s low-priced, highly efficient Model T and the invention of the electric starter. Or maybe the progression will follow the pattern of GM’s EV1, which lasted barely five years before the company pulled the plug on its leases. Pushing uneconomic and impractical technologies that can survive only with huge government subsidies or mandates is a prescription for economic disaster. But politicians and regulators with agendas and no real-world experience seem bound to repeat all the mistakes of history before they learn their lessons. Unfortunately, we taxpayers have to pay for their education.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.