- Considering EIA’s Peak Oil Production Forecast

- Opportunities And Challenges In Utility Infrastructure

- From Tailwind To Headwind: Natural Gas Market Suffers

- Moral Decisions Confront Autonomous Vehicles

- How Much Do Your Eating Habits Hurt Our Climate?

Musings From the Oil Patch

February 20, 2018

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Considering EIA’s Peak Oil Production Forecast

The Energy Information Administration’s (EIA) Short Term Energy Outlook (STEO) for February was released earlier this month. The EIA made note of the fact that its estimate of crude oil output in January averaged 10.2 million barrels a day (mmb/d), an increase of 100,000 barrels a day (b/d) from December’s estimated production. While the January production estimate places the U.S. domestic oil industry squarely in new territory, the important point is that the EIA’s 2018 and 2019 outlooks show continued production growth.

For all of 2018, the EIA expects domestic oil production to average 10.6 mmb/d, surpassing the 1970 record output when the industry produced 9.6 mmb/d. Potentially more significant, and critical to thinking about the future of shale oil, and the role of the United States oil business in the world’s changing oil market, the EIA predicts domestic oil production will average 11.2 mmb/d in 2019.

A curious situation was that at almost the same time the February STEO was released, the EIA was also introducing its Annual Energy Outlook (AEO) that provides a base (reference) case forecast along with better and worse forecasts based on different sets of assumptions. All the forecasts are prepared based off October 2017 data and provide annual forecasts to 2050.

In the AEO reference case, oil production does not reach the 11.2 mmb/d estimate for 2019 until 2023-2024. The EIA does project domestic oil production continuing to grow, reaching 11.9 mmb/d in 2037 and then beginning to decline. We only mention this because there are likely to be news reports that will discuss the different forecasts, and possibly confuse them. The reality is that the AEO forecast is based on months-old data that misses the strong production growth of the past few months.

Much has been made about U.S. oil production exceeding its 1970 all-time peak. However, given the industry’s dynamics over the past few months, we wondered if the EIA is too conservative in its STEO forecast. Before exploring that question, we thought it might be interesting to look at oil production trends around the time of the past peak. We began our scrutiny by charting monthly oil production for 1969-1971, which, we felt, offered sufficient perspective around the peak output month of November 1970. In November 1970, the U.S. produced 10.044 mmb/d. This output was 31,000 b/d greater than the average production reported for October 1970. To put that peak output in perspective, we wanted to examine what had happened to production in the months immediately prior to and then subsequent to the record month.

Between August and September 1970, domestic oil output rose by 273,000 b/d. Monthly production continued to grow as an additional 160,000 b/d of output brought October’s total to 10.013 mmb/d, which then increased slightly to the record November volume. In the months after the peak, average production fell by 100,000 b/d in December 1970, and then declined by an additional 289,000 b/d in January 1971. Based on that data pattern, it would appear that a new oil field, or possibly several new oil fields, came into production during the initial portion of that six-month span. Due to the output from the new wells, average monthly oil production grew by 464,000 b/d between August and November 1970, a 4.9% increase. The actual volume of new production may have been much greater, sufficient to offset the natural decline in the output of producing wells besides growing overall production.

Exhibit 1. How Oil Production Tracked At Last Peak

Source: EIA, PPHB

On the other side of the oil production peak, average monthly output fell by 389,000 b/d, a 3.9% decline, in the following two months. When we examine the pattern of monthly oil output per day over the entire span of time, it becomes evident that production grew throughout 1969 and in early 1970, and then experienced a sharp increase toward the end of the year. Shortly after the November production peak, however, output stepped down to a level nearly half a million barrels a day lower. That level was followed by another drop of roughly 400,000 b/d. Without doing more research, we can only assume that the domestic oil industry was the beneficiary of “flush” production from newly completed wells on the way to the peak, most likely from new fields or offshore platforms, which overwhelmed the natural production decline rate. Following the November 1970 peak, that natural decline in turn overwhelmed the new production volumes.
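As a quick check on the arithmetic around the 1970 peak, the monthly changes quoted above can be chained together. This is a minimal sketch that uses only the figures in the text; the variable names are our own, and small differences from the quoted percentages may simply reflect rounding in the published monthly data.

```python
# Monthly changes in U.S. crude output around the November 1970 peak,
# in thousand barrels per day (kb/d), as quoted in the text.
PEAK_NOV_1970 = 10_044  # kb/d, the record month

# Change versus the prior month (positive = growth).
changes = {
    "Sep 1970": 273,
    "Oct 1970": 160,
    "Nov 1970": 31,
    "Dec 1970": -100,
    "Jan 1971": -289,
}

# Run-up from August to the November peak.
run_up = changes["Sep 1970"] + changes["Oct 1970"] + changes["Nov 1970"]
aug_level = PEAK_NOV_1970 - run_up  # implied August 1970 level

# Decline over the two months after the peak.
drop = -(changes["Dec 1970"] + changes["Jan 1971"])

run_up_pct = 100 * run_up / aug_level
drop_pct = 100 * drop / PEAK_NOV_1970

print(f"Implied August 1970 level: {aug_level:,} kb/d")
print(f"Run-up, Aug to Nov 1970:   {run_up} kb/d ({run_up_pct:.1f}%)")
print(f"Decline, Nov to Jan 1971:  {drop} kb/d ({drop_pct:.1f}%)")
```

The run-up sums to the 464,000 b/d cited above, and the two post-peak declines together amount to 389,000 b/d.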

Does the 1970 production experience offer any guidance for the EIA’s current view of future oil output? That is unlikely, a view based largely on the fact that the U.S. was self-sufficient in oil up until that peak. Crude oil prices were only barely reflecting the pressure of a nation running out of drilling locations due to low oil prices. Oil prices in the U.S. were on a positive slope – rising from $3.09 a barrel for 1969 to $3.39 in 1971. The bigger developing stories then were the acceleration in global inflation and the rising power of the Organization of the Petroleum Exporting Countries, an organization founded almost ten years earlier and designed to provide unified opposition to exploitation by the handful of major oil companies that dominated the global industry.

For the past several years, the EIA has been wrestling with understanding the shifting dynamics within the global oil market, largely caused by growing U.S. shale output. The revival of the U.S. domestic oil business was the product of technology that enabled the exploitation of shale formations that produced outcomes well beyond even optimistic expectations. Shale output growth has occurred despite low oil prices that experts predicted would contribute to the demise of the business. The fact that the industry did not implode surprised many analysts. The support came from the largess of capital providers who believed in either faulty analysis or extreme hope for a rapid recovery.

Just as oil company managers and oil market forecasters have been forced to readjust their thinking about how the industry could, and would, function in the post-2014 OPEC-induced market chaos, the EIA has been adjusting its thinking. Based on the EIA’s latest projection, one wonders whether their view about the future trails the industry’s dynamics. Possibly, their inability to accurately capture the interactions of some of the fundamental changes underway in the industry may be shaping their forecast and its timing.

Setting the stage for the EIA’s production forecast is its outlook for crude oil prices. Like many oil industry forecasters, the EIA has been behind the curve on the uplift in oil prices that began in mid-year 2017. That rise began as oil traders, consumers and producers realized that the rebalancing of global oil supply and demand, the primary goal of OPEC and its key non-OPEC supporter, Russia, was finally happening. That realization, supported by physical data, came more than six months after the OPEC/Russia production cut agreement was put in place – designed to remove about 2% of the world’s supply from the market. With accelerating global economic growth, driven largely by the earlier collapse in world oil prices, oil demand was climbing faster than expected, which led to forecasts of even faster demand growth. With less supply, the demand increase caused global oil inventories to shrink, forcing consumers to bid up prices to secure adequate supplies.

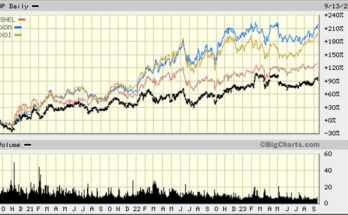

What we saw in the February STEO was the EIA’s attempt to deal with a sharply rising near-term oil price, while not abandoning the agency’s conservative long-term pricing view. We plotted the oil price forecast set forth in the January STEO against the forecast presented in the February report.

Exhibit 2. Adjusting To A Spike And Conservative Price Future

Source: EIA, PPHB

What the chart shows is a much higher near-term oil price (a spike), followed by a steady decline bringing the projected price back to the EIA’s unchanged $56 a barrel price target at the start of 2019. The EIA’s forecast then anticipates oil prices rising by $3 a barrel to $59/barrel by 2019’s fourth quarter.

The other takeaway from the chart is that rather than the near-term oil price sinking to $53.50 a barrel, as forecast in January, the EIA saw the price climb to nearly $64. We would note that only days after releasing its new forecast, the EIA saw the oil price fall below $60 a barrel as rising shale oil production, growing crude oil and petroleum product inventories, and a sharp jump in the weekly drilling rig count convinced oil speculators that the recent high oil prices were creating conditions to ensure lower prices. Understanding the relationship between all these forces helps to explain the EIA’s oil output forecast.

Exhibit 3. Is Oil Production Forecast Too Conservative?

Source: EIA, PPHB

In the February STEO, the EIA projects that total domestic crude oil production will reach 11.36 mmb/d in December 2019, up from the 10.2 mmb/d estimated for January 2018. If we look at the history of oil production and the EIA’s forecast, we find an interesting trend. Between November 2016 and November 2017, domestic oil output rose by 1.1 mmb/d. From last November to November 2018, the EIA sees oil production growing by 1.0 mmb/d, reaching an output level of 11.07 mmb/d. This growth comes in response to the sharply higher oil price ($63.30/barrel) averaged over January and the first half of February 2018. However, the oil price is forecast to fall every month afterwards until July, at which point it settles at $56 a barrel, a nearly $7/barrel decline. The price is projected to remain flat until mid-way through the second quarter of 2019, at which point it starts climbing until it hits $59/barrel as it enters the final three months of the year.
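To see how the pace of growth changes within the EIA’s forecast, the quoted production levels can be turned into average monthly gains. This is a rough sketch based only on the rounded figures cited above; the variable names are ours, and the implied November 2017 level is derived, not an EIA number.

```python
# Production figures quoted from the February 2018 STEO,
# in million barrels per day (mmb/d).
nov_2018 = 11.07            # forecast level for November 2018
dec_2019 = 11.36            # forecast level for December 2019
growth_to_nov_2018 = 1.0    # forecast gain, Nov 2017 to Nov 2018

# Level implied for November 2017 by the quoted gain.
implied_nov_2017 = nov_2018 - growth_to_nov_2018

# Gain forecast for the 13 months from Nov 2018 to Dec 2019.
growth_after_nov_2018 = dec_2019 - nov_2018

# Average monthly gains, converted to thousand b/d for readability.
monthly_pace_2018 = 1000 * growth_to_nov_2018 / 12
monthly_pace_2019 = 1000 * growth_after_nov_2018 / 13

print(f"Implied Nov 2017 level:        {implied_nov_2017:.2f} mmb/d")
print(f"Gain, Nov 2018 to Dec 2019:    {growth_after_nov_2018:.2f} mmb/d")
print(f"Avg monthly gain through 2018: {monthly_pace_2018:.0f} kb/d")
print(f"Avg monthly gain after 2018:   {monthly_pace_2019:.0f} kb/d")
```

On these rounded figures, the implied pace of growth after November 2018 is roughly a quarter of the pace in the prior twelve months.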

One would expect that such an oil price spike would have little impact on drilling activity, and therefore, growth in oil output. Yet, the EIA sees oil output growing by a million barrels a day by November 2018. Given the projected steady rise in the oil price during the final three quarters of 2019, the slump in monthly production, followed by a sharp jump in the final three months of the year, seems strange. That strangeness comes from examining the chart of oil prices since 2016. It shows that between April 2016 and August 2017, the oil price averaged slightly under $48 a barrel, and traded most of the time within a fairly narrow range. For January 2018, oil prices averaged $63.70 a barrel, or nearly $16/barrel higher than the price during that April ’16 to August ’17 historical period. That price level was higher than the average oil price in earlier periods, so it presumably was the catalyst for the upturn in drilling that produced the 1.1 mmb/d production increase.

Exhibit 4. Will Higher Price Drive Further Production Growth?

Source: EIA, PPHB

Even if oil prices fall back from their recent lofty levels and only average in the high $50s a barrel, one would expect that there may be a greater production response in 2019 than forecast by the EIA. Obviously, we need to see where crude oil prices settle out after the current period of market chaos, which is buffeting the equity, debt, currency and commodity markets, comes to an end. Unless this turmoil, or some unforeseen geopolitical event, disrupts the current synchronized global economic growth phenomenon, it is difficult to see oil prices not remaining healthy for the balance of 2018. Despite the growing focus on a more de-carbonized economy in conjunction with the recent focus on zero emission vehicles, there has been only a minimal impact on oil consumption. In fact, the impact has been almost undeterminable. We don’t see that situation changing anytime within the foreseeable future. Therefore, we believe that the EIA production forecast, at least through 2019, may prove to be conservative.

Opportunities And Challenges In Utility Infrastructure

One of the critical pieces for a nation’s economy to be successful is a well-functioning energy infrastructure. It is essential for the delivery of energy from wellheads, dams, wind turbines and power plants to consumers. Adequate and reliable supplies of energy are crucial. We are left with many examples of what happens to localities and regions when their energy infrastructure is damaged or merely interrupted. How often are we exasperated whenever there is a power outage – none of our appliances work, and we are sitting in the dark? It also means you can’t get money from an ATM or gasoline from a gas station pump. In the summer, without air conditioning, you can broil. In winter, without power you may freeze. Yet, how few people consider their energy infrastructure until the power doesn’t work?

Our nation is fortunate for the years and billions of dollars spent on energy infrastructure. It is what has facilitated the U.S. economy’s growth, and increasingly our ability to play a role in the global energy market. Now, energy infrastructure projects have become a battleground. Energy producers see new infrastructure projects as an opportunity to expand operations and increase profits. Opponents of the increased use of fossil fuels view stopping these projects (pipelines, power plants, and ports) as critical for promoting their cause.

Exhibit 5. Energy Infrastructure Serves Different Fuel Sources

Source: EIA

In the last two weeks, we have witnessed interesting developments in the world of energy infrastructure. During a hearing before the Senate Energy and Natural Resources Committee, representatives of the pipeline and power industries expressed their frustration with Congress’ lack of action in dealing with the various states that are working to block new energy projects. One of the solutions requested of the committee was made by Don Santa, president of the Interstate Natural Gas Association of America. Mr. Santa said that the industry wanted to see Congress “provide guidance to the appropriate role of the states.”

Most energy projects are subject to multiple layers of government review and approval. Mr. Santa’s request for Congress to get more involved in providing guidance was in response to recent actions by New York State in blocking the issuance of required permits for natural gas pipeline projects properly approved by federal regulators. In these cases, the New York State Department of Environmental Conservation (DEC) refused to grant the respective pipeline companies water quality permits required under the Clean Water Act. Under section 401 of the Clean Water Act, states must certify that a pipeline will not violate clean water standards before construction on that pipeline can begin.

The initial project rejected by New York Governor Andrew Cuomo (D) was Millennium Pipeline’s Valley Lateral Project, an 8-mile pipeline planned to move significant volumes of natural gas to a 680-megawatt power plant being built in Orange County, New York. Following the rejection, Millennium turned to the Federal Energy Regulatory Commission (FERC), which oversees the regulation of pipelines, for relief, which was unanimously granted. The issue in this case was that the DEC’s denial was made beyond the one-year time limit allowed for state review of the application for a water quality permit. FERC’s approval was granted based on DEC’s denial having been issued on August 30, 2017, rather than prior to the one-year anniversary date of the permit application, which was November 23, 2016. The question came down to when the clock began to run on the one-year period for DEC’s consideration of the application. FERC ruled it began when the application was initially received on November 23, 2015, as opposed to DEC’s position that it began August 31, 2016, when DEC considered the application to be complete after additional requested information was filed.
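The dispute over when the clock started can be illustrated with a simple date check. This is only a sketch using the dates cited above; the helper name `within_one_year` and the choice to treat a decision issued on the anniversary date itself as timely are our assumptions, not a statement of how the one-year period is counted legally.

```python
from datetime import date


def within_one_year(start: date, decision: date) -> bool:
    """Return True if the decision falls on or before the first
    anniversary of the start date (assumed counting rule).

    Note: start.replace() would fail for a Feb 29 start date,
    which does not arise with the dates used here.
    """
    deadline = start.replace(year=start.year + 1)
    return decision <= deadline


denial_issued = date(2017, 8, 30)        # DEC denial
ferc_clock_start = date(2015, 11, 23)    # application first received
dec_clock_start = date(2016, 8, 31)      # application deemed complete by DEC

print("Timely under FERC's start date:", within_one_year(ferc_clock_start, denial_issued))
print("Timely under DEC's start date: ", within_one_year(dec_clock_start, denial_issued))
```

Under FERC’s start date the denial arrives months after the deadline, while under DEC’s start date it arrives one day before it, which is why the choice of start date decided the case.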

Last fall, following the FERC ruling in the Millennium Pipeline case, DEC’s denial of a water quality permit for the Constitution Pipeline owned by Williams Partners LP, was appealed. Last month, FERC denied Constitution Pipeline’s request, claiming that the two cases were not analogous because Williams Partners had withdrawn and refiled its request within the one-year window for the original request. The commission noted its concern about the battles being waged between the pipeline companies and the various states. It stated in its decision:

"We continue to be concerned, however, that states and project sponsors that engage in repeated withdrawal and refiling of applications for water quality certifications are acting, in many cases, contrary to the public interest and to the spirit of the Clean Water Act by failing to provide reasonably expeditious state decisions."

At the time of FERC’s overturning of DEC’s rejection in the Millennium case, Roger Downs, director of the Atlantic chapter of the Sierra Club, issued a statement. In it, he stated:

“FERC’s reversal of Governor Cuomo’s decision is an insult to New Yorkers and our right to protect our communities and our water. States unquestionably have the authority to rule whether a dirty, dangerous fracked gas pipeline violates clean water laws, and nowhere is FERC granted the right to override that authority.”

Other environmental movement officials also condemned the “aggressive” move by FERC to inject itself in the water quality permit approval process of the states. Behind DEC’s move was its reliance on a then-recent court decision in which FERC’s environmental review of a pipeline project in the southeastern United States was found to be inadequate and deficient. According to DEC in its ruling, FERC “failed to consider or quantify the indirect effects of downstream [greenhouse gas] emissions in its environmental review of the project that will result from burning the natural gas that the project will transport to CPV Valley Energy Center.”

This case highlights how energy infrastructure has been identified as the Achilles heel of the fossil fuel industry. It is why the pipeline and power industries are seeking help from Congress to provide guidance over the proper role of the states in reviewing federally-approved energy projects. Surprisingly, the importance of this issue may now become paramount for the renewables industry – in particular, dealing with the approval of power transmission lines. Case in point: Northern Pass.

Exhibit 6. Northern Pass Transmission Line’s Future In Doubt

Source: NH SEC

Northern Pass is a 192-mile transmission line planned by Eversource Energy and Hydro-Quebec that would bring hydroelectric power from Canada through New Hampshire to the New England power grid, helping to deliver clean electric power to Massachusetts to enable the state to meet its clean power mandate. The transmission line, first proposed in 2010, had won a Presidential Permit from the U.S. Department of Energy last November. That permit is necessary for energy infrastructure projects that cross international boundaries. More significantly, Northern Pass was given the green light and a major role in Massachusetts’ clean energy plan at the end of January. Unfortunately, the New Hampshire Site Evaluation Committee (SEC) unanimously rejected the project on February 1st, throwing Massachusetts into a state of panic.

Once a final report is issued, Eversource can appeal the decision and ultimately appeal to New Hampshire’s Supreme Court. The problem is that the decision, and any appeal, will set the project’s timetable back, imperiling Massachusetts’ clean energy mandate. Northern Pass was selected over competing projects because it was expected to be finished two years ahead of the others.

Two years ago, Massachusetts lawmakers passed renewable energy legislation laying out requirements for wind and solar power, as well as including multiple provisions related to procuring wind and hydropower, improving energy storage, and creating a sustainable commercial energy program. All of this is to be done by 2020. Once completed, Northern Pass would supply 9,450,000 megawatt-hours of renewable energy annually, boosting Massachusetts’ electricity supply to almost 50% clean energy.
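To put the annual energy figure in more familiar terms, it can be converted into an average rate of delivery. This is a back-of-the-envelope sketch; it assumes a 365-day year and perfectly even delivery around the clock, which is our simplification and not a description of how the line would actually be operated.

```python
# Annual energy Northern Pass would supply, as quoted in the text.
ANNUAL_ENERGY_MWH = 9_450_000

# Assumption: a non-leap year, 365 days x 24 hours.
HOURS_PER_YEAR = 365 * 24

# Average power delivered if the energy were spread evenly over the year.
average_mw = ANNUAL_ENERGY_MWH / HOURS_PER_YEAR

print(f"Average delivery: about {average_mw:,.0f} MW around the clock")
```

In other words, the quoted volume is equivalent to a little under 1,100 megawatts flowing continuously for the entire year.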

It is interesting to read articles by the local press in both New Hampshire and Massachusetts. In the former, it was acknowledged that Northern Pass was universally opposed by residents and officials because of the projected damage to tourism and economic development. This opposition came even though the transmission line was to be buried for sections along highways to reduce the impact on views around the White Mountain National Forest. Eversource said that more than 80% of the line would run along existing transmission corridors. Those efforts were insufficient for SEC.

The Massachusetts Attorney General has called for an investigation into the selection process for the transmission line, as well as the proposed timetable for the project. One wonders whether the selection of Northern Pass by Eversource a week before SEC rendered its ruling was an attempt to sway the final decision.

As this news was breaking, the Energy Information Administration (EIA) released data showing how investment by major utilities in transmission infrastructure had grown over the two decades 1996-2016. While the increase was largely steady, it ramped up after 2005 and continued all through the financial crisis and recession of 2008-2009. Spending then accelerated in 2011 through 2013, before slowing for the final three years of the study. The most recent spending increases reflect the emerging and growing investment in renewable energy generation, most of which is located well away from population centers where the power is consumed. High power transmission lines may be the Achilles heel of the renewable power business.

Exhibit 7. Shifting Fuel Supplies Drive Spending

Source: EIA

A second chart from the EIA showed how the utility spending was spread across the various regional electricity grids. As the chart shows, the greatest increase was experienced in the PJM Interconnection (Mid-Atlantic region), followed, in order of 2016 spending, by the Western Electricity Coordinating Council, Midcontinent ISO and Northeast Power Coordinating Council. All this spending reflects rapidly shifting energy supply sources.

Exhibit 8. Energy Infrastructure Spending By Region Varies

Source: EIA

Another perspective on the utility and transmission company spending was reported by the Edison Electric Institute (EEI), along with a forecast. The EEI collects data from the association’s survey on property and plant capital spending. The EEI data for 2016 tracks closely to the figures provided by the EIA. Therefore, we find the spending outlook in the EEI report quite interesting. It shows that spending grew roughly 10% in 2017 and will increase by about 4% in 2018, which represents a peak. While we understand that forecasts are subject to frequent revision, one wonders whether the declines projected in spending for 2019 and 2020 will occur, or whether the estimates come from companies unable to accurately project their future spending and the exact time of the spending on new projects. That is not an indictment of the companies, but a recognition that future events may influence spending decisions and needs.

Exhibit 9. Will Energy Infrastructure Spending Peak?

Source: EEI

The increased transmission spending of the past few years has been helped by increased revenue and profits of utility and pipeline companies. While not devastating, the recently enacted tax reduction legislation is forcing these same companies to consider rolling back their regulated rates due to lower tax burdens. The impact by company varies depending on how much of its revenues and profits come from regulated activities. The higher that percentage, the greater the impact would be from adjusting to a lower corporate tax rate in the future. Activists are clamoring for local utilities to reduce their rates to reflect their reduced taxes. In the case of pipelines, a recent Wall Street Journal report highlighted the potential impact of reduced taxes. The WSJ examined research on a handful of pipeline operators and pointed to three – Dominion Energy Inc. (D-NYSE), Williams Companies Inc. (WMB-NYSE), and TransCanada Corp. (TRP-NYSE) – as having 65% to 80% of their revenues coming from regulated businesses. The WSJ noted three other operators with regulated revenues accounting for 41% or less of total revenues, suggesting they are less at risk of having sharply lower revenues and earnings. Likely, this topic will become more important as company managements and investors assess the impact on future earnings growth.

The magnitude and timing of energy infrastructure spending reflect the shifts underway in power generation – the growth of solar and wind energy, as well as more natural gas-fired power – in the nation. Solar and wind power have grown primarily in the West, Midwest and Texas, which is necessitating the construction of transmission lines. In the eastern portion of the country, and in Texas, the growth of natural gas production is driving new and expanded pipeline construction. Whether the nation is reaching a peak in energy infrastructure investment remains in question. The answer will offer both opportunities and challenges for the utility and energy industries.

From Tailwind To Headwind: Natural Gas Market Suffers

It was barely three weeks ago that natural gas futures prices were soaring in response to three weeks of extremely large withdrawals from storage. In fact, one of the three weeks set an industry record for the volume of gas withdrawn. As these large withdrawals were occurring, industry participants began expressing concern about the adequacy of storage volumes, and what would happen to gas prices, if such large weekly withdrawals continued. Running out of storage – actually getting below a threshold that impacts the ability of the underground storage owners to operate them properly – quickly became the industry’s focus. Was it possible the U.S. natural gas industry might find itself short of supply to meet demand due to more polar vortex blasts of arctic air? That seemed to be the fear gripping the market in late December after the first polar vortex sent temperatures to record lows, caused huge weekly supply withdrawals, and brought gas prices back to life.

From about $2.50 per thousand cubic feet (Mcf) of natural gas, the price climbed rapidly toward $3.50, with only one brief price retreat and another period of sideways movement. As gas prices rose above $3.50/Mcf in late January, peaking at $3.60, weather forecasts started to reflect the arrival of a warming trend. It took a while for gas traders to absorb the possibility that the coldest part of the winter had come and gone, with no serious supply shortage.

Exhibit 10. Record Gas Withdrawals Sent Prices Soaring

Source: EIA, PPHB

Gas prices began to fall. Even the prospect of growing liquefied natural gas (LNG) shipments, as the Cove Point, Maryland terminal came into service and volumes were ramping up at the Sabine export facility, could not change the negative sentiment toward the gas supply and demand balance.

If one wants to contemplate how much the fundamentals for the domestic natural gas industry have changed in recent years, one only needs to consider Exhibit 11. Natural gas prices have trended downward since 2005, which essentially coincided with the ramping up of production from shale formations. Ever since the shale revolution started, more supply has been added to the domestic market. In recent years, much of the shale gas supply additions have come as associated gas from shale oil wells. Not only is this phenomenon showing no signs of slowing down, recent monthly data suggest we are seeing an acceleration in gas output as drilling activity ramps up in response to higher crude oil prices.

Exhibit 11. Gas Prices Steadily Fall As Supply Grows

Source: EIA, PPHB

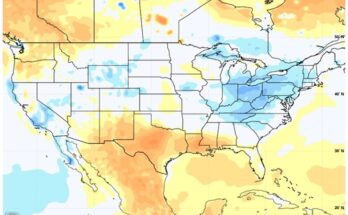

When we look to the trend in natural gas storage published by the Energy Information Administration (EIA) in its weekly report, we see how current storage volumes are well below (-23.5%) the 5-year average, and are coming extremely close to the bottom of the weekly storage range. While that would suggest the market might be ready for a bounce if more cold weather is encountered, it is important to note the sharp decline in the bottom of the range as we move toward the end of the withdrawal season. Thus, the future for natural gas prices will be determined by the advent of more cold weather than has normally been experienced in the past. Absent that, it is likely that growing gas production will continue to weigh on gas prices.

Exhibit 12. Gas Storage At Bottom Of 5-Year Average

Source: EIA

Without more cold weather, natural gas price bulls will soon have to turn their attention to the long-range forecasts for summer temperatures. Will we have an extremely hot summer that will necessitate greater air conditioning, which in turn will demand increased electricity and more natural gas-fired power generation? Natural gas traders are now finding that their activities are more like those of farmers than energy analysts. As a result, traders may find it more appropriate to be scanning the weather charts than the commodity price charts.

Moral Decisions Confront Autonomous Vehicles

The principal argument behind the need for autonomous vehicles (AV) is that they save lives. Every presentation or panel discussion about AVs begins with pointing to the statistics of the number of highway deaths either in the United States (37,461 in 2016) or worldwide (1.25 million in 2015). The AV proponents point out that 90% of all fatalities are caused by humans – distracted driving. In the U.S., the data shows that 61% of fatally injured passenger vehicle drivers were legally “drunk.” That is a major challenge for automobiles.

By handing over the responsibility for driving vehicles to machines programmed with artificial intelligence, highly sophisticated sensors, and well-documented road and environment maps, AVs are destined to eliminate traffic accidents and fatalities. In the case of world traffic deaths, almost half of them in 2015 involved pedestrians, cyclists and motorcyclists. It is seeing these people and recognizing the hazard they represent that AVs are supposedly being programmed to deal with.

Exhibit 13. AVs Favored, But Consequences Not Considered

Source: UN World Health Organization

AVs are also being hailed for their liberating impact on society. Just as the automobile delivered personal transportation freedom that previously was controlled by railroads, trolleys and interurban services, which run on schedules and along prescribed routes, AVs will offer unique freedoms for today’s Americans. The automobile delivered the ability to go virtually anywhere, anytime and with anyone or anything the driver desired. AVs will do that too, and with even less stress for the driver.

The greatest social feature projected to promote widespread adoption of AVs is that they will be a key component of the Transportation as a Service (TaaS) model (ride-hailing). TaaS will provide the opportunity for the handicapped, elderly and youths to gain mobility, something they do not have now. Today, these groups require the help of another human to take a trip. With TaaS they will be able to journey on their own, granting them the personal freedom they currently lack.

The science of programming AVs is being examined for the choices that the vehicles must make in various situations on the road. The programming is conducted by humans, although there are scenarios where eventually AVs may be controlled by software derived from machine learning. But, for the foreseeable future, the programming will be done by humans, raising the question about what role moral choice will play in the decisions these machines choose to make.

The classic moral question exemplified in AV programming is referred to as the Trolley Problem. In this problem, a trolley is going down the track towards five people. You cannot stop the trolley, but you can pull a lever to redirect it. However, there is one person stuck on the only alternative track. This is the tension between actively doing versus allowing harm: Is it morally acceptable to kill one to save five, or should you allow five to die rather than actively hurt one?

Put into AV terms, how should the auto react if it is put into a situation where it must choose between the driver and someone else? For example, the AV is on a highway and there’s a truck crash immediately ahead. The only alternative is to swerve into a motorcycle or off a cliff. How should the auto be programmed?

The National Science Foundation has given a grant to a group of three philosophy professors and an engineer to write algorithms based on various ethical theories. The grant will allow the team to create various Trolley Problem scenarios and show how the AV would respond according to the ethical theory it follows. Utilitarian philosophers believe that all lives have similar moral weight. Thus, an algorithm based on this philosophy would assign the same value to auto passengers as to pedestrians. Others, however, believe that you have a perfect duty to protect the driver even if it costs some people their lives or puts others at risk. If the auto isn’t programmed to intentionally harm others, it may be acceptable to program the car to swerve to avoid the accident even if it means harming another motorist or pedestrian.

While the algorithms may show that one moral theory will lead to more lives being saved than another, the choice may be more complex. It is possible that the choice isn’t about how many people are saved, but which ones are. For example, is it moral to program the choice to sacrifice people over 50 years old rather than ones under 30? What if the set of values programmed into the AV favors protecting pedestrians at the expense of the driver; would it then be possible for someone wanting to harm the driver to deliberately walk in front of the AV?

Patrick Lin, a philosophy professor at Cal Poly, San Luis Obispo, is one of the few philosophers who’s examining the ethics of autonomous vehicles outside of the Trolley Problem. In a recent interview, he mentioned some of the questions to be considered. Could cars be programmed to drive past certain stores rather than competitor stores? Who is responsible (liable) if a car is programmed to put someone at risk? Could drinking increase if drunk driving is no longer a concern? How do we protect the privacy of passengers in AVs, as they will always be attached to the Internet? If AVs become pervasive and do eliminate vehicle crashes, what may happen to organ donor programs?

As Dr. Lin put it when discussing the potential and unintended consequences of AVs, “It’s like predicting the effects of electricity. Electricity isn’t just the replacement for candles. Electricity caused so many things to come to life – institutions, cottage industries, online life. Ben Franklin could not have predicted that; no one could have predicted that. I think robotics and AI [artificial intelligence] are in a similar category.”

He pointed out that the development of the automobile brought us suburbs and fast food drive-through restaurants. Maybe AVs will prompt people to live further from their work or cities. The time humans spend in the AV could be devoted to increased leisure. So, while AVs are being promoted as a way to reduce traffic, they could have the opposite effect as more trips are taken because they are less stressful. Dr. Lin stated, “I don’t think anyone has a crystal ball when it comes to extrapolating that far out. It’s a safe bet to say that we can’t imagine the scale of effects.” The potential impact of AVs may be huge, as well as unpredictable. There is probably no way we can create algorithms or philosophies that may make AVs moral, meaning we will have to deal with their lack of morality.

How Much Do Your Eating Habits Hurt Our Climate?

What you drive, where you live and what you eat are becoming battlegrounds for the environmental movement. Even though electric vehicles (EVs) dominated the fledgling automobile industry at the turn of the 20th Century, their inherent shortcomings – range limits, recharging logistics and cost – resulted in them yielding their market dominance to internal combustion engine (ICE) vehicles. That battle quickly became unfair when the federal government began sponsoring a national highway system and Henry Ford undercut the automobile cost structure with the help of the assembly line for his Model T.

Now that global temperatures are rising, and climate change is projected to lead to a cataclysmic outcome for the planet unless carbon emissions are eliminated, everything we do is coming under scrutiny. The latest battle has been launched over the methane regulations enacted in the waning days of the Obama presidency.

But the battleground is being expanded beyond hydrocarbon extraction and processing sites (the oil and gas industry) and into our kitchens and restaurants. What’s prompting this battle is control over methane emissions.

Methane is a chemical compound with the chemical formula CH4 (one atom of carbon and four atoms of hydrogen). It is the simplest member of the alkane series of hydrocarbons. It is a colorless, odorless flammable gas that is the main constituent of natural gas. It is emitted by various sources, and it has a lifetime in the atmosphere of 12 years, but it has a global warming potential (over a 100-year period) of 25, meaning it is that many times more powerful a climate change agent than CO2, although its time of impact is only a fraction of the time CO2 emissions contribute to global warming.
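As a rough illustration of what a global warming potential of 25 means in practice, the sketch below converts a quantity of methane into its CO2-equivalent. Only the factor of 25 comes from the text; the function name and the sample quantity are hypothetical and chosen purely for illustration.

```python
# 100-year global warming potential of methane, as cited in the text.
METHANE_GWP_100 = 25


def co2_equivalent(methane_tons, gwp=METHANE_GWP_100):
    """Return the CO2-equivalent of a methane quantity (same units as the input)."""
    return methane_tons * gwp


# Hypothetical example: 4 tons of methane expressed as tons of CO2-equivalent.
sample_methane_tons = 4
print(co2_equivalent(sample_methane_tons))
```

This simple multiplication ignores the timing difference noted above: methane's effect is concentrated in roughly its 12-year atmospheric lifetime, while the 100-year figure averages that effect over a much longer window.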

Concern about methane in our atmosphere, and its impact on the climate, has grown in recent years because there has been a sharp rise in its concentration.

Exhibit 14. How Methane Emissions Have Grown Recently

Source: NOAA

Despite that increase, in the U.S., according to the latest data from the Environmental Protection Agency (EPA), methane represents about 10% of our greenhouse gas emissions. Total greenhouse gas emissions were 2.8% higher in 2016 (6,546 million metric tons of CO2 equivalent, or MMT) than in 1990 (6,369 MMT), but down 10.6% from the peak in 2005 (7,326 MMT). In the case of methane emissions, they are down from both 1990 (-15.7%) and 2005 (-3.4%) emissions.

Exhibit 15. U.S. Methane Emissions Are Lower Since 1990

Source: EPA
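The percentage changes in total greenhouse gas emissions quoted above can be checked with a few lines of arithmetic. This is a minimal sketch using only the 1990, 2005 and 2016 totals cited from the EPA; the helper name pct_change is an illustrative choice.

```python
def pct_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Total U.S. greenhouse gas emissions as cited in the text.
total_1990 = 6369
total_2005 = 7326
total_2016 = 6546

change_since_1990 = round(pct_change(total_1990, total_2016), 1)
change_since_2005 = round(pct_change(total_2005, total_2016), 1)

print(change_since_1990)  # 2.8
print(change_since_2005)  # -10.6
```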

The fact that methane emissions are lower in the U.S. has not dissuaded environmentalists from demanding cutbacks on these emissions by the natural gas industry. The target has been emissions that are being released by shale fracturing operations and in the transportation of natural gas via pipelines. Targeting of methane emissions has been focused on leaking valves and storage tanks in the gas infrastructure, as well as the methane released from the wellhead and other leaking connections when a gas well is fracked. The oil and gas industry, the target of the Obama administration’s methane rules, has recently forged an association to work to limit methane emissions from their operations. This effort, as well as data showing that the methane emissions problems lie outside the oil and gas industry, has done little to stop the attacks. A 2016 report from the EPA stated:

“Methane emissions in the United States decreased by 16 percent between 1990 and 2015. During this time period, emissions increased from sources associated with agricultural activities, while emissions decreased from sources associated with landfills, coal mining, and the exploration through distribution of natural gas and petroleum products.”

The data from the 2016 EPA greenhouse gas emissions report shows that natural gas systems ranked second behind the methane released from animals. Interestingly, the third most responsible party for methane emissions in this country is landfills.

Exhibit 16. Natural Gas Is Second Largest Methane Leaker

Source: EPA

On a global basis, according to the most recent United Nations’ data, the fossil fuel business only accounts for 29% – 32% of total methane emissions. And, of that share, a quarter of it is associated with geological seeps, or naturally occurring emissions, not man-made. This data suggests that the attacks on the oil and gas industry for methane leaks should be more restrained, although leaks of any kind should not be tolerated.

Exhibit 17. Fossil Fuel Not The Monster Methane Leaker

Source: Yale Environment 360

The attacks on the oil and gas industry in the U.S. for its methane emissions have been based on reports and estimates of the volume of leaks from its drilling and transportation activities. Fighting these leaks is in the companies’ best interests because it will help the bottom lines as less natural gas will be lost to the atmosphere and income will be enhanced. Fixing the leaks on their own is also a way the oil and gas industry can hope to stave off further debilitating regulations. Now, however, the industry is hopeful of an easing of the methane containment rules for companies drilling and producing natural gas from federal lands by the Trump administration.

While the discussion about methane leak control for the oil and gas industry is dominating the headlines, there remains a huge untapped source of natural gas in the form of methane hydrates under the ocean that some governments are working to exploit. These hydrates are where molecules of methane gas are entrapped within an ice lattice. They form under very low temperatures or high pressures, or a combination of the two. They are usually found on the outer continental shelves around the world. (They have been found in the pink areas of the global map in Exhibit 18.) The challenge is that they have been difficult (risky) to mine, as well as costly. They have the potential to blow up any vessel attempting to extract the hydrates from the sea floor. The U.S. Bureau of Ocean Energy Management (BOEM) estimates that the U.S. has 51,338 trillion cubic feet of methane hydrate gas resources. If only half of BOEM’s estimate is realized, there are 1,000 years of supply based on the current consumption rate of natural gas in the United States.

Exhibit 18. Where Methane Hydrates Have Been Found

Source: World Ocean Review
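The "1,000 years of supply" figure can be sanity-checked with simple division. The sketch below uses the BOEM resource estimate cited above; the annual U.S. consumption rate of about 27 trillion cubic feet is an assumption introduced here for illustration (it is not stated in the text), as are the function and variable names.

```python
# BOEM estimate of U.S. methane hydrate gas resources, from the text (trillion cubic feet).
BOEM_HYDRATE_RESOURCE_TCF = 51_338

# ASSUMPTION: approximate current U.S. natural gas consumption, in Tcf per year.
ASSUMED_US_CONSUMPTION_TCF_PER_YEAR = 27.0


def years_of_supply(resource_tcf, realized_fraction, consumption_tcf_per_year):
    """Years of supply if a given fraction of the resource is actually produced."""
    return resource_tcf * realized_fraction / consumption_tcf_per_year


years = years_of_supply(
    BOEM_HYDRATE_RESOURCE_TCF, 0.5, ASSUMED_US_CONSUMPTION_TCF_PER_YEAR
)
print(round(years))
```

Under that assumed consumption rate, half of the BOEM estimate works out to a little over 950 years, consistent with the rounded figure of 1,000 years. The result scales inversely with whatever consumption rate is assumed.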

Last year, China, a country with significant needs for more natural gas but lacking success in finding and developing meaningful reserves, experimented with tapping methane hydrates. The country’s focus is on hydrates situated in the South China Sea, which helps explain China’s attempt to claim territorial rights to that area of the Pacific Ocean. At the same time, Japan, another nation lacking adequate energy resources, has successfully extracted methane hydrates from an area offshore the Shima Peninsula. The implications of successful development of methane hydrate mining by either or both countries would be significant for the future of the global liquefied natural gas (LNG) business.

As pointed out in the methane emissions data, the agricultural and animal sectors are major contributors. A study reported last fall that the periodic reports from the UN’s Intergovernmental Panel on Climate Change (IPCC) about carbon emissions from livestock have been based on out-of-date data. A recent study of methane produced per head of cattle showed that global livestock emissions in 2011 were 11% higher than estimated by the IPCC. The discrepancy is due to the IPCC failing to understand how livestock numbers are changing in various regions of the world, and that breeding has resulted in larger animals with higher intakes of food. More food means more methane emissions both from the growing of foodstuffs and the release from the animals. Couple those factors with changes in livestock management and you have higher methane emissions.

These shifts in animal husbandry help explain the sharp rise in global methane concentrations in recent years. It is not due primarily to the growth of shale fracking activity within the fossil fuel industry, or the increased use of fossil fuels. Agriculture and animals have also been major sources of methane emissions. In the case of natural methane emissions from landfills, etc., much of the increase is coming from the regions around the equator and not from the more temperate lands north and south.

The issue with our diets is that the world is shifting to a meat-based diet and away from a plant-based one. An older research paper (2003) suggested that two billion people lived primarily on a meat-based diet while four billion were subsisting on a plant-based diet. The paper was ultimately an attack on the perceived wasted resources emanating from the U.S. meat-based diet, as the authors stated that U.S. food production utilizes about 50% of the total U.S. land area, approximately 80% of the fresh water and 17% of the fossil fuel energy used in the country. The punch line of the paper was: “The heavy dependence on fossil energy suggests that the US food system, whether meat-based or plant-based, is not sustainable.” The report was a plea to shift our food system because the nation’s population was projected to double over the next 70 years.

One aspect we didn’t see accounted for in the paper was the volume of American foodstuffs exported to the rest of the world. Not all the food produced in America is consumed here. While the ratios of energy and other components to the calories produced are valid, indicting the agricultural industry for overuse seems like a stretch.

Taking off from that theme of the energy and resource intensity of our meat-based diet is a new effort to replace the food with laboratory grown meat. This is an emerging industry with huge potential as it takes on an estimated $800 billion market. The proponents of this development point to the data showing that it takes 23 calories of feed to produce one calorie of meat.

Exhibit 19. Is Laboratory Meat Our Future Food?

Source: ARK Investment

The lab-grown meat process extracts healthy cells from animals and grows them in a laboratory environment using raw ingredients such as amino acids, water, sugar and oxygen. The process uses only about one-tenth of the land and water resources presently utilized. Additionally, the risk of animal-borne diseases virtually disappears. There would likely be a significant impact on the use of energy in the food production process.

As expected, this food revolution is expensive. Laboratory grown meat is currently ten times more costly than conventional meat. Proponents count on future costs coming down as the technology develops and its use grows. (Sound familiar?) The proponents point to a recent investment by Tyson Foods in a start-up working on lab-grown meat, Memphis Meats, as confirmation of the ultimate success of the technology.

While this technology offers long-term promise, near-term, farmers are working on improved systems to capture the methane coming from belching and farting livestock. In some experimental farms, devices affixed to the livestock are capturing some or all of the methane they are producing and it is being used to power the farm.

In 2016, the National Oceanic and Atmospheric Administration (NOAA) developed a database of isotopic measurements taken all over the world over the past three decades. The data was analyzed, and two surprising conclusions emerged. First, the recent surge in methane emissions is due not to the rising volume of fossil fuel emissions, but rather to the unexpected surge in microbial sources. Second, fossil fuel methane sources are almost twice as big as previous estimates, whereas microbial sources are about a quarter less. With respect to the first conclusion, a study done in 2014 at the University of Texas at Austin, based on access to data on wells that were fracked and where the methane leaks were from, showed that a small subset (about a fifth of the wells) accounted for more than three-quarters of the methane leaked.

Recently, a second research paper highlighting the magnitude of methane leakage from wells in the Marcellus region was withdrawn due to errors in the data. A re-analysis of the data from the withdrawn study showed that the volume of methane leaked was half of the volume reported in the original study. The first paper withdrawn initially concluded that the volume of gas leaking from fracked wells was substantial. The paper was authored by several professors who have been personally opposed to fracturing wells in New York, Ohio and Pennsylvania. Like the other withdrawn paper, this one also overstated the volume of gas leaking from fracked wells.

Exhibit 20. Every Study Demonstrates Low Methane Leakage

Source: IPAA

Understanding why the volume of methane in our atmosphere has increased so dramatically in recent years is important. It appears to have coincided with the emergence of the shale revolution, but the data doesn’t show the fossil fuel industry to be the prime culprit. Rising living standards around the world have contributed to more energy-intensive diets, which are driving methane emissions. A serious analysis of methane concentrations globally places much more of the emissions growth in the tropics, which suggests that natural conditions have been a major contributor.

We applaud the efforts of the oil industry and agricultural community to seek ways to reduce the volume of methane emitted into the atmosphere. At the same time, it is important to remember that natural gas remains the cleanest of the fossil fuels, and its role in satisfying the world’s future energy needs will grow, while helping to reduce, or certainly restrain, the growth of methane emissions. Successfully harvesting subsea methane hydrates may enable the world to severely limit the use of dirty fossil fuels, while also helping to ensure the stability of our electricity grid systems and accomplishing all of this at low energy prices. Natural gas may soon resume its role as the “bridge to a clean energy future,” a title it held when its high prices provided an umbrella for high-cost renewable fuels. Only this time, its role may be to ensure that the world can more easily transition to a clean energy future.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.