- Concerns About Economy’s Health Weigh On Oil Price

- Strange Thinking About Government Support For NGVs

- Electric Power Consumption In The Age Of Connectivity

- US Energy Independence: What If The Numbers Are Wrong?

- How To Support The Highway Trust Fund Of The Future

- Improved Economic Prospects Due To Housing Recovery?

- RI Electric Power Sources Reflect Mish-mash Of Fuels

Musings From the Oil Patch

July 3, 2012

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Concerns About Economy’s Health Weigh On Oil Price

A week ago last Thursday, a spate of bad global economic data, coupled with fears about a worsening European sovereign debt situation and Moody’s downgrade of the credit ratings of 15 major U.S. banks, contributed to a dramatic decline in crude oil prices. For the day, crude oil futures prices fell $3.25 per barrel, or 4%, to $78.20, breaking through the psychological $80 threshold. Once the price breached that support, attention turned to how low oil prices could go and, of course, what that would mean for various sectors of the economy. The concern about low oil prices disappeared on Friday, when it looked as if the European financial crisis had been resolved. That prospect, coupled with a weakening U.S. dollar, caused oil prices to jump by over $7 per barrel, or 9.4%.

Exhibit 1. Oil Prices Break Into Low Territory

Source: EIA, PPHB

The $80 threshold was established by drawing a line through the two low price points of early 2012 and extending it. Had the line also been made to touch the May low, the support price would have been slightly over $80. The point is that technical analysts of stock and commodity prices would draw a line similar to ours on a chart of 2012 oil futures prices to get a rough idea of market support. Once the oil price fell below, and then closed below, that support price, technical analysts would say oil has entered a “bear” market, or a trend toward lower prices. Analysts would then immediately switch their focus to long-term charts to find the next strong technical support price. In Exhibit 2, we have plotted oil prices since the beginning of 2011 to develop a long-term price chart. As with the 2012 chart, we drew a line connecting the low price points and then extended it. The line suggests that about $65 per barrel is the next price point with long-term support.
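The trendline exercise described above is simple geometry: a straight line through two price lows, extended forward, with a close below the line read as a break of support. The sketch below assumes prices are indexed by trading day; the two lows and the comparison price in the usage example are hypothetical round numbers, not the actual 2012 futures data behind Exhibit 1.

```python
def support_line(low1, low2):
    """Return a function giving the trendline price at any trading-day index.

    low1, low2: (day_index, price) pairs for the two lows the line touches.
    """
    (x1, y1), (x2, y2) = low1, low2
    slope = (y2 - y1) / (x2 - x1)  # change in $/bbl per trading day

    def price_at(day):
        # Extend the line from the first low to the requested day.
        return y1 + slope * (day - x1)

    return price_at


def breaks_support(close, line_price):
    """Technical reading: a close below the extended line breaks support."""
    return close < line_price


# Hypothetical lows: $84 on day 0 and $82 on day 100.
line = support_line((0, 84.0), (100, 82.0))
level = line(150)  # line extended 50 days past the second low
print(round(level, 2), breaks_support(78.20, level))  # prints: 81.0 True
```

Adding a third low, such as the May low in the text, means either choosing which two points to honor or fitting one line through all three, which is why the support estimate shifts slightly above $80.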

Exhibit 2. $65 Could Be Next Support Level

Source: EIA, PPHB

The critical thing to keep in mind as one considers the science of technical analysis is that it is based on historical trading patterns. While these patterns have a high probability of being repeated in the future, like anything based on history, the patterns repeat until they don’t. For that reason, technical analysts usually work with long-term, intermediate and short-term charts all at once with the aim of triangulating support and resistance points to increase the likelihood that the current price pattern can be projected. Based on last Friday’s news, we wonder how oil prices will trade in early July if people truly believe Europe’s sovereign debt problems are solved.

On the other hand, if oil prices are in a bear market, what does that mean for energy investments and the economy? The general rule of thumb has been that for every $10 per barrel change in crude oil prices, there is about a $0.25 per gallon change in gasoline pump prices. Since we are currently discussing a downward move in oil prices, falling gasoline prices would help boost consumer spending and economic activity. An increase in economic activity should lead to more hiring, and more employed workers means further spending and greater government tax revenues. These trends are all positive for the overall economy. Higher oil prices would have exactly the opposite effects.
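The $0.25-per-gallon rule of thumb is close to what simple barrel arithmetic gives. A U.S. oil barrel holds 42 gallons, so a $10 move in crude works out to roughly 24 cents per gallon if it passed through to the pump in full. The `pass_through` parameter is our own illustrative assumption; real pump prices also reflect refining margins, taxes and timing lags that this sketch ignores.

```python
GALLONS_PER_BARREL = 42  # standard U.S. petroleum barrel


def pump_price_change(crude_change_per_bbl, pass_through=1.0):
    """Estimated change in $/gallon for a crude move quoted in $/barrel.

    pass_through: assumed share of the crude move reaching retail prices
    (hypothetical parameter; 1.0 means full pass-through).
    """
    return crude_change_per_bbl / GALLONS_PER_BARREL * pass_through


print(round(pump_price_change(10), 3))     # 0.238 for a $10/bbl move
print(round(pump_price_change(-3.25), 3))  # -0.077 for last Thursday's drop
```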

Energy has been a positive for employment, so lower energy prices could become a negative for the economy. Over the past 12 months, the energy sector has been credited with adding 121,000 workers, according to our calculations from Department of Labor statistics. However, we are now seeing E&P companies, stressed by low natural gas prices and falling crude oil prices, cut back their capital spending plans. That means drilling and completing fewer wells in the future, and even laying off employees. Several oilfield service analysts have reduced their drilling rig activity forecasts for the balance of 2012 and for 2013. If these spending cuts spread throughout the industry and lower drilling and well completion activity materializes, America’s economy will be fighting falling employment in what has been its engine of economic strength, while counting on consumer spending to pick up the slack. An examination of the national labor market shows it is deteriorating, so there is no assurance that the income boost from lower energy prices will lift consumer spending and employment outside of the oil patch.

Exhibit 3. Labor Market Remains Stagnant

Source: BLS, PPHB

Most people who watch the labor market focus on the monthly unemployment rate, which ticked up one-tenth of a percentage point to 8.2% in May. The problem with this rate is that it depends on somewhat arbitrary rules for counting the unemployed, so the number can be manipulated. Most people don’t follow the broader unemployment measures because the media seldom reports them. If we add the involuntarily underemployed to the officially unemployed, the unemployment rate is nearly 15%. The involuntarily underemployed include those who would like to work full-time but can’t find a full-time job, and those whose hours have been cut because of a lack of business at their employer.
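The broader rate described above corresponds to what the Bureau of Labor Statistics publishes as U-6, which adds people working part-time for economic reasons and “marginally attached” workers to the official (U-3) count. A minimal sketch of the two formulas follows; the inputs are hypothetical round numbers chosen for illustration, not official data.

```python
def u3_rate(unemployed, labor_force):
    """Official unemployment rate: unemployed / civilian labor force."""
    return unemployed / labor_force


def u6_rate(unemployed, marginally_attached, part_time_econ, labor_force):
    """Broad rate: adds marginally attached workers and involuntary
    part-timers to the numerator, and marginally attached workers
    to the denominator (they sit outside the official labor force)."""
    numerator = unemployed + marginally_attached + part_time_econ
    return numerator / (labor_force + marginally_attached)


# Hypothetical figures in millions of people.
official = u3_rate(8, 100)
broad = u6_rate(8, 2, 5, 100)
print(f"U-3 {official:.1%}  U-6 {broad:.1%}")  # U-3 8.0%  U-6 14.7%
```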

Another consideration is that there are 365,000 fewer workers today than when the recession officially ended in June 2009. Accounting for population growth in the intervening years, and assuming the labor force participation rate had remained at its June 2009 level, employment would be about 7.7 million workers higher than it is today. Most of these missing workers are not counted because the government considers them to be outside of the labor force. Some may have decided to stay in, or return to, school; others may have elected to take early retirement or, if too young to retire, to seek disability status in order to collect Social Security payments. Since 2007, disability rolls have increased by 23% to about 11 million Americans. One in 18 working-age, non-retired Americans is currently on disability.
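The 7.7 million figure comes from a counterfactual: apply an earlier rate (the text cites labor force participation) to today’s larger population and compare the result with actual employment. The function below is a minimal sketch of that arithmetic with hypothetical inputs, since the text does not give the population or rate figures behind its estimate. The second function backs out the 2007 disability count implied by the 23% increase cited above.

```python
def employment_shortfall(population_now, baseline_rate, employed_now):
    """Workers 'missing' if the baseline rate had held for today's population.

    population_now: working-age population today (hypothetical input)
    baseline_rate: share of the population working at the baseline date
    employed_now: actual employment today
    """
    expected = population_now * baseline_rate
    return expected - employed_now


def implied_starting_level(level_now, pct_increase):
    """Starting value implied by a current level and a percentage rise."""
    return level_now / (1 + pct_increase)


# Hypothetical: 250 million people, 60% baseline rate, 140 million employed.
print(employment_shortfall(250, 0.60, 140))  # about 10 (million)
# From the text: disability rolls rose 23% to about 11 million since 2007.
print(round(implied_starting_level(11, 0.23), 1))  # 8.9 (million in 2007)
```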

We think the hires and quits data may be more instructive about the health of the labor market. Hires reflects exactly what the name suggests: the number of people hired. Quits reflects workers who voluntarily left their jobs. Exhibit 3 shows the trend in hires and quits since the beginning of 2008. The most recent month shows a downturn in both. The hires rate is currently about where it was during 2011 and early 2010, which suggests employers are not interested in adding workers. The problem is compounded by the fact that the hires rate is nearly 20% below where it was before the financial crisis exploded in the summer of 2008.

The quits rate is following a pattern similar to hires: it is about where it was during most of 2011, although higher than in 2010 and the recession year of 2009. A rising quits rate would reflect workers’ belief that the labor market is improving and that they can boost their income by leaving their current job for a better one. The lack of improvement in the quits rate is not surprising given the lack of sustained improvement in the hires rate.

Another measure of the labor market’s deterioration is the Bureau of Labor Statistics’ count of job openings, which fell by 300,000 to 3.4 million on the last day of April (the latest data available), the lowest number of openings in five months. While job openings have increased by about one million, or roughly 40%, since the end of the recession in June 2009, they remain well below the five million level that existed before the financial crisis. The conclusion is that the recent labor market slowdown reflects economic weakness rather than a warmer winter having pulled employment gains forward this year.
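The job openings comparisons are simple percentage changes. Using only figures from the paragraph (3.4 million openings in April, a gain of about one million since June 2009, which implies a base near 2.4 million, a 300,000 drop in the latest month, and a pre-crisis level near five million), a small helper makes the arithmetic explicit.

```python
def pct_change(old, new):
    """Percentage change from old to new, as a fraction."""
    return (new - old) / old


openings_now = 3.4                       # millions, end of April
openings_june_2009 = openings_now - 1.0  # implied by the one-million gain
pre_crisis = 5.0                         # millions, approximate

print(f"{pct_change(openings_june_2009, openings_now):+.1%} since June 2009")
print(f"{pct_change(pre_crisis, openings_now):+.1%} versus pre-crisis")
print(f"{pct_change(openings_now + 0.3, openings_now):+.1%} in the latest month")
```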

The primary reason the labor market is not improving is that the U.S. economy is engaged in a massive deleveraging, which usually follows a financial crisis such as the one we experienced in 2008. The American spending splurge of the 1990s and 2000s, financed by increased debt and borrowing against rising home values, proved unsustainable. The housing crash, recently shown to have been a major contributor to the 39% decline in median American family net worth between 2007 and 2010, is at the root of our economic problems. The problem is that we have not found another sector to replace the housing engine that drove our economy for nearly 20 years. Until American family balance sheets are repaired, with debt reduced and savings rebuilt, the pace of economic activity will be slow. The accompanying chart from the McKinsey Global Institute shows that the deleveraging process is well along, but by the standard of Sweden’s experience in the 1990s, we have a considerable way to go; we are less than halfway there.

Exhibit 4. Deleveraging Takes Time

Source: McKinsey Global Institute

Deleveraging is a difficult concept for most people to understand, and its economic implications are even harder to see. The pace of economic recovery will remain slow for many years, a view consistent with the Federal Reserve’s recent cut in its 2012 U.S. economic growth forecast to 1.9-2.4% from its prior 2.4-2.9% estimate. The Fed also cut its 2013 and 2014 growth estimates. Slow growth means continued high unemployment and underemployment, which in turn mean stagnant wages and consumer spending. It also means the energy industry is probably looking at weak demand for the foreseeable future.

Strange Thinking About Government Support For NGVs

We will be writing more about alternative fuel vehicles in a future Musings, but for the moment we feel compelled to comment on the thinking of one NGV supporter. Floyd Norris writes about finance and the economy at The New York Times and on its web site. He generally does a solid job, and we have used some of his analysis in various Musings articles dealing with those topics. But we were distressed by something he wrote in a recent Times article arguing that natural gas vehicles (NGVs) need federal government support.

In his column “Natural Gas for Vehicles Could Use U.S. Support,” Mr. Norris argued that the federal government has stimulated the market for alternative fuel vehicles and needs to do more to help the NGV sector. After discussing the lack of fueling infrastructure in this country and explaining why NGV manufacturers are primarily targeting companies that can support their own natural gas refueling facilities, he wrote, “Imagine if gasoline vehicles were sold only to those who could afford to build and operate their own gas station.”

I wonder if Mr. Norris would be shocked to know that the first gasoline service station wasn’t constructed in this country until 1907, some 15 years after Frank and Charles Duryea built the nation’s first gasoline-powered automobile. By 1912, five years after the first station opened, the nation had half a million gasoline-powered vehicles. Where did the gasoline to power these vehicles come from, and how did the owners get it? Gasoline was a byproduct of kerosene refining, and it was sold in a handful of liveries and dry goods stores. Motorists bought gasoline in buckets and filled their tanks using funnels.

The first service station was built in 1907 by the Standard Oil Company of California (now Chevron) (CVX-NYSE). It was located close to the company’s Seattle kerosene refinery. The station was described as little more than a shed, a 30-gallon tank and a garden hose, but it attracted upwards of 200 customers a day. Delighted by the level of business, the owners put a rain-blocking canopy on the shed, which may be described as the first customer amenity.

In 1913, Gulf Corporation (since merged into Chevron) opened the first drive-up service station. The brick, pagoda-style station was situated on a high-traffic Pittsburgh street and featured free air, water and restrooms. These services were a further recognition of the need for good customer service. Over the next few years the gasoline station business boomed. There were more than 200 new petroleum companies formed in 1916. Stations were rustic and functional. Customers pumped their gasoline by hand (the first self-service stations?) while attendants tallied the price on paper.

Given the history of the gasoline station, we question why we need the federal government building, or subsidizing, natural gas fueling stations as Mr. Norris suggests. If you hold to a philosophy that government knows best, this would appear to be a rational role for the government. We would contend that the capitalist system has always found a way to create the products and services the public wants and needs without the involvement of the government. Gasoline stations are an excellent example. If people are convinced they want NGVs because they are cleaner or more fuel-efficient, their owners will deal with the initial inconvenience of finding a natural gas fueling station. Eventually, with sufficient demand, more stations will be built, reflecting true consumer demand rather than an artificially stimulated market using your and my money.

Electric Power Consumption In The Age Of Connectivity

Only a few weeks ago, the investment world was mesmerized by the buzz surrounding the value of the initial public offering of stock in Facebook (FB-NASDAQ), the social media company created by Mark Zuckerberg while he was a Harvard University undergraduate. Mr. Zuckerberg was anointed with rock star status for the phenomenon he created, but his image was tarnished by the IPO’s flameout, which has become one of the largest IPO failures in history and is now the subject of regulatory investigations and civil lawsuits. The fact of the matter is that the social media phenomenon and the ever-expanding role of the Internet in modern life have added to the globe’s power demands and suggest further increases on the horizon. Our growing communications and information-gathering needs and wants have been met by the installation of ever more computer servers, which in turn have contributed to power consumption growth around the world.

In the United States, electricity consumption rose steadily from 1949 until the financial crisis erupted in 2008. Occasionally during that history there were brief periods of little or no growth, or even declines, in power consumption because of economic recessions. The 2008 financial crisis, coupled with the resulting recession that has since morphed into an extremely sluggish recovery, has left domestic power consumption essentially flat for the past three years. That pattern is consistent with every recession since the end of World War II; what is different is that this has become the longest period of little power consumption growth. The history of electricity consumption is presented in Exhibit 5. Besides marking the recession years, we plotted (red line) the linear trend in consumption growth over the entire period. What becomes clear is that at different times, actual power consumption has been both above and below the long-term trend line. The longest period of above-trend growth was experienced in the 1990s and 2000s, up until the economic downturn that started in 2008. A contributing factor to that extended above-trend growth was the explosion of computers, cell phones and other personal communication devices, whose growth was tied to increased Internet usage and the developing phenomenon of social media.
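The red trend line in Exhibit 5 is presumably an ordinary least-squares fit of consumption against time; the text does not say how it was drawn, so treat that as our assumption. The sketch below fits such a line in plain Python and reports how far each period sits above or below it, which is how stretches of above-trend growth can be identified. The sample series is synthetic, not EIA data.

```python
def linear_trend(xs, ys):
    """Ordinary least-squares slope and intercept for y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def deviations_from_trend(xs, ys):
    """Actual minus trend for each point; positive means above trend."""
    slope, intercept = linear_trend(xs, ys)
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]


# Synthetic series: four periods of consumption in arbitrary units.
years = [0, 1, 2, 3]
usage = [0.0, 2.0, 2.0, 4.0]
for year, gap in zip(years, deviations_from_trend(years, usage)):
    label = "above" if gap > 0 else "below"
    print(year, f"{gap:+.1f}", label, "trend")
```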

Jonathan Koomey, a consulting professor at Stanford University, has conducted two studies in the past five years measuring and forecasting the power consumption of servers in light of their rapid growth. The first study was conducted in 2007. His most recent study, published by Analytics Press and sponsored by The New York Times, used new installed server base data and server sales estimates from computer analyst firm IDC. The study found that slower growth in the installed server base, reflected in lower annual server purchases due to virtualization and the 2008 economic downturn, offset much of the expected increase in power consumption.

Exhibit 5. Long-term Electricity Growth

Source: EIA, PPHB

Professor Koomey estimated that total server center power consumption, from servers, storage, communications, cooling and power distribution equipment, accounted for between 1.7% and 2.2% of total U.S. electricity use in 2010. Those estimates are up from the 0.8% of total U.S. power consumption that servers accounted for in 2000 and the 1.5% estimated for 2005. However, they are down significantly from the 3.5% share previously projected on the assumption that the historical trend would continue, and from an alternative projection of 2.8% that assumed power-saving technologies would be adopted. The historical trends in the number of servers installed, the annual number of servers purchased and annual server power spending are shown in Exhibit 6. One trend Dr. Koomey found is that server center operators’ annual spending on electricity to power their servers is rising to rival the cost of buying the servers themselves.

Exhibit 6. Servers And Power Rise Together

Source: IDC

Worldwide server power consumption trends appear to be similar to those of the United States according to Professor Koomey. He estimated that the world’s server center power consumption accounted for 1.1% to 1.5% of all electricity used in 2010, up from 0.5% in 2000 and 1.0% in 2005. Importantly, the estimate for global power consumption was down from the previously estimated 1.7% to 2.2% of world electricity use.

To understand the dynamics of servers and their power consumption, one need only examine the chart in Exhibit 7, which shows trends in server speed and the corresponding power consumption from 1990 to 2009. The two move together because energy is required to perform computing tasks. Depending on local power rates, the electricity consumed in operating a server can cost anywhere from $300 to $600 per year. The chart shows how server growth and the demand for greater speed increased power consumption during the 1990s and the first half of the 2000s. After 2005, the rate of increase in microprocessor speed slowed, and with it the increase in power consumed.

Exhibit 7. Microprocessor Speed = Power

Source: National Academy of Sciences

One of the governing factors in the growth of servers and power consumption is the demand for faster servers to support faster Internet searches. People like speed. A 2012 microprocessor running 24/7 runs 30 times faster than a 1995 microprocessor but consumes 10 times as much electricity. Speed has a cost. When the Internet slows, people become frustrated, which reduces satisfaction and revenue per user. Researchers at Google (GOOG-NASDAQ) and Amazon (AMZN-NASDAQ) undertook some of the first performance tests, rather than relying on anecdotal evidence, of how faster or slower servers affect consumer behavior and satisfaction. Based on our own reaction to slow Internet connectivity, we can’t quarrel with their conclusions.

Exhibit 8. Consumers Dislike Slow Computers

Source: Google and Amazon

To avoid this problem, Google and others have been investing in faster servers while also pursuing lower-cost electricity. Global spending on powering servers, excluding power for storage, network and end-user devices, is now about $35 billion annually. As server center operators seek both greater efficiency (more compute tasks per dollar of electricity) and low-cost power, they are locating new centers in areas with surplus or cheap electricity. Google has been investing aggressively in “green” power projects across the nation, both to maintain favorable public regard and to promote the perception that the company is a responsible user of computing power. According to Dr. Koomey’s research, Google alone accounts for an estimated 0.8% of all server center power consumption globally and, in turn, 0.011% of the world’s total power consumption.
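The Google figure can be cross-checked against the ranges already given: multiplying Google’s estimated 0.8% share of server center power by the 1.1% to 1.5% of world electricity used by server centers gives a range that brackets the 0.011% cited.

```python
def share_of_total(part_share, whole_share):
    """Share of a grand total when part_share is a fraction of a fraction."""
    return part_share * whole_share


google_share_of_servers = 0.008                     # 0.8% of server power
server_share_low, server_share_high = 0.011, 0.015  # 1.1% to 1.5% of world use

low = share_of_total(google_share_of_servers, server_share_low)
high = share_of_total(google_share_of_servers, server_share_high)
print(f"{low:.4%} to {high:.4%} of world electricity")
```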

Exhibit 9. Leading-edge Server Centers

Source: Sun Microsystems

In a recent presentation to investors, Sun Microsystems showed a chart (Exhibit 9) highlighting how electric power use and server growth have increased over past decades. The chart predicts these trends will continue, suggesting continued growth in power demand in America. The average leading-edge server center has grown exponentially since 1975 in physical size (square feet), number of servers installed and power consumed. For a 20 megawatt (MW) server center, a facility in low-cost-energy Wyoming will cost $15 million a year less to run than a similar facility in New York, because Wyoming’s electricity costs $0.10 per kilowatt-hour (kWh) less than New York’s; that savings implies annual consumption of about 150 million kWh. Another analysis showed that a 50 MW server center in Wyoming would save $160 million over the four-year life of its servers, based on their amortization schedule.
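The Wyoming comparisons can be checked by working backward from the savings. Dividing the $15 million annual saving by the $0.10 per kWh price gap implies about 150 million kWh a year, which is what a 20 MW facility would use running at roughly 86% of its rated load around the clock. Applying the same logic to the 50 MW example ($160 million over four years) implies a price gap of about 9 cents per kWh at full load. The full-load and around-the-clock operating assumptions are ours, not the analysts’.

```python
HOURS_PER_YEAR = 8760


def full_load_kwh(capacity_mw):
    """kWh a facility would use running at its full rated load all year."""
    return capacity_mw * 1000 * HOURS_PER_YEAR


def implied_kwh(annual_savings, price_gap_per_kwh):
    """Annual consumption implied by a savings figure and a price gap."""
    return annual_savings / price_gap_per_kwh


def implied_price_gap(annual_savings, kwh):
    """$/kWh difference implied by a savings figure and consumption."""
    return annual_savings / kwh


# 20 MW case from the text: $15 million a year, $0.10/kWh cheaper.
kwh_20mw = implied_kwh(15e6, 0.10)
load_factor = kwh_20mw / full_load_kwh(20)
print(f"{kwh_20mw / 1e6:.0f} million kWh, load factor {load_factor:.0%}")

# 50 MW case from the text: $160 million over a four-year server life.
gap_50mw = implied_price_gap(160e6 / 4, full_load_kwh(50))
print(f"implied price gap at full load: ${gap_50mw:.3f}/kWh")
```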

The key factor behind lower-than-expected data center power consumption is slower growth in the installed server base than earlier projected. Using IDC estimates of the installed server base and annual server sales, Dr. Koomey estimated that the total U.S. installed base in 2010 was 11.5 million volume servers, 326,000 midrange servers and 36,500 high-end servers. The volume server figure is significantly below the 15.4 million projected in his 2007 study (which put midrange servers at 326,000 and high-end servers at 15,200). The earlier study also assumed a Power Usage Effectiveness (PUE) rating of 2.0, meaning that for every kWh used by servers to process data, another kWh is required to run the server center infrastructure, such as cooling. In the more recent study, Dr. Koomey estimated that the average PUE in 2010 was somewhat more efficient, at between 1.83 and 1.92.
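Power Usage Effectiveness is defined as total facility energy divided by the energy delivered to the IT equipment, so the overhead for cooling and power distribution equals the IT load times (PUE - 1). The sketch below applies the PUE figures from the text to a hypothetical 100 kWh of server load.

```python
def facility_energy(it_kwh, pue):
    """Total facility energy for a given IT load: PUE = total / IT."""
    return it_kwh * pue


def overhead_energy(it_kwh, pue):
    """Energy for cooling, power distribution and other infrastructure."""
    return it_kwh * (pue - 1)


it_load = 100  # hypothetical kWh consumed by the servers themselves
for pue in (2.0, 1.92, 1.83):
    print(f"PUE {pue}: total {facility_energy(it_load, pue):.0f} kWh, "
          f"overhead {overhead_energy(it_load, pue):.0f} kWh")
```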

According to Dr. Koomey, "The main reason for the lower estimates in this study is the much lower IDC installed base estimates, not the significant operational improvements and installed base reductions from virtualization assumed in that scenario." He went on to state, "Of course, some operational improvements are captured in this study’s new data…but they are not as important as the installed base estimates to the results." Looking forward, Dr. Koomey wrote that IDC forecasts show virtually no growth in the installed server base from 2010 to 2013 as virtualization becomes more prevalent, cutting the need for more physical servers. As a result, he wrote, lower data center power consumption growth can be expected. That is good news.

In August 2007, the Environmental Protection Agency (EPA) issued a report estimating that U.S. energy consumption by server centers would nearly double from 2005 to 2010, to roughly 100 billion kWh at an annual cost of $7.4 billion. It predicted the centers’ electricity demand in the U.S. would rise from 7 gigawatts (GW), or about the output of 15 power plants, to 12 GW, or the output of 25 major power plants, by 2011. In his latest study, Dr. Koomey explained the lower power consumption estimate this way: “Mostly because of the recession, but also because of a few changes in the way these facilities are designed and operated, data center electricity consumption is clearly much lower than what was expected, and that’s really the big story.”

A big question is whether a pickup in economic activity will return power growth to the historical trend associated with the growth in installed servers. Services that depend on server centers, such as cloud computing and music and movie streaming, could add to power consumption or could lead to ways of reducing projected energy consumption. Could the embrace of cloud computing and remote data storage lead to more efficient use of server centers and a reduction in the total number of server centers in use, especially company-owned centers? On the other hand, will growth in streaming media overwhelm the possible power savings from more efficient use of server centers? We doubt the upward trend in servers and power consumption will change, but the slope of that increase may be reduced. Planning the nation’s power generating capacity will become more challenging in the future, not just because of unclear trends in demand, but also because of the growth in the myriad sources of supply and their widely different generating characteristics. Add to that the challenge of meeting regulatory mandates that often create more operating problems while solving few environmental issues.

US Energy Independence: What If The Numbers Are Wrong?

A prevailing theme in global energy markets is that the oil shale boom in the United States has put the country on a path to increased crude oil production, allowing it to substantially reduce oil imports in the future. That theme extends to the impact this changed American role in the global energy market may have on future oil prices and on geopolitical developments in the Middle East. If technology and economics keep America on this path to greater output, the country’s economy will be in better shape than if it remains dependent on high oil imports, even if those imports come from our friends in North America rather than from less friendly places around the globe. But what if this belief is wrong? What could the unfortunate outcomes be?

A recent report about tight oil production from Norwegian broker Pareto highlights the importance of the oil shale revolution in the U.S. for meeting the country’s future oil needs, and especially the role two states, North Dakota and Texas, play in that scenario. According to the latest data from the Energy Information Administration (EIA), Texas accounts for about 28% of total domestic supply while North Dakota adds slightly over 9%. Oil production in both states is increasing rapidly according to EIA data, led by the shale oil drilling booms in North Dakota’s Bakken formation and the Eagle Ford trend of South Texas.

Exhibit 10. Production Driven By TX and ND

Source: Pareto based on EIA

Based on consensus estimates, Pareto says that shale oil output of 610,000 barrels per day (b/d) accounted for nearly 11% of total U.S. production of 5.7 million barrels per day (mmb/d) in 2011. Pareto projects that by 2016, shale oil production will reach 2.3 mmb/d, with Bakken and Eagle Ford output accounting for 81% of that total, up from 80% in 2011. To reach that target, Pareto sees total shale oil production increasing by between 100,000 b/d and 500,000 b/d per year. Achieving that growth will require drilling to increase steadily, because shale oil wells are known to have steep decline rates.
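Pareto’s projection implies a demanding growth rate. Using only the numbers in the paragraph, going from 0.61 mmb/d in 2011 to 2.3 mmb/d in 2016 means adding about 338,000 b/d a year on average, or compound growth of roughly 30% a year. The sketch assumes five full years between the two data points.

```python
def average_annual_increment(start, end, years):
    """Average absolute change per year."""
    return (end - start) / years


def cagr(start, end, years):
    """Compound annual growth rate between two levels."""
    return (end / start) ** (1 / years) - 1


shale_2011, shale_2016 = 0.61, 2.3  # mmb/d, from Pareto's estimates
span = 5                            # years from 2011 to 2016

step = average_annual_increment(shale_2011, shale_2016, span)
print(f"{step * 1e6:,.0f} b/d per year on average")
print(f"{cagr(shale_2011, shale_2016, span):.1%} compound growth")
print(f"shale share of 2011 U.S. output: {shale_2011 / 5.7:.1%}")
```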

Exhibit 11. Production Falls By 50% In Year 2

Source: Pareto

To sustain existing production, let alone grow it, the E&P industry will need to step up its drilling activity in these prolific oil shale basins. It would also help if the producing industry found some new oil shale plays, but according to most experts, all the shale basins in the United States have been identified, although not all have been exploited yet. A problem with the large production growth estimates is that later wells tend to be less prolific, since the best locations are usually drilled first. Thus, the number of wells needed to increase and sustain production must grow faster than it has in recent years.

Therefore, the number of active drilling rigs will need to increase sharply. The trend is demonstrated in Exhibit 12, which shows that to add 200,000 b/d of production each year in the Bakken, as Pareto projects, and assuming each rig can drill 10 wells per year, the number of additional rigs needed rises from about 65 in year one to 350 by year 15. In other words, the Pareto model says the basin will need 650 additional wells in the first year and 3,500 wells in the 15th year.
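Why the well count has to keep climbing can be illustrated with a toy “treadmill” model: each year’s new wells must make up for the decline in every older well before they add anything net. The sketch below is our own simplified illustration, not Pareto’s model; the initial rate and the decline profile (output halving in year two, consistent with the Exhibit 11 caption, then easing) are hypothetical. The rig conversion uses the text’s 10 wells per rig per year.

```python
import math


def rigs_needed(wells, wells_per_rig=10):
    """Rigs required to drill a given number of wells in a year."""
    return math.ceil(wells / wells_per_rig)


def wells_per_year(targets, initial_rate, profile):
    """New wells needed each year so total output meets each target.

    targets: required total production (b/d) in year 0, 1, 2, ...
    initial_rate: first-year b/d of a new well (hypothetical)
    profile: output in a well's 1st, 2nd, ... year as a fraction of
             initial_rate; the last value is held for older wells.
    """
    cohorts = []  # number of wells drilled in each earlier year
    for year, target in enumerate(targets):
        # Production this year from wells drilled in earlier years.
        legacy = sum(
            count * initial_rate * profile[min(year - start, len(profile) - 1)]
            for start, count in enumerate(cohorts)
        )
        shortfall = max(0.0, target - legacy)
        cohorts.append(math.ceil(shortfall / (initial_rate * profile[0])))
    return cohorts


# Hypothetical: wells start at 100 b/d, halve in year 2, then ease to 40%.
plan = wells_per_year([1000, 2000, 3000], 100, [1.0, 0.5, 0.4])
print(plan, [rigs_needed(w) for w in plan])
print(rigs_needed(650), rigs_needed(3500))  # Pareto's year 1 and year 15 wells
```

Even with a constant 1,000 b/d added each year, the toy plan needs 10, then 15, then 19 new wells, because the older cohorts keep shrinking underneath the target.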

Exhibit 12. Production Results Call For Drilling Rise

Source: Pareto

Another analysis, performed by Art Berman and presented in an Association for the Study of Peak Oil webinar last May, showed different historical results. Mr. Berman found that the first 182,000 b/d of Bakken production, reached in August 2009, required 1,636 wells at a cost of $18 billion. The second 182,000 b/d increment was attained in October 2010 and required 1,480 wells at a cost of $17 billion. The next increment of 182,000 b/d was achieved in January 2012 and required 1,480 wells at a cost of $17.1 billion. The good news in this analysis is that the industry demonstrated some efficiency gains in 2010 and 2011 compared with 2009. A big question is whether these efficiency gains will continue, or whether the industry will instead revert to its 2009 pattern.

Mr. Berman doesn’t translate his well results into rigs working, but given his results, it is hard to see how the industry can add 200,000 b/d of production annually, as the Pareto analysis projects, by adding only 65 rigs in the first year and 110 in the second, based on 10 wells per year per rig. Pareto must be assuming future wells will be much more prolific than past wells, a questionable assumption. Meeting that assumption will challenge producers to find more attractive locations, or to improve their completion techniques to boost initial well production rates and reduce decline rates. It will also be a significant challenge for the drilling industry to add the requisite number of rigs each year, and for the service industry to provide sufficient completion and workover equipment. Remember, the region where the Bakken formation is located has been the subject of numerous articles about the difficulty of hiring staff for drilling rigs and service equipment and the challenges of finding housing and locating bases for the service companies and their equipment.
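Berman’s figures can be turned into per-well productivity and cost per barrel of daily production, and then compared with the Pareto rig counts. The sketch treats each 182,000 b/d increment as a net gain that already reflects decline in older wells, which is our assumption about how the figures were built. At the 2010 and 2011 productivity, adding 200,000 b/d would take roughly 1,600 wells, or about 160 rigs at 10 wells per rig, versus the 650 wells and 65 rigs in Pareto’s first year.

```python
import math

# Bakken increments from the text: (reached, b/d added, wells, cost $bn)
BERMAN = [
    ("Aug 2009", 182_000, 1_636, 18.0),
    ("Oct 2010", 182_000, 1_480, 17.0),
    ("Jan 2012", 182_000, 1_480, 17.1),
]


def bd_per_well(bd_added, wells):
    """Net daily production added per well drilled."""
    return bd_added / wells


def cost_per_daily_barrel(cost_bn, bd_added):
    """Dollars spent per barrel per day of added production."""
    return cost_bn * 1e9 / bd_added


def wells_for(target_bd, productivity):
    """Wells needed to add target_bd at a given b/d per well."""
    return math.ceil(target_bd / productivity)


for reached, bd, wells, cost in BERMAN:
    print(reached, round(bd_per_well(bd, wells)), "b/d per well,",
          f"${cost_per_daily_barrel(cost, bd):,.0f} per daily barrel")

latest = bd_per_well(182_000, 1_480)
needed = wells_for(200_000, latest)
print(needed, "wells, or", math.ceil(needed / 10), "rigs at 10 wells per rig")
print(round(200_000 / 650), "b/d per well implied by Pareto's 650 wells")
```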

Exhibit 13. Difference In Production Estimates Grows

Source: EIA, Texas RRC, PPHB

A bigger problem for this optimistic scenario, however, may be the discrepancy in the data being relied upon to project the progress of the United States in its quest for oil independence. The estimated volume of oil production in Texas reported by the EIA is considerably different from that collected and reported by the Texas Railroad Commission, the regulator of oil and gas activity in the state. The history of these two production estimates is shown in Exhibit 13. Based on the latest Railroad Commission data, oil production is nearly 600,000 b/d less than the EIA estimates the state’s producers are pumping. As shown in the chart, the monthly difference between the two estimates has widened over the last two years. The growth in the discrepancy has coincided with the sharp upturn in drilling in the Eagle Ford shale oil trend and the emergence of a shale oil play in the Permian Basin of West Texas. Is this difference the result of poor data collection by the Railroad Commission, or are the models the EIA uses to estimate production flawed? The Texas data is based on the production information all producers must file to calculate their royalty and severance tax payments. Presumably this tax-driven data should be more accurate than computer model estimates. From everything we know about activity in these areas, drilling and well completion activity is very high, but it is possible that existing well production is not being sustained at the rates assumed in the EIA’s models. Analysts have questioned the EIA about the discrepancy between its estimates and the state production data, for both crude oil and natural gas, but to no avail.

Exhibit 14. Ongoing Data Discrepancy

Source: Art Berman

If the state data for Texas, Alaska, North Dakota and California, the top oil-producing states, is added to Outer Continental Shelf oil production, the total differs by about 800,000 b/d from the EIA’s estimate, which is based on an algorithm. Moreover, the EIA production data shows almost all of the increase occurring in the last year, while the state data shows a steady increase beginning in 2010. Businesses and the military rely on accurate data and intelligence to plan their strategies. We would hope government policy makers would demand the same. We worry about government actions being based on potentially flawed data and, importantly, on inaccurate conclusions and assumptions driven by the bad data. This could lead the government to enact energy-use policies that contribute to greater economic problems in the future.

How To Support The Highway Trust Fund Of The Future (Top)

In our last Musings, we wrote about the challenge the government faces in generating sufficient money for the Highway Trust Fund, which underwrites road maintenance and new highway construction, as miles driven decline and more fuel-efficient and alternative-fuel vehicles enter the nation’s fleet. In almost every fiscal year since 2007, the federal government has had to shift money from the Treasury to the highway fund to meet its obligations because taxes on the sale of gasoline, diesel and tires have proven insufficient. In that article we discussed the potentially negative impact of the growing number of electric and natural gas vehicles that currently pay no fuel tax. We also described the experiments underway to devise mileage-based taxing schemes in place of fuel taxes.

Last week we ran across an article based on the views of Robert Poole, director of transportation policy and Searle Freedom Trust Transportation Fellow at the Reason Foundation. He cited three problems with our existing highway funding structure: the gas tax is based on gallons of fuel sold, while average miles per gallon have doubled over the past two decades; federal policy promotes alternatives to gasoline-powered vehicles, so fewer vehicles will contribute tax revenues in the future; and fuel taxes are not indexed for inflation. As a result, fuel taxes are not providing sufficient money to pay for highways and bridges. Mr. Poole also highlighted another issue: everyone pays the same rate per gallon regardless of whether they drive on country roads and neighborhood streets that are inexpensive to build and maintain or on multibillion-dollar highways.

Mr. Poole suggested that we should shift to a miles-traveled taxing system based on technology used for collecting tolls – vehicle transponders that can record where people travel. He acknowledges that this technology creates privacy concerns. He believes the issue can be addressed by allowing those motorists who don’t want to use the technology to instead buy an unlimited number of miles with a flat annual tax. That sounds to us like a cell phone plan. We wonder whether the monthly miles will expire like the minutes, or merely roll over.

“Replacing fuel taxes is not just about ensuring adequate, sustainable funding for the highways we all depend on,” concludes Mr. Poole. “It is also the key to transforming what is now a poorly managed, non-priced, government-run system into a 21st Century network utility.” We doubt he means a stand-alone private utility; more likely he means an expansion of the federal government’s responsibilities. After Thursday’s Supreme Court health care decision, we don’t think anyone will raise this idea soon, and Congress elected to dodge the issue when it passed a transportation bill extending highway financing.

Improved Economic Prospects Due To Housing Recovery? (Top)

Lately there have been a number of articles about the end of the housing bust and the potential for additional economic impetus from its revival. One need only remember how the housing bubble helped drive consumer spending and borrowing in the late 1990s and early 2000s, and how its inflation contributed to robust employment growth in the construction trades, to understand how important a recovery in this sector is to the overall health of the domestic economy.

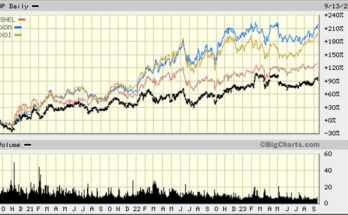

The data shows that the most recent recession had a greater negative effect on construction employment than any other in the postwar period. Because the percentage decline hit a larger-than-ever construction labor force, the job losses have been huge and are one of the primary reasons for the lack of economic recovery. But that concern seems to be fading, based on the reaction of investors and economists to the latest housing data and the stock market performance of housing-related companies.

Exhibit 15. Recession Hits Construction Jobs

Source: BLS, PPHB

The key data that drove this changed view of the housing market was the S&P/Case-Shiller home price index for April, released last week. According to the data, prices in 20 U.S. cities rose 0.7% from March after adjusting for seasonal variations. On a year-over-year basis prices fell 1.9%, but that was the slowest pace of decline in over a year. Importantly, 19 of the 20 cities saw price gains and 18 of 20 saw improved annual returns. No city in the index set a new price low. The results beat experts’ expectations and suggest housing is stabilizing. The chart of the Case-Shiller index in Exhibit 16 shows the latest monthly uptick and highlights how it follows several months of essentially flat home prices.

Exhibit 16. Have Home Prices Bottomed?

Source: MoneyGame.com

Enthusiasm for housing stocks has lifted the S&P 500 home-building index, which led all 154 industry groups within the benchmark index for the first six months of 2012. The rebound reflects perceptions of improved industry fundamentals: recent gains in new home sales, decreases in unsold home inventories and upticks in builder confidence. These hints of improving fundamentals are enough to get the juices of stock traders flowing over the earnings prospects for home-building stocks. A problem with the enthusiasm about the recent data improvements, however, is that they are coming from such low levels. Consider the housing starts data. In May, home builders started 708,000 new residential units at a seasonally adjusted annual rate. That is above the recent lows, but in the 50 years prior to the housing bust, starts never dipped below one million units, and during the 2003-2006 period they were consistently above two million units. This is part of the reason the Federal Reserve continues to describe the housing sector as “depressed.”

Exhibit 17. Home Sales Helped By Lack Of Foreclosures

Source: Agora Financial

Another consideration is the context in which the housing data is being generated. The new single-family home sales data shows that the uptick came partly because fewer foreclosed homes were coming to market, as banks halted foreclosures while they negotiated an agreement with regulators over their improper handling of mortgages on underwater homes. As Barry Ritholtz, chief of Fusion IQ, pointed out, “There is a tendency among many analysts to forget about the context in which the residential real estate market has stabilized.” Without distressed assets in the mix, he noted, prices may appear to be improving when the change is really a mix issue. Likewise, without distressed homes, which are often sold at less than replacement cost, new homes face less price competition and sales can rise, helping to drive up average home sale prices. David Rosenberg, chief economist at money manager Gluskin Sheff, speaking on Bloomberg TV last week, estimated that “there is between two and three million excess housing units on the market for sale when you count in all the shadow inventory, so you’re talking about at least another two or three years to clear the inventory and put a definitive floor under home prices.”

So while it is nice to have better real estate statistics, given the low levels they represent and the possibility that their improvement reflects the absence of distressed properties rather than a true fundamental improvement, we should be careful about getting too excited. We understand the argument that the stock market is a leading indicator, so the rise in home-building stocks may be a precursor of better times for the sector, but we hasten to remind readers of the late economist Paul Samuelson’s warning that “The stock market has called nine of the last five recessions.” Stock market moves are not perfect predictors.

RI Electric Power Sources Reflect Mish-mash Of Fuels (Top)

An insert in the National Grid (NGG-NYSE) power bill for our home in Rhode Island carried an interesting table showing power sources and their contributions to the electricity consumed in the state. We were intrigued by the list (shown in Exhibit 18) and called the company seeking more information. We suspected that the representative we talked to wouldn’t know the answers to our questions, and we were not disappointed. He kept saying that he hadn’t been trained on this topic, but offered to have someone call us back. About an hour later a supervisor did call, but he didn’t know the answers either and went off-line to discuss our questions with his boss. When he came back on the line, he said that his boss didn’t know but had started to Google the topics. He suggested that it would be faster for us to do the same, and said he planned to do so when he got home, as he was intrigued by what we were asking.

Our first question was: what is Digester Gas? According to The Free Dictionary, digester gas is really biogas, which is “a mixture of methane and carbon dioxide produced by bacterial degradation of organic matter and used as a fuel.” That answer, which matched what we had thought, immediately led to our second question: why isn’t this included under Biomass? Likewise, we wondered, why aren’t Landfill Gas, Municipal Solid Waste, Trash-to-Energy and Wood all considered part of Biomass? The National Grid representative’s answer was that the company only publishes the information; its collection is mandated by the state energy office.

Exhibit 18. Power Sources For Rhode Island

Source: National Grid, PPHB

Another question was what is meant by Jet. We are hard-pressed to think that electricity is being generated by jet engines, unless they are part of a combined-cycle peak power plant. In that case, we wondered why the fuel for the jet engine wasn’t reported instead, as it would be of greater informational value than the generating source. Seeking answers to our questions, we emailed the Rhode Island State Energy Office but have yet to hear back. At the end of this quest for knowledge, we suspect the data collection is driven by people who don’t know the difference between fuels and power sources. Therefore, we recast the information from the insert by fuel source, the way such data is usually displayed.

Exhibit 19. Rhode Island Power By Fuel

Source: National Grid, PPHB

When fuel sources are considered, nearly 54% of the state’s electricity is generated by fossil fuels. Nuclear provides nearly 29% of the power, while renewables, including biomass and hydroelectric, represent 10%. What we don’t know is the fuel source for the imported power, but if we had to guess, we would suggest at least half is generated by fossil fuels, with the balance from hydroelectric power, which means fossil fuels account for nearly 58% and renewables approach 14% of the electricity consumed. This compares with the national breakdown for 2011 of 68% fossil fuels, 19% nuclear and 13% renewables.
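The recast percentages can be checked with a short sketch. It uses the rounded shares quoted above and assumes, as we do in the text, that whatever the fossil, nuclear and renewable categories leave unexplained is imported power, split half fossil-fired and half hydroelectric. The `recast_mix` function and its `fossil_share_of_imports` parameter are hypothetical names for illustration.

```python
# Fold Rhode Island's unexplained remainder (assumed to be imported power)
# into the fossil and renewable shares. Inputs are the rounded percentages
# from the recast table; the 50/50 split of imports is our guess, not data.

def recast_mix(fossil: float, nuclear: float, renewable: float,
               fossil_share_of_imports: float = 0.5) -> dict:
    """Return percentage shares with the assumed imports reallocated."""
    imports = 100.0 - (fossil + nuclear + renewable)
    return {
        "fossil": fossil + imports * fossil_share_of_imports,
        "nuclear": nuclear,
        # Treat the non-fossil portion of imports as hydroelectric (renewable).
        "renewable": renewable + imports * (1.0 - fossil_share_of_imports),
    }


mix = recast_mix(fossil=54.0, nuclear=29.0, renewable=10.0)
for source, share in mix.items():
    print(f"{source:>9}: {share:.1f}%")
```

With the default 50/50 split, the fossil share comes to 57.5% and renewables to 13.5%. Since we guessed that at least half of imports are fossil-fired, raising `fossil_share_of_imports` shows how far the fossil share could climb from there.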

We believe our recast power source table provides more useful information for Rhode Island electricity consumers about how fuel choice influences their monthly bill, their exposure to changes in the cost of power, and how the debate over how to fuel the New England region of the country could affect their future power bills. The mishmash of information in the National Grid table, recognizing that its contents are determined by state energy regulators, really doesn’t tell consumers much. It conveys the impression that their electricity is generated by a large portfolio of power sources, which it is, but it does not make clear that many of those sources use similar fuels. It is reminiscent of the California politician who several years ago said that his state didn’t need more oil and gas because it had electricity. Ignorance is bliss unless you’re in charge of developing the nation’s energy policy.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.