- Foreigners Asking For Certainty Should Read U.S. History

- Learning How Much Climate Scientists Don’t Know

- Natural Gas Output Continues Up Despite Rig Count Drop

- Rhode Island, Electric Cars And A Revolution?

- Obama Climate Speech Confuses Keystone Analysis

- More Analysts Questioning End Of Auto Love-Affair

- Defending Against Rising Sea Levels Still Ignores Reality

Musings From the Oil Patch

July 9, 2013

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Foreigners Asking For Certainty Should Read U.S. History

We were amused to read in a Washington energy newsletter about a hearing in Congress a few weeks ago dealing with the impact of the gas shale revolution on the future of manufacturing in America. One of those testifying was an energy executive of a foreign-based company planning a huge new petrochemical and refinery complex in Louisiana, who suggested it would help his company’s decision-making process to know that U.S. energy policy would be stable for the long term.

Testifying before a joint hearing of the Subcommittee on Energy and Power and the Subcommittee on Commerce, Manufacturing, and Trade of the Committee on Energy and Commerce of the U.S. House of Representatives on June 20th, was André de Ruyter, Senior Group Executive, Global Chemicals and North American Operations of Sasol Limited (SSL-NYSE). The topic of the hearing was “A Competitive Edge for American Manufacturing: Abundant American Energy” and focused on the impact the shale revolution has had in generating significant gas volumes that have reduced gas prices and have provided manufacturing industries with a globally-competitive cost advantage.

Sasol proposed last December to begin front-end engineering and design (FEED) work for a world-scale ethane cracker and an integrated gas-to-liquids (GTL) facility to be co-located on property adjacent to the company’s existing chemical complex near the town of Westlake, Louisiana. The complex has an estimated price tag of $16-21 billion and will represent the single largest manufacturing investment in Louisiana’s history and possibly one of the largest foreign direct investments in a manufacturing project in American history. Louisiana has offered Sasol $2 billion worth of tax credits and other incentives for locating the plant in its state.

The world-scale ethane cracker will enable Sasol to expand its differentiated ethylene derivative business in the United States. The facility will produce an estimated 1.5 million tons per year of ethylene, helping to strengthen U.S. manufacturing, boost exports and stimulate economic growth. By adding ethylene manufacturing capacity, Sasol will be able to further strengthen its position in the global chemicals business. The plant will produce a range of high-value ethylene derivatives including ethylene oxide, mono-ethylene glycol, ethoxylates, polyethylene, alcohols and co-monomers. The final investment decision will be made during 2014.

The GTL plant will produce 96,000 barrels a day of clean diesel, a product with very low sulfur content and a high cetane rating, which makes it an excellent blending product that enables traditional crude-produced diesel to meet the low-sulfur requirements of California and a few other locations. Exhibit 1 shows a schematic of the GTL process as displayed on Sasol’s web site. Creating a liquid transportation fuel from natural gas has been a significant hurdle to developing a major new growth market for the nation’s abundant and growing gas output. Liquefied natural gas (LNG), which is created by super cooling the gas and maintaining it as a liquid, presents meaningful infrastructure challenges to providing the fuel to the domestic transportation market on anything other than a localized basis. On the other hand, GTL is a liquid and a super diesel blending component since it has virtually no sulfur and a high cetane rating. One impact of GTL could be to increase the number of diesel-powered vehicles in the U.S. In Europe, where government policy favors diesel and taxes it more lightly than gasoline, in part because it is also used as home-heating fuel, diesel-powered vehicles far outnumber gasoline-powered ones.

Exhibit 1. Sasol’s Proprietary GTL Process

Source: Sasol web site

Mr. de Ruyter included two charts in his testimony that demonstrated the rationale behind Sasol’s decision to consider this massive GTL investment. Mr. de Ruyter said, “The U.S. shale gas revolution, coupled with the current wide differential between gas and oil prices (which we anticipate to persist over the long term), have created attractive opportunities for Sasol’s further growth and investment in the U.S. market.” The two charts show the U.S. Energy Information Administration’s view of the future supply of natural gas in the U.S. and how much its future growth is dependent on the gas shale revolution (in red in Exhibit 2). Equally important is the view that domestic gas prices will remain significantly below their long-term relationship with global oil prices, as represented by Brent crude oil. The long-term forecast calls for the historically high ratio between the two fuel prices to settle out in the future at twice the historical average. Mr. de Ruyter indicated that Sasol believes this price disparity will continue well into the future. What happens if it doesn’t? We hope Sasol is considering that alternative.

Exhibit 2. Shale Gas Is Key To Abundance View

Source: de Ruyter testimony

Exhibit 3. Gas Prices Will Be Half As High As In The Past

Source: de Ruyter testimony

For a company considering investing $16-21 billion in a new fuel complex, its decision-makers want to know that U.S. energy policy will remain stable for the long term. In response to a question from Rep. Steve Scalise (R-La.) about any impediments Sasol has experienced so far during the regulatory approval process for the plants, Mr. de Ruyter stated, “One of the impediments we see is the need for regulatory certainty.” He went on to say, “We need things to be predictable and to remain stable for the long term. Once we have that, we’ll be in a pretty good position to make a large investment.” For Mr. de Ruyter, having the permits “considered and extended” efficiently by the regulatory agencies that must approve the project on environmental grounds would be helpful to Sasol. A brief review of how the Environmental Protection Agency (EPA) has used the powers assigned to it under the Clean Air Act, and of subsequent court decisions about carbon emissions, would be instructive about how energy policy can shift radically over time. Moreover, we suggest Mr. de Ruyter and his colleagues would be wise to review the history of natural gas regulation in the United States to see how often it made sharp turns, usually in response to perceived problems in the gas market, which then created long-term problems never anticipated.

Many people fail to appreciate that natural gas has been subject to over 100 years of regulation that has become increasingly intrusive for the industry in response to government efforts to protect consumers. Often the regulatory changes implemented to correct perceived market imbalances resulted in significantly greater industry problems in the future. For example, in the mid-1880s, natural gas was produced from coal beds and was consumed in the same municipality. As a result, local governments recognized the potential for abusive actions in establishing prices and conditions due to utility company monopoly power through control over both the supply and marketing of the gas. By the early 1900s, natural gas was being shipped between neighboring towns, each subject to local regulations. There was, however, a gap in regulation over the pipeline connecting the towns. States stepped in to provide regulation, which led to the establishment of public utility commissions (PUCs) or public service commissions (PSCs) to oversee these intrastate pipelines. The earliest commissions were established by New York and Wisconsin in 1907 and expanded the scope of government regulation of the gas industry.

Eventually technology enabled pipelines to be built that moved gas long distances, often crossing state boundaries. Between 1911 and 1928, states attempted to regulate these long-haul pipelines, but the Supreme Court consistently ruled they were violating the interstate commerce clause, so the regulations were judged to be unconstitutional. In response to these regulatory challenges, in 1935, the Federal Trade Commission issued a report on market power in the natural gas industry. It found that over 25% of the interstate pipelines were owned by 11 holding companies that also controlled a significant portion of natural gas production, distribution and electricity generation. This led to the enactment of the Public Utility Holding Company Act of 1935. That law didn’t regulate interstate gas sales, so Congress enacted the Natural Gas Act of 1938 (NGA).

The NGA empowered the previously established Federal Power Commission (FPC) to regulate interstate natural gas sale prices and it provided the FPC with limited certification powers. The act specified that no new interstate pipelines could be built to deliver natural gas into a market already served. In 1942, these certification powers were expanded to encompass approval of any new interstate pipeline being built. During the early 1940s, the Supreme Court determined that wellhead gas prices were subject to federal oversight if the selling producer and the purchasing pipeline were affiliated companies, i.e., owned by the same holding company. The Court also determined that if the companies were unaffiliated, then there were sufficient natural market forces to keep prices competitive.

In 1954, the Supreme Court ruled in Phillips Petroleum Company vs. Wisconsin that a natural gas producer selling gas output to interstate pipelines would fall under the classification of “natural gas companies” in the NGA and therefore was subject to regulation. In response, the FPC instituted a “cost-of-service” rate-making procedure. Natural gas would be priced based on its cost to produce plus a “fair profit” rather than its market value. This form of rate setting worked for regulating a few pipelines, but with hundreds of producers, it quickly became an administrative nightmare. The full impact of this “mistake” in rate-setting policy would lead, in less than two decades, to critical gas supply shortages.

Between 1954 and 1960, the FPC worked on gas prices on an individual basis. In 1959, the FPC received 1,265 applications from producers but was only able to settle 240 cases. In response to the administrative burden, the FPC shifted to an area rate-setting policy. The FPC process was to establish “interim rates” until it could complete its final analysis. By 1970, it had only completed rate-setting for two of the nation’s five producing areas. Furthermore, the prices were fixed at 1959 levels. In 1975, the FPC moved to a national price, which was set at $0.42 per thousand cubic feet (Mcf) of gas. The national price was essentially double the prices set in the 1960s, but was still well below market prices. For example, at this time, natural gas selling in the Texas and Louisiana intrastate markets, and not subject to FPC regulation, was going for $7-$8/Mcf. One impact of FPC price regulation was that in 1965, one-third of the nation’s gas reserves were dedicated to the intrastate gas market. A decade later intrastate gas markets controlled over half of all gas reserves. The ability of American manufacturers to secure gas supplies there, even at extraordinarily high prices, created a stampede of these companies to gas producing states. This price imbalance became a serious problem in 1976-1977 when schools and manufacturing plants in the Midwest, totally dependent on interstate gas supplies, were forced to shut down due to the lack of gas.

In response to these serious gas shortages, Congress passed the Natural Gas Policy Act, which established “maximum lawful prices” for wellhead prices. This act broke down the barriers between interstate and intrastate markets. The FPC was abolished, and in its place the Act created the Federal Energy Regulatory Commission, which assumed the responsibilities of the FPC except for regulating natural gas exports and imports, which remained under the purview of the newly established Department of Energy. The Act also directed that by 1985, natural gas prices would be totally decontrolled. In response to the provisions of the Act, interstate pipelines signed up for long-term supplies under “take-or-pay” contracts with producers. No sooner was the ink dry on these contracts than gas demand fell.

In contrast to the 1960s and 1970s when gas customers were curtailed due to high demand and low supply, this time high gas prices caused demand to fall, forcing gas customers to curtail consumption. To address the high-priced, take-or-pay gas supply conundrum, pipeline companies established Special Marketing Programs (SMPs) to allow customers to purchase lower-priced gas and have the pipeline transport it to the customer. These SMPs were struck down by the courts as being discriminatory. In 1985, FERC instituted Order 436 under which an interstate pipeline could voluntarily act as a transporter of gas as long as all its customers had access to SMPs. While unblocking gas markets, the resolution forced pipelines into litigation with producers over their take-or-pay supply contracts.

To ease the litigation problem that threatened to bankrupt nearly every major pipeline company, FERC issued Order 500. That order forced the buyouts of take-or-pay contracts with the cost to be passed on to local distribution companies (LDCs). State regulators agreed to allow LDCs to pass the additional costs on to their retail customers. In 1989, the Natural Gas Wellhead Decontrol Act was passed that established January 1, 1993, as the date on which all regulations over wellhead prices were to be eliminated. The deregulation was accomplished by establishing that the very first sale of gas was decontrolled, although price regulations might be applicable to later transactions along the trail from wellhead to customer burner tip. To facilitate decontrol, FERC issued Order 636 that allowed the complete unbundling of transportation, storage and marketing of natural gas.

Sasol executives seeking a stable, long-term energy policy should note how the 1954 Phillips decision regulating wellhead gas sold to interstate pipelines led to massive gas shortages 20 years later, which prompted further regulatory responses. And they should also consider how the path to gas-industry decontrol contributed to the surprising demand drop that nearly destroyed the pipeline industry because of the take-or-pay supply contracts they had entered into. In each case, the courts and regulators believed their policy actions to correct market imbalances would ensure long-term industry stability. No one considered how markets might change in the future in response to the new policies.

Our best advice to Mr. de Ruyter and his Sasol colleagues is to run a number of scenarios, including the most “off-the-wall” ones anyone can conceive, even if it seems they couldn’t possibly happen. If the project’s economics support the investment, then they should consider moving forward. This strategy is a better insurance policy than counting on future Congresses, regulatory agencies, administrations and/or the courts to ensure a stable long-term energy policy. History says we’ve never had an energy policy before, although others might say that all those energy acts from the 1990s through 2000s count, so why should we think the nation will develop a long-term energy policy now?

Learning How Much Climate Scientists Don’t Know

For the past decade there has been a war waged by climate scientists claiming that the increased frequency of tropical storms in the Atlantic Basin is due to global warming. These claims were loudly proclaimed following Sandy, at one point a Category 1 hurricane that made landfall in the New York metropolitan area last October. A new research paper from scientists associated with the British Meteorological Service and published in the journal Nature Geoscience shows that cleaning up the atmosphere over the past 40 years may have contributed to the recent increase in tropical storm activity. “Our results show changes in pollution may have had a much larger role than previously thought,” said Nick J. Dunstone, a researcher with Britain’s meteorological service and the lead author of the new paper.

The paper found that atmospheric pollution caused by the industrialization of North America and Europe during the 20th Century may have contributed to clouds that actually cooled the Atlantic Ocean. That cooling, in turn, reduced the frequency and intensity of tropical storms from what might have occurred in a natural climate. The clean air acts have cleaned up the atmosphere, thus reducing the cooling impact of air pollution and contributing to a heating of the ocean and the spawning of more and stronger storms.

The interaction of clouds with global warming models has been an area of intense criticism by scientists questioning the climate model forecasts of catastrophic outcomes if the planet is allowed to warm by 2° Celsius. The main impact on storm patterns comes from particles of sulfur dioxide that enter the air from the combustion of sulfur-laden fuels like coal and petroleum products. Water condenses on these particles, and a substantial volume of them in the air can change the properties of clouds, causing them to be made up of finer droplets. That change brightens the clouds and causes their tops to reflect more sunlight back into space, reducing the sunlight that reaches the ocean’s surface and limiting how much it heats up.

The author of a New York Times article on the results of this study interviewed five climate scientists who were not involved in the paper. They all agreed with the words of Kerry Emanuel, an atmospheric scientist at the Massachusetts Institute of Technology and a leading global warming/increasing storms advocate, who said the results were “entirely plausible.” The British study’s lead author said there needed to be much more study, but he acknowledged that the study employed the latest and most sophisticated climate model. He also pointed out that there are many reasons to continue to clean up the atmosphere because of pollution’s effects on human health.

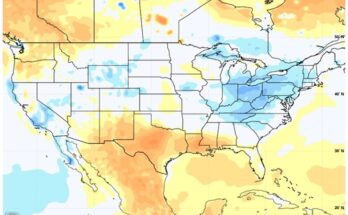

Exhibit 4. Cleaning The Air Started In Early 70’s

Source: Colorado State University

Leading hurricane forecaster Bill Gray, a professor in the Department of Atmospheric Science at Colorado State University, has long claimed that hurricane frequency and intensity are tied to natural cyclical weather patterns that promote or retard the development of storms. He has produced the charts in Exhibit 4 that show how the frequency of storms has increased in recent years. Dr. Gray also acknowledges we have much better coverage of weather and storms across the Atlantic today, so past storms may have been overlooked that might alter the frequency calculations. In any case, Dr. Gray has established clear links between tropical storm frequency and strength and historical natural meteorological cycles, not global warming. The key point of the new British study is that it shows how little past climate models have been able to account for critical weather variables such as clouds and their feedback mechanism on global climate. So is it possible the climate change hysteria has been driven by faulty computer models?

Natural Gas Output Continues Up Despite Rig Count Drop

Observers of the natural gas market expressed distress about the latest monthly data from the Energy Information Administration (EIA) based on its survey of producers. The initial data for April showed that on a gross withdrawal basis, total U.S. natural gas production increased by 0.46 billion cubic feet a day (Bcf/d). If April’s gross data is compared against the revised March data, the increase grew to 0.49 Bcf/d. Since April production in Alaska was down, the all-important Lower 48 production increase was more than half a Bcf/d.

We show the various state and regional production changes between April and March on both gross and revised measures in Exhibit 5. The Gulf of Mexico showed a small increase on both measures while Louisiana was off sharply due to pipeline and gas plant processing issues. The primary increase was in the Other States, which includes the Marcellus formation in Pennsylvania, West Virginia and Ohio and the associated natural gas produced along with the shale oil from the Bakken formation of North Dakota and Montana. Until the various states begin publishing monthly data as requested by the EIA, we will be challenged to know which basins outside of the main producing states are the primary contributors to this growth trend.

Exhibit 5. Gas Supply Continues Growing

Source: EIA, PPHB

There are several interesting data points in the table. Note that on a gross basis, production in Texas was flat between the two months, but on the revised March data, it grew by 0.11 Bcf/d. That means March production data for Texas was revised lower by the volume of the increase. We went back and examined the performance of the revised monthly data compared to the initial production estimate from March 2012 to March 2013. For the period, there were four monthly increases and nine reductions. The change in production over the period on a gross basis was 0.29 Bcf/d while it was less than half of that amount based on the revised data (0.14 Bcf/d). For the largest gas producing state in the nation, this strikes us as a significant trend. On the revised production figures, Texas saw its production only increase from 21.91 to 22.05 Bcf/d, essentially flat, over the past year. We know that in response to low natural gas prices, drillers have shifted their drilling focus to the oil shale and liquids-rich areas within the Eagle Ford formation and away from the dry gas areas.
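The revision arithmetic in this discussion can be checked with a few lines of Python. This is only a sketch using the Bcf/d figures quoted in the text; the function and variable names are our own, and it assumes both month-over-month increases are measured to the same April figure, so the difference between them is the implied change to the March estimate.

```python
def implied_revision(increase_vs_initial, increase_vs_revised):
    """Implied revision to the prior month's estimate, in Bcf/d.

    Both increases are measured to the same current-month figure, so
    the gap between them is the change made to the prior month.
    A negative result means the prior month was revised lower.
    """
    return round(increase_vs_initial - increase_vs_revised, 2)


# Month-over-month increases quoted in the text (Bcf/d).
us_march_revision = implied_revision(0.46, 0.49)     # total U.S.
texas_march_revision = implied_revision(0.00, 0.11)  # Texas

# Texas growth from March 2012 to March 2013 on the revised data,
# compared with the 0.29 Bcf/d shown by the initial estimates.
texas_revised_growth = round(22.05 - 21.91, 2)
share_of_initial_growth = texas_revised_growth / 0.29

print(f"Implied U.S. March revision:  {us_march_revision:+.2f} Bcf/d")
print(f"Implied Texas March revision: {texas_march_revision:+.2f} Bcf/d")
print(f"Texas revised growth: {texas_revised_growth:.2f} Bcf/d "
      f"({share_of_initial_growth:.0%} of the initial-data growth)")
```

Framing the revision as a simple difference makes it easy to repeat the check each month as the EIA updates its survey.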

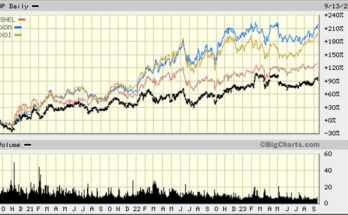

Exhibit 6. Eagle Ford Rig Count In Downturn

Source: Art Berman

We can see some of that drilling shift in the chart of the Eagle Ford shale rig count. The total rig count peaked in early 2012, and then slid to a low about mid-year 2012 before recovering slightly. It has remained essentially flat over the past nine months. Given the steep production decline of shale gas reservoirs, the recent slowdown in drilling is likely contributing to the very modest production growth as new high-producing wells are barely offsetting the declines of older producing wells.

Exhibit 7. Shale Revolution Changes Gas Market

Source: EIA, Baker Hughes, PPHB

Since the EIA started its monthly gas production survey in 2005, output growth versus drilling rig activity has been closely monitored. In the survey’s early years up to the start of the global financial crisis in 2008, gas production growth was closely associated with a rising gas-oriented drilling rig count. The financial crisis, which not only impacted energy demand but seriously impacted oil and gas industry financial liquidity, caused a sharp fall in the drilling rig count. Gas production fell briefly but then resumed its upward climb as the rig count recovered. It was during this period that the significance of the gas shale revolution became clear. After rebounding, working gas rigs declined but output continued rising. From June 2010 to now, other than during a brief drilling rig rebound in late 2011 in response to a hoped-for rise in natural gas prices, the rig count has steadily fallen while gas production has risen.

Exhibit 8. Lower Gas Rig Count Slows Gas Supply Rise

Source: EIA, Baker Hughes, PPHB

If we focus on the link between gas drilling and gas production growth onshore in the Lower 48 states (essentially capturing the shale production) during the past 30 months, it becomes clear that following the drilling peak in September 2011 activity has steadily declined. From the start of 2011 to its peak in November 2012, gas output grew consistently. Since that peak, production has been flat although exhibiting monthly volatility. The falling rig count and aging producing wells are being felt in the gas market.

Looked at over an even shorter period, one can see how gas output has declined. We have plotted initial and revised monthly gas volumes for the Lower 48 land market since January 2012 in Exhibit 9. The differences between the two lines show the positive and negative revisions to initial production estimates. Revised production peaked three months before the initial estimate did. Although subtle, the decline from the revised peak is slightly steeper than for the initial production data. If these declines continue for the next several months, we can conclude that the long and deep decline in gas-oriented drilling has had its desired effect on curtailing gas supply growth.

Exhibit 9. Land Gas Supply Has Peaked

Source: EIA, PPHB

If demand increases, especially as the nation heads into winter, there likely would be upward pressure on natural gas prices. How high they rise will be a function of both supply and demand, but the interaction between coal and natural gas prices in the electric generation market will impact that relationship. Low coal prices have limited the erosion in coal’s share of power generation from cheaper and cleaner natural gas. How quickly the Obama administration gets the Environmental Protection Agency to institute stricter regulations on carbon emissions from existing coal-fired power plants will determine how fast coal’s future demand is restricted in favor of natural gas.

Exhibit 10. Coal Regaining Power Market Share

Source: EIA

What else might influence the price of natural gas? The growth of natural gas output, despite the long slide in gas-oriented drilling activity, suggests producers have been high-grading gas prospects, ensuring they produced the largest volumes possible despite low prices and thereby maximizing wellhead revenues. Producers also were looking for prospects with the lowest drilling and completion costs in order to maximize well profitability in this low-price environment.

Another factor impacting drilling prospect selectivity has been the weak financial results of exploration and production companies. An analysis conducted by Art Berman of the financial performance of four of the leading gas shale E&P companies – Chesapeake Energy Corp. (CHK-NYSE), Southwestern Energy Co. (SWN-NYSE), Devon Energy Corp. (DVN-NYSE) and EOG Resources, Inc. (EOG-NYSE) – for 2008-2012 showed they had a combined cash flow of $80.1 billion but capital expenditures of $132.8 billion, meaning the companies outspent their cash flow by $52.7 billion, a shortfall that was financed with increased debt, new equity raises or asset sales. Also, the companies were forced to reduce (impair) the stated value of their oil and gas reserves by a combined $42.4 billion. These results negatively impacted the companies’ share prices. But these were not the only companies that suffered financially from the reversal in fortunes of the gas shale business from what was envisioned in the mid-2000s. For example, Australian minerals and energy producer BHP Billiton Limited (BHP-NYSE) was forced to take a $2.84 billion impairment to the value of the gas shale assets it purchased barely 18 months earlier from Chesapeake and Petrohawk for $4.75 billion. These results reflect the clash of gas shale optimism versus low gas price reality.
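For readers who want to rerun the numbers, the funding shortfall can be reproduced in a short Python sketch. It uses only the dollar figures quoted above (in billions); the variable names and the two derived ratios are our own illustration and are not part of Mr. Berman’s analysis.

```python
# Combined 2008-2012 figures for the four E&P companies, $ billions,
# as quoted in the text.
cash_flow = 80.1
capital_expenditures = 132.8

# Spending in excess of cash flow, which had to be financed externally.
funding_gap = round(capital_expenditures - cash_flow, 1)

# Dollars spent for every dollar of cash flow generated.
spending_per_dollar = capital_expenditures / cash_flow

# BHP impairment relative to the purchase price quoted in the text.
bhp_impairment_share = 2.84 / 4.75

print(f"Funding gap: ${funding_gap:.1f} billion")
print(f"Capital spending per $1 of cash flow: ${spending_per_dollar:.2f}")
print(f"BHP impairment as share of purchase price: {bhp_impairment_share:.0%}")
```

On these figures the four companies spent roughly $1.66 for every dollar of cash flow they generated, which is why outside financing was unavoidable.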

The confluence of trends in the natural gas market, coupled with poor financial results by producers during the past few years, suggests that Economics 101 is beginning to have an impact on the behavior of the market’s participants. Although energy market observers worry that natural gas production continues to grow despite a falling rig count, as we have shown, onshore gas production in the Lower 48 states where the gas shale revolution has been underway for nearly a decade is showing signs of a moderation in output growth. If gas prices jump up from current levels and stimulate additional drilling, this output growth pause might end, defeating a necessary market correction following the gas industry’s imprudent rush to corner the nation’s shale assets. The financial damage inflicted on the gas industry over the past few years will continue if the pause ends, forcing a more drastic restructuring on the business. Gluttony is always followed by severe indigestion, and for the gas industry we may still need more bottles of Pepto-Bismol before this episode ends.

Rhode Island, Electric Cars And A Revolution?

Several weeks ago the Rhode Island media was promoting the green revolution underway in the state with the opening of the state’s tenth public electric vehicle charging station. As various reports pointed out, the state had nine stations until the new one was opened on June 18th at Roger Williams University. The ceremony was attended by Governor Lincoln Chafee (RI-IND), state officials and three electric vehicles – a Chevy Volt, a BMW Active E and a Tesla S – all strong believers in a green economy.

Al Dahlberg, founder of Rhode Island’s EV planning program, Project Get Ready, is working to install another 49 charging stations by August 15th when federal stimulus money must be spent. He has a real challenge since as of mid-June the remaining locations for the additional charging stations had not been determined. Mr. Dahlberg is working with the state, National Grid (NGG-NYSE), the state’s primary electric power provider, the private charging network, ChargePoint, and the University of Rhode Island to install the stations.

According to various media stories about the ceremony, speakers discussed the positive benefits of electric vehicles for the environment and citizens. They did note the impediments to the growth of electric vehicles on the road, which include the high cost of buying or leasing vehicles and range anxiety. Vehicle costs are coming down as manufacturers reduce prices and federal and state tax credits remain in place. The key to overcoming range anxiety is to have many charging stations available. As one speaker put it, “Build it and they will come.”

Exhibit 11. Fastest Electric Car In The World

Source: Geeksaresexy.net

Recently, we were fascinated watching the video of a British baron driving his electric race car over 200 miles per hour (mph). The car, owned by Drayson Racing and driven by Lord Drayson, hit 204.2 mph, some 30 mph better than the previous world record for an electric-powered vehicle. The intriguing point was Lord Drayson telling the reporter interviewing him that the car’s battery was powered through inductive charging. The basic idea of inductive charging involves the creation of an electromagnetic field through coiled wires in the road that induces a complementary charge in similar coils in the electric car above it. You may be familiar with the process through the plug-less charging of the Powermat inductive charger for cell phones and other small electronics. The physics of this short-range wireless transfer of electricity are sound; the problem is the impracticality of digging up roads to install expensive inductive charging circuits. The use of this technology will be limited to race tracks for now, but its prospect as an alternative to the battery-storage issues plaguing electric vehicles is intriguing.

By this Labor Day, Rhode Island expects to have 59 charging stations operating. That got us thinking about how the state compares to Houston and Austin, which are similar to Rhode Island on various measurements. The State of Rhode Island and Providence Plantations contains 1,034 square miles of area with 1.05 million residents as of 2012. In contrast, the City of Houston has 2.16 million people residing in 627.8 square miles, while the City of Austin is 272 square miles in area and has 795,000 residents. Compared to Rhode Island, therefore, Houston has slightly over 60% of the area and just over twice the population, while Austin has about one-quarter of the area and roughly three-quarters of the residents. Based on highway statistics from the U.S. Department of Transportation, Houston has roughly 1.29 million vehicles while Austin has 596,000. Those fleets compare to Rhode Island’s 478,000 vehicles. Earlier this year, Houston had 42 electric vehicle charging stations and Austin had 53, compared to Rhode Island’s nine. Austin was building stations at a pace that should put 153 in operation by the end of summer, while Houston will have well over 200. That compares to Rhode Island’s ten stations now and its goal of 59 by Labor Day. That may be a revolution in the minds of Rhode Island government officials, but it certainly pales compared to Austin’s commitment to electric vehicles. Promoting electric cars is an interesting development for the capital of the leading oil-producing state in the nation.
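For readers who want to check the comparison themselves, the short Python sketch below recomputes the ratios from the figures quoted above. The dictionary layout and function names are our own illustrative choices, and because the text says only that Houston will have "well over 200" stations, the sketch assumes 200 as a floor for Houston's end-of-summer count.

```python
# Figures are those quoted in the text. Names and layout are illustrative.
# ASSUMPTION: Houston's end-of-summer count is entered as 200, a lower bound,
# because the text says only "well over 200".
REGIONS = {
    "Rhode Island": {"area_sq_mi": 1034.0, "population": 1_050_000,
                     "vehicles": 478_000, "stations_summer": 59},
    "Houston": {"area_sq_mi": 627.8, "population": 2_160_000,
                "vehicles": 1_290_000, "stations_summer": 200},
    "Austin": {"area_sq_mi": 272.0, "population": 795_000,
               "vehicles": 596_000, "stations_summer": 153},
}


def relative_to(base: str, other: str, field: str) -> float:
    """Return other's value for `field` as a multiple of base's value."""
    return REGIONS[other][field] / REGIONS[base][field]


def stations_per_100k_vehicles(name: str) -> float:
    """Planned end-of-summer charging stations per 100,000 vehicles."""
    region = REGIONS[name]
    return region["stations_summer"] / region["vehicles"] * 100_000


if __name__ == "__main__":
    for city in ("Houston", "Austin"):
        area = relative_to("Rhode Island", city, "area_sq_mi")
        pop = relative_to("Rhode Island", city, "population")
        print(f"{city}: {area:.0%} of Rhode Island's area, "
              f"{pop:.0%} of its population")
    for name in REGIONS:
        print(f"{name}: {stations_per_100k_vehicles(name):.1f} "
              f"stations per 100,000 vehicles")
```

Stations per 100,000 vehicles is only one way to normalize for fleet size; population or land area would be equally defensible denominators.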

Obama Climate Speech Confuses Keystone Analysis (Top)

In an attempt to deflect attention from the various scandals surrounding his administration, President Barack Obama delivered a major policy speech on climate change. Political observers suggested this was a speech he needed to give in order to soothe the ire of his many environmental supporters who have been upset the President has done so little to limit carbon emissions during his term in office. President Obama gave a preview of his climate change plan during his February State of the Union speech. He devoted ten percent of that speech to energy and climate, but this climate speech was as long as the State of the Union speech.

We found an interesting analysis of presidential State of the Union speeches. These speeches actually started as written annual reports from presidents to Congress discussing their views of the nation’s condition. They were not presented as speeches until Woodrow Wilson in 1913. Jimmy Carter was the last president to deliver a written report. Not only was it his last report, but it was the longest ever at over 33,287 words. In contrast, President Obama’s February speech was 6,501 words in length.

The analysis was prepared by The Guardian of the UK and employed the Flesch-Kincaid readability tests designed to determine comprehension difficulty when reading a passage of contemporary academic English. The two tests employ the same core measures – word length and sentence length. Wikipedia writes that “Reader’s Digest magazine has a readability index of about 65, Time magazine scores about 52, an average 6th grade student’s (a 13-year-old) written assignment has a readability test of 60–70 (and a reading grade level of 6–7), and the Harvard Law Review has a general readability score in the low 30s.” Based on these tests, the analysis rated every president’s State of the Union speech/report delivered since the nation was formed. It also translated the scores into the grade level of readers. It was interesting that the president whose speeches averaged the lowest grade level was George H.W. Bush at 8.6 while Barack Obama was second at 9.2. Bill Clinton was next, followed by a tie between Lyndon Johnson and George W. Bush at 10. Ronald Reagan was next at 10.4. The highest-ranking president was James Madison at 21.6. Two presidents – William Henry Harrison and James Garfield – never delivered State of the Union speeches. As these rankings suggest, the State of the Union presentation has been dumbed down over the years.
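To make the two tests concrete, here is a minimal Python sketch of the standard Flesch Reading Ease and Flesch-Kincaid Grade Level formulas. It is not The Guardian's method, which we have not seen. The syllable counter is a crude vowel-group heuristic of our own, and the function names are hypothetical, so scores from this sketch will differ somewhat from published ones.

```python
import re


def count_syllables(word: str) -> int:
    """ASSUMPTION: approximate syllables as runs of vowels, minimum one."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def text_stats(text: str) -> tuple[int, int, int]:
    """Return (sentences, words, syllables) using simple regex splitting."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    return len(sentences), len(words), syllables


def flesch_reading_ease(text: str) -> float:
    """Higher scores mean easier reading."""
    sentences, words, syllables = text_stats(text)
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate U.S. school grade level needed to follow the text."""
    sentences, words, syllables = text_stats(text)
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


if __name__ == "__main__":
    sample = "The cat sat on the mat."
    print(round(flesch_reading_ease(sample), 3))
    print(round(flesch_kincaid_grade(sample), 2))
```

Both formulas rest on the same two inputs, average words per sentence and average syllables per word, which is why long sentences built from long words push the grade level up so quickly.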

Exhibit 12. Dumbing Down Of State Of The Union Talks

Source: The Guardian

In the climate speech, delivered in the sun on a warm day at Georgetown University, President Obama laid out the reasons why we need dramatic action on climate change in order to protect our children. On the issue of approving the Keystone pipeline, the President had the following to say, “I put forward in the past an all-of-the-above energy strategy, but our energy strategy must be about more than just producing more oil. And, by the way, it’s certainly got to be about more than just building one pipeline.”

He went on to say, “Now I know there’s been, for example, a lot of controversy surrounding the proposal to build a pipeline, the Keystone pipeline, that would carry oil from Canadian tar sands down to refineries in the Gulf. And the State Department is going through the final stages of evaluating the proposal. That’s how it’s always been done. But I do want to be clear: Allowing the Keystone pipeline to be built requires a finding that doing so would be in our nation’s interest. And our national interest will be served only if this project does not significantly exacerbate the problem of carbon pollution. The net effects of the pipeline’s impact on our climate will be absolutely critical to determining whether this project is allowed to go forward. It’s relevant.”

Many promoters of the pipeline, including politicians in Canada, say the President’s language ensures Keystone will be built since the State Department’s Draft Environmental Impact Statement (DEIS) says that whether the pipeline is built or not will have little impact on the development of Canada’s oil sands. Unfortunately, we are not sure that is the test the President set forth. What we know from the DEIS is its conclusion about CO2 emissions, which is: “The annual CO2e emissions from the proposed Project is equivalent to CO2e emissions from approximately 626,000 passenger vehicles operating for one year or 398,000 homes using electricity for one year.”

The DEIS went on to make the following point: “WCSB [Western Canadian Sedimentary Basin] crudes are more GHG [greenhouse gas]-intensive than the other heavy crudes they would replace or displace in U.S. refineries, and emit an estimated 17 percent more GHGs on a life-cycle basis than the average barrel of crude oil refined in the United States in 2005. If the proposed Project were to induce growth in the rate of extraction in the oil sands, then it could cause GHG emissions greater than just its direct emissions.”

Taken together, these two conclusions confirm that the Keystone pipeline WILL increase carbon emissions. The question will then become whether the increase will “significantly exacerbate” the carbon emissions balance of the United States. Unfortunately, the answer to this question will remain unclear until the State Department issues its final ruling and President Obama then makes his determination on Keystone’s fate. The real answer conjures up Bill Clinton’s famous statement to the grand jury that “It depends on what the meaning of the word ‘is’ is.”

More Analysts Questioning End Of Auto Love-Affair (Top)

Recently there have been several stories and analyses about how younger drivers are delaying securing driver’s licenses and how much less they are driving. These structural issues have been offered as an explanation for the decline in the total number of vehicles in America, although the slow pace of the economic recovery following the 2008 financial crisis may also play a significant role. These issues are important for the future of gasoline and diesel fuel markets here, the future health of the domestic automobile industry, and the trend in carbon emissions.

We were reminded of the significant role automobiles play in today’s society by a story in Future Think by Edie Weiner and Arnold Brown discussing observing things through “alien eyes.” The story told of an animated film from Canada about two aliens sent to Earth to observe it and report back on it to their native planet. “After two weeks, the aliens concluded that Earth was inhabited by four-wheeled vehicles called automobiles, each of which owned at least one two-legged slave called a human. Every morning a loud noise went off to wake the human so that he could take the automobile to a social club (parking lot), where the auto hung out all day with other cars while the human went into a building to work to support it.” The story almost seems real.

A new study by Michael Sivak of the University of Michigan’s Transportation Research Institute focused on the peaking in the number of light vehicles (cars, vans and pickup trucks) in use in recent years and whether it represents a permanent peak or only a temporary peak in response to economic conditions. An interesting set of data was the historical perspective of the growth of vehicles in the U.S. Early in the history of the automobile industry it took merely five years for the fleet to grow 10-fold from 10,000 vehicles in 1901 to 100,000 in 1906. (Exhibit 13.) It subsequently required seven years for the next 10-fold increase and eight years for the subsequent expansion. But for the domestic fleet to grow from 10 million to 100 million, the automobile industry needed 47 years.
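The slowdown is easier to appreciate as a compound annual growth rate. The sketch below, our own arithmetic rather than anything taken from Mr. Sivak's study, converts each 10-fold step quoted above into the constant yearly growth rate that would produce it. The function name and the step labels are illustrative.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant yearly growth rate that multiplies a fleet by `multiple`
    over `years` years: rate = multiple ** (1 / years) - 1."""
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    return multiple ** (1.0 / years) - 1.0


# Each step is a 10-fold increase; durations are those quoted in the text.
FLEET_STEPS = [
    ("10,000 to 100,000", 5),
    ("100,000 to 1 million", 7),
    ("1 million to 10 million", 8),
    ("10 million to 100 million", 47),
]

if __name__ == "__main__":
    for label, years in FLEET_STEPS:
        rate = implied_annual_growth(10, years)
        print(f"{label:>26}: {years:>2} years, about {rate:.1%} per year")
```

The first step works out to nearly 60% a year, while the 47-year climb from 10 million to 100 million implies only about 5% a year.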

Exhibit 13. Historic Growth Of Vehicle Fleet

Source: Transportation Research Institute

As shown in Exhibit 14, the light vehicle fleet needed less than 30 years (1968-1997) to reach the 200 million mark, a faster pace than for the climb to the first 100 million vehicles. The auto industry’s concern is that the growth of the fleet slowed beginning in 2006 and reached an absolute peak in 2008, the same year the financial crisis unfolded. After declining to a near-term low of 230 million units in 2010, the vehicle fleet expanded in 2011. Sales in 2012 were a healthy 14.44 million units and are now running at nearly a 15-million-unit annual rate through the first half of 2013. While these recent sales figures reflect a healthier industry, they do not match the boom years of the early 2000s.

Mr. Sivak’s study investigated the ratio of light vehicles to households, licensed drivers and population. What he found was that these ratios peaked during 2001-2006, well before the 2008 financial crisis. This suggests the possibility that these vehicle use drivers reflect structural changes due to the demographics of America’s population and differences in how vehicles are utilized by specific age groups. There have been lifestyle changes such as greater telecommuting, use of public transportation, and shopping online. Mr. Sivak cited several such trends: in 2000, 3.3% of the nation’s workers were telecommuting, a share that rose to 4.3% by 2010. The use of public transportation for commuting grew from 4.7% of the work force in 2000 to 5% by 2009.

Exhibit 14. Vehicle Fleet Has Peaked

Source: Transportation Research Institute

Exhibit 15. Fleet Growth Drivers Are Weaker

Source: Transportation Research Institute

One of the other trends identified from previous studies conducted by the Transportation Research Institute is the shift toward older drivers representing a greater share of the driving population. Older drivers tend to drive less than younger drivers. In addition, the highest probability for vehicle purchases has shifted from the 35 to 44 year old age group, the peak driving segment, to the 54 to 64 year old segment. We also know from numerous marketing and attitude surveys that consumers are shopping online more and driving for shopping less. Also of interest, a record high 9% of households do not own a vehicle, double the rate of the 1990s. These societal shifts are likely to remain forces that alter historical driving patterns.

Exhibit 16. Change Driver Demographics

Source: New York Times

The demographic change for drivers over the past 27 years is fairly dramatic, both in the decline in younger drivers and the increase in older drivers. Unless attitudes toward vehicles among young drivers revert to historical patterns, it is hard to see how the size of the domestic light vehicle fleet will grow appreciably in the future. Increasingly, today’s youth population is choosing to live in areas where alternate transportation options are greater, including more public transportation, bicycles, walking, motor scooters, car sharing services or short-term car rentals. If the automobile industry keeps building better and longer-lasting vehicles and the fleet fails to grow, then once Americans finish updating their vehicles we could see a sustained sales rate lower than today’s. None of that will be good for future gasoline and diesel demand.

Defending Against Rising Sea Levels Still Ignores Reality (Top)

Our article in the last Musings on how the expansion of Manhattan Island over the centuries has affected storm surge and rising tides proved prescient, judging by subsequent media stories. One was an interview with John Boulé, the former head of the Corps of Engineers for the New York district, who is now working for a private engineering company. As he strolled with the interviewer, he pointed to Castle Clinton in Battery Park, built to defend New York City before the War of 1812. His comment was that the fort used to be located in the harbor. In other words, all the area between lower Manhattan’s historic tip and the old fort has been filled in, just as shown in Exhibits 17 and 18. He also made the point that it is easier to defend the above-ground facilities of New York than the subway system, which has over 500 openings in lower Manhattan alone. As he pointed out, miss three or four manholes or other openings and water flows in, overcoming the rest of the protection effort. His parting comment was that designing protection for New York City wouldn’t involve anything that hasn’t already been done and proven successful elsewhere.

Exhibit 17. Expanded Battery Park Landmass

Source: Mannahatta Project

Exhibit 18. Battery Park As Seen Today

Source: Mannahatta Project

In an article on the problems of the Florida Keys and Key West from rising sea levels and storm waves, the author pointed to a pre-Civil War water gauge that shows there has been a nine-inch rise in the sea level during the last century. While the article decried the problem of rising sea levels and the challenge of building protection facilities, a quote from Alison Higgins, the sustainability officer for the city of Key West, pointed to critical structural factors that have nothing to do with sea level increases but rather with the city’s location. Ms. Higgins stated, “Our base is old coral reef, so it’s full of holes. You’ve got both the erosion and the fact that (water) just comes up naturally through the holes.” Just as South Louisiana’s marsh areas are subsiding, Key West’s coral base is eroding. If people choose to live in these areas they should expect problems that will need to be addressed and will cost them more. The question is whether these costs should be socialized or borne by the local residents. We know what New York’s Mayor Michael Bloomberg wants – socialization.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.