- Weekly Oil Data Confusing, But Optimism High About Prices

- Will The Petroleum Industry Actually Change How It Works?

- McKibben-Watts Is More Lincoln-Douglas Than Lee-Grant

- Is The Future Of The Automobile Already Here Now?

- Below Average Hurricane Forecast Shifts Focus To Disasters

Musings From the Oil Patch

June 16, 2015

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Weekly Oil Data Confusing, But Optimism High About Prices

Are you confused about oil price volatility? Is your neck sore from being snapped around by sudden changes in what’s now the most important industry data point for determining the near-term course of oil prices? Are you numb from listening to the instant experts on the cable news channels? Maybe you’ve decided to chuck it all in and go on vacation. Not a bad idea!

Watching weekly data points is often a worthless effort since so many of them are either complete estimates, admittedly prepared by knowledgeable people, or hybrid guestimates based on partial data supplemented with estimates for missing data points. Throughout our career we have been subjected to having to focus on the data-point du jour or du week. They ranged from daily oil price movements and weekly drilling rig count changes to weekly storage estimates for crude oil and natural gas and oil import and domestic oil production volumes. People are mostly focused on the U.S. oil business, but that is just one part of the domestic energy business and a small piece of the global energy industry. In this country, our industry is made up of hundreds of oil companies and individuals ranging from sole proprietorships to multi-billion-dollar global enterprises. With thousands of rigs drilling tens of thousands of wells and hundreds of thousands of producing wells spewing out millions of barrels of oil and thousands of cubic feet of natural gas, and huge storage tanks and salt caverns for oil and gas inventories scattered all around the nation, expecting 100% accurate weekly data is foolish. Still, the quality of the information we receive is quite good, which speaks well of those compiling the reports. A great problem for oil industry observers is that the U.S. produces the best industry data, while much of the rest of the world’s industry lacks transparency. In fact, much of it is opaque.

The Baker Hughes (BHI-NYSE) weekly drilling rig count continues to decline. There are many ways of looking at the information reported and attempting to judge what it all means for future domestic production, but sometimes the simplest approaches are the best. To examine the change in the weekly rig data by type of well being drilled since the overall rig count peaked in November 2014, we created the chart below.

Exhibit 1. Latest Weekly Trends Are Becoming Confusing

Source: Baker Hughes, PPHB

Since the week ending November 26, 2014, the total rig count has dropped by 1,058 rigs for a 55% decline. As would be expected by the sheer number of rigs drilling horizontal wells, that category fell by 708 rigs producing a 52% decline. The vertical rig count fell by 101 rigs, but that represented a 71% drop, which reflects how the shale revolution altered the types of wells drilled.

Exhibit 2. The Current Rig Downturn Matching 2008-2009

Source: Baker Hughes, PPHB

While rigs are falling, having dropped by nine last week, the question becomes: When will the bleeding stop? Compared with the 2008-2009 oil market crash due to the financial crisis and recession, which is the benchmark most are comparing this correction against, it seems like we are approaching the end of the decline.

While the decline in the drilling rig count continues, albeit at a much slower pace than experienced several months ago, the puzzling issue is the continued increase in domestic oil output. The reason these weekly production estimates show increases is the belief that the tight oil industry has continued to improve its drilling efficiency by targeting the most productive portions of formations and completing wells in ways to maximize output. As we go forward, we will eventually obtain more accurate data for past periods that will either support the assumptions used in the weekly estimates or show them to have been overly optimistic. If the latter scenario unfolds, data revisions will force rethinking about the course of future oil prices, and the change will be quick.

Exhibit 3. Latest Rig Count And Oil Output Data Are Clashing

Source: EIA, Baker Hughes, PPHB

In order to avoid the volatility of weekly data, we opt to track the trend using a 4-week average of oil production. We have plotted oil volumes for the Lower 48 states against the weekly land drilling rig count. We show the peak oil price in June 2014 and peak oil rig activity in October 2014. After nearly 14 months of weekly average production gains since the last decline experienced in early 2014, production fell in April. After a month of weekly declines, we then experienced two weeks of very small increases. Since it is a 4-week average, the April and May production patterns were encouraging, suggesting that the industry had reached a tipping point when production should start falling and at an accelerating rate until more new wells were brought into production. At that point, we had our first weekly oil rig count increase (+2 rigs), which we speculated might have reflected growing confidence among oil producers that better prices were ahead. We then had a huge weekly oil production increase followed by several more weeks of output gains. Those increases seem to have wiped out the optimism for higher oil prices, causing the rig count to resume its fall.
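For readers who want to reproduce this kind of smoothing, here is a minimal sketch of a trailing 4-week average. The weekly figures are hypothetical placeholders rather than EIA data, and the function name `trailing_average` is ours for illustration.

```python
from collections import deque


def trailing_average(values, window=4):
    """Return the trailing mean for each point once `window` values exist."""
    buf = deque(maxlen=window)  # keeps only the most recent `window` values
    out = []
    for v in values:
        buf.append(v)
        if len(buf) == window:
            out.append(sum(buf) / window)
    return out


# Hypothetical weekly Lower 48 output, thousand barrels per day (not EIA data).
weekly_output = [9000, 9040, 8990, 9010, 9120, 9100]

smoothed = trailing_average(weekly_output)
for week, avg in enumerate(smoothed, start=4):
    print(f"week {week}: 4-week average = {avg:.1f}")
```

Because each weekly estimate stays in the window for four weeks, a single large weekly increase keeps lifting the average for a month. That is one reason a lone data point can appear to reverse a trend.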

As we said earlier, the weekly data makes determining the future of oil prices and industry fortunes almost impossible. Most of the near-term outlooks are based on people focusing on only one or two data series and then extrapolating recent trends, even if the estimates seem questionable. As much as we are hoping that clarity of the data and the industry’s outlook is at hand, we are not going to raise our hopes very high.

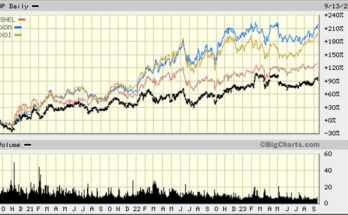

Will The Petroleum Industry Actually Change How It Works?

One of the most dangerous phrases in the investment business is: “This time is different.” We are wondering whether that phrase has meaning for the petroleum industry as it struggles to deal with the collapse in global oil prices. An interesting exchange we were part of on the Oilpro.com website was about the price decline. One writer mentioned that few people recognize that the current industry fall is less a collapse and more a reflection of the industry coming down from an extended boom. As shown in the track of futures prices since 1983, current oil prices in the $60-a-barrel range are essentially back to levels experienced in 2005. At that price, oil is trading at slightly more than twice the level it was during most of the early years of this century. But, at $60 a barrel, current prices are about three times the level they traded at during much of the 1990s. The response from the industry is that we need a higher oil price because the cost of finding and developing new reserves has increased, especially due to the revolution in unconventional oil and gas resources.

Exhibit 4. Oil Futures Prices Since 1983 To Now

Source: EIA

Utilizing oil price data from BP plc (BP-NYSE), we decided to examine how oil prices moved following the previous most significant boom – the 1970s. Conditions during that period were driven by structural changes in the industry that predated the explosion in oil prices but set up the industry for that explosion. The decline in domestic output made America vulnerable to outside forces manipulating the oil market. When oil prices exploded, an industry boom was kicked off, although the U.S. and global economies saw oil demand fall. The demand drop was missed by petroleum industry planners, managers and analysts. The 1970s boom, which lasted seven years, was followed by a five-year bust whose full impact was not overcome for another half decade. The 1980 oil price peak was 2 ½ times higher than the oil price base established over the 1980s and 1990s.

Exhibit 5. Despite Booms, Oil Price Had Long-term Floor

Source: BP plc, PPHB

It is interesting that the 1985 average oil price became a floor despite a brief excursion below that floor during the 1997-1998 Asian currency crisis. As the world’s economy recovered and enjoyed the ebullient economic and stock market environment associated with the dot com bubble, oil prices climbed back to a level twice the 20-year floor price level. As Exhibit 5 shows, the oil price, after declining during the 2001 recession, began climbing and then exploded higher in response to the dramatic 2004 global oil demand increase, which was driven by Chinese consumption as it prepared for hosting the upcoming Olympics.

The 1970s boom and bust produced many complaints about the high cost of oilfield equipment and services that drove finding and development costs up, squeezing the operating margins of the oil and gas producers. Beating down service costs was the initial reaction of producers, but when oil prices collapsed in 1985, everyone but energy consumers suffered. Cost pressures in the energy sector forced producers to reconsider how they operated. The most significant change emerging from that re-examination was Royal Dutch Shell’s (RDS.A-NYSE) “Drilling in the ‘90s” program. Shell and others wanted the service industry to consolidate (a strange focus since they had successfully used competition from start-ups, often sponsored by petroleum companies, to beat down prices during the boom) so one company could offer more products and services at lower costs. Service companies with limited offerings were often forced to align with other service companies to be able to bid the full range of products and services the oil and gas companies required in their bid documents.

In the case of contract drillers, Shell’s approach called for them to secure the drill bits, drilling fluids and directional drilling services needed to successfully drill wells. During a late 1980s conference of the International Association of Drilling Contractors (IADC) at which we were speaking, the program morphed into a discussion of the Drilling in the ‘90s program. After much back-and-forth discussion, we remember one crusty West Texas driller speaking up and saying that the industry had always been doing this – it was called turnkey drilling. The drilling contractor was responsible for choosing the best drill bits and drilling fluids in order to drill wells because he was responsible for delivering a completed well to the producer for a fixed cost. All the risk of drilling was assumed by the contractor, in contrast to day rate drilling, in which the producer assumes all the risk and pays a contractor by the day to use his drilling crew and rig. Since most of the drilling industry had gotten away from turnkey work, which requires that the contractor be highly confident in his understanding of the geological formations he would be drilling into, this comment ended the discussion of the Shell plan.

Throughout the 1990s and 2000s, every time the industry suffered a price and activity downturn, producers decried the high cost of oilfield products and services. Those complaints came despite the fact that technological improvements in the business were contributing to reduced drilling costs – usually in the form of greater footage per rig per day.

Exhibit 6. Is 4x Gap In Oil Price Floor Appropriate?

Source: BP plc, PPHB

In Exhibit 6 we show the BP oil price data from 1990 to now, using a $60-a-barrel price for 2015. We would note that BP’s oil price is an average for each year, meaning we don’t see the $147-a-barrel price spike in 2008. That said, the current oil price essentially matches the average of the 2008-2009 decline during the financial crisis. The $60 price is back to a level experienced in 2005 as we were heading toward that 2008 spike. If $60 is a new floor, it is 4x the 20-year floor. It is important to note that if we measure the current oil price decline from the peak U.S. futures price on June 20, 2014, of $107.26 a barrel, a 50% reduction suggests a floor of $53.63 a barrel. Oil prices in this downturn have traded at that level and lower, but prices have rebounded in recent weeks as confidence within commodity markets has grown that U.S. oil shale production is about to fall, leading to a future elimination of the world’s glut.
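The floor arithmetic in this paragraph can be checked with a few lines of code. This is a back-of-the-envelope sketch using only the figures quoted above. The implied 20-year floor is our inference from the 4x statement, not a number taken from the BP data.

```python
# Figures quoted in the text.
peak_futures_price = 107.26  # U.S. futures peak, June 20, 2014, $/barrel
assumed_2015_price = 60.00   # price used for 2015 in Exhibit 6, $/barrel
floor_multiple = 4           # "4x the 20-year floor"

# A 50% reduction from the peak.
half_peak_floor = peak_futures_price * 0.5

# Inference: if $60 is 4x the 20-year floor, that floor is roughly $60 / 4.
implied_20yr_floor = assumed_2015_price / floor_multiple

# How far $60 sits below the June 2014 peak.
decline_at_60 = 1 - assumed_2015_price / peak_futures_price

print(f"50% of peak:           ${half_peak_floor:.2f}")
print(f"Implied 20-year floor: ${implied_20yr_floor:.2f}")
print(f"Decline from peak at $60: {decline_at_60:.0%}")
```

At $60 a barrel the price is about 44% below the June 2014 peak, so it sits somewhat above the 50% floor of $53.63.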

Our question of “Is 4x enough?” raises the issue of how high oil prices must be to sustain current production and eventually grow it. Discovering that mysterious price level has been the quest of analysts and forecasters ever since Saudi Arabia orchestrated an agreement among the members of the Organization of Petroleum Exporting Countries (OPEC) to sustain their high output. Sustained high output has created the global oil glut, which will only be corrected by less output and/or greater consumption. OPEC perceives lower prices as the medicine to create both outcomes, and despite the financial pain, most producers are cheering for those forces to work, and soon!

Although producers can do little about commodity prices other than take advantage of upward blips to hedge future production, providing them greater financial flexibility and cash flow visibility, they can attack their cost structures, and they are doing so. Most readers of the Musings are aware of various efforts by producers to force oilfield service companies to roll back their prices and for equipment companies to drop their sales prices. This is the traditional producer/service company ‘yin and yang’ that occurs at every industry turning point. What may prove more challenging this time is producers’ belief that oil prices will stay lower for longer. It means structural changes become more important than temporary price rollbacks.

While producers are calling for further service cost relief, are they doing much to help? In contrast to past downturns, there is a recognition that energy companies need to streamline their business operations since they cannot assume ever-higher commodity prices will bail them out. Many producer actions in recent years have been in response to strategic business decisions. Examples include ConocoPhillips’ (COP-NYSE) decision to split off the company’s downstream refining business; ExxonMobil’s (XOM-NYSE) purchase of XTO Energy and then allowing that unit to absorb Exxon’s shale drilling operations and continue to operate as a separate company; and BP’s reorganization of its onshore U.S. operations into a separate unit operating outside of the existing corporate structure.

Each move, and those of other producers, was done in response to perceived structural shortcomings in their operations impacting their financial performance. ConocoPhillips’ move forced its exploration and production managers to rationalize their assets following a history of mistimed asset purchases. Exxon’s move was to compensate for its planning group’s failure to foresee the shale revolution. BP’s restructuring hopes to enable its onshore E&P business to mimic the successes of independent producers.

While petroleum corporations have made strategic moves, they are also working to lower finding and development costs by boosting their operational productivity. Everyone who has lived through this boom environment allowed their organizations to become bloated by adding staff, aggressively bidding up salaries of new employees (which puts upward pressure on the entire corporate salary structure), adding benefits and building new corporate facilities. Some of these splurges are being rectified through layoffs and salary reductions. The most powerful cost reductions will be to cut drilling, project development and production costs in ways that are longer lasting than the immediate benefits from cutting staff.

The impact of the industry boom since the early 2000s is reflected in the IHS upstream capital cost index that exploded from 115 in 2005 to 230 in Q3 2013 and has essentially remained there through 2014. That cost explosion has been particularly devastating for offshore projects and other long-term projects such as oil sands developments and the construction of LNG export terminals. The cost problems of many of these projects reflect oil companies’ desires for complete control over projects and treating each of them as unique. Unfortunately, that approach rejects standardization in favor of uniqueness. Every time companies elect uniqueness, unless there is a physical necessity, costs escalate. Many in the industry question this approach, but it appears we are seeing early signs of rejecting uniqueness in response to the need to cut costs.

Exhibit 7. Finding And Development Costs Remain High

Source: IHS

The most recent example of this new thinking is Chevron Corporation’s (CVX-NYSE) announcement that it will accept two bids from contractors for its projects – one based on Chevron’s specifications and the other based on the service company’s design. In the early 2000s, ExxonMobil created a ‘Design One, Build Many’ plan for its Angola deepwater development in Block 15. The plan involved building two tension leg platforms and five of the world’s largest floating production, storage and offloading vessels. The plan’s success brought the field into production earlier than expected and within budget. The program was recognized with an award from the Offshore Technology Conference in 2011. One wonders how extensive these efforts are in the industry today because deepwater costs continue to rise. There are still many examples of uniqueness dominating standardization.

With the advent of the manufacturing process of drilling and completing shale wells, the operating philosophy has become that every well should be the best well in the field. That philosophy has forced rethinking all aspects of the work process. We have covered some of these successes in previous articles, but this is an area for further research and investigation. How successful these efforts are and how much they are embraced will shape the future of the petroleum industry.

McKibben-Watts Is More Lincoln-Douglas Than Lee-Grant

In the late 1850s, the burning issue in the United States was slavery and its potential expansion into the new territories that were destined to become states in the Union. The country had a reasonable balance between states allowing and encouraging slavery and those opposing it. Two of the most important actors in this drama were Illinois Senator Stephen A. Douglas, the Democratic candidate for that seat in the 1858 election, and his Republican opponent, Abraham Lincoln. Their famous debates have much in common with the debate over climate change. But first we need to provide some history about those debates.

In the lead-up to the debates, Sen. Douglas claimed Mr. Lincoln was an abolitionist for saying that the American Declaration of Independence applied to blacks as well as whites. On the other hand, Mr. Lincoln argued in his House Divided Speech that Sen. Douglas was part of a conspiracy to nationalize slavery. He pointed to Sen. Douglas’ sponsorship of the Kansas-Nebraska Act of 1854 that ended the Missouri Compromise ban on slavery in the two territories. The Kansas-Nebraska Act established the two territories and allowed the residents to vote (exercise popular sovereignty) on whether their future states would allow slavery or not. Mr. Lincoln expressed the fear that the Dred Scott decision was another step in the direction of spreading slavery into the Northern territories. That case stated that slaves had no standing so could not sue the federal government, and that the federal government had no power to regulate slavery in territories acquired after the founding of the United States. As a result, Mr. Lincoln worried that the next Dred Scott decision would turn Illinois into a slave state.

Exhibit 8. Lincoln And Douglas Held Spirited Debates

Source: Wikipedia.com

Prior to 1913 and the ratification of the 17th Amendment to the Constitution, senators were elected by their state legislatures rather than by popular vote. In 1858, Sen. Douglas and Mr. Lincoln were competing for their respective political parties to win majorities in the Illinois House and Senate, and ultimately appointment as the state’s senator. The two men agreed to debate in each of the nine districts of Illinois, but since each had spoken in Chicago and Springfield within a day of each other, they agreed that those speeches would represent their joint appearances. Their remaining seven debates were held between late August and mid-October and drew large crowds and stenographers from the media to record them. These transcripts were published by many of the leading newspapers of the day (and in a book of the transcripts compiled by Mr. Lincoln after the 1858 election) and provided the opportunity for manipulation in partisan ways. (Was this possibly the start of the sound bite?)

The debate format required endurance by both the presenters and the audience. The first speaker spoke for 60 minutes, followed by the second presenter for 90 minutes with a 30-minute rejoinder from the first speaker. In the election, the Democrats won 40 seats in the state House to the Republicans’ 35 seats, while in the state Senate the Democrats held 14 seats to the Republicans’ 11. As a result, Sen. Douglas was elected senator despite Mr. Lincoln winning the popular vote. That performance plus the debates elevated Mr. Lincoln’s stature, leading to his eventual nomination by the Republicans and election to the presidency in 1860. (In the 1950s a Broadway play, “The Rivalry,” starring Richard Boone, of Paladin TV fame, recreated the highlights from these debates.)

Exhibit 9. Lee Surrendering To Grant At Appomattox

Source: faculty.ucc.edu

Seven years later, on April 9, 1865, the respective military leaders of the North, General Ulysses S. Grant, and the South, General Robert E. Lee, after several years of battling through Virginia, ended the Civil War with a meeting at the house of Wilmer McLean in the village of Appomattox Court House in Virginia. As part of the agreement, Gen. Grant wrote into the surrender terms that “this will not embrace the side-arms of the officers, nor their private horses or baggage,” representing a significant and magnanimous gesture.

In modern times, the equivalent of these events in the climate change battle was a meeting two weekends ago between Bill McKibben, the founder of the environmental movement 350.org, and Anthony Watts, the sponsor of the climate blog Watts Up With That? Mr. McKibben is a professor, author, environmentalist and leading proponent in fighting for legislation to restrict the use of fossil fuels, while Mr. Watts is a meteorologist and weather expert who is skeptical of the man-made global warming case. We were treated to an article on Mr. Watts’ web site highlighting the face-to-face discussion he had over a beer with Mr. McKibben in California.

Exhibit 9. McKibben (left) And Watts (right) Meet Over Beer

Source: Watts

Mr. Watts wrote his article and then Mr. McKibben offered some comments later, but he only offered one point on which he disagreed with Mr. Watts’ commentary, which had to do with nuclear power. So what did the two opponents agree to? (The following are extracts from the article.)

“…tackling real pollution issues was a good thing.”

“…as technology advances, energy production is likely to become cleaner and more efficient.”

“…coal use especially in China and India where there are not significant environmental controls is creating harm for the environment and the people who live there.”

“…climate sensitivity…hasn’t been nailed down yet.”

“…how nuclear power especially Thorium-based nuclear power could be a solution for future power needs…”

“…the solar power systems we have put on our respective homes have been good things for each of us.”

“…there are ‘crazy people’ on both sides of the debate…”

“…if we could talk to our opponents more there would probably be less rhetoric, less noise, and less tribalism that fosters hatred of the opposing side.”

“…it would be a great thing if climate skeptics were right, and carbon dioxide increases in the atmosphere wasn’t quite as big a problem as we have been led to believe.”

The two did disagree on some of the topics. Here is where they disagreed and Mr. Watts’ commentary about the disagreements.

“Climate sensitivity was the first issue that we disagreed about. …Bill thought the number was high, while I thought the number was lower…”

“Bill seems to think that carbon dioxide influences…have perturbed our atmosphere…He specifically spoke of the recent flooding in Texas calling it an ‘unnatural outlier,’ and attributed it to man-made influences on our atmospheric processes.”

Exhibit 10. No Discernable Pattern Of Global Precipitation

Source: Watts – Global Precipitation, from CRU TS3 1° grid

Exhibit 11. No Discernable Pattern Of Extreme Precipitation

Source: Watts

It was here that Mr. Watts spent time trying to explain that good weather records only exist for about 100 years, making it difficult to know what an ‘outlier’ is. He pointed to the 1861 flood in California that was followed a few years later by an exceptional drought period. Mr. Watts also presented several graphs (Exhibits 10 and 11) of global precipitation showing a lack of any noticeable increase in recent years.

Mr. Watts went on to say:

“Bill also seems to think that many other weather events could be attributable to the changes that humans have made on our planet….But I came away with the impression that Bill feels such things more than he understands them in a physical sense. This was not unexpected because Bill is a writer by nature, and his tools of the trade are to convey human experience into words.”

“For example I tried to explain how the increase in reporting through cell phones, video cameras, 24-hour cable news, and the Internet have [sic] made severe weather events seem much more frequent and menacing then they used to be.”

“Bill seemed concerned that we have to act on the advice of the models and the people who run them because the risk of not doing so could be a fateful decision.”

“Bill and I talked about how government can sometimes over-regulate things to the point of killing them,…He was surprised to learn that electric cars in California have to be emissions tested just like gasoline powered cars, instead of simply looking into under the hood and noting the electric motor and checking a box on a form.”

According to Mr. Watts, both he and Bill lamented how they were labeled – Bill an “idiot” and Anthony a “denier.” As Mr. Watts put it: “I don’t think Bill McKibben is an idiot. But I do think he perceives things more on a feeling or emotional level and translates that into words and actions. People that are more factual and pragmatic might see that as an unrealistic response.”

Mr. Watts’ summary is educational. He shows that, like Mr. Lincoln, Sen. Douglas, General Lee and General Grant, opponents can actually meet, engage in civil discourse about an issue and remain respectful of each other afterwards. In our view, Mr. McKibben reflects the emotional and religious motivation behind the environmental movement. Mr. Watts’ characterization of how Mr. McKibben’s background as a writer shapes his views and passion, together with Mr. McKibben’s lack of understanding of the physical factors behind climate change, provides significant insight into how this climate battle may ultimately be resolved. The most recent climate change paper about the global warming pause, which relies on revised land-based temperature measurements that have cooled the older data while boosting modern data in order to show how global warming is continuing, is an example of the disservice performed by scientists. The paper’s authors do the public a huge disservice by ignoring the atmospheric data collected by satellites, which are considered to be the most accurate temperature data and which clearly show the 15- to 18-year pause in global temperatures. Climate science, unfortunately, is not settled, and the more data that are gathered, the more they appear to show significant flaws in the climate models upon which the climate change proponents’ case is based. Climate skeptics seem more willing to listen to facts and to alter their positions accordingly. However, they are not willing to be subjected to purely emotional arguments that fly in the face of facts. The meeting between Mr. McKibben and Mr. Watts actually shows more agreement than disagreement between the men, and presumably the two camps. Maybe this is the first step to an agreement about the issue. Then again, we will not hold our breath until we see many more such meetings and agreements.

Is The Future Of The Automobile Already Here Now?

It isn’t even the peak time for automobile trade shows, but there has been a lot of news lately about the state of the autonomous vehicle market as well as the future of electric vehicles (EV) due to the success of Tesla Motors Inc. (TSLA-Nasdaq) and its recent annual meeting news. In attempting to understand the state of the autonomous vehicle market, a recent report by Google (GOOG-Nasdaq) on the accident history of its self-driving cars during the past six years and 1.8 million miles driven provides some insight.

Google’s self-driving cars are being hailed for their safe driving, something the company is promoting with its new automobile insurance web site. Investors and analysts are wondering whether Google believes that the self-driving vehicle revolution will radically change the automobile insurance market, opening up a new business opportunity. Auto insurance companies may be concerned, but since self-driving vehicles are not yet commercial, it is too soon to be unveiling revised business models. One wonders, however, how soon they may have to address the Google threat.

Holman Jenkins of The Wall Street Journal wrote a column about the safety performance of the Google self-driving cars and found some interesting and counter-intuitive results. The Google report referred to only 12 accidents in its May edition, although the metrics regarding the vehicle fleet and miles driven were as of June 3, 2015. However, Mr. Jenkins reported that Google experienced another accident after the report was issued and before his June 10, 2015, column. We don’t know for sure, but we assume, based on the release quoted in the column, that the Google car was being driven in autonomous mode. That means the accident record is six in manual driving mode and seven in autonomous mode. According to the report and the subsequent release describing the latest accident, none of the self-driving accidents were caused by the car. Interestingly, the report made the following statement about vehicle accidents: “Thousands of minor accidents happen every day on typical American streets, 94% of them involving human error, and as many as 55% of them go unreported.” Google also opined that it believes these figures to be low.

Google’s report contained two interesting visuals about the success of the vehicle’s technology in recognizing driving conditions human drivers might not have handled as well. The first incident involved a self-driving vehicle stopped at a traffic light that remained stationary after the traffic light turned green because it sensed an approaching emergency vehicle and its technology recognized that these vehicles have different driving patterns than normal cars.

Exhibit 12. Self-driving Car Waits On Emergency Vehicle

Source: Google May report

The emergency vehicle reaction is interesting. The self-driving car’s technology was able to pick up that the emergency vehicle was coming and the direction it was coming from, and it reacted by not moving forward into its left-hand turn until the emergency vehicle had passed. Of course, it is possible a human driver would have had the same reaction, but this is an interesting demonstration of the intelligence of the autonomous technology. This technology would help in those situations when you hear an emergency vehicle’s siren but don’t know exactly where it is in relation to your vehicle, so you don’t know what driving action should be taken.

The second example was a self-driving vehicle encountering two cyclists taking erratic paths at an intersection. The incident occurred at night, making the situation even more challenging for both humans and the autonomous technology. That the car handled it suggests the technology is well developed.

Exhibit 13. Self-driving Car Deals With Cyclists At Night

Source: Google May report

Google reported it currently operates 23 Lexus RX450h sport utility vehicles that are self-driving on public streets, mainly in Mountain View, California, the corporate home of the company. It also has nine prototypes self-driving on closed test tracks. Since the self-driving experiment began in 2009, the vehicles have driven a total of 1,011,338 miles in autonomous mode and 796,250 miles in manual mode, totaling 1.8 million miles. At the present time, the Google vehicle fleet is averaging 10,000 autonomous miles per week on public streets.

Mr. Jenkins compared the accident statistics of Google’s self-driving vehicles against the record of the general driving population based on data from a federal study in 1993. The comparison shows that Google’s accident rate (7.2 accidents per million miles driven) is about twice the rate of the safest group of drivers, those ages 40-64. He found that the Google accident rate compared closely to the rate of those drivers ages 70-74. He found this comparison interesting since a blog post by someone in the Google headquarters’ neighborhood said, “Google’s cars drive like your grandma.”

Based on the overall accident statistics, the Google cars only outperform two age groups: drivers age 16-19, who reportedly experienced 20.12 accidents per million miles driven, and drivers age 75 and older, who have a 12.23 accident rate. Further, according to Mr. Jenkins, compared to statistics for New York City drivers, the Google car doesn’t perform well. Data from 2004 shows that New York City drivers were involved in 6.7 accidents per million miles driven, close to the 1993 federal study’s results for all drivers, and slightly better than the Google cars’ record.

Interestingly, New York City taxi drivers, the bane of many residents and visitors, had a 4.6 accident rate, considerably better than the rate for Google’s cars and the general population. We were surprised by the taxi accident rate. Of the four vehicle accidents we have been involved in during our driving career, three of which were rear-end collisions, one involved a New York City cab.
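The rate arithmetic behind these comparisons can be checked with a short sketch. It assumes, since the column does not say so explicitly, that the 7.2 figure is the 13 total accidents (the 12 in Google’s report plus the later one) divided by the combined autonomous and manual miles. The function and variable names are illustrative only.

```python
# Figures quoted in the text from Google's May report and the later release.
AUTONOMOUS_MILES = 1_011_338
MANUAL_MILES = 796_250
ACCIDENTS = 13  # assumption: 12 in the report + 1 reported afterward


def accidents_per_million_miles(accidents: int, miles: float) -> float:
    """Normalize an accident count to a rate per one million miles driven."""
    return accidents / miles * 1_000_000


total_miles = AUTONOMOUS_MILES + MANUAL_MILES
google_rate = accidents_per_million_miles(ACCIDENTS, total_miles)

# Comparison rates exactly as quoted in the text (accidents per million miles).
quoted_rates = {
    "Drivers age 16-19": 20.12,
    "Drivers age 75 and older": 12.23,
    "NYC drivers (2004)": 6.7,
    "NYC taxi drivers": 4.6,
}

# Add the computed Google rate and rank from lowest to highest accident rate.
all_rates = {**quoted_rates, "Google fleet": google_rate}
ranked = sorted(all_rates.items(), key=lambda item: item[1])

for name, rate in ranked:
    print(f"{name:26s}{rate:6.2f}")
```

Under that assumption the computed rate rounds to the 7.2 cited by Mr. Jenkins, and the Google fleet ranks behind New York City taxi drivers and New York City drivers generally, but ahead of the youngest and oldest driver groups.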

A recent survey showed that 27% of those polled said they would support laws restricting human driving if self-driving vehicles proved to be safer. Maybe self-driving cars are safer, but why the large number of rear-end collisions? Maybe it is due to the algorithms. Mr. Jenkins quoted an anonymous blogger at the Emerging Technologies site who wrote: “They’re never the first off the line at a stop light, they don’t accelerate quickly, they don’t speed, and they never take any chance with lane changes (cut people off, etc.).” There are questions about whether, relative to the standard of most American drivers, the Google cars overreact to a yellow light, are reluctant to make a right turn on red, or are overly cautious about seizing the right of way when a pedestrian is approaching a crosswalk. The implication of these challenges is that when the entire vehicle fleet is self-driving there should be no accidents, as all the cars would go whizzing along at high speeds with little congestion and would avoid all driving challenges. The problem will come during the transition from a manually-driven vehicle fleet to a totally autonomous one.

Despite various issues with self-driving cars, virtually every automobile manufacturer is developing its own version and targeting specific geographic markets, such as BMW’s (BMWXY-OTC) new car for China. Whether the fleet gets to 100% autonomously-driven or self-driving becomes only a segment, there will be changes for the automobile industry, its suppliers and vendors. Equally important is the rate of penetration of self-driving technology and how it impacts vehicle use and oil consumption. In that vein, we were intrigued to read an interview by New York Times reporter Quentin Hardy with Eric Larsen of Mercedes-Benz Research and Development in Sunnyvale, California. Mr. Larsen received a doctorate in sociology and heads research in society and technology for Mercedes. He is a futurist, a label that likely makes many people roll their eyes in disbelief about the value of this type of research.

Dr. Larsen made several very interesting observations about social trends influencing the growth, use and value of automobiles. His views are not positive for EVs. He began by stating that the automobile industry represents a big market for family values and that the American lifestyle lends itself to vehicles. But he also pointed out how wireless connectivity, which manifests itself in smart phones, big data and autonomous driving, has changed everything in today’s society and will impact the automobile industry as well. What this means is that while many people have pointed to a long-term decline for suburbia, Dr. Larsen believes suburbs are and will remain important. He suggests that young people have merely had their adulthood postponed by the 2008-2009 recession, but they will still get married, want children and will move to the suburbs. They want their lives to be home-based and they will continue filling up their cars “with kids, dogs and stuff from big-box supply stores.” As a result, people will continue to want big cars.

While Dr. Larsen believes the suburbs are not going away, he points out that they are not like the suburbs in the 1950s. Both parents are often working and school activities are no longer at the schools but miles away at soccer fields and violin teachers. As a result, there will be opportunities for different transportation solutions. Dr. Larsen pointed to Mercedes’ Boost business, where minivans drive children after school, but rather than school buses they drive them door-to-door. Parents can track their children with smart phone apps and the minivans have both a driver and a concierge on board. The driver cannot leave the minivan but the concierge can walk children to the door or their after-school activity. In his view, Americans will not give up their own cars. “Americans like to do everything in cars.” He pointed out that Americans eat in cars, drink in cars, have entertainment in cars, change clothes and have sex in cars. People often sleep in their cars and spend a lot of time waiting for their children. As Dr. Larsen points out, “Driving is really the distracting thing we do in cars.”

While it would appear that little has changed about the desire, need and use of automobiles, what has changed is the inside of the vehicle. “Screens have become more important,” said Dr. Larsen. You can tell how old a car is by its screen, or the absence of one. Leased vehicles may be refurbished more often in order to make them appear newer. Therefore, it is likely there will be fewer new models but more new screen updates. Computer controls within vehicles will become smarter and more intrusive. You won’t be able to enter long addresses into navigation systems while you are driving, but only when you are stopped. After a vehicle has stopped, seat controls will allow you to put your seat back further, letting you work, sleep or watch television from the driver’s seat. The issue will become balancing personalization versus privacy. Sensors in smart phones and vehicles will allow insurers to monitor how you drive and whether you are using your phone while driving. As the nation develops “pay as you drive” car businesses or fuel taxing, Dr. Larsen suggests there are legal issues needing to be resolved, which means the pace of these technology transitions may not be as quick as assumed, although they could also happen faster.

Dr. Larsen’s views about EVs were significant because he does not see the fuel part of the industry model being broken. Hydraulic fracturing has contributed to reduced crude oil prices and lower gasoline prices that have boosted internal combustion engines, which are achieving better mileage. Natural gas is cleaner than oil and easier to install from a technology point of view. Refueling cars with gasoline requires about five minutes a week, while people still fear running out of fuel with EVs, which hurts their acceptance. Although he acknowledges that cities are doing their part by putting a few EV charging spots on streets and in city parking garages, he questions whether they will put a charging station at every parking spot or in every parking garage space. He notes that hybrid vehicles can be very successful in the suburbs since they overcome the range anxiety of EVs and, if they are plug-in hybrids, the owners can put charging stations in their garages and power them with solar installations on the roof of their homes.

Dr. Larsen’s final observations dealt with selling luxury cars, important for his employer. He stated: “In the industrial age you got luxury based on income and showing off. Today it’s about wealth, status and projecting personal values.” But he then made the following observations about luxury: “One of the challenges is that luxury wants to be heavy, with better seats, more safety features, more stuff in the car. Authenticity matters, too. Wealthy people want things that are natural and handmade.” Dr. Larsen went on to explain Mercedes-Benz’s strategy to deliver cars that meet the desires of the wealthy. His final observation about the wealthy was very insightful. “It’s hard to be rich without contradicting yourself.” At the present time, that comment helps to explain both the success and failure of EVs.

Exhibit 14. Gasoline Use Rising With VMT Increase

Source: DOT, PPHB

The observations of Dr. Larsen, a sociologist, about the attitudes of our youth about the future of the suburbs and connectivity will prove crucial for the future of the automobile industry and oil demand. If the suburbs survive and thrive, then the automobile’s role will remain central to the lives of the people living there. On the other hand, if young people shun the suburbs and elect to live in urban areas where they can utilize public transportation and concentrate more on their electronic interactions, then automobile demand will suffer as will fuel consumption, especially as more fuel-efficient vehicles come to dominate the nation’s vehicle fleet. Which of these two scenarios shapes the nation’s social and automobile future will determine the sustainability of the recent increase in vehicle miles traveled and rising gasoline consumption.

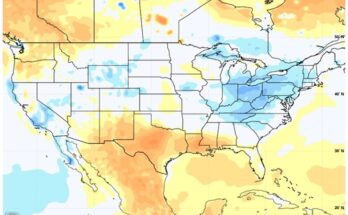

Below Average Hurricane Forecast Shifts Focus To Disasters (Top)

We are early into the 2015 Atlantic Basin hurricane season, which has been predicted to be “below average” for storm activity, but we have already experienced one named storm off the North Carolina coast even before the June 1st official start date for the season. The various storm forecasts we have seen – National Oceanic and Atmospheric Administration (NOAA) and StormGeo – essentially mirror the 2015 predictions from Dr. Phil Klotzbach of the Department of Atmospheric Science at Colorado State University (CSU). In his April 9th forecast, he called for the upcoming storm season to be one of the “least active seasons since the middle of the 20th century.” His forecast for seven named storms, three hurricanes and one major hurricane reflects his assumption that the El Niño weather phenomenon forming in the South Pacific will become at least a moderate force this summer and fall, impacting weather, temperature and wind conditions in the Atlantic basin in ways that will inhibit the formation and strengthening of tropical storms into hurricanes. The CSU hurricane forecasting team is also calling this year for a “below-average probability for major hurricanes making landfall along the United States coastline and in the Caribbean.”

In June, CSU updated its April storm prediction adding one storm to the expected total for named storms, going from seven to eight. The June forecast held constant its estimate for the number of hurricanes and major hurricanes. As other forecasts fell in line with the CSU forecast, suggesting a second consecutive year of very low storm activity, those meteorologists sympathetic to the climate change movement began focusing on how the lack of hurricanes landing on the U.S. coastline was due to luck, which they claim is likely to run out. As one article claimed, “recent decades have been quiet — maybe too quiet.”

An Associated Press article studying the issue utilized its own analysis along with that of Massachusetts Institute of Technology meteorology professor Dr. Kerry Emanuel. Dr. Emanuel wrote a number of papers and op-eds in 2005 and 2006, following those active storm years, that foresaw more highly active hurricane years, with many storms making U.S. landfall, in particular along the Gulf Coast region between Houston and New Orleans. His writings promoted global warming (the term climate change hadn’t been adopted yet) as the root cause of the more active hurricane seasons. He predicted that the frequency of hurricanes, and especially major hurricanes, would increase and that storms would grow progressively stronger, inflicting greater damage on the U.S. coast when they made landfall.

In the most recent newspaper article, Dr. Emanuel commented that “We’ve been kind of lucky. It’s ripe for disaster. … Everyone’s forgotten what it’s like.” There is certainly a lot of truth to that statement, as we can attest having grown up in New England where hurricanes were active in the 1950s. We also have to agree with Dr. Emanuel’s other quote. “It’s just the laws of statistics,” he said. “Luck will run out. It’s just a question of when.” Fortunately, Dr. Emanuel didn’t attempt to tie an increase in hurricane activity to climate change or global warming. Maybe he is now willing to acknowledge the cyclical pattern of factors that will bring a return of increased storm activity. The problem is that we are already in a period of more active storms, so either any increase in hurricanes will likely come soon, or conditions will change and reduce the likelihood of more storms.

Exhibit 15. U.S. Coastal Cities That Have Avoided Hurricanes

Source: AP/Reuters

The AP/Dr. Emanuel article contained the graphic in Exhibit 15 showing cities along the U.S. coastline that have not experienced a hurricane landfall in anywhere from 50 to 90+ years. Some of these cities will be targets of future hurricanes if history is any guide, which we will show with the aid of slides from a spring presentation by CSU’s Dr. Klotzbach on the cyclical nature of tropical storm activity in the Atlantic basin.

Exhibit 16. Hurricane And Major Hurricane Landfall

Source: Klotzbach/CSU

In an April 28th presentation at the Ironshore Hurricane Seminar, a supporter of the CSU hurricane forecasting team, Dr. Klotzbach presented the slide in Exhibit 16 (above) showing a nine-year moving frequency of hurricane and major hurricane activity on the U.S. coastline from 1878 to now. What is clear from the hurricane and major hurricane patterns is that there have been periods when storm activity was high and times when it was low. What also stands out on the chart is the vertical drop in storm impact in recent years, likely supporting Dr. Emanuel’s observation that it may be merely a matter of time before conditions change.

Exhibit 17. Atlantic Major Hurricanes Show Cyclicality

Source: Klotzbach/CSU

The cyclical pattern in the frequency of major hurricanes is demonstrated by the slide in Exhibit 17 showing the annual number of 6-hour periods of major hurricanes. Another way of looking at the data is to recognize that four 6-hour periods equal one full day of major hurricane time. Thus, what we see is major hurricane activity of just over three days per year in 1900-1925, seven days in 1926-1969, two and a half days in 1970-1994 and eight days in 1995-2014. There is clearly a cyclical pattern to major hurricane activity. What causes this cyclicality? Put simply, the analysis involves measuring various factors, some of which have proven to be more important at certain times of the year than others and in different periods of time.
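The conversion between 6-hour periods and days can be sketched as follows. The per-era day counts are the rounded values given in the text (“just over three days” is entered as 3.0), so the resulting period counts are approximations and not values read from the slide. The names used are illustrative.

```python
HOURS_PER_PERIOD = 6
PERIODS_PER_DAY = 24 // HOURS_PER_PERIOD  # four 6-hour periods in one day


def periods_to_days(periods: float) -> float:
    """Convert a count of 6-hour periods into full days."""
    return periods / PERIODS_PER_DAY


def days_to_periods(days: float) -> float:
    """Convert days of major hurricane activity into 6-hour periods."""
    return days * PERIODS_PER_DAY


# Approximate average days per year of major hurricane activity, per the text.
approx_days_per_year = {
    "1900-1925": 3.0,  # text says "just over three days"
    "1926-1969": 7.0,
    "1970-1994": 2.5,
    "1995-2014": 8.0,
}

approx_periods_per_year = {
    era: days_to_periods(days) for era, days in approx_days_per_year.items()
}

for era, periods in approx_periods_per_year.items():
    print(f"{era}: about {periods:.0f} six-hour periods per year")
```

On these rounded inputs, the two active eras show roughly two to three times the major hurricane time of the two quiet eras, which is the cyclicality the slide illustrates.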

Exhibit 18. Primary Driver For Atlantic Tropical Storms

Source: Klotzbach/CSU

THC stands for Thermohaline Circulation, which is a large-scale circulation in the Atlantic Ocean driven by fluctuations in the water’s salinity and temperature. AMO is the Atlantic Multi-Decadal Oscillation, which is a mode of natural variability that occurs in the North Atlantic and evidences itself in fluctuations in sea surface temperatures and sea level pressure fields. The CSU hurricane forecasting team bases its AMO on sea surface temperatures within 50-60°N and 50-10°W. It is also determined by sea level pressures within 0-50°N and 70-10°W.

Generally, when THC is stronger than normal, AMO tends to be in its warm or positive phase, meaning that typically more hurricanes form. What one sees in the slide in Exhibit 19 is that the AMO was warmer (the red areas in the positive part of the graph) during 1926-1969, while it was cooler from 1900 to 1926 and again during 1970-1994. The most recent years also appear to be in the warm phase along with the projection for the next few years. The AMO pattern matches the cyclicality of major storm activity.

Exhibit 19. Warm AMO Reflects Greater Storm Activity

Source: Klotzbach/CSU

What is also interesting to note is the number of major hurricanes and their geographic paths during three historical time periods that coincide with the warming and cooling since 1945. When we examine the paths of the major hurricanes during these three periods, as displayed in Exhibit 20, we see how many storms made landfall where the cities cited by AP/Dr. Emanuel are located. The 1945-1969 era experienced the largest number of major hurricanes, and many of them landed in the central portion of the Gulf Coast or went up the East Coast, striking or brushing many of the cities cited. In the less active era of 1970-1994, there were only a few storms that struck the Gulf Coast and almost none landed on the East Coast. The most recent era saw a large number of storms, as predicted by THC and AMO conditions. A number of the storms landed on the Gulf Coast, but again virtually none landed on the East Coast. Many more storms landed in the Caribbean and Mexico. Additionally, a large number of storms chewed up the North Atlantic without ever coming near the East Coast.

Exhibit 20. Hurricane Frequency Shows Cyclicality

Source: Klotzbach/CSU

The slide presented in Exhibit 21 shows the number of major hurricanes that landed on the East Coast divided into two 50-year time spans. The 1915-1964 period included the early cool years, but also a majority of the first warm period, and shows 22 major hurricanes, or about 2½ times the number of storms that came ashore during 1965-2014.

Exhibit 21. Early Years Had More Major Hurricane Landfalls

Source: Klotzbach/CSU

The data for hurricanes and major hurricanes over the past century demonstrates that there have been significant time spans when the Atlantic basin was active while there were other periods when it was inactive. Just because certain years were active didn’t necessarily translate into hurricanes landing along the U.S. East or Gulf Coasts. On the other hand, there were times when disastrous storms occurred in years with little activity. Examples include 1980, when Hurricane Allen landed on the Gulf Coast south of Corpus Christi and damaged central Texas, and 1992, when Hurricane Andrew destroyed much of south Florida. The point is that even in quiet years a major storm can cause significant destruction. More important, however, may be considerations such as steering winds and warm coastal waters that could cause hurricanes to travel up the East Coast rather than barreling into the Caribbean Sea and the Gulf of Mexico. When those winds change and become more like those experienced in the 1950s, we could expect hurricane paths to change dramatically from those experienced in 1995-2014.

What history and storm data show is that tropical storm activity is cyclical because meteorological conditions promoting or retarding hurricane development change. Potentially the greatest problem for us is, as Dr. Emanuel pointed out, that many people living in cities and areas along the coast have never experienced hurricanes, or haven’t for years, so they don’t appreciate how devastating they can be. Years of quiet storm activity have encouraged people to build homes in areas highly exposed to storm damage should a hurricane come ashore. Complacency is our biggest enemy.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.