- Latest Economic Statistics Are Not Good For Energy Market

- Alternative Fuel Vehicles Drawing Increased Attention

- Who Should Be Praying For A Normal Or Cooler Summer?

- After 7 ½ Months Of Lower Gas Rigs, Production Finally Falls

- NOAA Hedges About Storm Season Ahead; Others Agree

- Natural Gas Market Gains To Be Limited By Coal Prices

- How Are Global Warming And CAFE Standards Linked?

Musings From the Oil Patch

June 5, 2012

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Latest Economic Statistics Are Not Good For Energy Market

Last Friday morning the anxiously awaited U.S. jobs report for the month of May was released to gasps of disbelief by investment professionals and politicians. Before the government’s release, the assembled economic prognosticators on CNBC’s Squawk Box morning stock market show were debating forecasts calling for the American economy to have created anywhere from 125,000 to 165,000 jobs last month. The consensus estimate, as reported by the CNBC anchors, was about 150,000-155,000 jobs. One of the CNBC forecasters had reduced her estimate in the prior 24 hours after Thursday’s release of the ADP proprietary report of private sector job growth, which suggested that only 133,000 new positions had been created last month. When the CNBC reporter read the release from the Bureau of Labor Statistics (BLS) saying only 69,000 total new jobs had been created, with 82,000 being private sector jobs while the public sector lost 13,000 jobs, everyone reacted with utter shock.

Not only was the job creation number extremely weak, but the BLS revised prior monthly estimates of employment growth lower. The March employment number was reduced by 11,000 to 143,000 and April’s number was slashed by 38,000 to 77,000 jobs, barely above the May estimate. Other reported measures of the labor market’s health were also weak in May as the unemployment rate ticked up by one-tenth to 8.2% as more workers re-entered the labor force seeking jobs. The average workweek for all nonfarm employees edged down by 0.1 hours to 34.4 hours in May. Significantly, the manufacturing workweek declined by 0.3 hour to 40.5 hours, and factory overtime declined by 0.1 hour to 3.2 hours.

The average hourly earnings for private nonfarm workers increased by 2 cents to $23.41, which was a positive. Over the past 12 months, average hourly earnings have increased by 1.7%. Unfortunately, for the 12 months ending in April, inflation as measured by the Consumer Price Index increased 2.3%. If we average the annualized inflation rate for the first four months of 2012, we have a 2.7% rate, which exceeds the growth in average hourly earnings by a full percentage point, meaning that workers’ real income has declined. Falling gasoline prices, as they follow crude oil prices lower, suggest American consumers are starting to get some cost of living relief, but it probably won’t be enough to send them on a buying binge that would help jumpstart the economic recovery and, in turn, boost energy demand.
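The real-income point can be checked with a short calculation. The sketch below is only an illustration using the three percentages quoted above; the function name `real_growth` is ours, and the exact-ratio formula is one common way to deflate a nominal growth rate.

```python
# Real (inflation-adjusted) change in hourly earnings, using the figures quoted above.
nominal_growth = 0.017   # 12-month rise in average hourly earnings
cpi_12_month = 0.023     # CPI increase for the 12 months ending in April
cpi_2012_avg = 0.027     # average annualized inflation, first four months of 2012


def real_growth(nominal: float, inflation: float) -> float:
    """Exact real growth rate: (1 + nominal) / (1 + inflation) - 1."""
    return (1 + nominal) / (1 + inflation) - 1


for label, inflation in [
    ("12-month CPI", cpi_12_month),
    ("2012 year-to-date annualized CPI", cpi_2012_avg),
]:
    change = real_growth(nominal_growth, inflation)
    print(f"{label}: real earnings change = {change:.2%}")
```

Either inflation measure produces a negative real change, which is the sense in which workers’ real income has declined.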

The bad economic news released on Friday continued the string of bad data released earlier in the week: a revised assessment of GDP growth in the first quarter of 2012, down to 1.9% from the earlier estimate of 2.2%; a report by outplacement firm Challenger, Gray & Christmas that there were 61,887 layoffs in May, the largest monthly total since September 2011 when 115,730 workers were terminated; and a 6% decline in the stock market’s value in the month of May. Not only are U.S. economic statistics weak, but increasingly the data from other important countries around the world are showing deterioration. Take for example China, where the government’s official Purchasing Managers’ Index (PMI), which measures buying primarily by the large state-owned companies, fell in May to 50.4, which was below expectations of 52.2 and marked the largest monthly drop in over two years. The reading, however, was still positive. Numbers of 50 or above indicate that the economy is in an expansion mode; those below 50 signal contraction.

The PMIs for Australia and South Korea are also falling, and we have seen announcements by natural resource companies Rio Tinto (RIO-NYSE) and BHP Billiton (BHP-NYSE) that they are scaling back their plans to expand capacity in many of their mineral operations. Reduced capital spending by these companies means less equipment purchased and fewer new workers hired, which means less energy needed.

Everyone is well aware of the financial and economic turmoil in Europe. Virtually every country in Europe is either in a recession or close to entering one. This state of affairs is demonstrated by the Eurozone PMI charts in Exhibit 1 on the next page. In the regional chart, the Eurozone has the lowest reading. Within the Eurozone, Ireland is the only country with a PMI reading of 50 or above, signaling its economy is growing.

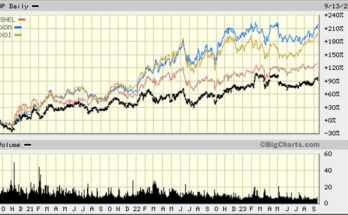

Exhibit 1. Global Economies Are Weakening

Source: Financial Post

These weak economic statistics contributed to a dramatic stock market sell-off last Friday when the popular market indices fell by about 2.5%. Not only were stocks down, but crude oil futures dropped by over $3 per barrel taking WTI back to barely above $83 per barrel, down 20% over the past two months. As the chart in Exhibit 2 demonstrates, as crude oil prices began their slide earlier this year oilfield service stocks followed. Last week, as oil futures prices declined by nearly $7.50 per barrel to close at an eight month low, or over an 8% drop for the week, the OSX was pummeled, falling by more than 7.5%. It was clear by the end of last week that investors expect the future for energy and oilfield service companies to be much more difficult than they had expected merely a few days prior.

Exhibit 2. Energy Stocks Are Tracking Oil Prices

Source: EIA, Yahoo Finance, PPHB

Weak economic statistics suggest falling oil demand in the future, which is not good for energy companies. To see what the growing economic weakness in North America and Europe has meant to global energy demand, one only needs to look at the change in the 2012 oil consumption estimate of the International Energy Agency (IEA) between its first forecast made last July and its most recent May projection. Globally, the consumption estimate has been cut by one million barrels per day. The Americas and Europe have both seen their demand estimates reduced by 400,000 barrels per day.

The demand cuts for those two regions were joined most recently by a cut to the demand estimate for Asia/Pacific. The Former Soviet Union is projected to see an increase in demand while Middle East consumption will fall by 100,000 barrels per day and Africa remains unchanged. Given the latest economic statistics from China and South Korea, we fully expect to see a further reduction in Asia/Pacific’s demand estimate.

Exhibit 3. Oil Demand Growth Estimates Falling

Source: IEA, PPHB

On a year to year basis, 2012’s oil demand was initially projected to grow by 1.5 million barrels per day in July 2011, but now it is expected to increase by only 800,000 barrels per day. Since we have not yet reached the mid-point of 2012, we will not be surprised to see demand in 2012 increase by only a few hundred thousand barrels per day. If the sovereign debt crisis in Europe is not resolved in the next six months and the U.S. economic recovery doesn’t accelerate, the weakening pace of economic activity in the Asia/Pacific region could contribute to little or no oil demand growth in 2013. That would seem to be what the stock market is saying, and would certainly present a challenge to the energy business.

Alternative Fuel Vehicles Drawing Increased Attention

Will it be cars powered by electricity or those running on natural gas that gain the greatest market share in the future? Electric vehicles (EV) are the favored option of the Obama administration because they are “pollution-free.” Unfortunately, several recent studies have shown that depending on the fuel source powering the generation plant, electricity can be quite polluting. In other words, how environmentally-friendly EVs are depends on where they are plugged in. This concern has not been the primary reason why EVs haven’t caught on with the American consumer. Rather, their high price and limited travel range on a single battery charge have held back increased market penetration.

Taking center stage now are vehicles powered by natural gas, either as compressed natural gas (CNG) or liquefied natural gas (LNG). The push to get more Americans into CNG- or LNG-powered cars has been driven (pardon the pun) by the natural gas shale revolution that has led to increased reserves and production and low prices. The consensus view held for the past decade is that natural gas will serve the domestic, and likely the global, energy market as the bridge fuel to take this country from its crude oil dependency to a country powered by clean renewable fuels. This view has evolved as natural gas production expanded well beyond what was expected and the price of the commodity descended to unimaginably low levels. Now, with the prospect of these supply and price conditions extending for decades, instead of being a bridge, natural gas is perceived as the next fuel to power the globe as we go through another historical energy transition. At this point we won’t debate the natural gas thesis but rather will focus on its impact on the transportation market and which vehicles drivers may be using in the future.

Somewhat ignored in this discussion about the future of the alternative fuel vehicle market is the role of hybrid vehicles, including gasoline/battery powered and plug-in hybrids. What we have found, as a result of an analysis by an executive leading a company active in the alternative-fuel vehicle market that was made available to us, is that the politically-popular view of EVs being the most environmentally-friendly mode of transportation may not be correct. The analysis was done at the end of 2010, but we have updated the fuel cost variables for more recent prices. The analysis was driven by the announcement by Toyota (TM-NYSE) that it was introducing a plug-in Prius, a variation on its extremely successful gasoline/battery hybrid. The plug-in version allowed for a fair comparison of pure electric vehicles versus highly efficient internal combustion engine vehicles. As the analysis stated, the two models are essentially the same car with the only variation being the propulsion system. The analysis eliminated key variables such as different vehicles and different battery technologies.

The technical details for the vehicles are listed below and were incorporated in the analysis.

- Gasoline economy: Popular reviews suggested a range of 45-55 miles per gallon. The mid-point (50 mpg) was used in the analysis.

- Battery-only range: About 13 miles, as published by Toyota.

- Battery energy capacity: 5.2 kilowatt-hours (kWh).

- Battery charging efficiency: About 90%, including battery internal efficiency and recharging power electronics efficiency.

- Battery re-charging time: About three hours.

Fuel costs used in the analysis:

- Cost of gasoline: $3.53 – national average for 2011 according to the Energy Information Administration (EIA).

- Cost of electricity: $0.118 per kWh – national average for residential electricity in 2011 according to EIA.

The analysis of commutes in the two model cars shows the following results:

- Cost of a pure electric round trip commute: (2 x 10 miles) x (5.2 kWh / 13 miles) / (0.90 charge efficiency) x ($0.118 per kWh) = $1.05

- Cost of gas/battery hybrid round trip commute: ((2 x 10 miles) / 50 mpg) x ($3.53 per gallon) = $1.41
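The two commute-cost formulas above can be reproduced in a few lines. This is a minimal sketch that uses only the vehicle parameters and 2011 fuel prices listed earlier; the constant and function names are our own labels, not part of the original analysis.

```python
# Vehicle parameters as listed in the text.
ROUND_TRIP_MILES = 2 * 10      # 10 miles each way
BATTERY_KWH = 5.2              # battery energy capacity
BATTERY_RANGE_MILES = 13       # battery-only range
CHARGE_EFFICIENCY = 0.90       # battery plus charger efficiency
HYBRID_MPG = 50                # mid-point of reviewed fuel economy


def electric_commute_cost(price_per_kwh: float) -> float:
    """Cost of the round trip on grid electricity, measured at the outlet."""
    wall_kwh = ROUND_TRIP_MILES * (BATTERY_KWH / BATTERY_RANGE_MILES) / CHARGE_EFFICIENCY
    return wall_kwh * price_per_kwh


def hybrid_commute_cost(price_per_gallon: float) -> float:
    """Cost of the round trip on gasoline in hybrid mode."""
    return ROUND_TRIP_MILES / HYBRID_MPG * price_per_gallon


print(f"Pure electric: ${electric_commute_cost(0.118):.2f}")
print(f"Hybrid mode:   ${hybrid_commute_cost(3.53):.2f}")
```

Rounded to the cent, the results match the $1.05 and $1.41 figures in the list.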

At the time the analysis was originally prepared, gasoline cost $2.73 per gallon and residential electricity cost $0.115 per kWh. Using those parameters, the pure electric trip cost $1.02 while the hybrid mode commute cost $1.09. The cost advantage for the pure electric vehicle was only 7 cents. Today, it is 36 cents, which shows the sensitivity of EV economics to gasoline prices. Between December 2010 and now, the price of gasoline increased 29% while the cost of residential electricity only rose 2.6%. Low natural gas prices, which also helped drive down coal prices, contributed to the muted increase in electricity costs. As an alternative, we also calculated the pure electric commute cost assuming the cost of electricity rose by one penny over its December 2010 price, which increased the round trip commute cost by 9 cents to $1.11.
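The price sensitivity described in this paragraph can be laid out as three scenarios. The sketch below assumes the same vehicle parameters as the analysis; the scenario labels and the helper `ev_advantage` are illustrative names of ours.

```python
# Sensitivity of the commute-cost comparison to fuel prices.
# Vehicle parameters and prices are the ones quoted in the text.
ROUND_TRIP_MILES = 2 * 10
WALL_KWH = ROUND_TRIP_MILES * (5.2 / 13) / 0.90   # kWh drawn from the outlet
GALLONS = ROUND_TRIP_MILES / 50                   # gallons burned at 50 mpg


def ev_advantage(gas_price: float, kwh_price: float) -> float:
    """Hybrid cost minus electric cost; positive means the EV trip is cheaper."""
    return GALLONS * gas_price - WALL_KWH * kwh_price


scenarios = {
    "December 2010 prices": (2.73, 0.115),
    "2011 average prices": (3.53, 0.118),
    "December 2010 gasoline, electricity one cent higher": (2.73, 0.125),
}
for name, (gas, kwh) in scenarios.items():
    print(f"{name}: EV advantage = ${ev_advantage(gas, kwh):+.2f}")

# Price changes between the two dates.
print(f"Gasoline change:    {3.53 / 2.73 - 1:.1%}")
print(f"Electricity change: {0.118 / 0.115 - 1:.1%}")
```

In the third scenario the difference turns slightly negative: the $1.11 electric commute would cost about two cents more than the $1.09 hybrid commute at the December 2010 gasoline price.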

While the executive preparing the analysis looked at some other issues, we found those to be less significant than his key conclusions about the pollution impact of the “battery only” vehicle option. He assumed that the national average electricity power generation efficiency was equal to 32% based on an examination of the chart of estimated U.S. energy use in 2009 prepared by the Lawrence Livermore National Laboratory. He also used a value of 130,000 kilojoules (kJ) of energy in a gallon of gasoline. Based on these assumptions, the analysis of the total energy used in the round trip commutes was as follows:

- Pure electric round trip commute: (2 x 10 miles) x (5.2 kWh / 13 miles) / (0.90 charge efficiency) / (0.32 electric power-plant efficiency) x (3600 kJ/kWh) = 100,000 kJ

- Hybrid mode round trip commute: (2 x 10 miles) / (50 mpg) x (130,000 kJ/gallon of gasoline) = 52,000 kJ
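The primary-energy comparison follows the same pattern as the cost calculation, with the power-plant efficiency added to the electric path. This is a sketch under the analysis’s stated assumptions (32% generation efficiency, 130,000 kilojoules per gallon); the variable names are ours.

```python
# Primary energy consumed by each round trip, in kilojoules.
ROUND_TRIP_MILES = 2 * 10
KWH_PER_MILE = 5.2 / 13          # battery capacity over battery-only range
CHARGE_EFFICIENCY = 0.90
PLANT_EFFICIENCY = 0.32          # assumed national average generation efficiency
KJ_PER_KWH = 3600
HYBRID_MPG = 50
KJ_PER_GALLON = 130_000          # assumed energy content of gasoline

# Electric path: battery energy -> outlet energy -> fuel burned at the power plant.
electric_kj = (
    ROUND_TRIP_MILES * KWH_PER_MILE / CHARGE_EFFICIENCY / PLANT_EFFICIENCY * KJ_PER_KWH
)
# Hybrid path: gallons burned times energy per gallon.
hybrid_kj = ROUND_TRIP_MILES / HYBRID_MPG * KJ_PER_GALLON

print(f"Pure electric: {electric_kj:,.0f} kJ")
print(f"Hybrid mode:   {hybrid_kj:,.0f} kJ")
print(f"Ratio:         {electric_kj / hybrid_kj:.2f}x")
```

The ratio is sensitive to the 32% efficiency assumption; a more efficient generating fleet would shrink the gap proportionally.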

The total energy consumed by the “pure electric” vehicle is almost twice the total energy consumed for the gasoline/battery hybrid one. This speaks to the challenge alternative fuels face in trying to displace the high efficiency of gasoline engines and the associated efficiency of the mechanical drivetrain. But what does this mean in terms of greenhouse gas (CO2) emissions? The executive preparing the analysis estimated the amount of CO2 emitted by each fuel for the various modes of travel:

- Total CO2 emitted from hybrid mode (gasoline) round trip commute = 3.4 kilograms (kg)

- Total CO2 emitted from hybrid mode (natural gas) round trip commute = 2.3 kg

- Total CO2 emitted from pure electric round trip if power is from a natural gas-fired electric plant = 5.1 kg

- Total CO2 emitted from pure electric round trip if power is from a coal-fired electric plant = 10.4 kg

- Total CO2 emitted from pure electric round trip if power is from the average of all electric power plants (coal, natural gas, nuclear and renewables) = 6.0 kg

This analysis highlights two points: 1) a natural gas-powered hybrid is considerably more environmentally-friendly than a gasoline-powered one; and 2) even if all the electricity comes from a natural gas-powered plant, an EV is not as environmentally-friendly as a gas/battery hybrid vehicle, especially one powered by natural gas.

In April, the Union of Concerned Scientists issued a report designed to show that EVs were the only vehicle acceptable in a world of global warming. They looked at the amount of pollution from electric power generating plants in 50 cities around the country to see how an EV compared in each city based on a miles-per-gallon comparison of emissions of a gasoline-powered car. What they found was that in regions of the country where coal-fired power plants predominate for electricity generation, EVs compare to gasoline-powered vehicles with much lower mpg ratings than in regions with cleaner electricity such as California. The New York Times, when it wrote about the study, created an interactive model to show the pollution rating based on a gasoline-powered vehicle’s mpg rating for many cities in the country.

One chart from the Union of Concerned Scientists’ study is presented in Exhibit 4. It is hard to read, but it shows the study’s conclusion that if a vehicle is powered exclusively by solar or wind, there will be zero emissions. If powered by the cleanest grid region in the country, the vehicle would emit 100 grams of pollution per mile driven, which compares with the best hybrid (50 mpg) that emits 225 grams per mile. The dirtiest grid region would release 340 grams per mile driven while the average new compact car today with a 27-mpg rating would emit 415 grams. The point of the chart is to show how EVs gain on a pollution basis compared to others even if the source of electricity is dirty. The values in the chart were determined based on full fuel-cycle accounting.
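The grams-per-mile figures can be restated as rough gasoline mpg-equivalents. The sketch below is our own back-of-the-envelope conversion, not the study’s method: it assumes a single grams-per-gallon factor backed out of the two gasoline data points quoted above (50 mpg at 225 grams and 27 mpg at 415 grams per mile).

```python
# Chart pairs quoted in the text: (mpg rating, grams of emissions per mile).
gasoline_points = [(50, 225), (27, 415)]

# ASSUMPTION: average the implied grams-per-gallon of the two points.
# This factor is backed out of the quoted numbers, not taken from the study.
GRAMS_PER_GALLON = sum(mpg * grams for mpg, grams in gasoline_points) / len(gasoline_points)


def mpg_equivalent(grams_per_mile: float) -> float:
    """Gasoline mpg rating that would emit the same grams per mile."""
    return GRAMS_PER_GALLON / grams_per_mile


for label, grams in [("cleanest grid region", 100), ("dirtiest grid region", 340)]:
    print(f"EV on {label}: about {mpg_equivalent(grams):.0f} mpg-equivalent")
```

On this rough conversion, an EV charged in the dirtiest grid region lands between the average compact car and the best hybrid, which is consistent with the ordering the chart describes.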

Exhibit 4. How Dirty EVs Are Depends On Location

Source: Union of Concerned Scientists

This study would seem to be counter to the above analysis of pure-electric mode commutes compared to hybrid mode trips. We are not sure that the full fuel-cycle accounting truly measures the energy requirement of the vehicles, which also helps explain EV performance issues. We found it interesting that one of the leading auto parts manufacturers, Delphi Automotive PLC (DLPH-NYQ), is working on a gasoline engine that operates like a diesel engine and will be able to yield fuel performance equal to the best hybrids at less cost, and presumably with fewer emissions, too.

Delphi’s approach, called gasoline-direct-injection compression ignition, combines a collection of engine-operating strategies that make use of advanced fuel injection and air intake and exhaust controls. Many of these technologies are available on advanced engines today. The company found that if it injected the gasoline in three precisely timed bursts, it could avoid the too-rapid combustion that’s made some previous experimental engines too noisy. At the same time, the engine could burn the fuel faster than in conventional gasoline engines.

Exhibit 5. Delphi’s One-cylinder Test Engine

Source: Delphi Automotive PLC

Diesel engines do not use a spark to ignite the fuel. Instead, diesel engines compress air until it is so hot that fuel injected into the combustion chamber ignites. Attempting to do this with gasoline engines has proven very challenging especially under the wide range of loads put on them such as when the car idles, accelerates and cruises at various speeds. So far, Delphi has demonstrated this technology with a single-piston test engine under a wide range of operating conditions. It is beginning tests on a multi-cylinder engine that will more closely approximate a production engine. This is just one of many initiatives the auto industry has underway to improve conventional gasoline engines because they remain the most efficient converter of fuel into power. Transportation may be one segment of the energy market where “all of the above” makes sense. Unfortunately, politicians want to rush to pick one technology they believe is superior (EVs), while stifling others in their haste. It brings to mind the old expression: “Haste makes waste.”

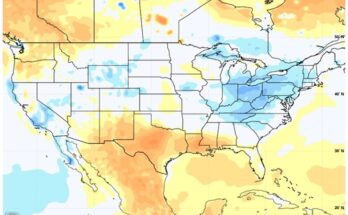

Who Should Be Praying For A Normal Or Cooler Summer?

The Energy Information Administration (EIA) published a chart on its web site last week showing the estimated electric power reserve margin and target for this summer as projected by the North American Electric Reliability Corporation (NERC). Based on the data, those of us who live in Texas should hope 2012 will not be a repeat of the heat wave that dominated last summer’s weather pattern. Because the Texas power industry has the lowest reserve margin of any region in the nation at 13% and is not interconnected to the rest of the nation’s power grids, a repeat could turn the Lone Star State into the “Blackout State” in 2012. Only two of the 14 regions besides Texas have reserve margins in the teens.

Exhibit 6. Most Populous States At Risk For Power

Source: EIA from NERC

What is particularly interesting in the map the EIA produced is that the reserve margins estimated by NERC show the three states most at risk of power blackouts happen to be the top three states ranked by population. The nation’s fourth most populous state is Florida, which has an estimated reserve margin of 30%. Already, ERCOT, the power regulation body for Texas, is proposing raising power prices this summer to help shed electric load and thus increase the implied reserve margin to avoid blackouts. If the summer has normal temperatures, Texas should have adequate power supplies; otherwise, residents should prepare for potential blackouts.

After 7 ½ Months Of Lower Gas Rigs, Production Finally Falls

Since the natural gas rig count peaked in early October 2011, we have had roughly seven and a half months of lower gas drilling. We acknowledge that the shift from rigs focused on drilling for dry natural gas in favor of drilling liquids-rich gas plays can actually lead to more gas production. But it is important to understand that at the time the gas rig count peaked, natural gas prices were in the $3.50-$3.70 per thousand cubic feet (Mcf) range, down from about $4 only a few months earlier. The lack of gas demand due to the weak economic recovery and growing gas shale production was signaling lower future natural gas prices. What actually happened was that the nation experienced one of the warmest winters in years that erased any hope for a surge in natural gas consumption. With reduced winter storage withdrawals, in contrast to the normal pattern, natural gas prices have collapsed.

We and others argued that it was going to take a serious reduction in rigs drilling for natural gas – both dry and liquids-rich – to curtail the growth in gas production. For months, the analysts and industry professionals have been watching the monthly gas production data reported by the Energy Information Administration (EIA) based on its Form 914 survey of producers. The data arrives with about a two month delay.

Last week the Form 914 production figures for the month of March were released. The data showed that total natural gas production in the United States was 81.76 billion cubic feet per day (Bcf/d), down from 82.38 Bcf/d in February and the January peak of 83.16 Bcf/d. The national total obscures the fact that gas production in the Gulf of Mexico was up to 4.69 Bcf/d from 4.56 Bcf/d in February and that Alaskan production fell to 9.99 Bcf/d from 10.04 Bcf/d. When you subtract Gulf of Mexico and Alaskan production from the national total, you get the production for the onshore Lower 48 States. That production was down to 67.07 Bcf/d from 67.78 Bcf/d the prior month and 68.19 Bcf/d in January, a two-month decline of over 1.5%.
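The subtraction described above is easy to verify. The sketch below uses only the figures quoted in this paragraph; the function name `lower48_onshore` is our own label. January’s Gulf of Mexico and Alaskan volumes are not given in the text, so the decline is computed from the Lower 48 figures as quoted.

```python
# Monthly averages in Bcf/d as quoted in the text: (U.S. total, Gulf of Mexico, Alaska).
reported = {
    "February": (82.38, 4.56, 10.04),
    "March": (81.76, 4.69, 9.99),
}


def lower48_onshore(total: float, gulf_of_mexico: float, alaska: float) -> float:
    """Onshore Lower 48 output: national total minus Gulf of Mexico and Alaska."""
    return total - gulf_of_mexico - alaska


for month, figures in reported.items():
    print(f"{month}: {lower48_onshore(*figures):.2f} Bcf/d")

# Two-month decline using the Lower 48 figures the text quotes directly.
january, march = 68.19, 67.07
decline_pct = (january - march) / january * 100
print(f"January to March decline: {decline_pct:.1f}%")
```

Subtracting the rounded March components gives 67.08 Bcf/d rather than the 67.07 Bcf/d quoted, a difference that presumably reflects rounding in the underlying survey data.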

Exhibit 7. Gas Production And Rigs Are Falling

Source: EIA, Baker Hughes, PPHB

A plot of Lower 48 onshore gas production versus the Baker Hughes count of drilling rigs targeting natural gas shows how the relationship between drilling and production has tracked over the past few years since the gas shale revolution developed. Because of the lag in reporting production data, we have the advantage of two additional months of drilling rig data to project the likely trend in future reported gas output. As shown in Exhibit 7 (above), the number of gas drilling rigs has continued its decline suggesting that Lower 48 gas production has continued to fall. We will only know whether that assumption is correct in the beginning of August. Part of the explanation for the production decline, however, may be selective curtailments by producers such as Chesapeake Energy (CHK-NYSE) and Encana (ECA-NYSE), among others. What we don’t fully know is exactly how much output has been voluntarily restricted by producers. In the same vein, we don’t know how much output from older gas shale wells has fallen as their production matures. This phenomenon will set up the treadmill need of producers to accelerate gas well drilling at some point in order to sustain and grow gas production.

Exhibit 8. One Major Basin Appears In Decline

Source: Art Berman and Lynn Pittinger

Analytical work by Art Berman and Lynn Pittinger shows that gas production from the Haynesville field, one of the major sources of output growth over the past few years, has peaked and is beginning to decline. Since this is a dry gas basin, low natural gas prices are limiting the industry’s effort to drill in the region to offset declining production. A similar situation is developing in the Barnett formation in North Texas. Without an aggressive drilling effort, it will be a challenge for the industry to boost production. If demand for gas does pick up due to greater use for power generation, more industrial consumption or even an exceptionally hot summer and an early cold winter, the E & P industry could be looking at gas prices in the $4-plus range by December. Wouldn’t that be a pleasant surprise?

NOAA Hedges About Storm Season Ahead; Others Agree

A few days prior to the official start of the 2012 hurricane season, the National Oceanic and Atmospheric Administration (NOAA) issued its first forecast for storm activity. The forecast was introduced about the same time tropical storm Beryl, the second named storm of the season, was being watched as it formed off the coasts of northern Florida and Georgia. The storm, which grew just short of becoming a hurricane, came ashore north of Fort Lauderdale, Florida during the Sunday night of Memorial Day weekend. So prior to the June 1st start of the hurricane season, the country has already experienced two tropical storms with one making landfall.

NOAA’s 2012 forecast calls for a 70% chance of between nine and 15 named tropical storms (with top winds of 39 miles per hour or higher), between four and eight hurricanes (top winds of 74 mph or more) with one to three becoming major hurricanes (top winds of 111 mph or greater). The government’s Climate Prediction Center characterizes the forecast as “a less active season compared to recent years.” In the headline for the NOAA press release announcing the forecast, the season is characterized as “a near-normal 2012 Atlantic hurricane season.” In light of two storms before the start of the season, we wonder whether NOAA will be going back to the drawing board for a new forecast anytime soon.

The NOAA forecast is based on current climatic conditions along with the possibility that two climatic forces that could limit the formation of storms might strengthen as the storm season progresses. The Atlantic basin is experiencing a continuation of the overall conditions associated with the high-activity era that began in 1995. In addition, sea surface temperatures across most of the tropical Atlantic Ocean and Caribbean Sea are near-average, which is favorable for storm formation and strengthening. The two forces emerging that could limit the development of storms and their strengthening are strong wind shear and cooler sea surface temperatures in the far eastern Atlantic Ocean. Another factor that could impede storm development would be the development of El Niño in the Pacific Ocean that cools the Atlantic basin sea temperatures and increases shear winds. NOAA acknowledges that an El Niño could shift its forecasts to the lower end of its ranges.

The NOAA forecast is consistent with the June 1st forecast by Phil Klotzbach and William Gray of the Department of Atmospheric Science at Colorado State University (CSU). Their revised forecast for the season calls for 13 named storms, five hurricanes and two major hurricanes. Their increased forecast reflects their expectation that El Niño may not develop this summer. CSU also produces an estimate of the potential for tropical storm landfalls. The projections for this season call for a 48% chance of landfall somewhere along the U.S. coastline (historical chance is 52%). There is a 28% (31% historical) chance of landfall along the East Coast including the Florida peninsula, while a storm coming ashore along the Gulf Coast has a 28% chance (30% historical) of happening. An early start to the tropical storm season may be significant, but quite likely it will mean very little by the end of the year.

Natural Gas Market Gains To Be Limited By Coal Prices

It appears natural gas prices bottomed at $1.91 per thousand cubic feet (Mcf) on April 19th. A surprising rally began that carried prices to $2.74/Mcf by late May. The rally was driven largely by expectations for natural gas to continue growing its share of the power generation market from coal-fired plants. Low natural gas prices have made it easier for electric power generators to use their gas-fired plants instead of coal-fired ones, not only because the cost of the fuel was lower but also because gas plants produce fewer greenhouse gas emissions.

Exhibit 9. Economy Stops Gas Price Rally

Source: EIA, PPHB

At various meetings, power company executives have been quoted saying that they have been using their gas-fired peaking power plants for base load electricity generation due to the lower cost of the fuel. As a result, according to the Energy Information Administration (EIA), the share of power generated by burning coal recently fell to 34%, the lowest of any month since 1973. The peak share of coal-fired power generation occurred in 1985 and remained high for most of the 1980s when natural gas was restricted by law from being burned as a boiler fuel due to its perceived shortage. Once fuel-use restrictions were removed by the Reagan administration, natural gas steadily gained market share in the power generation market, principally due to its use for peaking power plants, which have been concentrated on combined cycle gas turbines (CCGT) as the cheapest and most efficient plants to meet this need. These peaking plants can ramp up to peak generating capacity rapidly, enabling their use to meet electricity demand surges. These are also the preferred backup plants for renewable power facilities that are by their nature variable in their power output and thus create periods when power supply surges are needed.

As natural gas prices have fallen in recent months and the government continues to wage a campaign to restrict the use of coal-fired power plants, CCGTs have been the electric power industry’s preferred response for meeting demand. The result has been that the share of power generated by natural gas has risen to about 30% from its historical (1973-2012) average of 15.5%. This gain contrasts with coal’s loss of market share. In March 2012, coal only produced 34.3% of all power generation for the month, which is down from its historical average of 50.3%.

Exhibit 10. Gas Taking Power Share From Coal

Source: EIA

The impact of the market share gain by natural gas has been a significant increase in coal inventories at power plants. In March, coal stocks at power plants totaled 196.4 million tons, up 18% from March 2011. This was the highest monthly total since November 2009. The March inventory is unusual for this time of the year as typically coal stocks peak in late fall and late spring.

Exhibit 11. Low Gas Prices Boost Coal Inventories

Source: EIA

As a result of the build-up of coal stocks and the market share gain by natural gas generation capacity, coal prices declined. A chart prepared to accompany an article published by The Wall Street Journal shows how natural gas and coal prices, based on British thermal units, converged in the second half of 2011 and then actually came together in 2012. The recent rebound in natural gas prices appears to be opening a window for coal to regain some lost power generation market share. That relationship is best demonstrated by the chart in Exhibit 12.

Exhibit 12. Cheap Gas Has Driven Coal Prices Down

Source: The Wall Street Journal

Energy Intelligence has calculated the value (Exhibit 13) at which it makes economic sense for a power plant operator to switch from burning natural gas to burning coal. As shown in that chart, natural gas prices (in red) have rebounded and are moving toward the indifference value (in blue) between coal and gas. At that point, power plant operators may consider other factors besides just the economic cost of gas versus coal. Will the regulatory pressures on power plants to stop burning coal become a more important factor in raising the blue line?

Exhibit 13. Gas Advantage Narrows

Source: New Energy
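The indifference value between coal and gas can be approximated with a simple fuel-cost comparison. The sketch below is not Energy Intelligence's method. It assumes the switching decision depends only on fuel cost per megawatt-hour, and the coal price and plant heat rates are hypothetical placeholders chosen purely for illustration.

```python
# Minimal sketch of a coal-versus-gas fuel-switching breakeven.
# All numeric inputs below are hypothetical; none come from the article.

def fuel_cost_per_mwh(price_per_mmbtu: float, heat_rate_btu_per_kwh: float) -> float:
    """Fuel cost in $/MWh for a plant with the given heat rate."""
    # Btu/kWh * 1,000 kWh/MWh / 1,000,000 Btu/MMBtu = MMBtu/MWh
    mmbtu_per_mwh = heat_rate_btu_per_kwh / 1000.0
    return price_per_mmbtu * mmbtu_per_mwh


def breakeven_gas_price(coal_price_per_mmbtu: float,
                        coal_heat_rate: float,
                        gas_heat_rate: float) -> float:
    """Gas price ($/MMBtu) at which gas and coal fuel costs per MWh are equal."""
    return coal_price_per_mmbtu * coal_heat_rate / gas_heat_rate


# Hypothetical inputs: delivered coal price and typical-looking heat rates.
coal_price = 2.40          # $/MMBtu (assumed)
coal_heat_rate = 10000.0   # Btu/kWh (assumed coal steam plant)
gas_heat_rate = 7000.0     # Btu/kWh (assumed CCGT)

breakeven = breakeven_gas_price(coal_price, coal_heat_rate, gas_heat_rate)
coal_cost = fuel_cost_per_mwh(coal_price, coal_heat_rate)
gas_cost_at_breakeven = fuel_cost_per_mwh(breakeven, gas_heat_rate)

print(f"Breakeven gas price: ${breakeven:.2f}/MMBtu")
print(f"Coal fuel cost: ${coal_cost:.2f}/MWh")
print(f"Gas fuel cost at breakeven: ${gas_cost_at_breakeven:.2f}/MWh")
```

Because a CCGT converts fuel to power more efficiently than a coal plant, gas can cost more per Btu than coal and still be the cheaper fuel per megawatt-hour. Regulatory costs imposed on coal would raise the breakeven further, which is the question posed about the blue line.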

The increase in natural gas demand, due to its greater use as a fuel to generate electricity, has been a pleasant surprise. The industry is hoping for increased industrial use and possibly for natural gas to become a significant transportation fuel – markets perceived as providing more stable demand and potentially offering new growth opportunities. Recent natural gas gains in the power generation market may be limited in the future due to the decline in coal prices and the buildup of coal inventories. If the natural gas industry can demonstrate it can increase reserves and production at current low prices, then there may be hope for gas to carve out a larger and more permanent share of the energy supply mix. The jury is still out about the economics of natural gas.

How Are Global Warming And CAFE Standards Linked?

The unseasonably warm first third of this year has motivated proponents of global warming to produce studies showing how deadly heat can be for people. The most recent study was released by the Natural Resources Defense Council (NRDC) and titled, “Killer Summer Heat: Projected Death Toll from Rising Temperatures in America Due to Climate Change.” The study was based on a review of independent peer-reviewed scientific papers. It focuses on one paper in particular that was published in Weather, Climate and Society, a journal of the American Meteorological Society. The NRDC used the paper’s methodology to estimate the impact of global warming on deaths in the U.S.’s 40 largest cities.

The study used a “sophisticated climate model from the National Center for Atmospheric Research” to generate two scenarios for future temperatures. One accepted no slowing in the rate of increase in carbon emissions while the other assumed public intervention that allowed carbon emissions to reach levels above current levels but not as high as in the uncontrolled scenario. The authors developed two sets of algorithms to describe the relationship between temperatures, weather conditions and mortality. One algorithm used data from 1975-1995 while the other used the longer period, 1975-2004, that encompassed years in which cities had begun taking preventive measures to reduce the health impact of heat. Other considerations in the methodology included making no modifications for population growth in the cities studied or adjusting for demographic changes such as the aging of the population that is most susceptible to heat-related health problems, those 65 years old and older. The study also did not take into account potential acclimatization to increased heat by individuals. The study also assumed a steady increase in temperatures as opposed to a possible path marked by periods of rapid temperature increases with other periods marked by modest or no temperature increases. While the authors of the study claim their study’s results tend to be conservative, we wonder about that conclusion. Maybe that is because we don’t understand the study’s algorithms.

There are two tables in the report – one showing the number and progression of excessive-heat-event (EHE) days during the summer while the other shows the increases in EHE-attributable summer mortality caused by climate change. Washington, D.C. experienced the greatest number of EHE days per summer over the period 1975-1995 with 16. The next greatest number was 11, which was recorded by four cities – Boston, Dallas, New York City and St. Louis. By focusing on these four we can watch how the models’ results work. By the end of the century, the increase attributed to climate change, added to the historical number, puts Boston at 71 EHE days, Dallas at 37, New York City at 75 and St. Louis at 45. Based on these results, one would expect New York City and Boston to experience the greatest increase in mortality with St. Louis and Dallas ranking third and fourth.

The historical mortality rate for 1975-2004 for each of the four cities was: Boston with 99 deaths, Dallas with 46, New York City with 184 and St. Louis with 24. Matching the mortality experience with the increase in EHE days, one would still put Boston and New York City at the top of the list and Dallas and St. Louis at the bottom. According to the report, however, Dallas came in as number one with a year-after-year total mortality due to climate change of 7,271 deaths. Boston with 5,715 deaths barely edges out St. Louis with 5,621 deaths. Surprisingly, New York City is projected to experience only 1,127 incremental deaths.

In the case of New York City, the model projects that by the middle of the century, climate change will actually decrease the summer average mortality by 2, leaving a rate of 182. Therefore, cumulatively to mid-century, the table projects a decrease in heat-related deaths due to climate change of only 573. Based on the data in the table, only four other cities experience mortality declines due to climate change. Those cities were Atlanta, Philadelphia, Seattle and Tampa. Interestingly, both Atlanta and Seattle are projected to experience declines in mortality for the entire century.

Local readers of the Musings might be interested to learn that Houston averaged only one summer EHE day historically, which is projected to increase to 13 days by the end of the century. In terms of mortality, Houston’s historical summer rate is two, but the cumulative deaths for the century as a result of climate change will total 1,391. Given these results (and the other cities), we are confused about the model. But then, I doubt the NRDC really is interested in explaining why results appear to be all over the map. What they were interested in was that the total of deaths for these 40 cities will reach 150,322. It was the magnitude of this number that NRDC emphasized in its press release. As expected, the environmental blogs and web sites were quick to highlight the large number of projected deaths that would occur in America due to global warming if nothing was done to avert the rise in temperatures.

The problem with the study is that the results conflict with data from most other studies. For example, an article in the Southern Medical Journal in 2004 by W.R. Keatinge and G.C. Donalson of Queen Mary’s School of Medicine and Dentistry at the University of London titled “The impact of global warming on health and mortality,” contained the following statement: “Cold-related deaths are far more numerous than heat-related deaths in the United States, Europe, and almost all countries outside the tropics, and almost all of them are due to common illnesses that are increased by cold.”

An important study in 2008 by two UK health organizations – the Department of Health and the Health Protection Agency – concluded that the "mean annual heat-related mortality did not rise as summers warmed from 1971 to 2003." According to the authors, people are able to acclimate to hotter weather over time. As they further wrote, "Heat-related mortalities are substantial throughout Europe, but the hot summers in southern Europe cause little more mortality than the milder summers of more northerly regions."

To support their claim about populations acclimatizing to warming temperatures, the authors reported the following change in deaths per million of population between 1971 and 2003:

- Southeast England from 258 to 193 in 2003

- Rest of England and Wales from 188 to 93

- Scotland from 125 (in 1974) to only eight in 2003

The study also reported that deaths due to cold weather fell dramatically – by more than 33%. However, there are many more deaths due to cold weather than hot, as the following statistics demonstrate for the period from 1971 to 2003.

- Southeast England from 9,174 to 5,903

- Rest of England and Wales from 9,222 to 6,088

- Scotland from 9,751 in 1974 to 6,166 in 2003

The impact of air conditioning on heat-related deaths has been dramatic in the United States. According to one large study, heat-related mortality in 28 major U.S. cities from 1964 through 1998 dropped from 41 deaths per day in the 1960s to only 10.5 per day in the 1990s. And in Germany, data shows that heat waves reduced mortality slightly while deaths due to cold temperatures increased significantly. And lastly, the following data from the United States National Center for Health Statistics for 2001-2007 shows that on average 7,200 Americans die each day during the months of December, January, February and March, compared to the average of 6,400 who die daily during the rest of the year. Based on this calculation, there are 95,000 additional deaths during the 121 days of winter months, assuming a non-leap year. All of these studies and data would appear to refute the NRDC study’s conclusions.
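The winter excess-death figure can be checked with simple arithmetic. The sketch below uses only the rounded daily averages quoted above. With those inputs the result is 96,800, in line with the roughly 95,000 cited; the small gap presumably reflects rounding in the daily averages, which is an assumption rather than something the source data confirm.

```python
# Rough check of the winter excess-death estimate using the rounded
# daily averages quoted in the text (2001-2007 averages).

WINTER_DAILY_DEATHS = 7200         # Dec-Mar average deaths per day
REST_OF_YEAR_DAILY_DEATHS = 6400   # average deaths per day, other months

# December, January, February (non-leap year) and March
WINTER_DAYS = 31 + 31 + 28 + 31

excess_per_day = WINTER_DAILY_DEATHS - REST_OF_YEAR_DAILY_DEATHS
excess_winter_deaths = excess_per_day * WINTER_DAYS

print(f"Winter days: {WINTER_DAYS}")
print(f"Excess deaths per winter day: {excess_per_day}")
print(f"Excess deaths per winter: {excess_winter_deaths:,}")
```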

A greater issue, however, may be that the new corporate average fuel efficiency (CAFE) standards agreed to last year by auto manufacturers and the government may produce an accident death total that overwhelms the NRDC’s heat-related death forecast. The CAFE standards are part of the federal government’s efforts to reduce oil consumption in this country and cut our carbon emissions, which would address the heat-related death issue the NRDC is concerned about. The primary problem with fuel-efficiency standards is that they normally are achieved by reducing vehicle weight through the use of lighter and thinner materials. A substantial amount of weight was removed from vehicles in the 1970s and 1980s in order to reduce vehicle fuel consumption, but when crude oil prices crashed in the mid-1980s, vehicles got bigger and, as a result, heavier, slowing the pace of improvement in average fuel-efficiency for America’s new car fleet.

The CAFE standards agreed to last year will boost the fleet rating from 35.5 miles per gallon (mpg) to 54.5 mpg. Buried in the agreement is a scheme enabling auto manufacturers to inflate the number of electric and hybrid vehicles used in the fleet fuel calculation. Car companies will be able to count each electric vehicle sold as two high-mileage units and each hybrid as 1.7 units sold. This scheme will enable Detroit to continue building and selling big cars, SUVs and light trucks, the most profitable vehicles in their line-ups. Estimates are that due to this scheme, the actual CAFE will only reach the mid-40s mpg range rather than the mid-50s.
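To see how a counting multiplier can lift a reported fleet average without changing the vehicles actually sold, consider the sketch below. It assumes the fleet average is a sales-weighted harmonic mean of vehicle mileage and that the multiplier simply scales the number of units counted. The fleet mix, the mileage figures and the way the multiplier enters the calculation are all hypothetical simplifications, not the regulatory formula.

```python
# Illustrative sketch: effect of an electric-vehicle counting multiplier
# on a sales-weighted harmonic-mean fleet average.
# Fleet mix and mpg values are hypothetical, chosen only for illustration.

def fleet_average_mpg(fleet):
    """Harmonic-mean mpg for a fleet.

    fleet: list of (units_sold, mpg, credit_multiplier) tuples.
    Each vehicle type is counted as units_sold * credit_multiplier units.
    """
    counted_units = sum(units * mult for units, _, mult in fleet)
    gallons_per_mile = sum(units * mult / mpg for units, mpg, mult in fleet)
    return counted_units / gallons_per_mile


# Hypothetical fleet: 90 conventional cars at 40 mpg and 10 electric
# vehicles rated at 100 mpg-equivalent.
without_multiplier = [(90, 40.0, 1.0), (10, 100.0, 1.0)]
with_multiplier = [(90, 40.0, 1.0), (10, 100.0, 2.0)]  # each EV counts twice

actual = fleet_average_mpg(without_multiplier)
reported = fleet_average_mpg(with_multiplier)

print(f"Fleet average, each vehicle counted once: {actual:.1f} mpg")
print(f"Fleet average, EVs counted twice:         {reported:.1f} mpg")
```

In this hypothetical fleet the reported average rises by a little over 2 mpg even though no vehicle became more efficient, which is the mechanism behind the gap between the headline standard and the mileage the fleet is estimated to achieve.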

This vehicle counting scheme may help the auto companies by not forcing them to take even more weight from cars, although it is impossible to see how that will happen given the magnitude of the mandated fuel efficiency improvement. What we know about the shift to building smaller, lighter vehicles, despite increased safety measures, is that auto-related deaths increase. At a National Highway Traffic Safety Administration (NHTSA) seminar on improving the safety performance of vehicles, the slide in Exhibit 14 was presented. It shows that the average number of deaths in small cars was 118 compared to 79 for midsized cars, minivans and SUVs, a nearly 50% greater mortality rate.

Exhibit 14. Honda Says Small Cars Can Be Safer

Source: Honda at MSS Conference

The role of small cars in deaths is well documented by studies since CAFE standards were introduced. J.R. Dunn wrote in The American Thinker, “Fuel-standard lethality is as obvious as a smashed windshield.” He pointed to a late 1990s study by the Brookings Institution finding that a 500-pound weight reduction by the average car increased annual highway fatalities by 2,200-3,900. About the same time, USA Today found, based on its study of federal government Fatality Analysis Reporting System data, that 7,700 deaths occurred for every mile per gallon gained in fuel economy standards. In fact, smaller cars accounted for up to 12,144 deaths in 1997, 37% of all vehicle fatalities for that year. If we use the Brookings Institution estimates of increased traffic fatalities (2,200-3,900 per year), then over the balance of the century (88 years), there will be an incremental 193,600 to 343,200 deaths due to steps being taken to meet the increased CAFE standards.

A 2003 NHTSA study estimated that every 100 pounds of weight reduction for vehicles weighing more than 3,000 pounds increases the accident death rate by slightly less than 5%, and that the rate increases as vehicles become lighter. Two years earlier a National Academy of Sciences study estimated that CAFE standards at that time were responsible for as many as 2,600 additional highway deaths in a single year.

In 2007, the Insurance Institute for Highway Safety concluded in a study that “None of the 15 vehicles with the lowest driver death rates is a small model. In contrast, 11 of the 16 vehicles with the highest death rates are mini or small models.” A presentation at the 2011 NHTSA safety seminar contained the slide in Exhibit 15. It shows that for a minor vehicle weight reduction, the fatality rate goes up when the car is a small one, while for the heaviest vehicles it actually has gone down.

Exhibit 15. Smaller, Lighter Cars Are Less Safe

Source: Lund at MSS Conference

At the same time that independent studies are documenting that lighter and smaller vehicles have higher fatality rates, auto manufacturers are being pushed to make smaller and lighter vehicles to meet the higher mandated CAFE standards. Lighter and smaller vehicles are the easiest solution for car builders. The auto industry’s weight reduction strategies are also contributing to higher vehicle costs. The National Automobile Dealers Association calculated in April that a Chevrolet Aveo, the most affordable vehicle it studied, would increase in price from $12,700 to $15,700 by 2025 in 2010 dollars due to steps taken to meet the increased fuel-efficiency mandate. The Association estimates this $3,000 cost increase would prevent 6.8 million drivers from qualifying for car loans.

The bottom line is that increased CAFE standards will make small cars more expensive, which will prevent a segment of the population from being able to buy a car. At the same time, as cars get smaller and lighter in order to meet the higher CAFE standards, there will be an increase in traffic deaths. Over the balance of the century, these increased traffic deaths are projected to be 1.3 to 2.3 times the summer heat-related deaths estimated by the NRDC. Where is their outrage over CAFE standards?
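The comparison between CAFE-related traffic deaths and the NRDC heat-death total can be reproduced as follows. The sketch assumes the Brookings annual range of 2,200 to 3,900 added fatalities holds constant for 88 years (2012 through 2099). That horizon is an inference: it reproduces the 193,600 lower bound exactly and gives 343,200 for the upper bound.

```python
# Reproduce the cumulative CAFE-related fatality range and compare it
# with the NRDC heat-death projection for 40 cities.

YEARS_REMAINING = 88               # assumption: 2012 through 2099
ANNUAL_DEATHS_LOW = 2200           # Brookings estimate, low end
ANNUAL_DEATHS_HIGH = 3900          # Brookings estimate, high end
NRDC_HEAT_DEATHS = 150322          # NRDC century total for 40 cities

cumulative_low = ANNUAL_DEATHS_LOW * YEARS_REMAINING
cumulative_high = ANNUAL_DEATHS_HIGH * YEARS_REMAINING

ratio_low = cumulative_low / NRDC_HEAT_DEATHS
ratio_high = cumulative_high / NRDC_HEAT_DEATHS

print(f"Cumulative traffic deaths: {cumulative_low:,} to {cumulative_high:,}")
print(f"Multiple of NRDC heat deaths: {ratio_low:.1f}x to {ratio_high:.1f}x")
```

The calculation treats the annual fatality estimate as constant over the century, so it ignores changes in fleet size, miles driven and vehicle safety technology, just as the NRDC study ignores population growth and acclimatization.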

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.