- Are The Shale Resource Estimates Realistic Or Fantasy?

- U.S. GDP Up, But Economic Recovery Continues To Struggle

- BP Spill Study Says BOP Needs Further Work

- China Planning To Go Green On Energy?

- Can Katrina Offer Any Guide For Japan’s Energy Outlook?

- Are Houston Drivers Spending Less Time Stuck In Traffic?

- Your Tax Dollars At Work In World Of Green Energy

- Wind Energy Continues To Find More Problems

Musings From the Oil Patch

March 29, 2011

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Are The Shale Resource Estimates Realistic Or Fantasy?

Maybe you’ve seen the advertisements from various financial newsletters touting the investment potential of companies developing the Bakken oil shale formation, which spreads across North Dakota and Montana and into the neighboring Canadian provinces of Saskatchewan and Manitoba. Claims that seemed outrageous several years ago, that the Bakken contains eight times as much oil as Saudi Arabia or 21 times the reserves held by Kuwait, seem less fantastic today.

These newsletters began trumpeting the Bakken’s financial impact on the exploration companies active in the formation after the U.S. Geological Survey (USGS) revised its estimate of the basin’s potential in 2008. The new assessment suggested the formation might contain 3.0-4.3 billion barrels of undiscovered, technically recoverable oil, a 25-fold increase over the agency’s 1995 estimate of 151 million barrels. The USGS stated that the Bakken is larger than any other current USGS oil assessment in the Lower 48 and called it the largest “continuous” oil accumulation it had ever assessed. “Continuous” means the oil is spread rather evenly across the basin instead of being located in discrete deposits. The next largest continuous oil deposit is the Austin Chalk trend, stretching from Louisiana to central Texas, which is estimated to contain 1.0 billion barrels. Jeff Hume, president and chief operating officer of Continental Resources (CLR-NYSE), was quoted in a recent article suggesting that the Bakken formation could hold as much as 24 billion barrels of reserves.

Exhibit 1. Bakken Is U.S. Largest Continuous Oil Field

Source: EIA

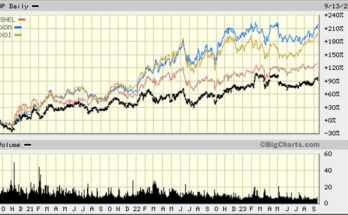

The success domestic exploration and production companies have had in the Bakken is partly responsible for U.S. oil production growing over the past two years to its highest level in over a decade.

Exhibit 2. Domestic Oil Production Up Last Two Years

Source: EIA

North Dakota’s oil production peaked last November at 356,697 barrels per day from 5,099 producing wells. Over the past three years, the state’s monthly oil production has increased more than 2.5-fold. Production slipped in January to 342,088 barrels per day from 5,061 wells. While we can’t be certain, we suspect the region’s traditionally harsh winter hampers production efforts, especially since the infrastructure to move much of this additional oil has not kept pace with rising output. There have been media stories about the dramatic increase in the number of railroad tank cars hauling Bakken production to refineries. We are also aware of some of the oil being trucked across the U.S.-Canadian border to shipping facilities there. All of that will change as new pipelines are built.

Exhibit 3. North Dakota’s Oil Production Soaring

Source: North Dakota Natural Resource Department

More important is the growth in drilling, which will be critical for boosting the state’s oil and gas production in the future. The most recent Baker Hughes rig count showed 153 working rigs in North Dakota, more than double the count merely 14 months earlier. According to Mark Williams, senior vice president of exploration and development for Whiting Petroleum (WLL-NYSE), “All current and planned projects could take us up to 1.1 million barrels a day.”

The most important factor in the growth of Bakken oil production has been the application of drilling and well completion techniques learned in the gas shales: horizontal wells with longer laterals and a greater number of hydraulic fracturing treatments. Estimates are that Bakken wells drilled only a few years ago might produce 100,000-200,000 barrels over their lifetimes, while wells drilled with these newer techniques are getting 400,000-700,000 barrels out of the deposits. Even though the newer wells cost more to drill and complete than the older ones, the economics of the greater production, especially at today’s $100 per barrel oil price, are extremely attractive.
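A rough Python sketch of the revenue arithmetic behind that claim. It assumes a flat $100 per barrel over each well’s entire life and ignores royalties, taxes, operating costs, production timing and discounting, so it shows gross revenue only; the `gross_revenue` name is our own illustrative choice.

```python
OIL_PRICE = 100.0  # $/bbl; assumption: held flat for the well's whole life


def gross_revenue(lifetime_bbl: float, price: float = OIL_PRICE) -> float:
    """Lifetime barrels times a flat price, in dollars (no costs, no discounting)."""
    return lifetime_bbl * price


# Lifetime recovery ranges per well cited in the text, in barrels.
ranges = {
    "older completions": (100_000, 200_000),
    "newer completions": (400_000, 700_000),
}

for label, (low, high) in ranges.items():
    print(f"{label}: ${gross_revenue(low) / 1e6:.0f}M to ${gross_revenue(high) / 1e6:.0f}M")
```

On these assumptions an older well grosses $10-20 million and a newer one $40-70 million, which is why the higher well costs are easily absorbed.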

But back to the question we posed in the headline. Just how realistic are reserve estimates? As we have commented many times in our career, the biggest challenge in valuing oil and gas companies is that virtually all of their assets lie underground. Because of that, we will never know exactly how much oil and gas a company owns. Moreover, the amount of oil and gas a company can recover from the reservoir has a huge impact on its potential value. We were intrigued by a presentation at the Ohio Oil & Gas Association Winter Meeting by Larry Wickstrom, chief of the Ohio Geological Survey in the Ohio Department of Natural Resources. Mr. Wickstrom’s presentation covered the Marcellus and Utica shale plays in Ohio, the latest hot spot in the business. Ohio’s Marcellus and Utica shales are the westernmost part of a regional play that extends from Ohio and West Virginia through Pennsylvania and New York State and on into Canada.

There is much speculation about the potential reserves in Ohio’s Utica shale, and that speculation is behind the land rush underway in the state recently. Even though only a few Marcellus wells and virtually no Utica wells have been drilled in the state, the formations’ potential is believed to be significant. Mr. Wickstrom illustrated this potential with the following comparison. A typical conventional gas well in the Appalachian Basin produces 100,000-500,000 cubic feet per day and 200-500 million cubic feet (MMCF) over its producing life. In contrast, a Marcellus well (and possibly a Utica well) produces 2-10 MMCF per day and about four billion cubic feet over its lifetime. Multiplying the lifetime production of each well by $4 per thousand cubic feet, the current natural gas price, shows an eight-fold increase in value for a Marcellus well over a conventional gas well ($16 million versus $2 million in gross revenues, using the top of the conventional range). Of course, this simple calculation ignores the greater cost of drilling a Marcellus well (possibly $3-$5 million) and other costs, too, but the magnitude of the increased economic returns from shale wells underlies the industry’s excitement and aggressive behavior.
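The gross revenue comparison can be reproduced in a few lines of Python. This is a minimal sketch assuming a flat $4 per thousand cubic feet (Mcf) over each well’s life; the $2 million conventional figure corresponds to the 500 MMCF top of the conventional range, and nothing here accounts for drilling costs, royalties or the timing of production. The function and constant names are illustrative.

```python
GAS_PRICE = 4.0      # $ per Mcf; assumption: flat over the well's life
MCF_PER_MMCF = 1_000  # one million cubic feet = 1,000 thousand cubic feet


def lifetime_revenue(lifetime_mmcf: float, price_per_mcf: float = GAS_PRICE) -> float:
    """Gross revenue in dollars: convert MMCF to Mcf, then multiply by price."""
    return lifetime_mmcf * MCF_PER_MMCF * price_per_mcf


conventional = lifetime_revenue(500)  # top of the 200-500 MMCF conventional range
marcellus = lifetime_revenue(4_000)   # about four billion cubic feet = 4,000 MMCF

print(f"conventional ${conventional:,.0f}, Marcellus ${marcellus:,.0f}, "
      f"ratio {marcellus / conventional:.0f}x")
```

At the bottom of the conventional range (200 MMCF) the same arithmetic gives only $800,000, so the eight-fold ratio is, if anything, conservative.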

Mr. Wickstrom used his presentation not only to educate his audience about the geology and the challenges of drilling and developing the Utica shale, but also to help people understand how speculative much of the chatter about the formation’s potential is, given the lack of production data. He began by explaining the formula for estimating a field’s resource potential, which was developed by two geologists in 1989: Qt = V x D x TOC x C x %R. The volume of hydrocarbons contained in the field is a function of the volume of rock, the density of that rock, the rock’s total organic carbon content, the percent of that carbon converted into hydrocarbons, and the percent of reservoir space containing the hydrocarbons. While the formula sounds straightforward, it requires estimating five important variables. Drilling core samples and analyzing the formation’s rock composition helps in making these estimates, and correlating the rocks from this reservoir with those from similar basins with established production histories further improves their accuracy. In the end, however, the estimates can be subject to wide margins of error. Just how wide? Maybe that depends on which side of the debate about the economic performance of gas shales one is on. At present, it is much like that old beer commercial: “less filling” versus “tastes great,” both positive qualities but impossible to quantify.
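Because the formula is a simple product of five estimated inputs, an error in any one input passes straight through to Qt, and errors in several inputs compound. The Python sketch below illustrates this with a purely hypothetical case, not Mr. Wickstrom’s data: every input is set to an arbitrary base of 1.0 and then allowed to be 20% too low or 20% too high at the same time.

```python
def resource_estimate(v, d, toc, c, r):
    """Qt = V x D x TOC x C x %R; units follow whatever the inputs use."""
    return v * d * toc * c * r


# Hypothetical inputs, not from the presentation: a base of 1.0 for every
# variable, then all five variables 20% low or 20% high simultaneously.
base = resource_estimate(1.0, 1.0, 1.0, 1.0, 1.0)
low_inputs = [0.8] * 5
high_inputs = [1.2] * 5
low = resource_estimate(*low_inputs)
high = resource_estimate(*high_inputs)

print(f"low {low / base:.3f}x, high {high / base:.3f}x, spread {high / low:.2f}x")
```

Running it prints `low 0.328x, high 2.488x, spread 7.59x`: a modest 20% uncertainty on each of the five inputs leaves the high case roughly 7.6 times the low case.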

Exhibit 4. How To Forecast Basin Reserves

Source: Ohio Dept. of Natural Resources

To further help the audience understand the challenge of estimating the potential size of the Utica shale formation in Ohio, Mr. Wickstrom presented data from a 1989 study of the Utica/Point Pleasant formation across the entire Appalachian Basin. That study concluded the formation had sourced 13.26 billion barrels of oil that migrated into conventional reservoirs in the basin. Remember, the shale formation is the source of the oil and gas that migrate into a basin’s various conventional traps.

Exhibit 5. Utica Shale Contribution To Appalachian Reserves

Source: Ohio Dept. of Natural Resources

At this point, Mr. Wickstrom suggested the audience have some fun and make up numbers to manufacture an estimate of the Utica’s potential. He started with the variables used in the 1989 study of the Utica formation for the entire Appalachian Basin. Based on more recent knowledge, he suggested, the total organic carbon content could be increased to 2.50% from the prior estimate of 1.34%. Then came the fun part. What should be the percentage of reservoir space containing hydrocarbons that can be recovered? Mr. Wickstrom suggested starting with the 1.2% figure used in various studies of the Bakken formation. Plugging that number into the equation yields an estimate of 1.96 billion barrels of oil or its equivalent for the Utica formation.

Exhibit 6. Widely Different Forecasts From Minor Changes

Source: Ohio Dept. of Natural Resources

What happens, however, if you change the recovery factor? Mr. Wickstrom boosted that number to 5%, and suddenly the Utica may contain 8.2 billion barrels of oil or its equivalent. That’s more than a fourfold increase. What could this mean to our crude oil and natural gas reserves?
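Since the formula is a pure product, changing only the recovery term scales the result in direct proportion, and no other input needs to be known. A minimal Python check of the figures above; the `rescale` helper is our own illustrative name.

```python
def rescale(estimate_bboe: float, old_r: float, new_r: float) -> float:
    """Rescale a Qt estimate when only the recovery term %R changes.

    Qt is a straight product, so Qt_new = Qt_old * (new_r / old_r).
    """
    return estimate_bboe * (new_r / old_r)


low_case = 1.96  # billion bbl oil equivalent at the Bakken-style 1.2% recovery
high_case = rescale(low_case, old_r=0.012, new_r=0.05)
print(round(high_case, 2), round(high_case / low_case, 2))
```

It prints `8.17 4.17`, consistent with the rounded 8.2 billion barrels and the “more than a fourfold increase” from the presentation.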

Exhibit 7. The Fantasy World Of Projections

Source: Ohio Dept. of Natural Resources

Assuming the hydrocarbon volume is split one-third natural gas and two-thirds crude oil, the basin contains either 3.75 TCF of gas and 1.31 billion barrels of oil on the low side, or 15.7 TCF of gas and 5.5 billion barrels of oil on the high side. Given these oil estimates, the Utica formation in Ohio contains either less than half the oil in the Bakken formation or about one and a half times as much! For natural gas, the low case represents two-tenths of one percent of the nation’s potential conventional gas reserves as estimated by the Potential Gas Committee in its 2008 study, while the high case is a little over four times that share (15.7 TCF versus 3.75 TCF), quite a difference in magnitude.
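The split can be sketched in Python. The one-third/two-thirds split comes from the text; the gas conversion factor of about 5.74 thousand cubic feet (Mcf) per barrel of oil equivalent is our inference from the article’s own numbers (3.75 TCF from roughly 0.65 billion barrels equivalent of gas), not a figure stated in the source, so treat it as an assumption.

```python
from typing import Tuple

# Assumption (inferred from the article's figures, not stated in the source):
# roughly 5.74 Mcf of gas per barrel of oil equivalent (BOE).
MCF_PER_BOE = 5.74


def split(total_bboe: float) -> Tuple[float, float]:
    """Split a total (billion BOE) 2/3 oil, 1/3 gas.

    Returns (oil in billion barrels, gas in trillion cubic feet).
    """
    oil_bbbl = total_bboe * 2 / 3
    gas_bboe = total_bboe / 3
    # billion BOE x Mcf per BOE = billion Mcf = Tcf
    gas_tcf = gas_bboe * MCF_PER_BOE
    return oil_bbbl, gas_tcf


for case, total in (("low (1.2% recovery)", 1.96), ("high (5% recovery)", 8.2)):
    oil, gas = split(total)
    print(f"{case}: {oil:.2f} billion bbl oil, {gas:.2f} Tcf gas")
```

With those inputs the sketch gives about 1.31 billion barrels and 3.75 TCF for the low case and about 5.47 billion barrels and 15.69 TCF for the high case, which round to the figures above.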

Once you go through this analysis, it becomes easy to understand the hype surrounding the Ohio Utica play. The optimistic estimates, based on reasonable assumptions about the geology and recoverability of the hydrocarbons, will certainly get the attention of industry players and especially Wall Street, which loves to create overly optimistic scenarios. But as Mr. Wickstrom cautioned, until wells are drilled and a production record is established, these estimates are all fantasy.

U.S. GDP Up, But Economic Recovery Continues To Struggle

On Friday, the Commerce Department released its final estimate of economic activity in the fourth quarter of 2010. The department reported that the U.S. economy grew at a 3.1% annual rate, up from the prior estimate of 2.8%. The advance estimate of fourth-quarter gross domestic product (GDP), released in late January, had suggested the economy expanded by 3.2%. So while the final revision was upward, it did not reach the preliminary estimate that had excited economists, politicians and Wall Streeters. The problem now is that the headwinds facing the economy are growing.

The latest Thomson Reuters/University of Michigan consumer sentiment index came in at 67.5, down from 77.5 in February and the lowest reading since November 2009. Consumers are reacting to rising gasoline and food prices, the two key items in the consumer price index that government economists and central bankers dismiss as not indicative of inflationary forces in the economy. Several weeks ago, William Dudley, president of the Federal Reserve Bank of New York, was speaking to a group of citizens in the Queens section of the city and trying to explain how the Fed looks at inflation and the role of core inflation, which excludes food and energy prices. He was queried by an audience member who asked, “When was the last time, sir, that you went grocery shopping?” Groping for an example of the principle he was trying to explain, Mr. Dudley said, “Today you can buy an iPad 2 that costs the same as an iPad 1 that is twice as powerful,” referring to Apple Inc.’s (AAPL-Nasdaq) latest handheld tablet computer. He went on to say, “You have to look at the prices of all things.” There were reportedly widespread guffaws and murmurs in the audience, with one member calling Mr. Dudley’s comment “tone deaf,” while another declared, “I can’t eat an iPad.”

Exhibit 8. U.S. GDP 2010Q4 Growth Revised Up

Source: calculatedriskblog.com

While the upwardly revised fourth-quarter GDP estimate is good news, the latest home sales figures, along with the lower consumer sentiment index, are extremely troubling. Both existing and new home sales fell last month. February new home sales fell 16.9% from January and were down 28% from a year ago, coming in at an annual rate of 250,000 units, the lowest level since the government began keeping records nearly 50 years ago. The inventory of unsold new homes swelled to 8.9 months of supply, up from 7.4 months in January. At the same time, the median new home price fell 13.1% to $202,100, the lowest level since December 2003. New home prices are being pressured by the number of existing homes on the market. These conditions have held home builders to only 40% of the starts they would make in a normal year. Existing home sales declined 9.6% last month, while prices fell 5.2% and the inventory of unsold homes rose.

Exhibit 9. Home Sales Down

Source: Financial Times

We have contended for a long time that until and unless we have healthy automobile and housing sectors, the U.S. economic recovery will remain weak and continue to put downward pressure on energy demand. The latest domestic economic figures, coupled with the automobile industry problems due to the Japanese earthquake and the continued civil unrest in North Africa and the Middle East putting upward pressure on crude oil and gasoline pump prices, imply we can look forward to further weak energy markets.

BP Spill Study Says BOP Needs Further Work

Last week, the results were released from the forensic study of the blowout preventer (BOP) used on BP plc’s (BP-NYSE) Macondo well in the Gulf of Mexico, which blew out last year, causing an explosion, a fire and the eventual sinking of the Deepwater Horizon semi-submersible drilling rig, as well as this nation’s greatest offshore environmental accident. Det Norske Veritas (DNV), the Norwegian engineering and risk-management firm hired by the U.S. Department of the Interior to determine the BOP’s role in last year’s disaster, examined and tested the unit after it was recovered from the ocean floor and prepared a 200-page report with a 351-page appendix. The inspectors concluded that the shear rams in the BOP were unable to fully sever the drillpipe, as the unit is designed to do, because the pipe had buckled during the well’s initial blowout and was pushed off center, which prevented complete closure. DNV found that the shear rams had closed to within 1.4 inches. This gap, albeit small, provided enough room for an estimated 4.9 million barrels of oil to escape.

While the report details the failure, its conclusions confirm the early belief of many drilling engineers consulted about the disaster. The inability of the shear rams to cut the pipe because it was off center highlights potential problems for companies drilling over-pressured wells. The pipe buckled because high-pressure fluids roaring up the drillpipe and annulus lifted the pipe until it hit an obstacle. At that point, the pipe’s momentum, combined with the pressure and heat of the flow, caused it to bend.

Exhibit 10. Why BP’s Macondo Well Spilled Oil

Source: The Wall Street Journal

The report quickly generated further criticism of the offshore oil and gas industry and its safety procedures for drilling deepwater wells, which tend to exhibit high formation pressures. All segments of the oil and oilfield service industry involved in drilling these wells are working on ways to improve the performance of the drilling and safety equipment, especially the BOP. Unanswered questions remain about what actually caused the well to blow out, and more information and hypotheses will be presented down the road, but the DNV report was the last major report on the equipment involved in the accident. Most observers believe the Deepwater Horizon disaster resulted from a confluence of questionable decisions and actions by all parties involved that created an unbalanced pressure differential between the downhole formation and the equipment designed to hold back that pressure.

Criticism of the DNV report came immediately from political opponents of offshore drilling, including Rep. Edward Markey (D., Mass.), who said, “This report calls into question whether oil-industry claims about the effectiveness of blowout preventers are just a bunch of hot air.” Elmer Danenberger III, the man responsible for overseeing U.S. offshore drilling rules until he retired in 2009, was quoted by The Wall Street Journal as saying of the BOP, “They have to rethink the whole design.” The DNV report concluded that the BOP failure was due to a design flaw and not to the operation, abuse or maintenance of the BOP by the companies involved in drilling the Macondo well.

The BOP in question was manufactured by Cameron International (CAM-NYSE), which has been the drilling industry’s leading provider of such units for over 90 years. The BOP has been the industry’s last and best defense against well pressures, which often resulted from encountering pockets of higher-pressured natural gas at shallower depths while drilling. In fact, the BOP that became Cameron’s signature product was developed in response to several high-pressure well workover accidents in 1922. The co-founder and majority owner of what was then Cameron Iron Works, James Abercrombie, was also a successful contract driller with a history of putting out well fires and blowouts, long before Red Adair made oil well firefighting glamorous.

In late 1921, Mr. Abercrombie secured a contract to work over a troublesome well in the Hull field in Liberty County, northeast of Houston, a field with many small pockets of high-pressured gas. In the course of working over wells in this field, Mr. Abercrombie’s company had lost its newest and best rig and had encountered three blowouts. While each of the blowouts resulted in lost equipment, fortunately no one was hurt. The episodes, however, focused Mr. Abercrombie on designing equipment that could prevent wells from blowing out. Originally, he had used an elementary blowout preventer called a “boll weevil.” It was essentially a piece of heavy-gauge pipe surrounded by a thick lead casing, with a stopcock on top. If a well was suspected of being about to blow out, the unit was slipped over the well’s casing and the stopcock closed. The unit proved impractical as a containment device; its main use was to give drilling crews time to get away from the rig before the well blew.

Another preventer on the market was designed to improve on the “boll weevil,” and Mr. Abercrombie purchased one to use on his next well workover in the Hull field. Unfortunately, it too failed to prevent a blowout. Mr. Abercrombie then came up with the idea of a ram-type preventer, in which the faces of the rams close in on the drillpipe to shut off the pressure in the well. The next morning, he went to his co-founder and partner, Henry Cameron, and sketched out the concept in the sawdust and dirt of the machine shop’s floor. Using a casting produced by Howard Hughes’ nearby shop, Mr. Cameron machined the design. A patent application was filed on April 14, 1922, but patent number 1,569,247 was not issued until January 12, 1926.

Testing revealed that the unit leaked as pressure increased. Mr. Cameron designed a fix whereby the increasing pressure would force open a notch in the corner of the ram face, causing the ram to close tighter. Patent number 1,498,610 was issued for this modification to the original BOP design even before the original patent was granted. Once steel and cast iron parts were added to the BOP and the company could guarantee the unit would shut off 2,000 pounds per square inch of flowing pressure, orders started coming in, not only from domestic companies in Texas, Louisiana and California, but also for use in foreign locations such as Mexico and Venezuela. Cameron Iron Works was on its way to a glorious history that continues today. [Much of this history of Cameron comes from the book Mr. Jim, by Patrick J. Nicholson.]

We have high confidence that engineers in the drilling business will figure out how to improve the performance and safety of the drilling process, just as they have for the past 150+ years. Well control incidents have occurred throughout the history of the petroleum industry. The Deepwater Horizon was the latest and most devastating, both because of the loss of 11 lives and because of the environmental damage to the Gulf of Mexico from the oil spill. The evidence from the investigations of the disaster continues to show that the Macondo well blowout was an accident. All aspects of our daily lives, including energy, involve risks. We need to better understand those risks and their potential ramifications. Importantly, we need to keep a perspective on risk and our risk tolerance. We don’t stop driving after a car accident. We don’t stop flying after a plane accident. We shouldn’t stop drilling after a drilling accident.

China Planning To Go Green On Energy?

At the recent National People’s Congress in China, a new “five-year plan” for energy production showed that the government wants to focus on natural gas and renewables to meet the country’s growing needs. As the chart in Exhibit 11 shows, natural gas is projected to double its share from 4% to 8% between 2010 and 2015. At the same time, China wants renewables, which in this case include nuclear power, to increase their share from 8% to 10%. Oil’s share of the energy supply mix remains the same as in 2010. The growth in the natural gas and renewables shares will offset a decline in coal’s contribution. The world should applaud this plan, as it suggests a cleaner energy future. The problem is that coal remains the primary fuel for electricity.

Exhibit 11. China’s Future Is Gas And Renewables

Source: Agora Financial

The bulk of China’s electricity is generated from fossil fuels, primarily coal, which currently accounts for 80% of power generation feedstock. The EIA projects that coal’s share will decline to 74% by 2035. Natural gas, which represented about 5% of installed generating capacity and 2% of net generation in 2009, will offset some of that coal. Hydroelectric power is a significant component of the country’s power generating capacity, representing close to 16% in 2009. China is the world’s largest producer of hydroelectric power and plans to keep growing hydro’s share.

Wind is the second-largest component of renewable power in China and an energy source receiving substantial new investment. In 2010, wind had 16 gigawatts of installed generating capacity, double the prior year’s level. The problem is that China currently lacks the transmission infrastructure to connect much of this capacity, so a large part of it sits idle.

Exhibit 12. Coal Dominates Power Generation Sector

Source: EIA

Nuclear power, however, is a focus of the government’s green energy plan. With 11 operating reactors representing nine gigawatts of generating capacity, China plans to add over 70 gigawatts of new capacity by 2020. According to the EIA, China’s nuclear generation will increase to 598 billion kilowatt-hours by 2035, an 8.4% annual growth rate. That will raise nuclear’s share of total generating capacity from 2% in 2009 to 6% in 2035. While green energy, including nuclear power, is receiving substantial investment, the reality is that coal and other fossil fuels will remain the primary fuel sources for power generation in China for years to come, with attendant economic, geopolitical and climate impacts.
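To put the 8.4% rate in perspective, compound growth multiplies the starting value by (1 + r) raised to the number of years. The Python sketch below assumes 2009 as the base year (the text does not say which base year the EIA used), so the implied starting output it prints is only an illustration, not an EIA figure.

```python
def growth_multiple(rate: float, years: int) -> float:
    """Compound growth factor: (1 + rate) ** years."""
    return (1 + rate) ** years


RATE = 0.084          # 8.4% per year, the EIA growth rate cited in the text
YEARS = 2035 - 2009   # assumption: 2009 as the base year
TARGET_BKWH = 598     # billion kWh of nuclear generation projected for 2035

multiple = growth_multiple(RATE, YEARS)
implied_start = TARGET_BKWH / multiple  # illustrative only, not an EIA figure
print(f"{multiple:.1f}x over {YEARS} years; "
      f"implied base-year output ~{implied_start:.0f} billion kWh")
```

Under that assumption, 8.4% a year sustained for 26 years multiplies nuclear output roughly eightfold.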

Can Katrina Offer Any Guide For Japan’s Energy Outlook?

Energy markets have struggled to predict what the earthquake and subsequent tsunami mean for Japan’s energy demand. Events in Libya have pushed Japan’s travails off the front pages, yet the issues haven’t gone away: the loss of 10% of Japan’s nuclear power capacity and the destruction of homes and businesses, which will cut near-term energy demand. We don’t know how these issues will affect global energy markets.

Since the earthquake and tsunami, two significant developments for Japan’s economy and its energy sector have emerged. The first is the problem with the nuclear reactors at the Fukushima Daiichi power plant, which has both near- and long-term impacts on the country’s electricity production and its need for alternative energy supplies, besides the radioactive clean-up issues. The second is the impact on the output of the country’s manufacturing sector, especially automobiles, auto parts and electronics. While the latter directly affects how much energy these sectors need, the world is beginning to see that, because of “just-in-time” inventory scheduling, there will be a knock-on effect on production in these industries globally. In turn, that may create energy demand issues elsewhere in the world.

Of the two industries, autos and electronics, the auto sector will probably suffer the greater global impact from the damage to assembly plants and parts manufacturers. The electronics industry, while it will be affected by problems at certain component suppliers, probably has more alternative suppliers worldwide that can step up to offset the lost Japanese production. Japan produces 60% of the world’s supply of silicon wafers and accounts for 22% of the industry’s demand.

In the autos and auto parts sectors, the story may be different. IHS Automotive estimates that the disruptions to auto manufacturing will cause the industry to build about five million, or 7%, fewer cars than planned this year. The industry has already lost production of 320,000 cars. While the impact hit Japan first, it will begin hitting the global industry over the next three to four weeks. About 13% of the world’s automotive production is currently out of commission, and based on projected plant closures due to parts shortages, about one-third of the world’s manufacturing capacity ultimately will be shut down. If the Japanese plant shutdowns last eight weeks, the industry could lose upwards of 40% of its capacity. Even small items such as paint pigments are preventing global manufacturers from producing vehicles in certain colors. That is likely to affect sales of vehicles currently in production as well as future sales.

According to the Energy Information Administration (EIA), nuclear power accounted for 11% of Japan’s total energy consumption in 2008. Nuclear power plants produce 27% of the country’s electricity, so the loss of the three reactors at Fukushima Daiichi will hurt, although they were operating at only 75% of capacity at the time of the earthquake due to reduced power needs in the country. Estimates are that Japan has lost about 10% of its electric generating capacity as a result of the damaged plants and those shut down for safety reasons. At the same time, the amount of power Japan needs has dropped due to the loss of structures and manufacturing capacity. Power needs will change as the cleanup and rebuilding effort gets underway.

Many economists, attempting to forecast the impact of the Japanese earthquake and tsunami on both that country’s economic activity and the global economy, point to the limited impact the 7.3 magnitude earthquake in Kobe had on it in 1995. While the January 17, 1995,

Exhibit 13. Nuclear Power Accounts For 27% Of Electricity

Source: EIA

earthquake devastated Kobe, located some 210 miles southwest of Tokyo, and killed 6,400 people and caused $100 billion in damage, electric power was restored to the area within a week. There were 120,000 structures fully or partially collapsed and the port, the sixth largest container port in the world, was totally destroyed. Some 40% of the city’s industrial manufacturing capacity, including 60% of the non-leather shoe manufacturing and 50% of the sake brewing capacity, was destroyed. Japan’s industrial production fell by 5% between December 1994 and January 1995, but within a couple of quarters economic activity in the country had returned to normal.

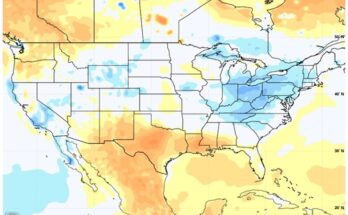

This time things may be different because of the loss of the nuclear power plants and the damage to key manufacturing facilities. Like many others, we decided to use one of the last great disasters as a guide to the economic and energy impact. Hurricane Katrina, which struck in late August 2005, destroyed much of New Orleans and South Louisiana and devastated the Gulf Coast regions of Mississippi and Alabama. To assess the impact, we looked at the amount of electric power consumed in these three states before and after the hurricane.

Our first look was at annual electricity sales in the three states over 1990-2009. As the graph in Exhibit 14 shows, electricity usage dipped slightly between 2004 and 2005, but the decline was almost imperceptible. We then looked at monthly electricity sales for each state during 2005. Exhibit 15 shows a decline between August 2005 and October 2005 in all three states, which makes it difficult to tell on the surface whether the drop was due to the hurricane or simply to cooler weather ending the heavy summer air-conditioning load. Looking at just September and October, it is interesting that Mississippi showed no change in electricity consumed while both Louisiana and Alabama were down. Surprisingly, Alabama’s power load fell more than Louisiana’s, although Louisiana was more devastated. Because each of these states has other regions that suffered little from the hurricane, the impact on local power demand is difficult to discern.

Exhibit 14. Gulf States Show Long-term Electricity Growth

Source: EIA, PPHB

Exhibit 15. Katrina Reduced Gulf States’ Electricity

Source: EIA, PPHB

When we looked at the monthly electricity consumed in each state over the three-year period 2005-2006, all the states showed remarkably similar patterns, especially around September 2005. What stands out to us is that Louisiana's electricity volumes dropped more sharply between August and October 2005 than did those of its neighboring states, which were also hit by Hurricane Katrina.

Exhibit 16. 2005 Demand Fell Due To Katrina

Source: EIA, PPHB

Exhibit 17. Mississippi Electricity Flat After Katrina

Source: EIA, PPHB

Exhibit 18. Alabama’s Economy Offset Katrina’s Impact

Source: EIA, PPHB

While the economic and energy experience of the 1995 Kobe earthquake and Hurricane Katrina in 2005 suggests that Japan will recover quickly from this disaster, we suspect the impact will be greater and last longer. Global industries are only now, more than two weeks after the event, getting a feel for the supply chain disruptions it caused, so we suspect the energy impact will be different, and likely greater, than previously or even currently thought.

Are Houston Drivers Spending Less Time Stuck In Traffic? (Top)

The December 2010 report from the Texas Transportation Institute on traffic congestion shows that all urban areas in the country experienced increased congestion over the 27-year period from 1982 to 2009. The study measures the average annual hours each commuter wastes in congestion, grouped by the size of the urban area where the commuting takes place. Importantly, the study concluded that traffic congestion barely changed during the ten years from 1999 to 2009. In fact, for Large Urban Areas, those with more than one million but fewer than three million people, congestion actually declined between 1999 and 2009.

The latest U.S. Census Bureau figures put the City of Houston's 2010 population at 2,100,017. That should mean commuting Houstonians are spending slightly less time stuck in their cars than they did just before the turn of the century. Maybe we owe that reduction to the completion of major road rebuilding efforts such as the 610 West Loop, the Katy Freeway and the opening of the Westpark Tollway, to name a few of the high-profile projects? If so, Houstonians can take some solace in the fact that fewer road rebuilding projects are under way, and the census people say the city's population won't exceed three million until 2040, nearly 30 years from now. That bodes well for the future of commuting.

Exhibit 19. 1999-2009 Traffic Congestion Barely Increased

Source: Texas Transportation Institute

The problem for Houston drivers, however, arises if we measure their time stuck in traffic by the size of Harris County rather than the City of Houston. The Census Bureau estimates that the county had 3,951,682 people in 2010, putting it in the Very Large Urban Area category, where traffic congestion did increase between 1999 and 2009. What we have noticed recently when driving in Houston and Harris County is the growing number of out-of-state license plates. We know the area's population grew by about 20% over the past decade, but if the energy business continues to prosper, we wonder whether Houston will experience another mass in-migration similar to that of the late 1970s and early 1980s. For selfish reasons, we hope not.
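For readers who want to check the bucketing, the sketch below sorts the two 2010 Census figures quoted above into the report's size bands. It is a minimal illustration that uses only the two thresholds described in the text (more than one million and three million people). The function name `tti_size_category` is our own, and treating exactly three million as Very Large is our assumption, since the text does not say which side of the line that value falls on.

```python
def tti_size_category(population: int) -> str:
    """Map a population to the congestion-report size band described in the text.

    Assumption: exactly 3,000,000 counts as Very Large; bands below
    one million are not discussed in the article, so they are lumped together.
    """
    if population >= 3_000_000:
        return "Very Large"
    if population > 1_000_000:
        return "Large"
    return "Smaller (not discussed here)"


# 2010 Census Bureau figures quoted in the article
areas = {
    "City of Houston": 2_100_017,
    "Harris County": 3_951_682,
}

for name, pop in areas.items():
    print(f"{name}: {pop:,} people -> {tti_size_category(pop)}")
```

The same commuters land in different bands depending on whether the city or the county is used as the yardstick, which is the whole point of the comparison.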

Your Tax Dollars At Work In World Of Green Energy (Top)

Earlier this month the Department of Energy announced it had finalized a nearly $50 million loan to The Vehicle Production Group LLC. The loan will support development of the six-passenger MV-1, a purpose-built, wheelchair-accessible vehicle that will run on compressed natural gas (CNG). The vehicles are to be manufactured at a plant in Indiana. The Vehicle Production Group states that the MV-1 is the only purpose-built vehicle designed from the ground up for wheelchair accessibility and that it will meet or exceed the guidelines of the Americans with Disabilities Act.

Exhibit 20. MV-1 Concept Vehicle

Source: The Vehicle Production Group LLC

The Energy Department press release says that, at full capacity, the project will produce over 22,000 vehicles per year and create 900 direct and indirect jobs. Based on the $50 million loan, that equals over $55,500 per job. The release says 100 of those jobs will be in production at the plant, with the remaining jobs elsewhere, associated with parts suppliers, production and sales, and on that basis we calculate a much higher number. If we assume the 100 production jobs are full-time and the other 800 jobs are half-time, the 900 jobs amount to 500 full-time equivalents, and the cost to create each one is $100,000. If only 22,000 vehicles are going to be sold per year, it may even be generous to count those indirect positions as half-time, which would further inflate the cost per job created.
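The job arithmetic can be reproduced in a few lines. This is a minimal sketch assuming a round $50 million loan; the half-time weighting for the 800 indirect jobs is the hypothetical from the text, and the 25% case is our own added illustration. The constant and function names are illustrative, not taken from the press release.

```python
LOAN = 50_000_000          # "nearly $50 million", rounded up as in the text
TOTAL_JOBS = 900           # direct and indirect jobs cited in the release
PRODUCTION_JOBS = 100      # full-time jobs at the Indiana plant
OTHER_JOBS = TOTAL_JOBS - PRODUCTION_JOBS  # 800 supplier, production and sales jobs


def full_time_equivalents(other_fraction: float) -> float:
    """Production jobs count fully; other jobs count at an assumed fraction of full time."""
    return PRODUCTION_JOBS + OTHER_JOBS * other_fraction


def cost_per_job(loan: float, jobs: float) -> float:
    """Loan dollars divided by the number of (equivalent) jobs."""
    return loan / jobs


# Headline figure: every job counted as one full job
headline = cost_per_job(LOAN, TOTAL_JOBS)
print(f"Per job, headline count: ${headline:,.0f}")

# Hypothetical weightings for the indirect jobs; 0.5 is the case in the text
for fraction in (0.5, 0.25):
    fte = full_time_equivalents(fraction)
    print(f"Indirect jobs at {fraction:.0%} time: {fte:,.0f} FTEs, "
          f"${cost_per_job(LOAN, fte):,.0f} per job")
```

Lowering the fraction shows why the half-time weighting may be generous: the less time the indirect jobs represent, the higher the loan cost per full-time job.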

The press release says the vehicle has “an interior that accommodates up to six occupants with the optional jump seat, including one or two wheelchair passengers and the driver.” From the picture in Exhibit 20, it looks similar to many other light-duty vehicles we have seen that have been modified for wheelchair use. So what really sets the MV-1 apart is that it will be powered by CNG. But when you go to the company’s web site and check the vehicle’s specifications, it appears a gasoline-powered option will also be available. What then becomes interesting is how much smaller the cargo space is in the CNG version than in the gasoline one – 29.1 ft³ versus 36.4 ft³ – due to the need for the larger CNG fuel tank. That is a 20% difference or, if we assume two wheelchair passengers, about three and a half fewer cubic feet of space per passenger: 14.6 ft³ compared to 18.2 ft³. That does not strike us as an inconsequential difference.
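The cargo-space comparison can be recomputed from the two specification figures. A minimal sketch, assuming both figures are in cubic feet and that the space is split evenly between two wheelchair passengers, as the text does; the constant names are our own.

```python
CNG_CARGO = 29.1          # cubic feet, CNG version (from the specifications)
GAS_CARGO = 36.4          # cubic feet, gasoline version
WHEELCHAIR_PASSENGERS = 2  # assumption carried over from the text

# Shortfall of the CNG version relative to the gasoline version
shortfall_pct = (GAS_CARGO - CNG_CARGO) / GAS_CARGO * 100

# Even split of cargo space per wheelchair passenger
cng_per = CNG_CARGO / WHEELCHAIR_PASSENGERS
gas_per = GAS_CARGO / WHEELCHAIR_PASSENGERS

print(f"CNG version has {shortfall_pct:.0f}% less cargo space")
print(f"Per passenger: {cng_per:.1f} vs {gas_per:.1f} cubic feet "
      f"({gas_per - cng_per:.1f} cubic feet less)")
```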

Our last surprise about this loan came when we tried to figure out how it fit with the mission of the Energy Department’s Advanced Technology Vehicles Manufacturing Loan Program, described as supporting “the development of innovative, advanced technologies that create thousands of clean energy jobs while reducing the nation’s dependence on oil.” If all the MV-1s run on CNG, then yes, it will reduce our oil dependence, although we are not sure by how much. We’re not sure we understand what “innovative, advanced technologies” are used in the MV-1, as CNG is a well-established technology, although not widely adopted. Oh, I guess it has to do with the clean energy jobs, but this loan won’t meet the “thousands of clean energy jobs” threshold, either.

Just a couple of weeks later, it was announced that the Energy Department had rejected a $321 million loan application from V-Vehicle Co., a start-up founded by former Oracle Corp. (ORCL-Nasdaq) executive Frank Varasano and backed by $100 million from investors including Boone Pickens. The State of Louisiana was also helping the company with a loan to convert a plant in Monroe, Louisiana, to build the vehicle and employ 1,400 workers. Although the company had not disclosed its technology publicly, it did say after the loan rejection that the car would cost as much as 40% less than “comparably equipped” cars, would save the typical driver about 300 gallons of fuel a year and would be ready for production and sales by the end of next year.

Earlier in March the Energy Department announced that its BioEnergy Science Center had created a new fuel from woody plants that could be used in automobiles as a replacement for gasoline. The process for converting woody material directly into isobutanol uses a single genetically engineered super microbe rather than the several microbes needed to perform different functions in the production of ethanol. The super microbe is similar to ones used in cleaning up polluted sites. The process is more direct than the one used to produce ethanol, suggesting it will be cheaper.

According to Energy Secretary Steven Chu, “This is a perfect example of the promising opportunity we have to create a major new industry – one based on bio-material such as wheat and rice straw, corn stover, lumber wastes, and plants specifically developed for bio-fuel production that require far less fertilizer and other energy inputs. But we must continue with an aggressive research and development effort.” Compared to ethanol, higher alcohols such as isobutanol are better candidates for gasoline replacement because their energy density, octane value and Reid vapor pressure – a measure of volatility – are much closer to those of gasoline. Isobutanol may be usable directly in existing engines without blending, and it can also be blended with gasoline in any proportion. At least, those are the scientists’ claims, as no engine tests have been conducted.

It is interesting to note that in the technical discussion of the isobutanol production process, the comment was made that although corn stover and switchgrass were relatively abundant and cheap, the process worked best on corn and sugar cane, the same raw materials used to produce ethanol. One wonders whether we really have created a new and better mousetrap, or merely a new one. We’re glad to see our scientists searching for new fuels, but if this technology is better than ethanol, then who bears the cost of all those ethanol refineries constructed in response to the ethanol mandate? Oh, you and I, the American taxpayers!

Last, but not least, I’m sure you will be happy to learn that the Environmental Protection Agency (EPA) has recognized the District of Columbia, home to our federal government, as the leading EPA Green Power Community. Local residents are purchasing 756 million kilowatt-hours of green power annually – enough to meet 8% of the community’s total electricity use.
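The 8% figure also implies how much power the District uses in total. A rough sketch, assuming the 8% share is exact; it is likely rounded, so the results are approximate.

```python
GREEN_KWH = 756e6    # green power purchased annually, in kilowatt-hours
GREEN_SHARE = 0.08   # share of total electricity use it covers (assumed exact)

# Total use implied by the share, and the portion not covered by green purchases
total_kwh = GREEN_KWH / GREEN_SHARE
non_green_kwh = total_kwh - GREEN_KWH

print(f"Implied total use: {total_kwh / 1e9:.2f} billion kWh")
print(f"Not covered by green purchases: {non_green_kwh / 1e9:.2f} billion kWh")
```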

Wind Energy Continues To Find More Problems (Top)

Wind One, a 400-foot wind turbine owned by the town of Falmouth, Massachusetts, on the southwestern tip of Cape Cod, was installed in early 2010. Town residents welcomed the turbine because it would help keep taxes down while demonstrating their commitment to green energy. The wind turbine cost $5.1 million, of which $3 million came from grants, government funding and credits. The turbine generates 1.65 megawatts of electricity under optimum conditions. While some residents felt the turbine fit in with the rest of the town’s topography, others thought the white steel pillar with its whirling three-bladed rotor on top was less than pleasing to the eye.

The real problem was that once the turbine was switched on, it generated significant noise, which some described as being as loud as an old Soviet helicopter. One resident, Neil Anderson, an avid supporter of alternative energy who has owned and operated a passive solar company on Cape Cod for 25 years, told a Boston radio station, “It is dangerous. Headaches. Loss of sleep. And the ringing in my ears never goes away.” His conclusion was that the turbine was located too close to his home.

Mr. Anderson and other neighbors hired a lawyer, who negotiated an agreement with the town to shut down the turbine when wind speeds exceed 23 miles an hour. The problem is that this wind turbine was designed to perform optimally at about 30 miles an hour. So while the noise problem has been resolved, Falmouth now faces higher expenses. According to Gerald Potamis, the operator of the town’s wastewater facility, shutting off the turbine when wind speeds are high means the town will have to spend an additional $173,000 to purchase natural gas to run the plant.

The agreement highlights ongoing problems with onshore wind turbines – their noise level and their location relative to homeowners. As these problems become better known, they are sparking intense debate at town meetings and zoning committee sessions. They are also why many wind proponents believe the solution for wind energy is to locate turbines offshore, where the wind speed is more constant, there are no people and the visual issue disappears because the windmills sit over the horizon. That solution, however, ignores many of the problems of installing wind turbines in deep water, servicing them and laying cables to bring the power to the onshore grid. Offshore wind turbines also cost multiples of what onshore ones do, another major issue.

Exhibit 21. Wind Energy And Power Needs At Odds

Source: EIA

Environmentalists have faced another wind farm challenge on the other side of the country. In the 1980s, some 4,500 wind turbines were installed in the Altamont Pass Wind Resource Area, a 50,000-acre area just east of San Francisco. Since the wind farm began operating, there has been a noticeable increase in the number of dead birds in the area, sparking an outcry from nature activists. In 2008, a two-year, taxpayer-funded study was launched, which determined that 8,247 birds were killed by the turbines. In 2010, a settlement was reached among the Audubon Society, Californians for Renewable Energy and NextEra Energy (NEE-NYSE), the owner and operator of the wind farm. Nearly half the smaller turbines will now be replaced by newer, more bird-friendly models, a project expected to be completed by 2015. The cost is unknown. The company will also spend $2.5 million to restore a raptor habitat.

In each of these wind power projects, resolving the problems associated with the turbines has raised the cost of the electricity consumers pay. The solutions address problems long known to exist for wind energy that were either ignored or obfuscated by the projects’ proponents. These problems fall under the NIMBY – not in my backyard – syndrome.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.