- Is Unconventional Gas Wave Too Big To Overcome?

- Commodity View Clouded By Uncertain Global Economy

- Did Computer Hackers Crack The AGW Cabal?

- The Significance Of Warren Buffett’s Investment Bets

- Offshore Wind and Green Jobs Are Long-term Future

- Hurricane Season Ending With Little Oil & Gas Impact

- Steps In Never Ending Quest To Reduce Congestion

- Climate Support Waning As Copenhagen Meeting Nears

Musings From the Oil Patch

November 24, 2009

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Is Unconventional Gas Wave Too Big To Overcome?

Natural gas inventories are continuing to grow at what is normally the start of the gas withdrawal season as producers drill more wells and complete previously drilled wells, especially in the prolific gas-shale basins of this country. In addition, producers who had shut in gas production during the weak pricing period of the third quarter are now bringing back into production, or at least attempting to bring back, some of those previously shut-in wells. Until demand for natural gas improves, this scenario condemns gas prices to remain low.

Analysts have been wrestling with the data showing a lack of meaningful progress in cutting production following the collapse in gas-directed drilling during the past 12 months. One of the culprits seems to be the lack of data on the number of previously drilled but yet uncompleted wells in these gas basins that appear to be partly responsible for the continuing surge in gas production. The problem for analysts is how to get a handle on the possible number of these uncompleted wells and their future impact on gas production.

We have seen two analyses of the uncompleted well issue, neither of which left us with a feeling of confidence about their conclusions. One of the studies we understand has since been withdrawn due to the problem of data coming from two sources that may be counting wells differently. In the oil patch, that is almost par for the course and is what analysts are supposed to attempt to reconcile. The data challenge is that the American Petroleum Institute (API) counts the number of wells drilled by type – oil, gas and dry – for each quarter of the year. The Energy Information Administration (EIA) counts the number of completed wells by type – oil and gas – by quarter. As we see weekly when these two different organizations report the inventories of petroleum products in this country, the numbers differ, and in some cases dramatically, despite the fact that supposedly they are talking to the same reporting entities.

As one would expect, the two analytical studies came to dramatically different conclusions using the same data. The optimistic case (supporting higher gas prices) showed that the gap between the count of wells drilled and those completed has closed dramatically in recent quarters, suggesting that the industry is now completing wells almost as fast as it is drilling them. The negative case took the difference in wells drilled versus those completed and accumulated them to a number nearly twice the rate wells are being drilled currently. We show the results of these two analyses in the following exhibits.

Exhibit 1. Optimistic Case: Completions Matching Drilling

Source: API, EIA, PPHB

Exhibit 2. Pessimistic Case: Well Backlog Overwhelms Drilling

Source: API, EIA, PPHB
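To make the difference between the two readings concrete, here is a minimal sketch of both calculations applied to the same series. The quarterly counts are hypothetical placeholders, not API or EIA data, and the function names are ours. The optimistic view looks at the gap in each quarter; the pessimistic view adds those gaps up.

```python
from itertools import accumulate


def quarterly_gap(drilled, completed):
    """Wells drilled minus wells completed, quarter by quarter."""
    return [d - c for d, c in zip(drilled, completed)]


def cumulative_backlog(drilled, completed):
    """Running total of the quarterly gaps, i.e. the accumulated backlog."""
    return list(accumulate(quarterly_gap(drilled, completed)))


# Hypothetical quarterly well counts, for illustration only.
drilled = [8000, 8500, 7000, 4500, 2500]
completed = [6500, 7000, 6000, 4300, 2400]

gaps = quarterly_gap(drilled, completed)
backlog = cumulative_backlog(drilled, completed)

print("Quarterly gap:     ", gaps)
print("Cumulative backlog:", backlog)
print("Backlog / latest quarter drilling:", round(backlog[-1] / drilled[-1], 2))
```

With these made-up inputs the quarterly gap shrinks to almost nothing while the accumulated backlog ends up well above the latest quarter's drilling, which is how the same data can support both conclusions.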

The principle behind each analysis is correct. The challenge is how to combine the two in such a way as to get a more representative view of the potential backlog of uncompleted wells. But more important is trying to assess the outlook for the industry as they complete these previously drilled wells and bring them into production because that will be the bottom line, along with demand growth, in predicting the trajectory for natural gas prices. Almost regardless of what happens to gas demand, if production growth is more rapid than the market can handle, meaning that gas inventories fail to be drawn down significantly during the upcoming winter season, then the overhang of gas storage volumes will depress future gas prices. Weak gas prices (possibly undercut also by more coal-fired electricity supplies and increased cheap LNG supplies) will hurt the cash flows and profitability of gas producers. They might be forced to cut back their future drilling efforts or even get into such financial difficulties as to be forced into more drastic corporate actions such as mergers or even bankruptcy. This scenario, one not focused on much today, could be a disaster for investors and also for the U.S. as we might be subjected to violent gas price spikes in the future as adequate supplies are not developed in a timely manner.

In order to dig deeper into the mystery of uncompleted wells, it helps to understand that gas wells drilled are separated into two categories – vertical and non-vertical. The latter encompasses directionally-drilled wells and horizontally-drilled wells, the key technologies for helping to open up the prolific gas-shale basins. The breakdown between these two types of wells is shown in the next exhibit. Notice how vertical wells used to dominate the mix of wells drilled but are now being overwhelmed by non-vertical wells. The breakdown of well types is done by applying the percentage of drilling rigs drilling vertical and non-vertical wells as reported weekly by Baker Hughes (BHI-NYSE). Admittedly this percentage could be off some because the Baker Hughes data doesn’t show those rigs drilling exclusively for gas versus for oil, but the percentages are probably within a reasonable ballpark for analytical purposes.

Exhibit 3. Non-vertical Wells Dominate Gas Drilling Activity

Source: API, Baker Hughes, PPHB
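The split described above amounts to a simple proportional allocation. The sketch below assumes a single rig-share percentage per period; the well count and the 60% share are hypothetical inputs chosen for illustration, not Baker Hughes or API figures.

```python
def split_wells_by_rig_share(gas_wells_drilled, non_vertical_rig_share):
    """Allocate a gas well count between vertical and non-vertical wells.

    non_vertical_rig_share is the fraction of active rigs drilling
    directional or horizontal wells (0.0 to 1.0).
    """
    if not 0.0 <= non_vertical_rig_share <= 1.0:
        raise ValueError("rig share must be between 0 and 1")
    non_vertical = round(gas_wells_drilled * non_vertical_rig_share)
    vertical = gas_wells_drilled - non_vertical
    return vertical, non_vertical


# Hypothetical quarter: 4,000 gas wells drilled, 60% of rigs non-vertical.
vertical, non_vertical = split_wells_by_rig_share(4000, 0.60)
print(vertical, non_vertical)
```

Because the rig share covers oil and gas rigs together, the result is only as good as the assumption that the two groups have a similar mix.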

What we know about these two types of wells is that non-vertical wells, especially those located in the prime gas-shale basins, require substantially more pressure pumping equipment to complete than vertical wells. That is a function of the need for massive hydraulic fracturing pressure and multiple fracturing treatments in order to improve the rate of flow of these wells and to make them economic given their increased cost to drill and complete. That characteristic has to be accounted for in any analysis of uncompleted wells. Why? Simply because gas-shale wells require more pumping capacity and require greater set-up and teardown time, thus reducing the effective capacity of the domestic pressure pumping fleet.

Exhibit 4. Gas Well Completion Capacity Exceeds New Wells

Source: API, EIA, Baker Hughes, PPHB

We know two things about the pressure pumping industry in recent quarters. 1) It has been adding capacity to be able to meet the producers’ needs for massive stimulation jobs. 2) Pricing discounts for pressure pumping work have been extreme, eliminating profitability for the service company providers and forcing them to park equipment due to inadequate returns. So while industry capacity expanded at a pace greater than drilling in 2007 and 2008, it is now contracting due to poor pricing. That phenomenon is shown in our estimate of industry capacity in the above exhibit.

If we assume the pressure pumping companies can meet the needs for all the vertical completions (simple and less demanding wells) and then try to satisfy the non-vertical well count, we can begin to calculate the growth of uncompleted wells and how that backlog may be shrinking in recent quarters. If our capacity estimates are correct, then the third quarter uncompleted backlog of roughly 1,500 wells just happens to compare closely with the estimate for uncompleted wells suggested by Dave Lesar on Halliburton Company’s (HAL-NYSE) earnings conference call on October 16th. At that time he stated the company believed there were 1,300 to 1,500 uncompleted wells in North America representing 7,000 fracture stages. This estimate also fits with comments we have received from others involved in the pressure pumping sector that there are “thousands” of uncompleted wells.

Exhibit 5. Completions Outrunning New Gas Well Drilling

Source: API, EIA, Baker Hughes, PPHB
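The allocation logic behind the backlog estimate can be sketched roughly as follows. This is an illustration under our own assumptions rather than the exact model behind the exhibits: fleet capacity is measured in vertical-well equivalents, a non-vertical completion is assumed to use three times the capacity of a vertical one, and every quarterly input is hypothetical.

```python
def backlog_by_quarter(vertical, non_vertical, capacity, non_vertical_cost=3):
    """Track uncompleted non-vertical wells at the end of each quarter.

    Vertical wells are served first. Whatever capacity is left over goes to
    non-vertical wells, each consuming `non_vertical_cost` capacity units
    (a hypothetical weighting). Wells not completed carry into next quarter.
    """
    backlog = 0
    history = []
    for v, nv, cap in zip(vertical, non_vertical, capacity):
        remaining = max(0, cap - v)                 # capacity left after verticals
        completable = remaining // non_vertical_cost
        pending = backlog + nv                      # carried-over plus new wells
        completed = min(pending, completable)
        backlog = pending - completed
        history.append(backlog)
    return history


# Hypothetical quarterly inputs, for illustration only.
vertical = [1600, 1500, 1400]
non_vertical = [2400, 2300, 2200]
capacity = [8000, 8500, 9500]   # vertical-well equivalents per quarter

history = backlog_by_quarter(vertical, non_vertical, capacity)
print(history)

# Fracture stages per well implied by the Halliburton comments cited above.
stages = 7000
print(round(stages / 1500, 1), "to", round(stages / 1300, 1), "stages per well")
```

The last two lines use only the figures quoted from the conference call: 7,000 stages spread over 1,300 to 1,500 wells works out to roughly five stages per well.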

With a reasonable handle on the backlog of uncompleted wells, the question then becomes what might the future hold. If we assume that gas wells drilled and completed each grow by 10% in the fourth quarter of 2009 and then by 12% in 2010’s first quarter and by 15% in each quarter during the remainder of 2010, we have a picture of a rising well count as depicted in the nearby exhibit.

Exhibit 6. Future Demand Drives Gas Well Drilling Activity

Source: API, EIA, Baker Hughes, PPHB
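The growth assumptions compound quickly. The sketch below applies them to an index set at 100 for the third quarter of 2009, on the assumption that each percentage is sequential quarter-over-quarter growth; the index base is ours and stands in for the actual well count.

```python
# Quarter-over-quarter growth assumptions taken from the text.
GROWTH_SCHEDULE = [
    ("2009Q4", 0.10),
    ("2010Q1", 0.12),
    ("2010Q2", 0.15),
    ("2010Q3", 0.15),
    ("2010Q4", 0.15),
]


def project(base_level, schedule):
    """Compound a starting level through a list of (quarter, growth) pairs."""
    level = base_level
    path = []
    for quarter, growth in schedule:
        level *= 1 + growth
        path.append((quarter, level))
    return path


path = project(100.0, GROWTH_SCHEDULE)  # 2009Q3 indexed to 100 (our assumption)
for quarter, level in path:
    print(quarter, round(level, 1))
```

Read this way, the assumptions put the well count at the end of 2010 about 87% above its third quarter 2009 level.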

If the gas producers step up their completion activity and pay higher prices for fracturing services, then the pressure pumping fleet capacity should rise. But drilling probably still overwhelms the ability of the service industry to restore capacity as quickly as wells are drilled. Therefore, even with better pricing for fracturing services, we can envision a scenario in which there is once again a greater backlog of uncompleted wells by the end of 2010. That backlog could represent a headache for gas producers.

Exhibit 7. Uncompleted Gas Wells Could Be Problem In 2010

Source: API, EIA, Baker Hughes, PPHB

For natural gas producers the uncompleted well count represents a serious challenge. As these wells are completed the initial gas flows are huge, which is likely to overwhelm the modest demand growth being experienced presently. Secondly, there has been an upturn in the number of gas-rigs drilling and many gas-shale producers are planning to step up their capital spending and drilling efforts meaning even more gas coming to the market in 2010. If producers need to grow their gas production in order to continue to curry favor from analysts and investors on Wall Street, then they will have to pay more for their pressure pumping services, which will encourage the fleet capacity to expand allowing more wells to be fractured and brought into production in the future. All of that suggests weaker prices at least during the first half of 2010. What the back half of 2010 holds for gas prices will likely depend upon gas demand and Wall Street’s willingness to continue shoveling money to these gas-shale producers. If gas demand falls again in 2010 as the EIA forecasts, then one has to wonder whether we are watching an impending train wreck in slow motion.

Commodity View Clouded By Uncertain Global Economy

The latest U.S. government statistics have cast some doubt on the sustainability of the current economic recovery. This comes at a time when international financial bodies are suggesting that many of the world’s economies are well into recovery phases. However, it seems that every time these announcements are made one government or another announces statistics that shock financial analysts. This uncertainty has been translated into the commodities market in various ways, although mostly through its impact on the value of the U.S. dollar compared to other global currencies. The most noted relationship has been the inverse relationship between the value of the dollar and crude oil prices.

Given all this uncertainty, forecasts for crude oil and natural gas prices are widely divergent for 2010. If we look solely at the oil and gas futures strip as traded on the commodities exchanges, we can see that traders expect higher prices in the future – not a surprising trend. If oil and gas producers are going to sell some of their production now for future delivery, they should expect to receive a price somewhat higher than the current price because they are giving up any possible upside that might occur due to economic or financial events, and because the currency is likely to be worth less in the future.

The sharp recovery in crude oil prices this year belies fundamental industry trends. Following last year’s rocket ride up and subsequent collapse in prices in the face of weak global economic conditions and growing OPEC production, rising global oil inventories should be depressing crude oil prices. Rex Tillerson, chairman of ExxonMobil Corp. (XOM-NYSE), suggested that based on economic fundamentals, crude oil prices should be in the $55 to $60 a barrel range rather than around $80 where prices are currently trading. Looking out, he doesn’t see global economic activity picking up sufficiently to boost oil prices out of his fundamental trading range.

On the other hand, a number of commodity traders and energy analysts suggest that crude oil prices next year should trade more in the $85 to $90 a barrel range. Their view is predicated on continuing growth in oil demand from developing economies that will more than offset the declining demand experienced in the highly industrialized economies of the world. As a result of these divergent views for oil demand, the range of oil price forecasts is one of the widest in recent memory as shown in the nearby exhibit. Current 2010 oil futures prices are largely in the upper end of that price forecast range, potentially signaling that oil futures are a risky bet at the present time.

Exhibit 8. Oil Futures Seem Unconnected To Fundamentals

Source: CME, PPHB

Superimposed on the oil price debate is the question of petroleum industry capital spending and its impact on the pace of development of new oil and gas supplies. Recently the International Energy Agency (IEA) warned about the impact of reduced investment in oil projects on the growth rate of new productive capacity. In fact, the IEA is even concerned about the sustainability of existing production given the sharp fall in industry capex spending this year. If new oil supplies are not brought forward on a timely basis, the IEA believes there could be serious supply shortages greeting the growing global oil demand they foresee.

The same phenomenon is true in the North American natural gas market. That market has been well supplied this year as production continues to remain high in the face of a collapse in gas-oriented drilling. In fact, gas production exceeds current gas demand helping to swell gas storage inventories while pushing off the need for Canadian and liquefied natural gas (LNG). The increased emphasis on, and success of, gas-shale development efforts helps explain why natural gas production has not declined as much as expected by analysts earlier this year. The recent upturn in gas drilling, coupled with the even greater initial production from gas-shale wells in the newer basins, has made it even harder for production to drop. This has helped to extend the decline in natural gas prices evident since mid-2008.

Most of the recent production declines are related to involuntary well shut-ins due to growing gas storage volumes. The 20 billion cubic feet (Bcf) of gas injected into storage last week, slightly above the analysts’ expectations, put the total gas storage inventory at a new record level of 3,833 Bcf. This represents nearly 96% of total capacity estimated at 4,000 Bcf. Most analysts anticipate possibly another two or three weeks of gas injections before we switch to gas withdrawals – quite unusual at this time of the year. These injections will push gas storage volumes to even higher record levels suggesting additional downward pressure on prices, or at least a cap on prices, as capacity approaches absolute maximum levels.

Exhibit 9. Natural Gas Storage At All-time Record Level

Source: EIA
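The storage arithmetic is easy to check. The sketch below uses the inventory, capacity and weekly injection figures quoted above and assumes, purely for illustration, that injections continue at last week's 20 Bcf pace for the two or three weeks analysts anticipate.

```python
INVENTORY_BCF = 3833         # record storage level cited in the text
CAPACITY_BCF = 4000          # estimated total capacity cited in the text
WEEKLY_INJECTION_BCF = 20    # last week's injection; assumed to persist


def utilization(inventory, capacity):
    """Share of storage capacity currently filled."""
    return inventory / capacity


current = utilization(INVENTORY_BCF, CAPACITY_BCF)
headroom = CAPACITY_BCF - INVENTORY_BCF
print(f"Current utilization: {current:.1%}, headroom: {headroom} Bcf")

projections = {}
for weeks in (2, 3):
    level = INVENTORY_BCF + WEEKLY_INJECTION_BCF * weeks
    projections[weeks] = (level, utilization(level, CAPACITY_BCF))
    print(f"After {weeks} more weeks: {level} Bcf, {projections[weeks][1]:.1%} full")
```

Even at that pace, storage would remain below the 4,000 Bcf capacity estimate, but with little more than 100 Bcf of room to spare.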

Absent a recovery in industrial gas demand, the healthy pace of production and the possibility of additional Canadian gas and LNG imports will likely keep gas prices under pressure. It is the expectations for these variables that divide the forecasts for gas prices in 2010. The range of 2010 gas price forecasts stretches from $5.25 on the low side to $7.50 on the high. With so many variables involved in the forecasts, there cannot be a high degree of confidence in any one level. In fact, a gas trader made the comment about these forecasts and the variables behind them that he thought the industry would be hard pressed to even see $5.25 next year. Talk about a negative outlook!

Exhibit 10. Gas Price Forecasts Well Above Current Prices

Source: CME, PPHB

Oil and gas are not the only commodities subject to strong pushes by market forces other than their industry dynamics. A story in last Friday’s Financial Times pointed out that even with global industrial production growing at a double-digit rate, it will not be before 2011 at the earliest that it will return to the level it was at last May before the economic and commodity price collapse. Yet since the bottom in commodity prices about 12 months ago, most commodities have experienced dramatic price increases. The paper cited nickel that is up by more than 50% and copper that has doubled in price. Crude oil prices have more than doubled during this same time period and many agricultural products have also soared in price.

To put the debate into context, the paper published two charts – one showing the month-on-month percentage change of the three-month moving average for world industrial production and the other showing the S&P/GSCI commodity indices. While world industrial production has increased nicely in the past several months, the trough created by the economic collapse of the last half of 2008 and the first part of 2009 shows how long it will be before past levels of economic activity will be achieved. On the other hand, the commodity indices chart shows the collapse in 2008, but moreover it also shows a dramatic recovery in energy and industrial metals prices this year.

Exhibit 11. Commodity Prices At Risk

Source: Financial Times

The Financial Times pointed out how commodity analysts continue to espouse bullish arguments for investing in these products. As they put it, “Commodity prices, apparently, will rise forever on the back of rapid growth in emerging markets.” But based on the current relationship between commodity prices and world industrial production levels, the FT warned its readers that “investors are best advised to ignore wailing commodity analysts – yet again.”

Did Computer Hackers Crack The AGW Cabal?

The big story over last weekend was the hacking of a computer at the University of East Anglia’s Climatic Research Unit in England, the epicenter of much of the anthropogenic global warming (AGW) research of the past decade. The hacker downloaded 62 megabytes of data including about 1,000 emails and some other files and posted them to another server where they were available for a short period of time before being removed. A number of people downloaded the data and have posted it on other web sites and are beginning to analyze the content of the emails.

The emails tend to confirm the religious fervor among these prime researchers behind the AGW movement. In some cases they discuss their dislike for critics, including how happy they were about the death of one. They also caution each other about how committed to the movement certain supporters are when those people are asked for information or help. The emails also show how at times some of the researchers worked to fake and/or manipulate the data supporting their arguments when actual scientific data was in conflict. These emails also establish co-operation among many of the leading proponents of AGW to undercut critical analyses of their research studies, especially when the critics were challenging the actual data used and the calculations. http://www.powerlineblog.com/archives/2009/11/024993.php

There will be extensive reports and analyses of these emails coming out over the next few weeks – especially with the Copenhagen climate change conference starting soon. Questions are already being raised about whether fraudulent emails have been added to the hacked data. So we expect there to be a lot of haranguing about the validity of any critical conclusions drawn from analyses of the emails. What seems evident from this data, however, is that the motives of the AGW believers were designed to build a case that would appear to be overwhelming and stimulate political action that would build momentum for increased government spending in AGW research that would be available to support these scientists. We wonder if the Nobel Prize committee has ever asked for an award to be returned.

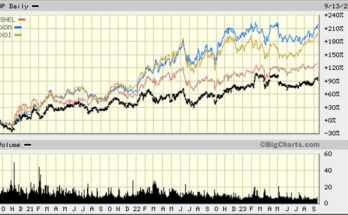

The Significance Of Warren Buffett’s Investment Bets

Earlier this month Warren Buffett surprised the world with his announcement that Berkshire Hathaway (BRK.A-NYSE), the company he runs and holds a significant interest in, would purchase the 77.4% of Burlington Northern Santa Fe Corporation (BNI-NYSE) it does not already own for roughly $26 billion, paying cash and stock for the interest. Burlington is the largest railroad by revenue and carries coal and timber from the West, grain from the Midwest and imports arriving directly from Mexico and Canada as well as from California ports. In one major move, Mr. Buffett has taken a huge step in reshaping Berkshire Hathaway.

Last week Berkshire Hathaway also released its 13-F form showing changes in its investment portfolio for both the company and its insurance subsidiaries. There were some interesting moves within the portfolio holdings that also send signals about what Mr. Buffett thinks will be the dominating investment trends in the future – or at least trends he believes he can capitalize on and make positive returns for his shareholders.

In the 13-F form, Mr. Buffett revealed that he had doubled his holdings of Wal-Mart Corp. (WMT-NYSE) and had increased his position in ExxonMobil (XOM-NYSE) by 50%, a position he had not disclosed until recently under an agreement with the Securities and Exchange Commission to not have to disclose positions he is actively investing in. While Mr. Buffett increased his ExxonMobil position to 1.2 million shares, he was further reducing his holdings of ConocoPhillips (COP-NYSE). That was a controversial investment that did not work out and resulted in Berkshire Hathaway having to take a significant write-down in the value of its holdings earlier in the year. He has been selling his position steadily. So why would Mr. Buffett invest in ExxonMobil while selling ConocoPhillips?

We are not sure it is merely about the valuation of the two companies – ExxonMobil is much cheaper and has underperformed its peer group, although ConocoPhillips also has underperformed. We think it might have to do more with Mr. Buffett’s view of the future of energy markets, the long-term growth potential for international natural gas markets and the role of ExxonMobil in renewable fuels and lithium-ion battery technology. ConocoPhillips made a large and early bet on North American natural gas with acquisitions for which it paid top dollar only to see those values decline sharply. In doing those transactions, the company levered its balance sheet and is now in a mode of selling assets to strengthen the balance sheet and reposition the company for growth.

Putting some of the recently disclosed portfolio moves together with the Burlington purchase and the existing businesses Berkshire Hathaway owns makes for an interesting mosaic of how Mr. Buffett may be seeing the future for the U.S. economy over the next 20-30 years, even though he will not be around for some of that period. In 2007, Berkshire Hathaway purchased Marmon Holdings, Inc., a company which makes industrial products. That gives Mr. Buffett more goods to send to international markets – primarily to the east and south of the U.S. – along with the coal, timber and grains that Burlington moves.

Exhibit 12. Burlington Northern Route Structure

Source: BNSF, Wikimedia Commons

The Burlington rail system is spread through the central and western parts of the United States, where growth seems to be better than in the East. His railroad purchase suggests he believes the coal industry will remain a viable global supplier along with being a significant resource supplier to electric generation plants in this country. His railroad matches up with the expanding and upgrading port facilities in Houston that will be bringing in more goods from abroad, primarily South America and Asia. Mr. Buffett also believes that oil prices will remain high, giving railroads a competitive advantage over trucks in the long-distance hauling of goods. Burlington suggests that rail is 3-4 times more efficient than trucks in moving goods. Lastly, Berkshire Hathaway has a low-cost insurance company in GEICO and significant holdings in an efficiently-run financial institution in Wells Fargo (WFC-NYSE), which, when coupled with its huge investment in Wal-Mart, suggests Mr. Buffett thinks the U.S. consumer will remain income-challenged for some period of time, a condition that will benefit these types of investments.

On the energy front, besides the bet on coal reflected in the railroad purchase, Berkshire Hathaway owns MidAmerican Energy Holdings Co., an Iowa-based utility with a growing portfolio of wind power assets. The head of MidAmerican, and a possible successor to Mr. Buffett, was the key person who investigated the technology behind BYD (BYDDF.PK), the Chinese battery and electric power vehicle company that Berkshire Hathaway has invested in. Reportedly, the BYD e6 electric car has shown a range of 250 miles on a single charge, and a 50% battery charge can be completed in ten minutes. The progress Better Place has achieved developing the technology to exchange fully-charged batteries in an automated process requiring only 90 seconds means the electric vehicle could be on the cusp of more rapid development than generally assumed.

If one is to believe the comment by a Rice University MBA student, reported in a story in the Houston Chronicle, about Mr. Buffett’s answer to his question about Peak Oil, then America is headed for a huge influx of electric vehicles. Supposedly Mr. Buffett told this student that he envisioned that in 20 years all the cars on the road will be electric. We are doubtful that was exactly what Mr. Buffett said, or meant, as it is hard to believe the global auto industry will be able to produce that many electric cars and that the fleet would turn over that quickly. But his statement and investments suggest Mr. Buffett believes our future will be driven by increased use of electricity – produced from cheap domestic natural gas, wind power and coal and LNG. He also believes we will be relying on electric vehicles manufactured overseas and delivered by Burlington along with the coal to power western electric generating plants to fuel those cars. At the same time, Americans will be living a more frugal life that includes buying low-cost insurance from GEICO and cheap goods and groceries from Wal-Mart. This sounds like a positive scenario for energy service companies.

Offshore Wind and Green Jobs Are Long-term Future

The hotbed of offshore wind development is the Northeast region of the country with the Cape Wind project nearing final approval and projects offshore Rhode Island and Delaware in early stages of development. At the eighth annual Ronald C. Baird Sea Grant Science Symposium held in Newport earlier this month, the audience was informed about the wind energy potential offshore the East Coast of the country. According to Willett M. Kempton, a professor at the University of Delaware, the annual wind resources in the region amounted to 330 gigawatts or nearly five times the estimated energy use in nine coastal states from Massachusetts to North Carolina.

The study was based on ten years of satellite data and information collected from federal meteorological buoys. If the region’s wind resources were developed to their maximum capacity, there would be sufficient electricity to power all the cars in the region and provide all of its heating needs, too. The caveat is that it will take the installation of tens of thousands of turbines in waters up to 100 meters deep to achieve this goal. And several of the speakers, including leaders of some of the initial offshore projects under development, question whether the political will and societal support to tap this potentially unlimited renewable clean energy exists.

David Cohen, president of Fishermen’s Energy, an offshore wind farm developer, questioned whether there was the support from the citizenry and governments to install hundreds, if not thousands, of offshore turbines. Mr. Cohen said, “Quite frankly, I’m not sure if the United States is willing to make that commitment.”

Jim Lanard, managing director of Deepwater Wind, the developer of the Rhode Island wind farms, said that offshore wind makes more sense for the Northeast because each turbine can create 50% more energy than ones onshore and they shift the turbines away from population centers. He pointed out that $12 billion to $18 billion of wind development is planned for the East Coast, but that it will take quite a while for that to happen. Deepwater Wind must comply with 17 federal reviews, he said, that will cost the company tens of millions of dollars for analyses and research. He is learning that while wind power may be the fair-haired child of the Obama administration, the offshore rules and regulations the oil and gas industry must comply with are not going to be waved aside for renewable energy sources. Even with state programs, Mr. Lanard said that steel won’t be going into the water before 2012.

With all this potential, the New England economy is counting on green jobs to be its economic savior. But at a conference last week in Boston, speakers said the region will gain jobs from the development of clean energy, but it could take years before there is much impact on the economy. According to Ross Gittell, the New England Economic Partnership’s vice president, “I think the green economy is part and should be part of an economic recovery, but it can’t be counted on as the single source of growth.” He and other speakers pointed to a Washington nonprofit foundation study that showed that between 1998 and 2007, green-collar jobs in the U.S. grew at a faster rate than overall jobs (9.1% vs. 3.7%), but the total number of green jobs is still tiny.

A study by the Pew Charitable Trusts for New England counted 51,000 green jobs, or 0.66% of total employment in the region. Massachusetts, which leads the region in the number of green jobs, counts only 0.69% of overall employment in that category. Rhode Island, which is hoping to make green jobs a large part of its economic recovery strategy, has only 2,328 positions, or 0.42% of total employment in the state.
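To put those shares in perspective, one can back out the total employment base each figure implies. The sketch below uses only the job counts and percentages quoted above; the implied totals are our own derivation and are sensitive to rounding in the published shares.

```python
def implied_total_employment(green_jobs, green_share_pct):
    """Total employment implied by a green job count and its percentage share."""
    return green_jobs / (green_share_pct / 100)


new_england_total = implied_total_employment(51_000, 0.66)
rhode_island_total = implied_total_employment(2_328, 0.42)

print(f"New England implied employment:  {new_england_total:,.0f}")
print(f"Rhode Island implied employment: {rhode_island_total:,.0f}")
```

On these numbers, New England's green jobs sit within a workforce of roughly 7.7 million, and Rhode Island's within a workforce of roughly 550,000.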

In his written report to the conference, Dr. Gittell pointed out that the region’s best hope for green job creation is in weatherization and improving energy efficiency in the region’s large stock of older buildings. Those jobs don’t sound like the high paying jobs that promoters of green-jobs suggest we are destined to have from this energy revolution.

Hurricane Season Ending With Little Oil & Gas Impact

The 2009 hurricane season is drawing to a close in slightly less than one week’s time. This year has proven to be among the calmest seasons for tropical storm and hurricane activity in the Atlantic basin in a long time, which turned out to be good news for the oil and gas industry but possibly disappointing news for commodity traders. The petroleum industry was rarely challenged to ramp up its emergency shut-down and evacuation efforts so oil and gas production in the Gulf of Mexico was barely upset. Hurricane Ida, the most recent and meaningful Gulf storm this year, did disrupt some production, but appears to have caused greater problems for oil imports.

Tropical storm activity over the last 12 months may have been at the lowest level experienced since the national weather organization began monitoring the Earth’s oceans by satellites in 1979. The lack of tropical storm disruptions to Gulf of Mexico oil and gas production hurt commodity prices as the continued pumping of supplies contributed to the record buildups in U.S. crude oil and natural gas inventories. In turn, these inventory builds, coupled with sustained North American oil and gas production, acted to limit the rise in prices, especially for natural gas. As a global commodity, crude oil’s price actions are influenced by factors outside of the control of the United States oil market. Oil prices were impacted in particular by the falling value of the U.S. dollar versus international currencies this year.

As shown in the accompanying chart, projections for tropical storm activity this year were continually lowered. The chart contains the history of projections prepared by the tropical storm forecasting group at Colorado State University’s (CSU) Department of Atmospheric Science, headed by Philip Klotzbach and Bill Gray. Their first estimate of the number of storms this season was issued on December 10, 2008, and it called for 14 named storms including seven hurricanes. Those totals were reduced in each subsequent forecast until the storm season commenced. The team’s last forecast, made August 1st, incorporated observed storm activity for the year up to that date, of which there was none, and reflected a further reduction to only 10 named storms including four hurricanes.

Exhibit 13. 2009 Hurricane Forecast Was Reduced Repeatedly

Source: CSU Department of Atmospheric Science

Barring some amazing development within the next few days, and history doesn’t support a change, the 2009 tropical storm season should end with a total of nine named storms and three hurricanes. Only one hurricane reached the U.S. coast (Ida), and it was only a tropical storm when it hit. Of the three hurricanes that developed this year, two attained Major Hurricane status. One storm, Hurricane Fred, which lasted about five days from September 7th to September 12th, attained a Level 3 hurricane classification for about 12 hours during its life span. Hurricane Bill, the other Level 3 hurricane, spent a longer time as a Major Hurricane during its run from off the coast of South America, over Bermuda and up by Nova Scotia, before heading across the Atlantic to Ireland and the United Kingdom.

Exhibit 14. Storms Usually Hit In Summer

Source: AccuWeather.com

In comparison to the final CSU forecast, actual storm activity for the Atlantic basin turned out to be almost 100% on target. The forecast missed the actual number of storms in each category by only one. But why were the CSU hurricane forecasts consistently reduced over the past 12 months? It was due to the development of an El Niño in the southern portion of the Pacific Ocean that influenced weather patterns in the Atlantic basin and especially contributed to the development of strong shear winds that mitigated the formation of hurricanes and weakened those that did form.

The low level of hurricane activity this year will result in 2009 ranking with the El Niño-dominated low year of 1997 when the Atlantic basin experienced only eight tropical storms, seven named storms and three hurricanes, interestingly including another hurricane named Bill. Both 1997 and 2009 were below-average tropical storm years compared to the 50-year averages of 1950-2000. Over that time period the North Atlantic basin averaged approximately 10 named storms, six hurricanes and slightly over two major hurricanes each year.

Exhibit 15. 50-Year Averages For Tropical Storms

Source: CSU, Department of Atmospheric Science

The 2009 below-average storm season further calls into question a popular view that there is a strong linkage between the number and severity of hurricanes and the global warming trend, now called climate change. A number of studies and papers have been issued attempting to show that climate change causes more and stronger hurricanes, and therefore greater storm devastation. Almost immediately following Hurricane Katrina’s 2005 destruction of New Orleans and the Louisiana, Mississippi and Alabama coastlines, Massachusetts Institute of Technology hurricane scientist Kerry Emanuel published a paper attempting to link climate change data with increased hurricane intensity. In the following years, additional papers were written to further this causation link. These papers were motivated by the four strong storms experienced in 2005 following three large ones the prior year, all coinciding with the escalating hype about the rapid heating up of the globe.

The problem with these studies is they appear to ignore certain data that would dispute the tie between increased storm activity and higher temperatures. Many students of hurricanes point to the influence of the Atlantic Multidecadal Oscillation (AMO) as the primary cause of the increase in storm frequency. The AMO is driven by changes in ocean salinity and not by rising sea surface temperatures or CO2 concentrations. While meteorologists acknowledge the rise in global temperatures during the past century, they point out that for only one region to experience an increase in storm frequency belies the belief in the linkage of sea surface temperatures and storms. The historical data shows that in the 25-year period of 1945-1969, when the globe was cooling, there were 80 major hurricanes lasting for 201 days. In contrast, in the quarter century of 1970-1994, when the Earth was warming, there were only 38 major hurricanes over 63 days.

Exhibit 16. Temperatures Have Little Impact On Storms

Source: Klotzbach and Gray

The National Oceanic and Atmospheric Administration (NOAA) uses a measurement of tropical storm energy to determine the level of a season’s activity. The Accumulated Cyclone Energy (ACE) metric combines the intensity, duration and frequency of hurricanes and tropical storms during a year. Because the quality of tropical storm data has been questionable in earlier years and in various parts of the globe, there is much dispute about what impact warming ocean waters will have on tropical storm activity in the future.
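
For readers who want to see how intensity, duration and frequency get folded into one number, the following is a minimal sketch of the conventional ACE calculation. The article does not spell out the formula; the sketch assumes the commonly cited definition (the sum of squared six-hourly maximum sustained winds in knots, counted only while a system is at tropical storm strength of 35 knots or more, divided by 10,000). The two wind histories are invented for illustration and are not data for any actual storm.

```python
# Sketch of the conventional ACE calculation (formula not given in the article).
# Assumption: readings are 6-hourly maximum sustained winds, in knots.

TROPICAL_STORM_THRESHOLD_KT = 35  # weaker readings are not counted


def storm_ace(six_hourly_winds_kt):
    """ACE contribution of one storm, in the usual units of 10^4 kt^2."""
    counted = [v for v in six_hourly_winds_kt if v >= TROPICAL_STORM_THRESHOLD_KT]
    return sum(v ** 2 for v in counted) / 1e4


def season_ace(storms):
    """Season total: the sum of every storm's contribution."""
    return sum(storm_ace(winds) for winds in storms)


# Hypothetical wind histories, invented purely for illustration.
short_weak_storm = [30, 35, 40, 35, 30]
long_strong_storm = [35, 50, 65, 80, 100, 100, 80, 60, 40]

season_total = season_ace([short_weak_storm, long_strong_storm])

print(f"short, weak storm:  {storm_ace(short_weak_storm):.3f}")
print(f"long, strong storm: {storm_ace(long_strong_storm):.3f}")
print(f"season total:       {season_total:.3f}")
```

Because wind speed is squared, a single long-lived strong storm can outweigh several brief weak ones, which is why a season's storm count and its ACE reading can tell different stories.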

A meteorology doctoral candidate at Florida State University, Ryan Maue, has been tracking the global ACE metrics in recent years with hopes of answering the question of what impact global warming may have on the frequency and intensity of tropical storms. The North Atlantic ACE reading of 52 through November 19th equates to 49% of the 30-year average reading since 1979 and 51% of the reading since 1948 – a very low reading.

Exhibit 17. Current Storm Activity Close to Record Lows

Source: Ryan Maue, Florida State Univ.

The 12-month running totals for accumulated cyclone energy for the entire globe during the past 31 years – 1979-2009 – show that earlier this year, the ACE index was at its lowest point during that time. Importantly, the ACE index for the past three years is at a historically low level, although not the lowest level ever recorded. Will it remain at this low level in the future? Or will the index rise?

Exhibit 18. Recent Storm Activity Is At Recent Lows

Source: Ryan Maue, Florida State Univ.

The best guess about the answer to the above question is that the index is not likely to stay as low as it has been in recent years. But that does not mean it will climb back above the average level experienced over the past 30 years. Meteorologists who forecast tropical storm activity believe the Atlantic basin is in a period driven by AMO trends that will act to keep the average lower than normal. It will be interesting to see what the CSU forecasters project in their first forecast for the 2010 hurricane season, which should be announced in the next several weeks. Unfortunately, the forecast won’t have much impact on oil and gas prices for many months.

Steps In Never Ending Quest To Reduce Congestion (Top)

The Dutch government has approved a plan to shift its efforts to control road traffic congestion from flat road-taxes and vehicle-purchase taxes to a per kilometer charge for driving. The plan is also thought to be a way of attacking transportation-related pollution by reducing the number of kilometers drivers travel each year. At the same time the Dutch government was acting, a non-partisan think tank in Canada, Transportation Futures, was holding a meeting in Toronto to discuss options for planners seeking solutions to unclog their local transportation systems.

In the Netherlands, beginning in 2012, the government will institute a new global positioning system (GPS) monitoring of driving patterns with taxes based upon this data instead of the vehicle-purchase tax and the annual road-tax. The annual road-tax now totals more than €600 ($900) for a mid-sized car. The government expects that with the implementation of this new taxing scheme, the cost of new cars will drop in 2012 by as much as 25%, which raises the question of whether that price reduction will actually spur new car purchases and ultimately increased congestion, fuel consumption and greenhouse gas emissions.

The Dutch government is relying on its belief that the GPS monitoring system, charging a base rate of €0.03 per kilometer traveled ($0.07 per mile) with higher charges levied during rush hour and for traveling on congested roads, will alter people’s driving habits. They believe drivers will reduce the use of vehicles and the number and distance of trips taken, along with adjusting the time of the day they travel. The government further hopes that some people will decide to abandon their cars entirely and rely on public transportation and taxis, which will be exempt from these charges. Trucks, commercial vehicles and larger cars emitting more carbon dioxide will be assessed at a higher tax rate.
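
The arithmetic behind the base rate can be checked with a short sketch. It uses only the €0.03 per kilometer base rate and the €600 road-tax figure quoted above; the exchange rate is an assumption (the $1.50 per euro implied by the €600 and $900 pairing), and the rush-hour and congested-road surcharges are left out because their levels are not given.

```python
# Back-of-the-envelope check on the Dutch per-kilometer base rate.
# Assumption: exchange rate implied by the article's EUR 600 ~ USD 900 pairing.

KM_PER_MILE = 1.609344
BASE_RATE_EUR_PER_KM = 0.03      # base rate quoted in the article
ANNUAL_ROAD_TAX_EUR = 600.0      # "more than EUR 600" for a mid-sized car
USD_PER_EUR = 900.0 / 600.0      # assumed, not an official rate


def base_charge_eur(km_driven):
    """Annual charge at the base rate only (no rush-hour or congestion surcharge)."""
    return km_driven * BASE_RATE_EUR_PER_KM


# Convert the base rate to dollars per mile.
usd_per_mile = BASE_RATE_EUR_PER_KM * KM_PER_MILE * USD_PER_EUR

# Annual distance at which the base charge alone equals the current road tax.
break_even_km = ANNUAL_ROAD_TAX_EUR / BASE_RATE_EUR_PER_KM

print(f"base rate: ${usd_per_mile:.3f} per mile")
print(f"break-even distance vs. road tax: {break_even_km:,.0f} km per year")
print(f"charge for 10,000 km: EUR {base_charge_eur(10_000):,.0f}")
```

Under these assumptions the base rate works out to roughly $0.07 per mile, and a driver would need to cover about 20,000 kilometers a year before the base charge alone matched the current annual road-tax. The comparison ignores the surcharges and the eliminated purchase tax, so it is indicative only.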

The estimated transportation benefits of this pricing switch are a drop of 15% in road traffic and rush-hour traffic being cut in half. With less traffic and fewer stressed drivers, fatal vehicle accidents should fall by 7% and greenhouse gas emissions from road travel would be cut by 10%. The per-kilometer tax burden is scheduled to rise each year until 2018, and could be adjusted sooner if it fails to change traffic patterns.

Across the Atlantic, the Organization for Economic Co-operation and Development (OECD) reports that Toronto’s traffic congestion costs the Canadian economy approximately C$3.3 billion a year in lost productivity. That cost has climbed significantly in recent years. A study, The Cost of Urban Congestion in Canada, commissioned by Transport Canada in 2002 and delivered in 2006, estimated that the cost to the Canadian economy from traffic congestion in the nine largest urban areas was between C$2.3 billion and C$3.7 billion in 2002 dollars. Some 90% of the cost was due to lost time to drivers and passengers stuck in traffic, while 7% was due to extra fuel costs and 3% from greenhouse gas emissions.

The problem today for Toronto, according to the OECD study, is that some 71% of commuters still depend on their car for transportation. The study recommends that the city begin to implement programs to reduce congestion that involve the construction of toll lanes, implementation of congestion charges and fuel and parking tax hikes. Within the next 25 years, Ontario has plans to create 450 kilometers of High-Occupancy Vehicle (HOV) lanes reserved for multi-passenger vehicles. What other congestion-busting steps the province or city will undertake is unknown at the present time. Will it adopt a system of vehicle taxation similar to what the Dutch are proposing, what is presently being used in Singapore, or what is being tested in Oregon? Or might Toronto merely adopt a taxing scheme similar to those employed by London and Stockholm? Those two cities levy either a flat-tax rate (London) or a time-of-day rate (Stockholm) on vehicles entering the central city.

Another subtle method to impact vehicle use is to restrict the availability of parking. While that would require a philosophical change in how zoning works by reducing the number of parking spaces that must be provided by business owners and landlords, it does represent a possible method for reducing both the vehicle population within an urban area and the number of vehicles entering it. Copenhagen has been following this path by taking away a few parking spots every year in what we could call an “urban-planning game of musical chairs.”

At the end of the day, these restrictions on vehicle use of road space are designed to attack congestion. But do they work? People point to the fact that shortly after HOV lanes were set up on a bottlenecked stretch of California highway between Orange County and Riverside County, almost half the drivers opted to use the less crowded toll lanes, electing to pay the toll for reduced travel times. So what do we mean by congestion? Congestion is defined as the impedance vehicles impose on each other, due to the speed-flow relationship, in conditions where the use of a transport system approaches its capacity. This definition argues that we should be working to expand transport system capacities as a way to reduce congestion. The formula for estimating congestion cost is [(Time at actual speed) – (Time at target speed)] times (Volume of traffic) equals (Total congestion delays). Unfortunately, the cost of congestion measured this way can increase even if no one is worse off.

For example, assume we have a local road with a 30-km/hour rating where vehicles are averaging 20 km/hour during peak time. We then change the designation to a 60-km/hour road and make improvements that increase the peak traveling speed to 25 km/hour. The traffic volume has not changed, but under the congestion cost formula the cost has risen even though vehicles are traveling faster. (This example comes from a University College London study.)
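
The road example can be worked through numerically. The sketch below treats delay as the extra travel time relative to the target speed, multiplied by traffic volume. The one-kilometer road length and the 1,000-vehicle volume are assumptions chosen only to make the arithmetic concrete; the study's own inputs are not given here.

```python
# Worked version of the road-redesignation example.
# Assumptions: a 1 km stretch and 1,000 vehicles, held constant in both cases.

def travel_time_hours(distance_km, speed_kmh):
    """Time to cover the stretch at a given average speed."""
    return distance_km / speed_kmh


def congestion_delay_hours(distance_km, target_kmh, actual_kmh, vehicles):
    """(Time at actual speed - time at target speed) x traffic volume."""
    per_vehicle_delay = (travel_time_hours(distance_km, actual_kmh)
                         - travel_time_hours(distance_km, target_kmh))
    return per_vehicle_delay * vehicles


DISTANCE_KM = 1.0   # assumed road length
VEHICLES = 1000     # assumed peak volume, unchanged between the two cases

# Before: rated at 30 km/hour, peak traffic averaging 20 km/hour.
before = congestion_delay_hours(DISTANCE_KM, 30, 20, VEHICLES)
# After: re-rated to 60 km/hour, peak traffic averaging 25 km/hour.
after = congestion_delay_hours(DISTANCE_KM, 60, 25, VEHICLES)

print(f"before: {before:.1f} vehicle-hours of delay")
print(f"after:  {after:.1f} vehicle-hours of delay")
print(f"change: {100 * (after / before - 1):.0f}%")
```

Every vehicle covers the stretch faster after the change (2.4 minutes instead of 3), yet the measured delay rises because the benchmark moved from 30 to 60 km/hour. The increase comes entirely from the higher target speed, not from any deterioration on the road.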

If the volume of traffic increases, the measured congestion cost can rise even if the average speed increases. When traffic volume rises by more than the speed improvement, the congestion cost rises even though the average speed is greater. This phenomenon is best shown by the data in Exhibit 19.

In this case, the total amount of time spent traveling has increased due to the increase in the number of vehicles traveling. The speed on each road type has gone down as a result of the congestion effect of the speed-flow curve. Overall, however, the average speed of travel has gone up as a result of differential growth on the road types and between peak and off-peak times. What this means is that even if you are traveling faster, the relative growth in traffic volume can actually make it appear that the cost of congestion has increased.

Exhibit 19. Speed Up But So Is Congestion Cost

Source: J.M. Dargay & P.B. Goodwin, University College London

Interestingly, government actions to deal with congestion such as those discussed above have been around for thousands of years. Some 2,000 years ago, a Roman edict was issued stating, “the circulation of the people should not be hindered by numerous litters and noisy chariots.” The ancient city of Pompeii had parking restrictions. Julius Caesar introduced the first known off-street parking laws in Rome. Vehicles were banned from the center of Rome between 6 a.m. and 4 p.m. because of the congestion of carts due to the convergence of all roads in the center of the city. The noise of chariots rumbling over the cobblestone streets supposedly drove Caesar crazy.

In AD 125, the emperor Hadrian limited the number of vehicles that could enter Rome. In AD 180, Marcus Aurelius extended the entry bans in principle to all towns in the Roman Empire, the first European continent-wide governmental policy action. So for all the improvements in technology to improve traffic flows, we are still relying on regulatory actions dating from the early years of the Roman Empire. So much for progress. Or maybe the Romans were more advanced than we give them credit for.

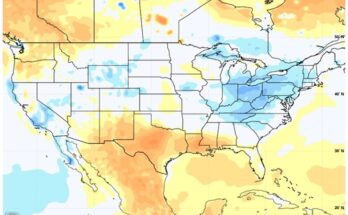

Climate Support Waning As Copenhagen Meeting Nears (Top)

The Climate Confidence Monitor survey released earlier in November shows that support for action on climate change is falling across the world, especially in the western industrialized countries. Worldwide, the concern over climate change has dropped by eight percentage points from 42% in 2008 to 34% now. In the United States, the level of concern about climate change has fallen to only 18% from 26% in 2008. In the United Kingdom the drop was even greater. British citizens’ concern about climate change is now at 15%, down from 26% last year, and in Canada the drop was to 26% from 2008’s 34% rating. The Climate Confidence Monitor is produced by the HSBC Climate Partnership, composed of organizations such as World Wildlife Fund, Earthwatch Institute and HSBC (HBC-NYSE).

What this survey is really reporting is that concern about climate change is not a particularly high priority for most people around the world. The state of economies has become the greatest concern for most people. In Australia, climate change now is ranked only number seven on the list of citizen concerns, down from first place in 2007 when the government finally approved the Kyoto Protocol. This ranking is surprising to many climate change supporters who wonder how it can rank so low given the drought and forest fires experienced in Australia in the last year.

The British government is so worried about the lack of concern by Britons about climate change that it has commenced an advertising campaign to mobilize support for efforts to reduce emissions. The government’s Department of Energy and Climate Change has begun a £5.75 million ($9.56 million) guilt-laden advertising program with a commercial showing a father reading a bedtime story to his daughter. The story details all the calamities that could befall the country from climate change prompting the little girl to ask, “Is there a happy ending?”

Recent market research showed that 52% of Britons don’t believe climate change will affect them and 18% don’t expect it to affect their grandchildren. A poll conducted by YouGov showed that 25% of people don’t expect to be impacted, up from only 13% in 2007. Fewer than half the people feel at risk from heat waves and fewer than one-third are concerned about floods – the two major concerns climate change advocates believe will hit the U.K. in the future. These low rankings come despite more Britons acknowledging that they are aware of climate change as an issue. But as the Institute For Policy Research (IFPR) put it, Britons are bored by climate change (not as stimulating as a football match) and believe that it is a “gimmick” or has become “a bit faddy” and is driven by a “bandwagon” effect. The IFPR believes that one of the major problems is that the cost of being green is too great for most people.

The climate change problems in Britain are consistent with polls from other venues such as the European Union where only 50% of the people said in April that climate change was the world’s greatest problem versus 62% in 2008. In the United States, a Pew Research Center poll showed that 35% of Americans believe global warming is a serious problem, but that total was down by nine percentage points since last year.

In Canada, a poll taken by Hoggan & Associates for a number of corporations and other entities showed that concern about the environment ranked third behind the state of the economy and health care among citizens. They gave the environment only a 12% ranking. In a separate poll of 1,000 people that Hoggan termed “thought leaders,” or top members of business, universities, government and the media, the environment was the number one concern with a 32% weighting.

What all these polls and market research studies show is how the cost of climate change is becoming more important to people given the state of the global economy. Additionally, they show that despite Al Gore’s claim that “the debate is over,” there remains a high degree of skepticism about the entire global warming science. So Copenhagen, rather than being the peak of the summit, will now become merely a meeting at which people work on principles for a climate change agreement with a goal of legislation sometime in 2010. Is this an example of “kicking the can down the road” or a sign that another wave of social upheaval has been exhausted?

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.