- The New IPCC Report Lands With A Thud

- We’re Back, With Greater Appreciation For The Real World

- Renewable Power Loses Out To Natural Gas In Power Market

- Trying To Dodge Florence On Trip Home Was Unnecessary

Musings From the Oil Patch

October 23, 2018

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

The New IPCC Report Lands With A Thud

On October 8th, the Intergovernmental Panel on Climate Change (IPCC) issued its anxiously awaited report about the challenges of actually limiting the world’s temperature increase to 1.5°C, rather than the prior goal of limiting it to something less than 2°C. This lower increase was agreed to at the UN’s Paris Climate Initiative in late 2015, and this report was a mandated requirement of the agreement. That was because the challenges and costs of restricting the increase to a half a degree Centigrade lower had not been previously assessed. The title of the report: GLOBAL WARMING OF 1.5°C, does not capture the magnitude of the issue, but the sub-heading does a better job. The sub-heading states: “an IPCC special report on the impacts of global warming of 1.5 °C above pre-industrial levels and related global greenhouse gas emission pathways, in the context of strengthening the global response to the threat of climate change, sustainable development, and efforts to eradicate poverty.” That statement makes clear the motivation behind the climate change movement and the breadth of its research and political agendas.

We have not completed reading the entire report, which consists of five chapters, but still needs over 1,000 pages to cover the topic. Although this report’s length is shorter than many of the typical IPCC treatises, it is still too long for reporters to wade through before writing their articles. As a result, the media, as well as politicians, rely on the 34-page Policy Statement. This brief summary of the report’s research is designed to advance the universally embraced agenda of the climate change movement, and often is at odds with the underlying research included in the body of the reports. The public’s recognition of that reality likely explains why the report has received so little attention from the general public, despite the herculean efforts of the media to promote the report’s scary conclusions. Every poll continues to show climate change and global warming rank at the bottom of the public’s list of concerns.

The 2015 Paris Accord was lauded for its “ground-breaking” scope. It was hammered out by some of the most liberal political leaders in the world at the time, all hoping to hone their credentials for their seriousness in fighting the forces driving climate change and the potential impacts that would be inflicted on their nations and residents. From the perspective of nearly three years since the Paris agreement, the massive global political leadership shift may be a testament to why the public doesn’t consider climate change to be the primary reason for redesigning lifestyles and risking their economic well-being. A close reading of the new report confirms how little its authors, relying on thousands of academic research papers and books on the issues involved in climate change, know of the true risks of carbon emissions – whether they are as serious as claimed and the risks of the policies recommended to address them.

A quick review of the Paris Accord shows that many of the optimistic claims about the agreement’s power were well-crafted political statements designed to promote those leading the charge to secure an agreement. Remember, this was the first opportunity to address the failure of the Copenhagen meeting six years earlier, which fell apart when various countries worked to undermine the Obama administration’s leadership role in climate change. That was the meeting that President Barack Obama flew to as he basked in the halo of accepting the Nobel Peace Prize. At the meeting, the attempt by President Obama and Secretary of State Hillary Clinton to mastermind the negotiations was fought by other leaders.

The Paris Accord set forth a goal to lower the maximum amount of warming to be allowed from 2°C (3.6°F) to “well below 2°C.” The agreement retained the voluntary system of pledges, known as INDCs (Intended Nationally Determined Contributions), meaning that each country would submit its goal and plan to limit its greenhouse gas emissions. There is no mandatory requirement for such a goal and plan, and certainly no penalty assessed for failure to meet a goal. The mandatory and penalty requirement would begin with new goal submissions in 2020, when the next IPCC meeting would be held.

Pointed to as an example of the success of the Paris meeting was the announcement by China, the world’s largest carbon emitter, that it would undertake to have its emissions peak no later than 2030, and presumably begin declining thereafter. However, as pointed out in an article by John Sterman, Jay W. Forrester Professor of Management at the MIT Sloan School of Management and a faculty director of the MIT Sloan Sustainability Initiative, if all the country pledges are fully implemented, expected warming by 2100 would fall 1°C (1.8°F) compared to what it would be if no actions were taken.

But, assuming that all the promises were fully implemented (and without stronger actions in the future that are not yet pledged), expected warming would be 3.5°C (6.3°F). In other words, ignoring all the hoopla surrounding the agreement, the results would fall far short of the goal enunciated by the people driving the outcome, although there would be some progress compared to doing nothing.
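The Fahrenheit figures quoted above follow from the fact that a temperature difference converts by the 1.8 scale factor alone, without the 32-degree offset used for absolute readings. A minimal Python sketch of that arithmetic, using only the numbers cited above, is shown here. The function and variable names are our own, and the no-action figure is simply implied by adding the 1°C pledge benefit back to the 3.5°C estimate.

```python
def delta_c_to_f(delta_c):
    """Convert a temperature *difference* from Celsius to Fahrenheit degrees."""
    # A difference scales by 9/5; the +32 offset applies only to absolute readings.
    return delta_c * 9 / 5


pledged_warming_c = 3.5  # expected warming by 2100 with all pledges implemented
pledge_benefit_c = 1.0   # reduction attributed to the pledges versus no action
paris_ceiling_c = 2.0    # the "well below 2°C" upper bound

# Implied warming if no action were taken (our inference from the two figures).
no_action_warming_c = pledged_warming_c + pledge_benefit_c

# How far the pledged outcome sits above the Paris ceiling.
overshoot_c = pledged_warming_c - paris_ceiling_c

for label, value in [
    ("Pledged warming", pledged_warming_c),
    ("Pledge benefit", pledge_benefit_c),
    ("Implied no-action warming", no_action_warming_c),
    ("Overshoot above 2°C ceiling", overshoot_c),
]:
    print(f"{label}: {value:.1f}°C ({delta_c_to_f(value):.1f}°F)")
```

On these figures, the pledges would leave the world at least 1.5°C above the ceiling the agreement set for itself.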

With the unveiling of this IPCC report, the media went into overdrive with dire warnings about the short time remaining for radical global action if the world as we know it is not to experience doomsday: 2040, or 12 years from now. That horizon is slightly longer than most doomsday predictions made for the planet and civilization. According to an article in The New York Times, the report “paints a more dire picture of the immediate consequences of climate change than previously thought and says that avoiding the damage requires transforming the world economy at a speed and scale that has ‘no documented historic precedent.’” Civilization’s demise will come with “worsening food shortages and wildfires, and a mass die-off of coral reefs.”

The article quotes Bill Hare, an author of previous IPCC reports and a physicist with Climate Analytics, a nonprofit organization, declaring the report “quite a shock, and quite concerning.” He went on to say, “We were not aware of this just a few years ago.” Was his tongue firmly planted in his cheek when he said that? His statement was the modern version of Captain Renault’s “I’m shocked, shocked to find that gambling is going on in here!” from the 1942 movie, “Casablanca.” By his statement, I’m guessing Mr. Hare slept through Al Gore’s 2006 movie, “An Inconvenient Truth.” We did, but it was from boredom rather than terror!

According to Steven Hayward, a political scientist and former Reagan administration official, his first recording of a ten-year doomsday prediction was made in 1970 by then UN leader U Thant. Since then, ten-year apocalypse forecasts have become a regular feature of the climate change as well as other environmentally-motivated movements. Some of these forecasts are made by serious academics and professionals, while others are by “crackpots.” The most interesting forecast, which still has a few years left before reaching verdict time, is from an MIT computer in 1972. It was a computer program called World1, which predicted that civilization would likely collapse by 2040. The computer had been programmed to consider a model of sustainability for the world.

An Australian broadcaster recently recirculated a 1973 newscast about the computer program, commenting that it had identified 2020 as the potential tipping point for a world collapse. The program was commissioned by the Club of Rome, a group of scientists, industrialists and government officials focused on solving the world’s problems. In 1972, the group, led by scientists Donella and Dennis Meadows and using an updated computer model (World3), authored the book, The Limits to Growth, which predicted that the combination of trends dealing with population, food production, industrialization, pollution and consumption of nonrenewable natural resources would result in the collapse of civilization by 2072. This was more distant than the World1 forecast of 2040, with 2020 as its tipping point!

The Meadows’ book was published at the height of the initial climate-awareness push with its attendant doomsday prediction phenomenon. Much of that was associated with the first Earth Day in 1970, and was coupled with the popularity of Professor Paul Ehrlich’s 1968 book, The Population Bomb, predicting the end of the world due to overpopulation and the lack of food supply, i.e., Thomas Malthus reincarnated.

A recent article discussing the World1 computer prediction newscast and The Limits to Growth highlighted the book’s critics, including The New York Times. The editors wrote, “Its imposing apparatus of computer technology and systems jargon…takes arbitrary assumptions, shakes them up and comes out with arbitrary conclusions that have the ring of science.” It concluded that the book was “empty and misleading.” Can anyone imagine that being written by the paper today? The evolution of climate reporting by The New York Times since the early 1970s, as evidenced by the above quotes, says a good deal about the decline in media skepticism and the paper’s embrace of social agendas in its reporting.

That contrasts with Mr. Hayward’s reliance on the wise counsel of former President George W. Bush about truthful warnings: “Fool me once, shame on you; fool me twice. . . Won’t get fooled again.” Mr. Hayward referenced President Bush’s advice for why he would not be reading the full IPCC report. He has read most of them, he said, which means slogging through upwards of 3,000 pages, definitely something reporters would never do. He did offer that he might read the chapter on computer modeling, as he always found it humorous. However, he was struck by a tweet by Eric Holthaus of the environmental web site Grist, that made him wonder whether he still needed to read this IPCC report.

Exhibit 1. Is This Really IPCC’s Motivation?

Source: PowerLine.com

Scientists have once again confirmed the political nature of the climate movement. It was tipped off by the sub-heading of the report. Mr. Holthaus linked his tweet to a paragraph in the summary section for Chapter 4 of the report, which deals with the necessary transitions required to make the pathway to a faster reduction in carbon emissions feasible. The paragraph was under the heading “Enabling Rapid and Far-reaching Change” and states:

“Increasing evidence suggests that a climate-sensitive realignment of savings and expenditure towards low-emission, climate-resilient infrastructure and services requires an evolution of global and national financial systems. Estimates suggest that, in addition to climate-friendly allocation of public investments, a potential redirection of 5% to 10% of the annual capital revenues is necessary. This could be facilitated by a change of incentives for private day-to-day expenditure and the redirection of savings from speculative and precautionary investments, towards long-term productive low-emission assets and services. This implies the mobilisation [sic] of institutional investors and mainstreaming of climate finance within financial and banking system regulation. Access by developing countries to low-risk and low-interest finance through multilateral and national development banks would have to be facilitated (medium evidence, high agreement). New forms of public-private partnerships may be needed with multilateral, sovereign and sub-sovereign guarantees to de-risk climate-friendly investments, support new business models for small-scale enterprises and help households with limited access to capital. Ultimately, the aim is to promote a portfolio shift towards long-term low-emission assets, that would help redirect capital away from potential stranded assets (medium evidence, medium agreement).”

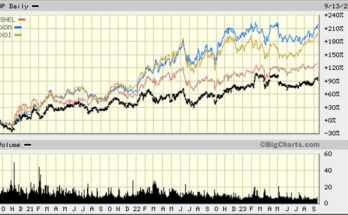

Exhibit 2. IPCC Would Siphon Off Corporate Profits

Source: St. Louis Federal Reserve, PPHB

As Mr. Hayward put it, “Decoded from that gobbledygook is a straightforward ratification of what I’ve always said: the solution for every environmental problem is always massively more government control of people and resources.” That is clear from the very first sentence that talks about “realignment of savings and expenditure” and then the “evolution of global and national financial systems.” As part of that effort, there needs to be not only an increase in “public investments,” but also a reallocation of “5% to 10% of annual capital revenues.” The chart in Exhibit 2 shows the history of after-tax profit margins for American business from 1947 through 2016. The gray shaded area is the range from 5% to 10% profit margins, the estimated amount of money that needs to be “re-directed.” As shown, it means we would have eliminated all the profits of industry in the economy for most of the 70-year post-war era!

Potentially the most revealing statement in the cited paragraph is the acknowledgement of the need for “multilateral, sovereign and sub-sovereign guarantees to de-risk climate-friendly investments.” That statement signals what many students of renewable energy have argued – renewables are uneconomic without government subsidies, meaning higher costs for the public through either increased energy prices or increased taxes to pay the subsidies, and sometimes both. The authors could have just as easily quoted Warren Buffett saying that the only reason to build wind farms is for their tax credits.

In this one paragraph, the world is shown the true agenda of the climate change movement and the costs to be imposed on economies and civilization for what may yet prove to be un-needed actions. The latter part of that previous statement becomes clear when reading all the acknowledgements throughout the IPCC report about what the authors of the 6,000+ scientific papers that form the knowledge-base of the study don’t know about the technology they are promoting as solutions for global warming.

Highlighting only a few statements from Chapter 4 shows that the report’s authors are aware that they don’t know a lot about the cost, timing and/or impacts of what they are proposing as solutions for climate change mitigation. For example: “Knowledge gaps around implementing and strengthening the global response to climate change would need to be urgently resolved if the transition to 1.5°C worlds is to become reality.” The inability to resolve this problem makes moot most of the remainder of the IPCC report.

The authors further state: “Unless affordable and environmentally and socially acceptable CDR (Carbon Dioxide Removal) become feasible and available at scale well before 2050, 1.5°C-consistent pathways will be difficult to realise [sic] …” We are not sure what year “well before” translates into, but the technology being tested is far from perfect and hugely uneconomic, with prospects it won’t become economic for many years. The public probably won’t tolerate the government throwing untold sums at this technology.

Another challenge for climate change mitigation has to do with civilization’s diet. The report states: “Some estimates indicate that livestock supply chains could account for 7.1 GtCO2, equivalent to 14.5% of global anthropogenic greenhouse gas emissions. Cattle (beef, milk) are responsible for about two-thirds of that total, largely due to methane emissions resulting from rumen fermentation.”

The authors went on to acknowledge: “There, however, remains limited evidence of effective policy interventions to achieve such large-scale shifts in dietary choices, and prevailing trends are for increasing rather than decreasing demand for livestock products at the global scale. How the role of dietary shift could change in 1.5°C-consistent pathways is also not clear.”

In the end, the following statement highlights the greatest challenge for the IPCC and global policy-makers: “The challenge is therefore how to strengthen climate policies without inducing economic collapse or hardship, and to make them contribute to reducing some of the ‘fault lines’ of the world economy.” This statement came before the paragraphs dealing with the IPCC’s estimates of the annual global cost to effect the climate change mitigation actions, about which they said, “Yet there is limited evidence and low agreement regarding the magnitudes and costs of the investments.” (Emphasis in the original.) Therefore, one should treat all the estimates of the cost of mitigation from the IPCC with a grain of salt. As with all government estimates, assume they are understated, which isn’t obvious until we are too far down the path to reverse course!

Despite the media’s attention to the IPCC report, it has totally ignored one of the more significant disclosures about the fundamental basis underlying climate change studies. That was a report of the disastrously poor quality of the leading historical global temperature database, which underlies the climate models delivering the apocalyptical predictions.

Australian researcher John McLean, PhD, has conducted an audit of the creation and content of the Hadley Centre’s Global Temperature Dataset (HadCRUT4). The study was initially done for his doctoral thesis, and it was reviewed by experts external to the university, revised in accordance with their comments and then accepted by the university. This is the equivalent of “peer review.” Dr. McLean found the dataset riddled with errors and inconsistencies, rendering it, in his view, virtually useless, especially for use by such a prestigious organization as the IPCC. His thesis utilized the dataset as of late January 2016. His new report was based on the January 2018 versions of the files and contained updates not just for the intervening 24 months, but also additional observation stations and consequent changes in the monthly global average temperature anomaly right back to the start of the data in 1850. (This fact relates to the attempt to modify global temperature data to make the more recent readings appear warmer relative to the past.)

Dr. McLean said of the database: “It’s very careless and amateur.” He went on to state that it is “about the standard of a first-year university student.” Among errors he found were the following:

- Large gaps where there is no data and where instead averages were calculated from next to no information. For two years, the temperatures over land in the Southern Hemisphere were estimated from just one site in Indonesia.

- Almost no quality control, with misspelled country names (“Venezuala,” “Hawaai,” “Republic of K” (aka South Korea)) and sloppy, obviously inaccurate entries.

- Adjustments – “I wouldn’t be surprised to find that more than 50 percent of adjustments were incorrect,” says McLean – which artificially cool earlier temperatures and warm later ones, giving an exaggerated impression of the rate of global warming.

- Methodology so inconsistent that measurements didn’t even have a reliable policy on variables like Daylight Saving Time.

- Sea measurements, supposedly from ships, but mistakenly logged up to 50 miles inland.

- A Caribbean island – St Kitts – where the temperature was recorded at 0 degrees C for a whole month, on two occasions (somewhat implausible for the tropics).

- A town in Romania, which in September 1953, allegedly experienced a month where the average temperature dropped to minus 46 degrees C (when the typical average for that month is 10 degrees C).

Dr. McLean pointed out that data sparsity was a real problem. According to the method of calculating coverage for the dataset, 50% global coverage wasn’t reached until 1906, and 50% of the Southern Hemisphere wasn’t reached until about 1950. As Dr. McLean wrote:

“In May 1861 global coverage was a mere 12% – that’s less than one-eighth. In much of the 1860s and 1870s most of the supposedly global coverage was from Europe and its trade sea routes and ports, covering only about 13% of the Earth’s surface. To calculate averages from this data and refer to them as ‘global averages’ is stretching credulity.”

Dr. McLean also pointed out problems with the method of adjusting the temperature data. The dataset alters all previous values by the same amount. So, where the change in the temperature is gradual, such as in a growing city, the methodology distorts the true temperature, adjusting all past data by more than it should have been. With observation stations relocated multiple times, the earliest data might be far below its correct value, exaggerating the warming trend.
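To make the adjustment argument concrete, here is a toy Python sketch of our reading of Dr. McLean’s point. It is not the Hadley Centre’s actual procedure, and every number in it is invented for illustration: a station whose readings creep up gradually as a city grows around it is relocated, and the full step change seen at relocation is then subtracted from every earlier reading.

```python
# Toy illustration only: all numbers are invented, not taken from HadCRUT4.
TRUE_TEMP = 10.0        # assumed constant regional temperature, deg C
DRIFT_PER_YEAR = 0.1    # assumed gradual urban warming at the old site
YEARS_BEFORE_MOVE = 10

# Raw readings at the old site: true temperature plus accumulated urban drift.
raw = [TRUE_TEMP + DRIFT_PER_YEAR * year for year in range(YEARS_BEFORE_MOVE + 1)]

# At relocation to a clean site, the reading drops by the full accumulated drift.
step = DRIFT_PER_YEAR * YEARS_BEFORE_MOVE

# Uniform adjustment: shift every earlier value by the same step.
uniform = [reading - step for reading in raw]

# Gradual adjustment: remove only the drift accumulated up to each year.
gradual = [reading - DRIFT_PER_YEAR * year for year, reading in enumerate(raw)]

# Warming that appears in the adjusted record but never happened.
spurious_warming = TRUE_TEMP - uniform[0]

print(f"Earliest value, uniform adjustment: {uniform[0]:.2f}")
print(f"Earliest value, gradual adjustment: {gradual[0]:.2f}")
print(f"Spurious warming from uniform adjustment: {spurious_warming:.2f}")
```

In this toy case the uniform adjustment pushes the earliest reading a full degree below the true value, while the year-by-year correction leaves the record flat, which is the distortion Dr. McLean describes.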

Dr. McLean’s key conclusion from his audit is that “the dataset shows exaggerated warming and that global averages are far less certain than have been claimed.” He suggested three potential impacts from his primary conclusion. First, the climate models have been tuned to match incorrect data, rendering incorrect predictions of future temperatures and estimates of human influence on temperatures. Second, the Paris Climate Agreement adoption of 1850-1899 averages as “indicative” of pre-industrial temperatures is fatally flawed. During that time period, the global coverage averaged only about 30%, and many of the land-based temperatures are likely excessively adjusted and therefore incorrect. Finally, even if the IPCC is correct in its claim that mankind has caused the majority of the warming since 1950, the amount of such warming could well be negligible. In fact, Dr. McLean has suggested that about one-third of the 0.6° C temperature rise since 1950 may be exaggerated.

As Dr. McLean points out, “Most people can’t even notice a change in temperature of 1 degree C from one moment to the next. So, the idea that governments are spending so much money on the basis of a rise in temperature a fraction of that spread over almost 70 years is just idiotic beyond belief.”

Before dismissing Dr. McLean’s work, it should be noted that he is the person who uncovered the fact that the IPCC’s 2007 Assessment Report, which, according to the IPCC, represented a “consensus” of the views of “2,500 climate scientists,” was actually based on the opinions of just 53 scientists. This small group of scientists wrote the key Chapter 9 conclusion that human-induced warming was detectable on every continent except Antarctica and was leading to the melting glaciers and sea ice, changing rainfall patterns, and more intense cyclone activity supposedly being observed (a conclusion the IPCC is backing away from). These 53 scientists later were shown to be part of a close professional network affiliated with Michael Mann of the “hockey stick” temperature fame, which was uncovered during the East Anglia University climate change email scandal.

A problem the Hadley Centre and the Met Office (its collaborator) have with challenging Dr. McLean’s work is that in March 2016 he advised them of certain errors, which they promptly corrected. His credibility was accepted then, making him an authority they take seriously.

Unfortunately, the climate change movement will work to whitewash any serious examinations of its analyses, including of the quality of the data underlying the models producing the results. The conclusion in the latest IPCC report proclaiming a “knowledge gap” existing in how best to modify the world’s economy and civilization’s lifestyle is a claim for more money for climate change research. That, ultimately, is the scam of the climate change movement.

We’re Back, With Greater Appreciation For The Real World

You may have noticed in the last Musings that we said our next issue would be about a month away. Early last week, we arrived home from two weeks in the South African bush country. We took advantage of an aggressive push by Turkey to boost its economy, especially its tourist trade, by cutting airfares on Turkish Airlines, making the airfare portion of our trip’s cost quite reasonable. It did mean we had to commit two travel days getting between Houston and South Africa. To reduce the stress of the trip, we allotted a day of rest in Johannesburg going and coming. On the way back, we used our day to sightsee in Johannesburg, which we were surprised to learn is about the same age as the opening of the American west. A highlight of the travel over and back was the opportunity to take two layover tours in Istanbul at different times of the day, something that became a bit more interesting on the way back. Layover tours are a popular promotion effort of Turkish Airlines designed to help the nation’s tourist trade.

The reason for our trip was to spend ten days with the team of guides, cameramen and producers of the YouTube show, safariLIVE. This is a twice daily, three-hour, interactive safari show. The safaris are run at the Maasai Mara National Reserve in Kenya and the Djuma Private Game Reserve in the Sabi Sands/greater Kruger National Park area in South Africa, which is where the show is headquartered. The safari show is produced by Wildearth TV, which has an affiliation with National Geographic. We had helped the backers of the show in a “kickstarter” effort to begin a similar interactive show underwater – diveLIVE. In return for our financial support, we were invited to spend time with the safariLIVE crew and learn about the wild animals of the South African bush country.

We spent two days in a jeep with a safari guide, who is televised by a cameraman in the back while he talks about what is being shown and answers questions sent in by viewers. When the guide isn’t on camera, you can talk about the land, the animals and the foliage. In our two trips, we experienced some of the most amazing sights – a leopard with a kill, which was stolen by a hyena, and the leopard’s attempt to get it back, and tracking a female leopard leading her cub for several miles to a kill that the mother had hidden for the youngster to eat, before finding a tree to put the remainder in for safe-keeping for the night. We went back the next day on one of our two ride-walk tracking exercises and found the mother leopard and cub under the tree, grooming themselves after having eaten the remainder of the kill.

Exhibit 3. A Friendly Get Together In The Bush

Source: Allen Brooks

We also participated in two bush walks with a guide, a cameraman and a tracker/safety person. Three hours of walking in the bush to track the oldest male leopard in the area was an interesting experience. The other walk started out tracking two new male lions who had come into the area, but instead we encountered two herds of elephants meeting up and migrating through the Djuma area. It was impossible to know exactly how many elephants there were due to the wide range of ages – babies, youngsters and adults, but we counted at least 25 – an amazing scene!

Exhibit 4. A Snooze After Dinner

Source: Allen Brooks

Wildearth TV is working to involve as many elementary and middle schools from around the world in helping students learn about the wild animals in southern Africa. Part of that effort involves arranging for the installation of internet connections in some schools in Asia and Africa, so the children can watch the show and learn.

A most interesting experience was on our layover in Istanbul on the way home. This was the day the media was reporting that the government of Saudi Arabia was about to admit to having killed the journalist and Saudi dissident Jamal Khashoggi in an interrogation and potential kidnapping that went bad. This was also a few days after the Turkish Supreme Court convicted American Pastor Andrew Brunson of aiding terrorism after being detained for two years, but sentenced him only to time served, thereby freeing him to travel home to the United States. For those who may not remember, Istanbul was the scene of violence during an attempted overthrow of the government headed by President Recep Tayyip Erdogan, and the airport was the site of a terrorist attack in 2016. Given that the Saudi journalist’s death occurred in the Saudi Arabian consulate in Istanbul, Turkish officials were on high alert. While we didn’t see any signs of this tension near the Hagia Sophia in town, we did notice an increased police presence in the airport, with officers openly carrying automatic weapons. When we went to board our flight to Houston, we had to go through several more levels of security screening. Fortunately, there was no incident, and our flight left only a few minutes late.

We commend safariLIVE to our readers who want to see and learn more about the African wild animals in their habitat. The young people (20s and 30s) who are responsible for putting on the shows for six hours a day are an enthusiastic and dedicated group we were pleased to spend time with. It was a great experience!

Renewable Power Loses Out To Natural Gas In Power Market

A recently updated analysis by the University of Texas (UT) at Austin’s Energy Institute shows natural gas combined cycle, wind and residential solar photovoltaic technologies to be the least-expensive ways to generate electricity across much of the United States. The interactive model uses a range of power generating technologies and ranks them based on their levelized cost of electricity (LCOE). The map evolved from an analysis conducted, and initially reported in 2016, with input from a wide range of UT experts. Their expertise covered mechanical engineering, electromechanics, chemical engineering, public policy, public affairs, and finance, among others, all used to help calculate the LCOE of new power plants on a county-by-county basis and by congressional districts in the country, while also providing estimates of some environmental externalities.

The technologies examined for generating electricity included: coal (bituminous and sub-bituminous, with 30% and 90% carbon capture and sequestration); natural gas combined cycle, with and without carbon capture and sequestration; natural gas combustion turbine; nuclear; onshore wind; solar photovoltaic (PV) at both utility and residential scale; and concentrating solar power (with six hours of storage).

The methodology employed by the UT Energy Institute is designed to overcome some of the inherent flaws in LCOE calculations. The cost of building and operating an identical power plant in different geographies will be different due to local construction cost differentials. Likewise, fuel costs, capacity factors and financing terms will differ across regions. LCOE does not incorporate these differences. Additionally, LCOE can be problematic due to the assumption of constant capacity factors over the life of the plant’s operation. As a result of these concerns, the Energy Institute’s analysis incorporates region-specific data on plant capital expenditures, operating and maintenance expense, as well as fuel costs. The result of this effort to more closely identify the true costs of new power plants by region is to produce calculated LCOEs that are more reflective of true electricity costs by region.
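For readers unfamiliar with the calculation, the following Python sketch shows the textbook form of LCOE, which is discounted lifetime cost divided by discounted lifetime generation, and how region-specific inputs move the result. It is not the Energy Institute’s model. The function names, the two regions and every input value are hypothetical, chosen only to show why the same plant can carry a different LCOE in different places.

```python
HOURS_PER_YEAR = 8760


def annual_generation_mwh(capacity_mw, capacity_factor):
    """Yearly output of a plant running at the given average capacity factor."""
    return capacity_mw * capacity_factor * HOURS_PER_YEAR


def lcoe(capex, annual_om, annual_fuel, annual_mwh, discount_rate, years):
    """Levelized cost of electricity in $/MWh (textbook discounted form)."""
    # Discounted lifetime costs: up-front capital plus yearly O&M and fuel.
    costs = capex + sum(
        (annual_om + annual_fuel) / (1 + discount_rate) ** t
        for t in range(1, years + 1)
    )
    # Discounted lifetime generation.
    energy = sum(
        annual_mwh / (1 + discount_rate) ** t for t in range(1, years + 1)
    )
    return costs / energy


# Hypothetical inputs for the same 1,000 MW plant built in two regions.
CAPACITY_MW = 1000
regions = {
    "Region A": {"capex": 900e6, "annual_om": 15e6,
                 "fuel_per_mwh": 22.0, "capacity_factor": 0.60},
    "Region B": {"capex": 1100e6, "annual_om": 15e6,
                 "fuel_per_mwh": 30.0, "capacity_factor": 0.50},
}

lcoe_by_region = {}
for name, r in regions.items():
    mwh = annual_generation_mwh(CAPACITY_MW, r["capacity_factor"])
    lcoe_by_region[name] = lcoe(
        r["capex"], r["annual_om"], r["fuel_per_mwh"] * mwh, mwh,
        discount_rate=0.07, years=30,
    )
    print(f"{name}: ${lcoe_by_region[name]:.2f}/MWh")
```

Note that this simple version holds the capacity factor constant over the plant’s life, which is exactly the simplifying assumption flagged above as a weakness of standard LCOE.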

The latest map (generated in early October) shows that natural gas combined cycle is the cheapest technology for large swathes of the eastern and western regions of the United States (light brown). This conclusion is true even at a natural gas fuel cost of $5.07 per million British thermal units (MMBtu). Solar PV is the cheapest power for most of Arizona, Colorado, Wisconsin, Minnesota and Missouri (purple). Wind provides the cheapest power for much of the central U.S., New York, and small swathes of Appalachia (green).

Exhibit 5. Where Natural Gas Is Cheapest Power

Source: Powermag.com

What is most enlightening is a similar map showing the cheapest technologies based on current natural gas prices (in this case, $3.24/MMBtu). In contrast to the previous map, this one shows natural gas winning in Arizona, much of the Panhandle of Texas, most of Oklahoma, and nearly half of New York. The latter is amusing as Governor Andrew Cuomo (D) has banned the development of natural gas resources in the state by hydraulic fracturing, and has blocked new and expanded pipelines that would cross the state to haul gas to New England.

Exhibit 6. Low Gas Prices Win More Of Country’s Power

Source: Powermag.com

The significance of these two maps, especially the one utilizing current natural gas prices, is that natural gas wins in the battle to be the cheapest source of electricity in almost every region where solar and wind power are being forced into the grid via government mandates and/or subsidies. An argument for emphasizing wind and solar is the significant declines in their capital costs – an 80% drop in the cost of solar panels in recent years, for example. The reality is that when based on more precise cost data for building new power plants, wind and solar prove to be less competitive than touted by environmentalists and politicians. Quite possibly, the message delivered by these maps is what is prompting the growing attacks against increased use of natural gas as a “bridge” fuel to a cleaner energy world. A truly competitive playing field for power fuels would leave renewables with a much smaller national footprint. That might be an outcome utility customers would welcome.

Trying To Dodge Florence On Trip Home Was Unnecessary (Top)

Given the timing of our last Musings issue and our recent trip to Africa, delivering this narrative about the impressions from our trip home from Rhode Island seems like ancient history. What we know, though, is that many of our readers are interested in our observations from the road during these trips. They find that our assessment of the state of the economy, as reflected by observing highway traffic and scenes at food establishments we visit, is a unique perspective not found in other publications. We have often observed things about the state of the U.S. economy others have not reported, or we confirm what current economic statistics are telling us. This particular trip was influenced by the then-impending arrival of Hurricane Florence and the possibility that our usual route home would be subject to storm conditions. As a result, we did more advance planning, including seeking alternate routes to avoid possible storm conditions.

About a week before we left, the weather services began tracking tropical disturbance Florence, then off the west coast of Africa. She began as a tropical disturbance on August 30 with wind speeds of under 39 miles-per-hour (mph), and began her journey westward. Five days later, her intensity had increased to hurricane status, meaning wind speeds exceeded 73 mph. As she continued traveling west, her strength fluctuated, even dropping to tropical storm status (wind speeds of 39-73 mph) at one point. Once she reached the extremely warm waters of the Atlantic Ocean, Florence rapidly intensified, growing into a Category 4 hurricane with sustained winds of 130-156 mph. The weather services began talking about Florence possibly reaching Category 5 status with sustained winds above 156 mph.
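The wind-speed thresholds behind these labels can be captured in a few lines. The sketch below is our own illustration: the function name is hypothetical, and the Category 1 through 3 boundaries, which are not cited above, are taken from the National Hurricane Center's Saffir-Simpson Hurricane Wind Scale.

```python
def classify_storm(sustained_mph: float) -> str:
    """Label a tropical cyclone by its maximum sustained wind speed in mph."""
    if sustained_mph < 39:
        return "tropical depression"
    if sustained_mph < 74:
        return "tropical storm"
    # Lower bound (mph) of each hurricane category, strongest first.
    # Categories 1-3 are not given in the article; they come from the
    # Saffir-Simpson Hurricane Wind Scale.
    category_floors = [(157, 5), (130, 4), (111, 3), (96, 2), (74, 1)]
    for floor_mph, category in category_floors:
        if sustained_mph >= floor_mph:
            return f"Category {category} hurricane"
    # Unreachable: any speed of 74 mph or more matches the last entry above
    raise ValueError("unexpected wind speed")


# Example wind speeds spanning the stages described in the text
for mph in (35, 60, 80, 140, 160):
    print(mph, "mph:", classify_storm(mph))
```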

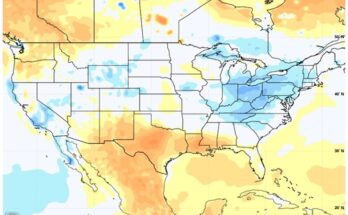

Exhibit 7. Possible Coastal Targets Of Florence

Source: NOAA

The question for forecasters was whether Florence would drive straight across the Atlantic and slam into the Carolina coast, bend southward to target Florida, or turn northward to hit the Mid-Atlantic and/or New England regions. There was even a computer model showing Florence making a sharp right-hand turn after passing Bermuda and heading back out to sea and ultimately toward the UK and Europe.

Florence grew to be a large storm, meaning its winds and rain would extend well beyond the eye of the storm and impact most of the Southeast from Virginia to Florida. The storm’s reach actually extended further as rip currents impacted the Rhode Island beaches. We could actually hear the roaring waves from our house. The zone of confidence for Florence’s path, which encompasses all the possible tracks of the storm’s eye, was wide. The storm’s arrival time at the U.S. coast was targeted for late Thursday or very early Friday, September 13-14. That would coincide with our departure planned for Friday morning.

We contemplated the possible track of Florence, which at the time was targeting the North Carolina/Virginia border for landfall and then continuing into western Virginia and north to Pennsylvania. That route would have us driving through rain all of Friday, with the possibility we would encounter traffic delays. With that possibility in mind, we still decided to leave early Friday, but only planned on going about a third of our 1,900-mile trip’s distance to Roanoke, Virginia. That would normally be a 10-hour drive. Allowing for expected delays, we thought that was a reasonable distance given the weather conditions we anticipated.

There were evacuation orders for residents along the coast. From prior experience, we knew hotels along our route would be targeted by evacuees, so we booked a room at a Hampton Inn in Roanoke that we have stayed at in the past. It is right off the highway, so easy to find, even if the weather was a challenge.

Thursday was extremely busy due to rain during the first three days of the week. We needed to deliver our boat to the boatyard for winter storage, close up the house, move everything in from outside, garage our Rhode Island car, pack, load our car, eat dinner and go to bed early. Friday dawned with the sun out, blue sky and white puffy clouds – no signs of what was being unleashed along the Carolina coastline.

We headed down I-95 with only a few trucks and moderate auto traffic. We passed New Haven and then Bridgeport, and our car’s guidance system suggested we detour to avoid stopped traffic ahead. We shifted from I-95 to the Merritt Parkway. The Merritt Parkway was the nation’s original super-highway through Fairfield County in southern Connecticut. It connects the Hutchinson River Parkway in New York with the Wilbur Cross Parkway starting in Stratford, Connecticut. The Merritt, as it is often called, is a limited-access highway (no trucks) known for its scenic layout and architecturally-elaborate overpasses. With its history and beauty, it is designated as a National Scenic Byway and is listed in the National Register of Historic Places.

Traffic on the Merritt Parkway, surprisingly, was relatively heavy, but it did move right along. We assumed the heavy traffic was influenced by people avoiding the I-95 traffic delay about which our guidance system had alerted us. The one blessing of using the Merritt Parkway is that there are no trucks! We soon were crossing the newly opened westbound span of the Governor Mario M. Cuomo Bridge, aka the Tappan Zee Bridge, over the Hudson River. It will always be the Tappan Zee to us. By the time we stopped for lunch near Harrisburg, Pennsylvania and the I-81 junction, we realized we had been flying. As we drove through Maryland around 2:30 pm, we realized we could go further than Roanoke if conditions held, or we would get there before dinner, making for a very short day. Originally, we were thinking about a two-night trip. We also thought Roanoke provided an option of going west if Florence impacted the highways. Neither option seemed to be needed, so we cancelled our reservation and made another about 150 miles further, in Bristol, Tennessee. No sooner had we made that switch than we were hit with a near white-out rain storm. We wondered if we had just made a monumental mistake, but conditions cleared and we arrived in Bristol about 9 pm.

Truck traffic had been quite light all day, with the exception of a few stretches. Those were always where trucks bunched up because they cannot outrun each other due to speed restrictions set by the trucking companies. Rolling hills create serious problems for trucks wishing to pass slower ones. We are not sure how this will be improved with autonomous trucks. We did observe that truck stops and highway rest areas were roughly 80% full of trucks in the afternoon. We wondered if the new mandatory rest periods and daily work limits have drivers adjusting their schedules in order to drive more miles during the times when auto and local truck traffic declines, i.e., during the night. That thought seemed to be confirmed by the number of trucks passing our hotel that night.

At 6:45 pm, the Cracker Barrel restaurant in Roanoke, Virginia was comfortably full, but had no waiting line. Service was prompt, so we were back on the road quickly and to our hotel by 9 pm. As we were driving this last leg, we enjoyed the sky. Massing gray mushroom-shaped clouds extended from the horizon high up into the sky and were connected by dark cloud bridges. The sky was an array of pink and blue striations of different hues, reflecting the rays of the setting sun. From Pennsylvania south, the sky had grown cloudier, reflecting the impact of Hurricane Florence, and we encountered a few brief showers. The sky that evening did not reflect what we envisioned was happening just a few hundred miles to the east.

Day two dawned with a bright blue sky. We later encountered clouds, which we assumed were rain bands from Florence as she slowly worked herself across the Carolinas. We encountered brief showers in Tennessee and Alabama, but most of the rain that day occurred along the Gulf Coast. Traffic continued light, although it was a Saturday. Maybe it was helped by the cancellation of football games throughout the Virginia/North Carolina region. We did encounter electronic Game Day traffic warning signs in Knoxville, Chattanooga, Birmingham and Tuscaloosa. By the time we reached Baton Rouge, the LSU Tigers were finishing a last-minute upset of Auburn, which kept the stadium traffic down as we assumed people were glued to their seats.

Exhibit 8. The Track Of Hurricane Florence

Source: Weather Underground

With no serious construction delays and light traffic, we rolled on. Our only delay was for about 30 minutes as we crawled along a four-mile stretch of I-12 due to a tractor-trailer accident and fire that burned up some cars being hauled. We saw fewer than a dozen police cars on the entire drive. Several had pulled over speeders, while others were monitoring the traffic flow. It didn’t look like a big revenue-generating weekend for local governments!

With another easy in-and-out dinner in Lafayette, we headed to Houston, skipping a second night on the road. We pulled into our driveway about 10:30 pm. With the additional hour gained by crossing into the Central Time Zone, we drove 1,044 miles in an elapsed time of 16 hours. For anyone mathematically-challenged, that is an average of 65.25 mph, with most of the trip on roads with 70 mph speed limits.

How much Florence reduced the volume of truck traffic we have been experiencing in recent years is hard to know. We believe it had some impact. Overall, gasoline prices were low, although much has been made by the media about how much higher they were this year compared to last. Everywhere we encountered people, we came away thinking that the economy was pretty upbeat. There were scattered signs in store windows advertising job openings. All the restaurants had permanent signs highlighting not only that the establishment was seeking new employees, but why it made sense to come to work for them – wages, flexible hours, and importantly, aid for continuing education. Overall, there were not as many help wanted signs as in trips of the past several years, but the low national unemployment rate may explain why. Despite all our concerns over how Florence might impact our trip home, this drive turned out to be one of the easiest we have experienced in a number of years.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.