- Accidents Haunt Canada’s Rail Transportation Of Crude Oil

- New Study On Youth Driving Says It’s All About Economics

- Al Gore Tilting At Windmills But With Significant Support

- EIA Introduces New Data To Help Understand O & G Output

- Has EPA Become The Punching Bag For Industry And States?

- Acceleration In Patents Should Help World’s Energy Markets

Musings From the Oil Patch

October 29, 2013

Allen Brooks

Managing Director

Note: Musings from the Oil Patch reflects an eclectic collection of stories and analyses dealing with issues and developments within the energy industry that I feel have potentially significant implications for executives operating oilfield service companies. The newsletter currently anticipates a semi-monthly publishing schedule, but periodically the event and news flow may dictate a more frequent schedule. As always, I welcome your comments and observations. Allen Brooks

Accidents Haunt Canada’s Rail Transportation Of Crude Oil

Last July, 47 Canadians were killed when a runaway train loaded with Bakken crude oil crashed and burned in Lac-Mégantic, Quebec. That accident, which wiped out a portion of the town’s downtown, was the focus of media attention for days while the fire burned and the search for victims was underway. About two weeks ago, Canada experienced another petroleum-related rail crash near Gainford, Alberta, about 80 kilometers (50 miles) west of Edmonton. The Canadian National Railway Company’s (CNI-NYSE) train was carrying both propane and crude oil. Fortunately, no one was hurt and property damage was limited, although road traffic was disrupted when the fire from the accident jumped a four-lane highway. This was the third accident for CNI in the past month involving hazardous materials.

While transportation of hazardous goods by rail in Canada is very safe, the spate of train accidents, and the tragic loss of life in Lac-Mégantic, has Canadians nervous. A column in the Calgary Herald recited the string of accidents this year. Besides the Lac-Mégantic and the recent Gainford accidents, there was the derailment of an ammonia-laden train just a couple of days earlier near Sexsmith, Alberta. In September, 17 railcars carrying petroleum, ethanol and chemicals from a CNI train derailed in Saskatchewan. Canadian Pacific Railway (CP-NYSE) experienced two train derailments in Calgary since June involving petroleum products.

The latest rail accident prompted Wendy Tadros, chair of the Transportation Safety Board of Canada (TSB), to remark, “There has been an erosion of public trust.” Ms. Tadros had previously written an op-ed in The Globe and Mail suggesting that the shift in the public’s confidence in rail transport of hazardous goods will force the industry to adjust its thinking regarding safety.

She said, “It’s no longer enough for industry and government to cite previous safety records or a gradual, 20-year decline in number of main-track derailments.” In her op-ed, Ms. Tadros commented that the railroads needed to address many issues that “the TSB has been advocating for years.” Those issues involve installing more of the best trackside detection systems, employing modern fail-safe methods for stopping runaway trains and only shipping hazardous goods in tank cars that meet the highest safety standards. Improving the safety of trains hauling hazardous goods, such as petroleum products, is important for winning back the support of citizens. Safety will be important if the industry plans to expand its use of railroads for moving crude oil and petroleum products.

Exhibit 1. Rail Increases Shipping Flexibility And Profits

Source: Platts

Why is the safe transportation of goods by rail so important? Over the past several years, the greater flexibility offered by railroads for getting crude oil and petroleum products to their most profitable markets has grown in importance for the energy industry. Rail transportation volumes have grown significantly and are predicted to increase even more in the future at the expense of pipelines. A recent study by Phil Skolnick of Canaccord Genuity estimates that 130,000 barrels a day of Canadian heavy oil is being shipped by rail now. That compares to an estimate from the Association of American Railroads that nearly 200,000 railcars in Canada carried oil or petroleum products in the first seven months of 2013, a 20% increase over the same period in 2012. One industry estimate projects two million barrels a day of North American petroleum will be moving by rail by the end of 2014. Mr. Skolnick’s analysis suggests that the planned rail loading terminals in Alberta will add an incremental 425,000 barrels a day of heavy oil shipping capacity over the next two years, possibly eliminating the need for the Keystone XL pipeline.

Exhibit 2. Rail Shipments May Offset Pipelines

Source: Platts

Canadian media articles focusing on the safety of rail transportation of crude oil and petroleum products cite the TSB report of only 63 derailments in Canada in 2012, down from an annual average of 107 over the previous five years. The articles also point out that there had been 56 derailments through July of this year. However, those figures only relate to main-track derailments. Those are the most serious accidents and would include the rail accidents described above. While there were only 63 accidents resulting in the leaking of dangerous goods in 2012, which compares to the average for 2007-2011 of 64, the story becomes somewhat different if you look at total rail accidents. Those figures show that for the past four years there have been slightly more than 1,000 accidents per year, down from around 1,400 accidents on average in the 2003-2006 period.

Exhibit 3. Recent Number Of Rail Accidents Lower

Source: TSB

The distribution of accidents in 2012 is informative. Nearly half of the total accidents were non-main-track derailments. Are those serious? It is difficult to know, but anytime a railcar carrying dangerous cargo goes off the track, one should assume that there is a risk to life and property.

Exhibit 4. 2012 Rail Accident Distribution

Source: TSB

There has been improvement in the number of main-track derailments, as the total has fallen by more than half from earlier years. In the case of main-track collisions, there doesn’t appear to be meaningful improvement. However, the overall total has been fairly low throughout the entire 2003-2012 period.

Exhibit 5. Main-track Rail Accidents In Canada

Source: TSB

What we found to be of greater concern is the number of fatalities related to railroad accidents. TSB reports deaths by crossing accidents (those killed in an accident at a railroad/highway crossing), trespasser accidents (where a person is killed while being on railroad property illegally) and all others. While the trend in trespasser accidents appears to be downward, the number of crossing accidents doesn’t seem to have shown any improvement (see Exhibit 6). There will be an increase in the All Others category following this year’s Lac-Mégantic accident that killed 47.

Exhibit 6. Classification Of Rail Accident Deaths

Source: TSB

As shown by the information in Exhibits 1 and 2, the use of rail to ship crude oil and petroleum products to markets is becoming a permanent part of the industry’s transportation system. The shifting volumes of liquids and the desire of oil and gas companies to maximize their profits virtually necessitate the increased use of rail. Some may be comforted by the statement in an interview by Canada’s Transport Minister Lisa Raitt that “Over 99.9 per cent of the time the dangerous good makes it to its final destination.” Others are concerned that the 0.1% of the time the goods don’t make it just isn’t good enough, since there could be more Lac-Mégantics. The Railway Association of Canada says the dangerous goods safety performance is actually 99.9977 per cent of rail shipments in the country. The industry, both the railroads and the energy companies, needs to be seen as working to increase that ratio to 100%.
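To put the two safety percentages quoted above on a common footing, the short sketch below converts each success rate into failures per million shipments. It is only illustrative arithmetic: the function name is ours, and because the minister said "over" 99.9 per cent, the first figure should be read as an upper bound rather than a measured rate.

```python
def failures_per_million(success_pct: float) -> float:
    """Convert a success percentage into failures per one million shipments."""
    return (100.0 - success_pct) / 100.0 * 1_000_000


# Figures quoted in the text; the labels are our own shorthand.
minister_bound = failures_per_million(99.9)        # "over 99.9 per cent" -> upper bound
association_rate = failures_per_million(99.9977)   # Railway Association of Canada

print(f"Minister's figure implies at most {minister_bound:.0f} failures per million shipments")
print(f"Association's figure implies about {association_rate:.0f} failures per million shipments")
```

The gap between roughly 1,000 and roughly 23 failures per million shipments shows why the choice of statistic matters so much in the public debate.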

New Study On Youth Driving Says It’s All About Economics

One of the great mysteries about the decline in vehicle miles driven in the United States is why America’s youth are delaying securing drivers’ licenses and driving. A recent study from the U.S. Centers for Disease Control and Prevention (CDC), based on survey data, estimated that the proportion of high school seniors with a driver’s license fell from 85% in 1996 to 73% in 2010. The proportion of high school seniors who reported that they didn’t drive during an average week rose from 15% to 22% over the same period. Demographic data has suggested that part of the explanation for the decline in youth driving is that there are fewer young people, but that is not necessarily what is impacting their driving habits.

The Highway Loss Data Institute (HLDI) conducted a study of youth driving, which confirmed the results of the Centers for Disease Control and Prevention study. Relying on insurance data, the HLDI study found a similar decline in teen driving. Analysts looked at changes in the number of rated drivers ages 14-19 under collision insurance policies in 49 states and the District of Columbia. A rated driver on an insurance policy is typically the driver in the household considered to represent the greatest loss potential for the insured vehicle, usually the teen driver if there is one in the household. From 2006 to 2012, the number of rated drivers ages 14-19 declined 12%. During that same period, the population of teens ages 14-19 declined by only 3%. Clearly, there was something else impacting teens securing licenses and driving.

To understand what may be going on with teen drivers, rated drivers ages 35-54, referred to in the HLDI study as prime-age drivers, were looked at for comparison. That category of drivers also fell, but not as sharply as the decline in teen drivers. The result was fewer teen drivers relative to prime-age drivers. The ratio of teen drivers to prime-age drivers fell from 0.042 in 2006 to 0.038 in 2010 and then remained relatively constant through 2012. By utilizing a ratio, it was possible to control for changes in the number of teen drivers due to factors not specific to teenagers.
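The size of these shifts is easier to judge as percentage changes. The sketch below uses only the figures quoted above (the 12% drop in rated teen drivers, the 3% drop in teen population, and the ratio moving from 0.042 to 0.038); the helper and variable names are ours, and the "rated drivers per teen" measure is our own simple derivation, not a statistic reported by HLDI.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


# Ratio of teen rated drivers to prime-age rated drivers, 2006 vs. 2010.
ratio_change = pct_change(0.042, 0.038)

# Rated teen drivers fell 12% while the teen population fell 3% (2006-2012),
# so rated drivers per teen moved from 1.0 to 0.88 / 0.97 of the starting level.
drivers_per_teen_change = pct_change(1.0, (1 - 0.12) / (1 - 0.03))

print(f"Teen-to-prime-age driver ratio: {ratio_change:.1f}%")
print(f"Rated drivers per teen:         {drivers_per_teen_change:.1f}%")
```

Both measures fall by a little over 9%, which is consistent with the point that population decline alone explains only a small part of the drop in teen drivers.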

One of the factors examined by the HLDI study was the differences in unemployment rates between teens and prime-age workers. The unemployment rate, defined as the percentage of the total labor force that is unemployed and actively seeking employment, increased for both groups between 2006 and 2010. However, the rise was sharper for teenagers — 11 percentage points compared with only five percentage points for prime-age workers. The spread in unemployment, measured as the difference in unemployment rates for the two groups, increased at the height of the recession in 2009 and then leveled off after 2010.

There was an inverse relationship between the growing unemployment spread of the age groups and the declining ratio of teen drivers to prime-age drivers. Population changes and changes in the age for initial state licensing contributed somewhat to the decline in the teen driver ratio, but the HLDI study concluded that 79% of the decline was associated with the increasing unemployment spread.

Exhibit 7. Youth Unemployment A Driving Problem

Source: HLDI

The conclusion of the HLDI study was that teenagers still have a desire to secure their drivers’ licenses, own vehicles and drive. The problem is that the economics of owning and driving vehicles has become prohibitive due to the recession and weak recovery, as confirmed by the sustained high unemployment rate for teenagers, especially relative to prime-age drivers. Many of us have focused on the social aspects of the Internet, Twitter and other electronic interaction replacing meeting in person as the reason why teenagers are not driving. The results of this study suggest that this may not be the sole reason for their lack of vehicle ownership and use. A demonstrably improved economic environment might lead to a reversal in the trend of youthful driving that could revive vehicle miles driven in the U.S.

Al Gore Tilting At Windmills But With Significant Support

Nobel Laureate, former Vice President of the United States and prominent private equity investor Al Gore has recently re-entered the media limelight over his claims about climate change. He has declared that the world is in the midst of a “carbon bubble” that is going to burst – or at least he is going to do everything in his power to help it to burst – and it will contribute to economic problems. Besides his investing, Mr. Gore is currently the chairman of The Climate Reality Project, a non-profit organization devoted to solving the climate crisis. It is not a coincidence that this new media climate effort follows on the release of the summary report for policy makers of the UN’s Intergovernmental Panel on Climate Change that upped its assessment of the imminent danger posed to the world’s climate and citizens from the continued release of carbon into the atmosphere.

For those in the energy business, carbon is what you deal with every day. However, that daily interaction may dull your senses to the growing global effort to attack your business – both its legitimacy and its financial viability. These attacks are becoming more creative and as such potentially more damaging. The public relations war over energy has been underway for decades, or at least since the first Earth Day in Philadelphia in 1970 that led to the passage of the Clean Air Act.

Mr. Gore was seen in the video declaring, “We have a carbon bubble.” He went on to define a “bubble.” According to Mr. Gore, “Bubbles by definition involve a lot of asset owners and investors who don’t see what in retrospect becomes blindingly obvious. And this carbon bubble is going to burst.” While Mr. Gore’s bubble definition is generally accepted, energy industry people should note the increased targeting of company owners and investors, since this is the angle of attack on the fossil fuel industry today.

During the video, Mr. Gore focused on the estimated $7 trillion in carbon assets on the books of multinational energy companies and how that value might be impacted by successful legislative efforts to prevent those assets from being produced. Mr. Gore stated, “The valuation of those companies and their assets is now based on the assumption that all of those carbon assets will be sold and burned.” If the anti-fossil fuel proponents are successful in keeping those assets in the ground, then there will be a negative valuation impact for the companies that own them. The estimate of the value of trapped carbon assets Mr. Gore quoted came from a report issued by Carbon Tracker, a non-profit organization focused on climate change policy. That organization, in conjunction with the Grantham Research Institute on Climate Change and the Environment at the London School of Economics and Political Science, prepared the report entitled Unburnable Carbon 2013: Wasted capital and stranded assets.

The Grantham Research Institute is funded by the Grantham Foundation for the Protection of the Environment and endowed by Jeremy Grantham, the highly successful co-founder and chief investment strategist of Boston-headquartered $108 billion money manager GMO, and his wife. The 74-year-old Mr. Grantham hails from Yorkshire, England, and is credited with having called the Internet bubble and then the housing bubble. Because his negative views ran counter to these popular investment theses at the time, he was dubbed a “perma-bear,” meaning someone who is always negative on the outlook for investing in the stock market. That moniker, however, was eliminated by Mr. Grantham’s advice to GMO’s clients to get back into the stock market in early 2009 in the exact week the market established its post-Lehman Brothers low.

Mr. Grantham’s Malthusian investment views on minerals and food have evolved over the past decade. This has been a dangerous investment thesis historically because technology, human ingenuity and societal demand shifts have altered trends projecting ultimately toward global shortages. A few years ago, Mr. Grantham conducted a study of the price trends for commodities of all types and found that over the 20th Century they had declined across the board by 70%. Between 2002 and 2008, those prices recovered and soared to new highs in many cases, having reclaimed 100 years of price declines in a mere six-year span. He believes the resource game changed due to the explosion of growth in China, which, with a population of 1.3 billion people, consumes 45% of all the coal used in the world, 50% of all the cement and 40% of all the copper. These are consumption levels Mr. Grantham believes are unsustainable.

As Mr. Grantham and his staff researched natural resources seeking to find those critical for our sustainability, he seized upon phosphate, or phosphorus, as the most attractive. This is a necessary chemical for every living being and we are mining it and depleting it. He went to professors and asked about the chemical’s future and was told, “We have plenty.” But he persisted, asking what happens when we run out of it. He was assured, “There is plenty.” As Mr. Grantham says, there is plenty, but 85% of the low-cost reserves are located in Morocco and are owned by the King of Morocco. The rest of the world’s reserves have about a 50-year remaining life if the world’s economy and its population don’t grow rapidly. As Mr. Grantham pointed out, phosphate ownership is much more concentrated than that of Middle East oil.

Fossil fuels are one area where Mr. Grantham is very concerned, not from the perspective of there being too few reserves left in the world, but rather from the damage being caused to the environment by burning them. Mr. Grantham has even participated in protests against the Keystone XL pipeline due to the damage he foresees from increased consumption of bitumen from Canada’s oil sands. The Grantham Foundation is sponsoring research institutes, one of which collaborated with Carbon Tracker in the latest report on wasted capital from unburnable carbon resources.

In the report’s letter to readers, the following statement was made:

“Carbon Tracker’s work is now used by banks such as HSBC and Citigroup and the rating agency Standard & Poor’s to help focus their thinking on what a carbon budget might mean for valuation scenarios of public companies. The IEA is conducting a special study on the climate-energy nexus which will consider the carbon bubble. Together with our allies, we have brought it to the attention of the Bank of England’s Financial Stability Committee. We await their reaction to this analysis with great interest.”

It is difficult for energy company CEOs, their executives and directors to understand the degree of passion against their business that the anti-fossil fuel movement holds. Much like previous battles over social issues, the goal of the antagonists is to paint the opposition in a bad light. Every campaign needs a target that can be demonized while generating sympathy for innocent victims. Whether it is pictures of polar bears struggling to move from one ice floe to another, birds dripping in oil from a major oil spill or melting glaciers, these pictures become vehicles for generating public sympathy against fossil fuels that are painted as the cause of these problems.

While the Keystone pipeline permit has been the leading environmental target for the anti-fossil fuel movement, the real target is stopping the use of Canadian oil sands, which are perceived as the “dirtiest” of all the fossil fuels available. President Barack Obama’s climate speech last June provided ammunition for the anti-fossil fuel movement. He urged his audience of college students (the speech was at Georgetown University) to push for divestment of the shares in oil and gas companies held by their university endowments. The energy divestment effort mushroomed under the guidance of an organization, 350.org, founded by environmental writer and activist Bill McKibben. Schools such as the University of Pennsylvania, Cornell University, Harvard University and Swarthmore College, to name just a few, became divestment targets. This effort has spread to over 300 college campuses across the nation, but to date the victories have been few. Only a handful of tiny schools such as Unity College, a small liberal arts school in Maine with an endowment of $14.5 million, Green Mountain College, College of the Atlantic, Hampshire College and Sterling College have divested their energy shares.

On the other hand, the University of Pennsylvania with its $7.7 billion fund and Harvard University with the world’s largest college endowment, valued at $32.7 billion, have elected not to divest their energy holdings. It appears the divestment movement has had more success in Europe, where Norwegian pension fund and insurer Storebrand says it has excluded an additional 19 coal and oil sands companies from its investment portfolio, and Holland-based Rabobank will no longer lend money to companies involved in shale gas extraction, or even make loans to farmers who lease their land to shale gas extraction companies.

With the energy divestment effort proving less successful than hoped for, the anti-fossil fuel movement has increased its activism. Environmental protestors plan to stage sit-ins at the offices of energy companies and even at the offices of industry regulators. There has been an increase in environmental litigation against energy companies over their projects and against federal regulators for failing to stop some of them. Environmental protests are shifting from passive to militant. Attacking energy companies by motivating their investors and regulators is the latest effort to sway investment in fossil fuels.

Last Friday’s Financial Times carried a front-page story about a letter signed by 72 investors including several U.S. state pension funds and large pension fund managers, warning energy companies that they may be investing in productive capacity that may never be used. The letter was sent to 45 companies including large oil and gas companies and mining groups. The letter urges the companies to carry out a “risk assessment” of the consequences of a global move to cut greenhouse gas emissions by 80% by 2050.

Andrew Logan of Ceres, the investor network coordinating the letter, said, “Investors have already been burnt by coal, because of the sudden drop in demand in the U.S., and there’s a concern that oil is going to go the same way.” Every reputable long-range energy forecast predicts that fossil fuels will meet a significant share of the world’s future energy needs. Those forecasts are what energy company executives fall back on in arguing that they should be investing their shareholders’ money in new oil, gas, coal and uranium resources, which will be needed to meet growing future global energy needs.

While it is difficult to see these anti-fossil fuel efforts gaining significant traction, one would have said the same thing about the first Earth Day, the anti-tobacco effort, the anti-apartheid boycotts of South Africa and various other socially-motivated movements throughout history. Energy executives must understand that, whether they like it or not, and whether they approve of it or not, new energy sources will gain market share. Renewables are here to stay, economic or not, because a portion of our society has determined they should be a part of our energy supply mix and legal action will be taken to ensure their role. Natural gas-powered and electric vehicles will represent a growing portion of America’s transportation fleet. Energy companies also can expect increased regulation that will squeeze profit margins. Boards of directors of energy companies increasingly will be challenged over their enterprise risk assessment of their company’s business model in a world regulated to reduce carbon emissions. But just as Thomas Watson wouldn’t recognize the modern computing industry, Henry Ford, the latest automobiles, or the Wright brothers, the modern airplane, the energy industry of the next quarter century will be different from the one that survived the 1985 oil price collapse.

EIA Introduces New Data To Help Understand O & G Output

The U.S. Energy Information Administration (EIA) introduced new data series last week to help analysts and forecasters understand industry dynamics underlying today’s oil and gas markets. The agency has shifted its focus for reporting new data series from a state- and product-oriented basis to a basin-oriented one. This shift is significant as it recognizes that virtually all the increases in liquid and gas output have come from six basins in the country where producers are exploiting the resources trapped in the shale underlying those basins. The EIA has also made a significant analytical move by understanding that more than half of the new wells drilled in the U.S. produce both crude oil and natural gas making the focus on one particular fuel targeted by a drilling rig of diminishing value.

Exhibit 8. Six Basins Yield New Data Analysis

Source: EIA

The six basins analyzed include the Eagle Ford, Permian, Haynesville, Niobrara, Bakken and Marcellus. The EIA’s approach in its new data report, and its analysis of the trends, isolates the impact of drilling on production growth per working rig in each basin. The information is presented in a summary report for the six basins along with a separate page for each basin. On the basin-specific pages, data and charts highlight oil and gas production trends from the start of 2007 to now. The entire data presentation is based on a belief that drilling efficiency and well productivity increases, not gains in the rig count, are the reasons for the growth in basin production. This belief is the essence of what the analytical community has been seeking to understand and explain, in order to apply the latest data to projections of future petroleum output. In this case, the EIA projects the current and next month’s output. This data and analysis is a very good start, although based on the information released by the EIA there are questions about how easily it can be used. As with scientific experiments, analysts like to be able to replicate the EIA’s analysis, which may be impossible given the lack of clarity about what the data represents and how it is used.
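To illustrate the idea that productivity gains rather than rig count gains can drive output, here is a minimal sketch of a per-rig production projection. It is not the EIA's published method, which the release does not spell out in enough detail to replicate. The decomposition (new-well output per rig times rigs, less a decline from existing wells) is our assumption, and every number and name below is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BasinMonth:
    """One month of hypothetical basin inputs (all values are made up)."""
    rigs: int                       # working rigs
    new_well_output_per_rig: float  # b/d added by new wells, per rig
    legacy_decline: float           # b/d lost from existing wells (positive number)


def net_output_change(month: BasinMonth) -> float:
    """Assumed decomposition: new-well additions minus decline of existing wells."""
    return month.rigs * month.new_well_output_per_rig - month.legacy_decline


def project_output(current_output: float, months: List[BasinMonth]) -> List[float]:
    """Roll basin output forward one month at a time."""
    path = []
    output = current_output
    for month in months:
        output += net_output_change(month)
        path.append(output)
    return path


# Rig count is flat at 180; only per-rig productivity and legacy decline change.
months = [
    BasinMonth(rigs=180, new_well_output_per_rig=450.0, legacy_decline=60_000.0),
    BasinMonth(rigs=180, new_well_output_per_rig=480.0, legacy_decline=62_000.0),
]
projection = project_output(900_000.0, months)
print(projection)
```

In this made-up case output rises in both months, and rises faster in the second, even though the rig count never changes, which is the pattern the basin-level presentation is designed to expose.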

The first question is about the definitions of oil and gas. Does the data for oil mean only crude oil or does it include all liquids – crude oil, natural gas liquids (NGLs) and lease condensate? In the case of natural gas, does the production data mean gross gas withdrawals or is it net of re-injected or flared gas? These definitional issues may not seem like significant points, but for those who are detail-oriented and/or attempting to tie the new data series to earlier data or proprietary analyses, the answers to these questions are important.

Another area of inquiry is to understand the exact geographical regions covered. For example, in North Dakota, the state reports about 50,000 barrels per day (b/d) of conventional oil with the balance being shale oil output. So the discrepancy between the EIA Bakken total oil production and that reported by the North Dakota Department of Mineral Resources raises a question as to exactly which figure is being reported. The geographical definition is a significant issue for the Eagle Ford formation that spans numerous counties in Texas. Exactly which counties are included in the EIA’s Eagle Ford definition can make a difference as to the number reported and how it might change in the future. These are just some of the unanswered questions at the moment. However, it is likely these issues will be answered in the coming months – and possibly the presentation will be adjusted to become even more robust and useful for analysts.

We decided to play around with the data from a presentation basis and concluded that only the oil data could easily be displayed in one graph. All the data for oil output and activity in the Bakken is in Exhibit 9. When we attempted to put the similar data for natural gas from the Marcellus into one chart, the relative sizes of the numbers created a challenge, forcing us to re-scale the output numbers to make everything fit. Visually, these charts do show the general trends but are not as easy to analyze as the separate charts contained in the one-page summaries of each basin.

Exhibit 9. The Source Of U.S. Oil Output Growth

Source: EIA, PPHB

Exhibit 10. Changing The Nation’s Gas Supply

Source: EIA, PPHB

With time, there will likely be a number of studies trying to explain the exact reasons for the production gains. Analysts will utilize the rig efficiency and well productivity measures to attempt to predict future oil and gas output trends. People will need the answers to the questions we posed above in order to effectively utilize the data. However, the shift to a basin focus is an important development. The EIA data, coupled with the basin-oriented rig data from Baker Hughes (BHI-NYSE), should improve analysts’ understanding of the dynamics of oil and gas shale production trends, a welcome development.

Has EPA Become The Punching Bag For Industry And States?

On September 20th, the Environmental Protection Agency (EPA) announced new regulations to limit greenhouse gas emissions from stationary sources including newly built coal-fired power plants. The agency is still considering proposing guidelines for limiting carbon emissions from existing coal-fired power plants, but those rules would have to be enforced by the various states since the regulations would come under another section of the Clean Air Act (CAA). The newly proposed rules are based on the expanded authority the agency has been handed since the 2007 Supreme Court case of Massachusetts v. EPA. In that case, the EPA Administrator had decided not to regulate greenhouse gas emissions, including carbon dioxide emissions, from automobiles because there was a scientific investigation underway and these pollutants were being reduced by the agency’s efforts to boost the fuel efficiency of automobiles, which was the favored approach.

The plaintiffs in the Massachusetts case argued that carbon dioxide was specifically identified in the CAA legislation so the action of the EPA Administrator to not regulate that emission was a clear violation of the law and the Federal courts should enforce the regulation. The relevant section of the CAA stated: “The Administrator shall by regulation prescribe (and from time to time revise) in accordance with the provisions of this section, standards applicable to the emission of any air pollutant from any class or classes of new motor vehicles or new motor vehicle engines, which in his judgment cause, or contribute to, air pollution which may reasonably be anticipated to endanger public health or welfare.”

At the root of the case was the battle over whether greenhouse gases, including carbon dioxide, were tied to the rise in global temperatures and climate change. What the court ruled was that under the CAA, the EPA Administrator actually needed to make a declarative ruling, one way or the other, on that issue. The EPA couldn’t elect to avoid the issue as it appeared to be doing. That mandate became a clarion call for the new EPA Administrator, Lisa Jackson, an Obama appointee, to act. On December 7, 2009, the EPA issued a 52-page Endangerment Finding concluding that carbon dioxide and five other greenhouse gases emitted by U.S. industry and vehicles were causing dangerous global warming. According to the EPA, these gases “threaten the public health and welfare of current and future generations.” The agency relied on studies by the Intergovernmental Panel on Climate Change of the United Nations, the U.S. Global Change Research Program and the National Research Council. It is interesting to note the date of the release of the finding – the anniversary of the Japanese attack at Pearl Harbor that started World War II for the United States. The EPA release was also timed to coincide with a gathering of 190 countries in Copenhagen, Denmark trying to negotiate a global emissions control agreement.

As would be expected, both sides of the global warming debate claimed victory in the Supreme Court’s decision. The proponents of EPA regulation of all greenhouse gas emissions seized on the fact that the court did not challenge the regulation of tailpipe emissions, nor did it question the linkage of pollutants being regulated to their contribution to global warming. Rather, the issue appears to be limited to the EPA’s position that its determination to regulate carbon emissions from tailpipes triggered a Prevention of Significant Deterioration (PSD) permit requirement for stationary source emissions. According to Thomas Lorenzen, a former assistant chief of the Department of Justice’s Environment and Natural Resources Division and now a partner with Dorsey & Whitney LLP, the “EPA will press ahead with its new source performance standards for GHG emissions from new power plants and its GHG emissions guidelines from existing power plants.”

Industry representatives were also heartened by the Supreme Court’s decision. One aspect of the impact of the trigger was that the EPA quickly realized that such a requirement would lead to the absurd result of requiring thousands of previously uncovered facilities to obtain a PSD permit. To forestall that problem, the EPA issued its “tailoring” rule, which phased in when and which sources would need the permit. It is also possible that the EPA could have made a different interpretation, such as not requiring a PSD permit for greenhouse gases, that would not have created the so-called absurd results.

We wonder whether the new case, which will be heard in the spring court session, might rival the battle over the Affordable Care Act. If you remember, that battle was quite contentious since it involved a significant slice of the economy and the health care for 330 million Americans. While broad in scope, the case was argued around fairly narrow legal points – federal regulation of interstate commerce and states’ rights issues. In the end, the decision was determined through mental gymnastics by Chief Justice John Roberts, who transformed the penalties in the law into taxes, enabling the law to be judged constitutional under the federal taxing authority. He did so even though the legislative language rigorously avoided the word “tax,” since using it would likely have prevented the law from ever being passed by the Congress. Further complicating the ruling was the court’s determination that federal government threats to withhold all federal Medicaid funds from those states that failed to expand their systems as dictated by the federal government were coercive and illegal. While American businesses complained about the costs imposed on them by the ACA, the Roberts court has been judged to be the most business-friendly court in years, suggesting the EPA may need to be prepared for a set-back in its greenhouse gas regulation. Those hoping the court will rule broadly about carbon emissions and climate change are likely to be disappointed, since the scope of the case limits any discussion, but with courts one can never be sure. In the end, this case could be a game-changer for the coal industry that has been badly beaten up by the Obama administration with its agenda to limit dirty fossil fuels as part of its plan to restructure the nation’s energy business.

Acceleration In Patents Should Help World’s Energy Markets (Top)

The phrase “Necessity is the mother of invention” is widely attributed to translations of Plato’s Republic, and it suggests that difficult situations drive ingenious solutions. So we were intrigued when we read of a new study about the pace of energy technology innovation, especially given the climb in global oil prices over the past decade. The abstract for the paper, Determinants of the Pace of Global Innovation in Energy Technologies, opened with the following statement: “Understanding the factors driving innovation in energy technologies is of critical importance to mitigating climate change and addressing other energy-related global challenges.” So was this study about energy technology or a justification for an improved future for renewables? The basic conclusion of the study is that there has been significant growth in patents granted for energy technologies over the last decade, with the greatest growth in the renewable energy technology sector. That should not be a surprise given the financial incentives being offered to solar and wind energy projects.

The three scientist authors point out that there have been bursts of technological innovation in the past. The energy crisis of the 1970s sparked enthusiasm for renewable and other energy technologies. That enthusiasm ended with the drop in oil prices in the mid-1980s, causing alternative energy technologies to fall out of favor. Those conclusions were reached from noting that the pace of patents granted stagnated between 1980 and 2000. As the new century opened, growing concerns about climate change and energy security generated increased interest in alternative energy technologies, which presumably continues today.

To further examine trends, the researchers developed a global database of 73,000 patents granted in 1970-2009. They worked diligently to guard against the data being distorted by changes in public policies regulating intellectual property or changes to patent quality. The study concluded that there has been a significant increase in patents in energy technologies over the last decade. Almost every energy technology sector experienced increases in patents granted, with renewable energy technologies – solar and wind – growing the fastest. During 2004-2009, on average these two technologies grew annually at 13% and 19%, respectively, compared to an 11.9% per year increase in total energy technologies.
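To put those annual rates in perspective, the short sketch below compounds each of them over the period. It is only an illustration: we assume 2004-2009 represents five years of compounding and that the reported averages can be treated as constant annual rates, neither of which is stated in the study summary. The function name is our own.

```python
def cumulative_growth(annual_rate, years):
    """Total fractional growth after compounding annual_rate for the given years."""
    return (1 + annual_rate) ** years - 1

# Average annual growth rates quoted in the text for 2004-2009.
rates = {
    "solar": 0.13,
    "wind": 0.19,
    "all energy technologies": 0.119,
}

# Assumption: 2004-2009 is treated as five years of compounding.
years = 5

for name, rate in rates.items():
    print(f"{name}: {cumulative_growth(rate, years):.0%} cumulative growth")
```

Under those assumptions, a gap of a few percentage points in annual growth becomes a much wider gap in the total number of patents granted per year by the end of the period.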

The World Intellectual Property Organization reports that the worldwide figure for energy patent growth was 4.6% per year for 2001-2005, which is comparable to or surpasses the growth rate in patent grants for high-tech sectors such as semiconductors (+4.9%) and digital communications (+3.0%). The Japan Patent Office data showed that the country is number one in total cumulative patents filed for all energy technologies apart from coal, hydroelectric, biofuels and natural gas. The European Patent Office data showed that there has been a downturn in fossil fuel technology patents granted over the last decade, particularly for coal. Over the last decade, China has granted more energy patents per year than the European Patent Office, and its patent grants are growing at a faster pace than those of any other nation. There are more coal technology patents in China than in any other nation, and China is second behind Japan in cumulative wind patents.

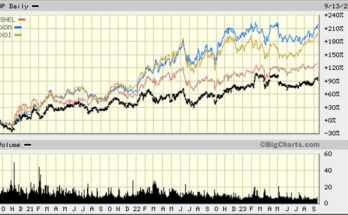

Exhibit 11. Renewable Energy Patents Soar

Source: PLoS ONE

While we were not totally surprised by the conclusions of the study – it was oriented to show how well renewables are doing – we wondered how the authors determined the significance of each patent included in their database. After we read about the study, which covers 100 countries, we did a patent search on Schlumberger (SLB-NYSE) patents granted in the U.S. since we know it has the largest research and development budget in the oilfield service sector. Between May 1st and October 10th of 2010, Schlumberger was granted 151 patents, or 27.45 per month. At that rate, the company would have been granted 329 patents in 2010, or more than the total number of U.S. fossil fuel patents issued in 2009. We know that since 2010, Schlumberger has ramped up its R&D budget and the number of patents it has been awarded has increased. At the same time, wind and solar manufacturers have been struggling in the past few years, so we wonder whether the pace of patent grants for those renewable technologies has slowed.
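For readers who want to check the Schlumberger arithmetic, a minimal sketch follows. The one assumption is the length of the observation period: we treat May 1st through October 10th as 5.5 months, which is the figure implied by the 27.45 per month rate quoted above. The variable names are our own.

```python
# Annualize a partial-year patent count.
patents_granted = 151   # from the text: grants between May 1 and October 10, 2010
months_observed = 5.5   # assumption: period length implied by 27.45 patents per month

monthly_rate = patents_granted / months_observed
annual_estimate = monthly_rate * 12

print(f"{monthly_rate:.2f} per month, about {annual_estimate:.0f} per year")
```

A simple run-rate projection like this assumes patents are granted at an even pace through the year, so the annual figure should be read as a rough estimate rather than an actual count.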

We were surprised by the funding graphs at the bottom of Exhibit 11 that show much more money in 2009 dollars being spent in the 1970s than at any point since. It is entirely possible the patents granted then and the dollars spent on those patents were more significant in improving America’s and the world’s energy business than patents being issued now. Unfortunately, we can’t know, nor do we believe the researchers can know, the significance of any one of the 73,000 individual patents granted during the study period. The study supports the conclusion that technology will play an important role in our future energy mix.

Contact PPHB:

1900 St. James Place, Suite 125

Houston, Texas 77056

Main Tel: (713) 621-8100

Main Fax: (713) 621-8166

www.pphb.com

Parks Paton Hoepfl & Brown is an independent investment banking firm providing financial advisory services, including merger and acquisition and capital raising assistance, exclusively to clients in the energy service industry.